Inspection of baked goods covers several quality attributes, including consistency in color, shape, texture and flavor. Traditionally, quality inspection in the baking industry relied on subjective assessment by trained personnel, with parameters such as baking time and temperature settings serving as production guides. The current industry trend is to employ objective instruments, such as machine vision, to provide feedback on quality parameters, and computer vision systems are increasingly used to inspect different baked food products. Computer-aided vision offers several advantages over manual visual inspection in terms of both performance and economy[1]. Yeh et al.[2] used the RGB color space to monitor changes in the color of biscuit samples during baking. A feed-forward neural network trained with the back-propagation algorithm was used to identify the biscuits, and the proposed algorithm was found to be 40% better in recognition accuracy than manual visual inspection. An automatic classification system based on multivariate discriminant analysis was developed to recognize muffins from color images and classify them into three grades; its recognition accuracy compared favorably with that of human inspectors[3]. Paquet-Durand et al.[4] developed an identification system for bread rolls using a modified Viola-Jones algorithm, in which the current state of the baking process was represented by the color and size of the bread rolls; the system was found to be 94.4% accurate. Moreover, a number of color and surface texture features have been developed and tested for sensitivity to appearance changes and material composition variations in baked products such as bread and biscuits[5-9]. Other recently developed food inspection models include those of Chawanji et al.[10], Hirte et al.[11], Kotwaliwale et al.[12], Laverse et al.[13-14] and Pinzer et al.[15].
Despite ongoing advances in the use of color images for automatic quality inspection, very few studies have addressed the quality inspection of bakery products using image processing techniques inspired by human vision.

Trinh et al.[16] discussed and illustrated the challenges faced in such applications. The processing parameters and the quality of the dough directly influence the quality of baked products (Turbin-Orger et al.[17]; Wang et al.[18]). Support vector machine (SVM) classifiers have been used in both binary and multi-class form for the quality inspection of baked goods, for example to assign pizza toppings and sauce in a color image to their respective classes, and the results indicated that SVM achieved better recognition and classification accuracy than several other classification and regression methods. Experiments by Sun[19] and Du[20] showed that the use of SVM improved the classification accuracy significantly. Based on these studies, as well as a few other studies on the application of computer-aided vision to baked goods quality inspection, SVM classifiers offer several advantages: they are simple to implement, fast and cost-effective. Although SVM classifiers were originally intended for binary classification problems, they have also been extended to multi-class problems. Popular implementations are one versus all (OVA)[21], one versus one (OVO)[22], directed acyclic graph (DAG) SVM[23] and binary tree SVM[24].

This paper proposes an automatic quality inspection system for bakery products that recognizes and classifies biscuit shape, texture and color. Biscuit shape features are extracted using the HMAX model for computer vision (T. Serre[25]; J. Zhang[26]), biscuit color features are extracted using an RGB Three-Color-Opponent-Channel (TCOC) color descriptor inspired by the opponent cells in the visual cortex, and classification is performed by a support vector machine. We thus propose a biologically plausible processing architecture that detects, extracts and processes biscuit shape and color features simultaneously and performs recognition and classification invariantly. The objective of this study is to determine whether a biologically inspired computer vision algorithm can replace existing computer vision algorithms for the automatic quality inspection of baked goods.

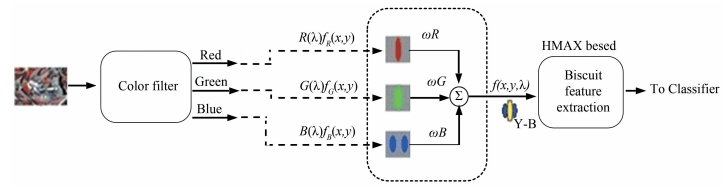

2 Material & Methods

In this paper, we propose an automated quality inspection system for the recognition and classification of biscuit shape and color features. The proposed method can be divided into two main parts: a feature extraction module and a feature classification module. The feature extraction module comprises a popular computer vision model, known as the HMAX model, integrated with the three-opponent-color-channel color descriptor. The feature classification module is based on a support vector machine (SVM). An overview of the proposed model is given as a block diagram in Fig. 1.

Figure 1 Biscuit's surface, texture and boundary/shape feature extraction from a biscuit sample image

Our model takes inspiration from the well-known computer vision model HMAX[25-26], developed by T. Serre et al. HMAX was found to be efficient in recognizing different shapes and patterns invariantly to the position, size and orientation of the object of interest, but it did not extract object color features. Subsequent studies incorporated color features into the original HMAX model and reported a significant improvement in performance on different color image datasets[27-29]. In our application, we want to extract biscuit shape and biscuit color features simultaneously. Shape features are important for recognizing and classifying the biscuit type, whereas color features are important for measuring the baking level of the biscuits: the higher the baking level, the darker the color of the biscuits, as shown in Fig. 4(a). In this paper we propose a color HMAX variant using an opponent-color-channel color descriptor (M. Riesenhuber[30]), which is inspired by color information processing in the primary visual cortex.

2.1 Biscuit Color Descriptor (RGB Color Descriptor)

We propose a biscuit surface color feature extraction algorithm that simulates the single-opponent and double-opponent neuronal function (see Zhang et al.[31], Johnson et al.[32], Solomon et al.[33], Carandini[34], and Heeger[35]) of the human visual system (see Fig. 2). Based on neurological evidence, the classical opponent theories of color vision (P. Lennie[36]) emphasize two main equiluminant chromatic axes: Red-Green and Yellow-Blue. We also consider a luminance-based White-Black channel, obtained by combining the Red, Green and Blue channels. The biscuit sample RGB image is first processed by color filters to separate the Red, Green and Blue channel intensities. A Gabor filter is then used to combine the individual Red, Green and Blue channels into center and surround opponent color channels, yielding the three opponent color channels described briefly below. The model response is evaluated as the dot product between the function f(x, y, λ) and an input image patch i(x, y, λ).

Figure 2 Spatial-chromatic opponent channel image descriptors

| $ f\left( x,y,\lambda \right) = \omega_R R\left( \lambda \right) f_R\left( x,y \right) + \omega_G G\left( \lambda \right) f_G\left( x,y \right) + \omega_B B\left( \lambda \right) f_B\left( x,y \right) $ | (1) |

where R(λ), G(λ) and B(λ) denote the spectral response functions (SRF) of the respective color components, fR(x, y), fG(x, y) and fB(x, y) denote the sensitivity distributions of the red, green and blue color components, respectively (details in Fig. 2), and the ω coefficients weight the contributions of the respective components. All the parameters used in the implementation of the single-opponent and double-opponent color channels are constrained by neuroscience data (see Johnson et al.[32], Solomon et al.[33] for details).
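As a rough illustration of how the response in Eq.(1) could be evaluated, the following minimal sketch computes the dot product between a weighted three-channel filter and an image patch. It assumes the patch has already been separated into R, G and B planes, so the spectral response functions are absorbed into the channel separation. The function name `opponent_response`, the flat spatial profile and the red-green weight values are hypothetical choices for illustration and are not taken from the paper.

```python
import math

def opponent_response(patch, spatial, weights):
    """Dot product between a weighted RGB filter (cf. Eq. (1)) and an image patch.

    patch   -- dict with keys "R", "G", "B", each a 2-D list of intensities
    spatial -- dict with keys "R", "G", "B", each a 2-D list f_c(x, y)
    weights -- dict with keys "R", "G", "B" holding the channel weight omega_c
    """
    total = 0.0
    for c in ("R", "G", "B"):
        for patch_row, filt_row in zip(patch[c], spatial[c]):
            for pixel, coeff in zip(patch_row, filt_row):
                total += weights[c] * coeff * pixel
    return total

# Hypothetical red-green single-opponent weights (illustrative values only)
RG_WEIGHTS = {"R": 1 / math.sqrt(2), "G": -1 / math.sqrt(2), "B": 0.0}

# Uniform 2x2 spatial profile for every channel (an assumption for the demo)
flat = [[1.0, 1.0], [1.0, 1.0]]
spatial = {"R": flat, "G": flat, "B": flat}

gray_patch = {c: [[0.5, 0.5], [0.5, 0.5]] for c in "RGB"}
red_patch = {
    "R": [[1.0, 1.0], [1.0, 1.0]],
    "G": [[0.0, 0.0], [0.0, 0.0]],
    "B": [[0.0, 0.0], [0.0, 0.0]],
}

gray_response = opponent_response(gray_patch, spatial, RG_WEIGHTS)
red_response = opponent_response(red_patch, spatial, RG_WEIGHTS)
```

With these illustrative weights, an achromatic patch excites the red and green terms equally and the response cancels, whereas a purely red patch gives a positive response. This is the behavior expected of a red-green opponent unit.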

2.2 HMAX Based Biscuit Feature Descriptor

We propose a feature descriptor for biscuit shape and pattern based on the HMAX model. The HMAX architecture is a hierarchical feed-forward network with four layers, S1, C1, S2 and C2, which imitate the hierarchical layers of the visual pathway from the primary visual cortex (V1) to the ITC (infero-temporal cortex) and PFC (pre-frontal cortex). The four layers alternate between a convolution operation in one layer and MAX pooling in the layer that follows. Each convolution layer yields a set of feature maps, and the following MAX pooling layer makes these features robust to geometric variations such as changes in scale, rotation and position. Analogous to the functional hierarchy of the layers in the biological visual system, the lower layers S1 and C1 are selective to different orientations of the features, and the higher layers S2 and C2 are selective to a particular object or pattern. The input to the HMAX based biscuit feature descriptor (S1 layer) is the biscuit sample image, and the output of the final layer C2 is the invariant feature representation of that image. The functional hierarchy of the layers is discussed in detail elsewhere (see T. Serre et al.[25]). In our model, we used the standard HMAX model (T. Serre[25]) with 1 000 features.
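The role of the MAX pooling layers can be illustrated with a minimal sketch. It is a simplification under stated assumptions: it pools over non-overlapping square windows of a single feature map, whereas the standard HMAX C1 layer also pools across neighboring scales. The function name `max_pool` and the toy feature maps are hypothetical.

```python
def max_pool(feature_map, size):
    """Local MAX pooling over non-overlapping size x size windows."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, size):
        pooled_row = []
        for c in range(0, cols - size + 1, size):
            window = [feature_map[r + dr][c + dc]
                      for dr in range(size) for dc in range(size)]
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled

# Toy S1-like response map with two strong responses
s1 = [[0, 0, 0, 0],
      [0, 9, 0, 0],
      [0, 0, 0, 0],
      [0, 0, 0, 3]]

# The same responses shifted by one pixel inside their pooling windows
s1_shifted = [[9, 0, 0, 0],
              [0, 0, 0, 0],
              [0, 0, 3, 0],
              [0, 0, 0, 0]]

c1 = max_pool(s1, 2)
c1_shifted = max_pool(s1_shifted, 2)
```

Both maps pool to the same output, which shows in miniature how taking a local maximum gives tolerance to small position changes.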

2.3 Integration of Color Descriptor with HMAX Model

We integrated the color descriptor with the HMAX based feature descriptor in a late fusion manner (see Fig. 3). Here we describe a biologically inspired processing architecture for the simultaneous computation of biscuit shape and texture. For a biscuit sample RGB image, the color information is separated into individual Red, Green and Blue images, which are then coupled into three opponent color channels: Red with Green, Blue with Yellow, and Black with White. This process is illustrated in Fig. 2 for the Blue-Yellow channel and is repeated for the Red-Green and Black-White channels. The selection of these particular colors is inspired by the opponent color theory of the primary visual cortex. The resultant feature matrix is then fed to the HMAX based feature extraction module for biscuit shape feature extraction. In our implementation, the original S1/C1 unit responses of standard HMAX at 16 scales now also include 6 single-opponent channels at 2 orientations (E.H. Land[37]) and 3 double-opponent channels at 4 orientations, and a dictionary of 1 000 features was created for each input image at 16 scales and 4 orientations.

Figure 3 Illustration of the integration of the biscuit color and feature descriptors, along with the classifier, into the model

2.4 Support Vector Machine (SVM) Classifier

An SVM (support vector machine) classifier is constructed to train and test the developed algorithm on sample images of biscuits and to measure the classification accuracy of the proposed quality inspection system. For the classification of the 8 quality groups with respect to biscuit color and the 5 quality groups with respect to biscuit shape, several multi-class SVM classifiers were constructed, namely one versus all (OVA), one versus one (OVO) and directed acyclic graph (DAG). The quality tests for baking level, shape, surface color and texture are performed individually; therefore, the SVM is trained and tested separately for each of these quality tests.
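The difference between the OVO and DAG decision schemes can be sketched as follows. This is a minimal illustration, not the implementation used in the study: the trained binary SVMs are replaced by a hypothetical stand-in function `nearest_group` that prefers the baking group closer to a scalar feature value, and the names `ovo_predict` and `dag_predict` are assumptions.

```python
from itertools import combinations

def ovo_predict(classes, pairwise_winner, x):
    """One versus one: every pair of classes votes; the most-voted class wins."""
    votes = {c: 0 for c in classes}
    for a, b in combinations(classes, 2):
        votes[pairwise_winner(a, b, x)] += 1
    return max(classes, key=lambda c: votes[c])

def dag_predict(classes, pairwise_winner, x):
    """DAG-style evaluation: each binary test eliminates one candidate class."""
    remaining = list(classes)
    evaluations = 0
    while len(remaining) > 1:
        a, b = remaining[0], remaining[-1]
        winner = pairwise_winner(a, b, x)
        remaining.remove(b if winner == a else a)
        evaluations += 1
    return remaining[0], evaluations

def nearest_group(a, b, x):
    """Hypothetical stand-in for a trained binary SVM between groups a and b."""
    return a if abs(a - x) <= abs(b - x) else b

groups = list(range(1, 9))                      # eight baking-level groups
n_pairs = len(list(combinations(groups, 2)))    # binary classifiers needed by OVO
ovo_label = ovo_predict(groups, nearest_group, 5.2)
dag_label, dag_evaluations = dag_predict(groups, nearest_group, 5.2)
```

For eight classes, OVO consults all 28 pairwise classifiers at test time, while the DAG scheme trains the same 28 classifiers but evaluates only 7 of them per sample.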

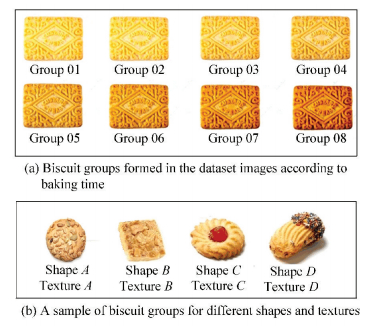

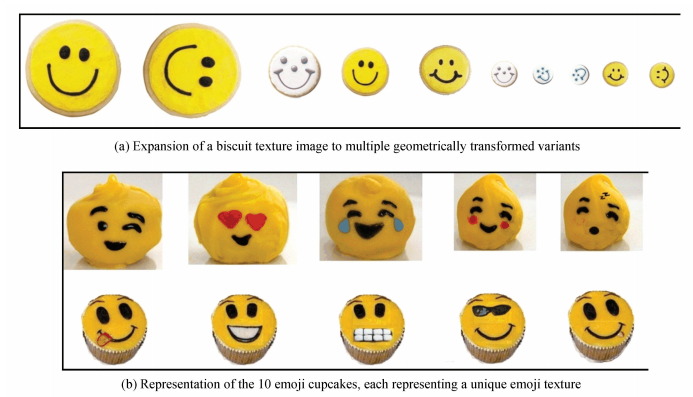

3 Experiment

Since biscuits are the most common type of baked product, we used images of biscuit samples to test the proposed model for the quality inspection of baked products. In this study, we performed three independent experiments to test the recognition and classification accuracy of the proposed algorithm for three properties of biscuits, namely shape, color and surface texture. A separate dataset of biscuit samples was formed for each of the three experiments. Fig. 4 and Fig. 5 illustrate how the datasets were created, with different class categories for biscuit color, biscuit shape and biscuit surface texture.

Figure 4 Biscuit groups at different baking times and samples with different shapes and textures

Figure 5 Collection of dataset images for different patterns (different emojis in this case)

Eight groups were formed using biscuits baked for different time periods at the same temperature (200 ℃). The degree of baking is inversely related to the brightness of the biscuit color (Nashat and Abdullah[38]). The groups therefore reflect different degrees of baking, with groups 01 through 03 representing under-baked biscuits, groups 04 and 05 moderately baked biscuits, and groups 06 through 08 over-baked biscuits. Fig. 4(a) shows a group of biscuit samples serving as a reference for the different baking times.

In this quality inspection system, three different experiments were performed. The first quantitatively assessed the accuracy of the developed algorithm in recognizing and categorizing the shape of biscuits, for example distinguishing round, square and rectangular biscuits (see Fig. 4(b)). The second assessed its accuracy in recognizing and categorizing the color of biscuits (see Fig. 4(a)), and the third assessed its accuracy in recognizing differently textured biscuits (see Fig. 5). In the color inspection experiment, a total of 240 images of biscuit samples were randomly divided into a training set and a testing set of 120 images each, so that each of the eight class groups comprised 15 training images and 15 testing images. The training images were used to train the machine vision system, and the testing images were then used to measure the accuracy of the developed algorithm. First, the color features of the biscuits were extracted from the training images using the feature descriptor described above. Finally, classification was performed using three different multi-class SVM classifiers, which assigned each biscuit sample image to its respective group.
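The random per-class division described above can be sketched as follows. This is a minimal illustration under stated assumptions: the file names, the helper `split_per_class` and the fixed seed are hypothetical and only serve to make the example reproducible.

```python
import random

def split_per_class(images_by_class, n_train, seed=0):
    """Randomly split each class into n_train training images and the rest for testing."""
    rng = random.Random(seed)
    train, test = [], []
    for label, images in images_by_class.items():
        shuffled = images[:]          # copy so the input lists stay untouched
        rng.shuffle(shuffled)
        train += [(img, label) for img in shuffled[:n_train]]
        test += [(img, label) for img in shuffled[n_train:]]
    return train, test

# Hypothetical file names: 8 baking groups x 30 images = 240 images
dataset = {g: [f"group{g:02d}_{i:02d}.jpg" for i in range(30)] for g in range(1, 9)}
train_set, test_set = split_per_class(dataset, n_train=15)
```

Splitting within each class, rather than over the pooled 240 images, guarantees the 15/15 balance per group stated in the text.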

The impact of changes in illumination on the developed quality inspection algorithm was also examined by repeating the experiment with the light intensity varied by 11%. The results are presented in the following section. A comparison of the accuracies in Tables 1 and 2 with those in Tables 3 and 4 shows that the classification accuracy is only minimally affected by changes in illumination. This experiment therefore assessed the consistency, accuracy and repeatability of the system.

Table 1 Biscuit shape recognition and classification accuracy

Table 2 Biscuit baking level (color) recognition and classification accuracy (*GS: Gray Scale)

Table 3 Biscuit shape recognition and classification accuracy after 11% illumination change

Table 4 Baking level (color) recognition and classification accuracy after 11% illumination change

Recognition and classification performance was evaluated using two statistical criteria, classification accuracy and classification error, defined as follows:

| $ \text{Classification accuracy} = \frac{\text{number of correct classifications}}{\text{total number of test images}} $ | (2) |

| $ \text{Classification error} = \frac{\text{number of incorrect classifications}}{\text{total number of test images}} $ | (3) |
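Eq.(2) and Eq.(3) translate directly into code. The following minimal sketch uses a hypothetical helper name, `classification_scores`, and toy label lists.

```python
def classification_scores(predicted, actual):
    """Return (accuracy, error) as defined in Eq. (2) and Eq. (3)."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    total = len(actual)
    return correct / total, (total - correct) / total

# Toy example: four test images, one of them misclassified
accuracy, error = classification_scores([1, 2, 2, 4], [1, 2, 3, 4])
```

Because every test image is either correctly or incorrectly classified, the two quantities always sum to one.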

Biscuit shape and color features are extracted using the proposed biologically inspired algorithm, which integrates the RGB color descriptor with the HMAX shape descriptor, and are then automatically recognized and assigned to their respective classes using a linear-kernel support vector machine (SVM). The automatic recognition and classification accuracy is calculated and compared with that of the original model operating on gray-scale images (see Tables 1 and 2).

All images in the dataset were low-resolution JPEG images of up to 250×250 pixels. Classification accuracy was calculated as the number of correct classifications over the total number of testing images, and classification error as the number of incorrect classifications over the total number of testing images (see Eq.(2) and Eq.(3)).

We created a dataset whose class categories represent biscuit shapes such as round, square and rectangular. Each class category contained 30 images, divided into 15 training and 15 testing images. We computed the C2 output (color HMAX features) for all training and testing images and used them to train and test an SVM. As can be seen from Table 1, with 5 or more training images per class the shape recognition accuracy exceeds 90%. Therefore, a comparatively high biscuit shape recognition and classification accuracy is achieved with only a few training images.

We created another dataset whose class categories represent biscuit colors, with different shades of brown for under-baked, moderately baked and over-baked biscuits. Each class category contained 30 images, divided into 15 training and 15 testing images. We computed the C2 output (color HMAX features) for all training and testing images and used them to train and test an SVM. As can be seen from Table 2, with 10 or more training images per class the color recognition accuracy reaches 100%. Therefore, a high recognition and classification accuracy for the biscuit colors corresponding to different baking durations is achieved with only a few training images per class.

Tables 3 and 4 summarize the classification results after an 11% variation in lighting intensity. The developed algorithm provides successful recognition and accurate classification invariantly to the illumination changes. Hence, we conclude that the developed algorithm improves the stability and invariance of the automatic recognition method based on computer vision.

The results in the tables above show that the best recognition accuracy is achieved when the proposed algorithm is combined with the one versus one SVM multi-class classifier or the DAG-SVM classifier, invariantly to changes in illumination. A comparison of Tables 1, 2, 3 and 4 shows that the classification accuracy is not affected by the change in illumination intensity. The model recognizes biscuit shape quality with 95% accuracy and biscuit baking level with 100% accuracy. The recognition accuracy of the proposed method for the simultaneous classification of biscuit shape and color is 97.5% with at least 10 training images per class category (see Table 5). In this experiment, 10 class categories with different emoji cupcake shapes/textures were used (see Fig. 5). A total of 300 sample images were divided equally among the 10 classes, so that each class consisted of 30 samples, of which 20 were selected randomly for training and the remaining 10 were used for testing; multiple experiments were conducted with different numbers of randomly selected training samples (see the first column of Table 5).

Table 5 Recognition and classification accuracy of Color HMAX for biscuit shape and color, simultaneously

It is noteworthy that, although Table 5 is based on 300 original samples in 10 classes (30 samples per class, of which 20 are training samples and 10 are testing samples), we also expanded the samples artificially by applying geometric transformations, namely changes in the scale, orientation and position of the biscuits in the image. As illustrated in Fig. 5(a), multiple geometrically transformed variants of each biscuit image were created for the training and testing datasets in order to develop and test the 'invariant' recognition capability of the developed biscuit recognition and classification system. In our study, we used 3 scales (0.5x, 1x and 2x), 3 orientations (-90°, 0° and +90°) and 4 position changes. Each biscuit sample was thus expanded into 10 geometric variants (3 scales + 3 orientations + 4 positions), increasing the number of images in the dataset tenfold. Table 6 shows that the proposed algorithm recognizes different patterns efficiently with different multi-class variants of SVM, but the best results are achieved using DAG-SVM, consistent with the findings of the preceding tables.
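The counting behind the tenfold expansion can be made explicit with a short sketch. The scale factors and rotation angles are those given in the text; the pixel offsets in `SHIFTS` and the helper `transform_variants` are hypothetical, since the four position changes are not specified. The sketch only enumerates the transformations and does not perform any image processing.

```python
SCALES = (0.5, 1.0, 2.0)                           # scale factors from the text
ROTATIONS_DEG = (-90, 0, 90)                       # orientations from the text
SHIFTS = ((-10, 0), (10, 0), (0, -10), (0, 10))    # hypothetical pixel offsets

def transform_variants():
    """List the geometric variants generated for one biscuit image.

    Each transformation is applied on its own, not in combination,
    which gives 3 + 3 + 4 = 10 variants per image.
    """
    variants = [("scale", s) for s in SCALES]
    variants += [("rotate", r) for r in ROTATIONS_DEG]
    variants += [("shift", d) for d in SHIFTS]
    return variants

variants_per_image = len(transform_variants())
expanded_total = 300 * variants_per_image          # 300 original sample images
```

Applying the transformations separately gives a sum of 10 variants per image; combining them would instead give a product of 3 × 3 × 4 = 36.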

Table 6 Different pattern recognition and classification accuracy

Table 7 compares the recognition accuracy of the developed algorithm with that of other methods for the same application (Abdullah et al.[3], Paquet-Durand et al.[4]), which were discussed in the introduction. The comparison suggests that biologically plausible computer vision models have the potential to automate various quality inspection tasks for bakery products.

Table 7 Comparison of model accuracy between the proposed method and other models

5 Conclusions

Quality evaluation of bakery products plays a major role in the food industry. We have described a biologically inspired model for biscuit shape, color and texture feature extraction based on known properties of the primate visual cortex, which is capable of recognizing and classifying biscuit shape and color invariantly from color images. Once the algorithm is trained on biscuit shape, texture and color features, it provides an automatic quality inspection of biscuits that allows bakery products to be graded automatically and efficiently. We tested the algorithm on biscuit shapes, patterns and colors, and found that the Color HMAX model recognized them with accuracies of 95%, 98% and 100%, respectively. The Color HMAX model was found to be 97.5% accurate in recognizing biscuit shape and color simultaneously. The results of this study indicate that a biologically inspired computer vision model performs accurately and efficiently in the quality inspection of bakery products. The one versus one SVM and the directed acyclic graph SVM achieved the highest classification accuracy. The proposed method is also consistently stable and invariant. We therefore believe that computer vision models inspired by the mechanisms of the visual cortex can be used for the quality inspection of biscuits and other bakery products.

[1] Nashat S, Abdullah M Z. Quality Evaluation of Bakery Products. In: Computer Vision Technology for Food Quality Evaluation (Chapter 21). 2nd Ed. New York: Academic Press, 2008. 481-522. DOI: 10.1016/b978-0-12-802232-0.00021-9.

[2] Yeh J C H, Hamey L G C, Westcott T, et al. Color bake inspection system using hybrid artificial neural networks. Proceedings of the IEEE International Conference on Neural Networks. Piscataway: IEEE, 1995. 37-42. DOI: 10.1109/icnn.1995.487873.

[3] Abdullah M Z, Abdul-Aziz S, Dos-Mohamed A M. Machine vision system for online inspection of traditionally baked Malaysian muffins. Journal of Food Science Technology, 2002, 39(4): 359-366.

[4] Paquet-Durand O, Solle D, Schirmer M, et al. Monitoring baking processes of bread rolls by digital image analysis. Journal of Food Engineering, 2012, 111(2): 425-431. DOI: 10.1016/j.jfoodeng.2012.01.024.

[5] Mohd Jusoh Y M, Chin N L, Yusof Y A, et al. Bread crust thickness measurement using digital imaging and Lab color system. Journal of Food Engineering, 2009, 94(3/4): 366-371. DOI: 10.1016/j.jfoodeng.2009.04.002.

[6] Purlis E, Salvadori V O. Modeling the browning of bread during baking. Food Research International, 2009, 42(7): 865-870. DOI: 10.1016/j.foodres.2009.03.007.

[7] Nashat S, Abdullah A, Abdullah M Z. Machine vision for crack inspection of biscuits featuring pyramid detection scheme. Journal of Food Engineering, 2014, 120: 233-247. DOI: 10.1016/j.jfoodeng.2013.08.006.

[8] Davies E R. Inspection of baked products. In: Series in Machine Perception and Artificial Intelligence: Volume 37, Image Processing for the Food Industry. Singapore: World Scientific Publishing Co., 2000. 141-156.

[9] Senni L, Ricci M, Palazzi A, et al. On-line automatic detection of foreign bodies in biscuits by infrared thermography and image processing. Journal of Food Engineering, 2014, 128: 146-156. DOI: 10.1016/j.jfoodeng.2013.12.016.

[10] Chawanji A S, Baldwin A J, Brisson G, et al. Use of X-ray micro tomography to study the microstructure of loose-packed and compacted milk powders. Journal of Microscopy, 2012, 248: 49-57. DOI: 10.1111/j.1365-2818.2012.03649.x.

[11] Hirte A, Primo-Martín C, Meinders M B J, et al. Does crumb morphology affect water migration and crispness retention in crispy breads?. Journal of Cereal Science, 2012, 56(2): 289-295. DOI: 10.1016/j.jcs.2012.05.014.

[12] Kotwaliwale N, Singh K, Kalne A, et al. X-ray imaging methods for internal quality evaluation of agricultural produce. Journal of Food Science and Technology, 2014, 51(1): 1-15. DOI: 10.1007/s13197-011-0485-y.

[13] Laverse J, Mastromatteo M, Frisullo P, et al. X-ray microtomography to study the microstructure of cream cheese-type products. Journal of Dairy Science, 2011, 94(1): 43-50. DOI: 10.3168/jds.2010-3524.

[14] Laverse J, Mastromatteo M, Frisullo P, et al. X-ray microtomography to study the microstructure of mayonnaise. Journal of Food Engineering, 2012, 108(1): 225-231. DOI: 10.1016/j.jfoodeng.2011.07.037.

[15] Pinzer B R, Medebach A, Limbach H, et al. 3D characterization of three-phase systems using X-ray tomography: tracking the microstructural evolution in ice cream. Soft Matter, 2012(17): 4584-4594. DOI: 10.1039/c2sm00034b.

[16] Trinh L, Lowe T, Campbell G M, et al. Bread dough aeration dynamics during pressure step-change mixing: Studies by X-ray tomography, dough density and population balance modelling. Chemical Engineering Science, 2013, 101: 470-477. DOI: 10.1016/j.ces.2013.06.053.

[17] Turbin-Orger A, Babin P, Boller E, et al. Growth and setting of gas bubbles in a viscoelastic matrix imaged by X-ray microtomography: the evolution of cellular structures in fermenting wheat flour dough. Soft Matter, 2015(17): 3373-3384. DOI: 10.1039/c5sm00100e.

[18] Wang S, Austin P, Bell S. It's a maze: the pore structure of bread crumbs. Journal of Cereal Science, 2011, 54(2): 203-210. DOI: 10.1016/j.jcs.2011.05.004.

[19] Sun D-W, Brosnan T. Pizza quality evaluation using computer vision. Journal of Food Engineering, 2003, 57(1): 81-95. DOI: 10.1016/s0260-8774(02)00276-5.

[20] Du C-J, Sun D-W. Multi-classification of pizza using computer vision and support vector machine. Journal of Food Engineering, 2008, 86(2): 234-242. DOI: 10.1016/j.jfoodeng.2007.10.001.

[21] Vapnik V. Statistical Learning Theory. New York: John Wiley and Sons, 1998.

[22] Kreßel U. Pairwise classification and support vector machines. In: Advances in Kernel Methods: Support Vector Learning. Cambridge, MA: MIT Press, 1999. 255-268.

[23] Platt J C, Cristianini N, Shawe-Taylor J. Large Margin DAGs for Multi-class Classification. In: Advances in Neural Information Processing Systems. Cambridge: MIT Press, 1999. 547-553.

[24] Wang A, Liu J, Wang H, et al. A novel fault diagnosis of analog circuit algorithm based on incomplete wavelet packet transform and improved balanced binary-Tree SVMs. International Conference on Life System Modeling and Simulation. Berlin: Springer, 2007. 482-493. DOI: 10.1007/978-3-540-74769-7_52.

[25] Serre T, Wolf L, Bileschi S, et al. Robust object recognition with cortex-like mechanisms. IEEE Transactions on Pattern Analysis & Machine Intelligence, 2007, 29(3): 411-426. DOI: 10.1109/tpami.2007.56.

[26] Zhang J, Xie Z, Gao J, et al. Beyond shape: incorporating color invariance into a biologically inspired feed-forward model of category recognition. Proceedings of the 7th Indian Conference on Computer Vision, Graphics & Image Processing. New York: ACM, 2010. 85-92. DOI: 10.1145/1924559.1924571.

[27] van de Weijer J, Schmid C. Coloring local feature extraction. In: Leonardis A, Bischof H, Pinz A. Computer Vision-ECCV 2006. ECCV 2006. Lecture Notes in Computer Science. Berlin: Springer, 2006. 334-348. DOI: 10.1007/11744047_26.

[28] Everingham M, van Gool L, Williams C K I, et al. The PASCAL visual object classes challenge. International Journal of Computer Vision, 2010, 88(2): 303-338. DOI: 10.1007/s11263-009-0275-4.

[29] Nilsback M E, Zisserman A. A visual vocabulary for flower classification. IEEE Conference on Computer Vision and Pattern Recognition. New York: IEEE Computer Society, 2006. 1447-1454. DOI: 10.1109/cvpr.2006.42.

[30] Riesenhuber M, Poggio T. Model of object recognition. Nature Neuroscience, 2000, 3: 1199-1204. DOI: 10.1038/81479.

[31] Zhang J, Barhomi Y, Serre T. A new biologically inspired color image descriptor. Proceedings of the 12th European Conference on Computer Vision. Berlin: Springer, 2012. 312-324. DOI: 10.1007/978-3-642-33715-4_23.

[32] Johnson E N, Hawken M K, Shapley R. The orientation selectivity of the color-responsive neurons in the macaque V1. The Journal of Neuroscience, 2008, 28(32): 8096-8106. DOI: 10.1523/jneurosci.1404-08.2008.

[33] Solomon S G, Lennie P. Chromatic gain controls in visual cortical neurons. The Journal of Neuroscience, 2005, 25(19): 4779-4792. DOI: 10.1523/jneurosci.5316-04.2005.

[34] Carandini M, Heeger D J, Movshon J A. Linearity and normalization in simple cells of the macaque primary visual cortex. The Journal of Neuroscience, 1997, 17(21): 8621-8644.

[35] Heeger D J. Normalization of cell responses in cat striate cortex. Visual Neuroscience, 1992, 9(2): 181-197. DOI: 10.1017/s0952523800009640.

[36] Lennie P, Krauskopf J, Sclar G. Chromatic mechanism in the striate cortex of macaque. The Journal of Neuroscience, 1990, 10(2): 649-669.

[37] Land E H, McCann J J. Lightness and retinex theory. Journal of the Optical Society of America, 1971, 61(1): 1-11. DOI: 10.1364/josa.61.000001.

[38] Nashat S, Abdullah M Z. Multi-class color inspection of baked foods featuring support vector machine and Wilk's λ analysis. Journal of Food Engineering, 2010, 101(4): 370-380. DOI: 10.1016/j.jfoodeng.2010.07.022.

2017, Vol. 24
