2. National Engineering Laboratory for Mobile Network Technologies, Beijing University of Posts and Telecommunications, Beijing 100876, China

With the convergence of the physical and virtual world, billions of Internet of Things (IoT) devices will be connected to share the collected information for monitoring and even reconstructing the physical world in cyber space. Meanwhile, since the development of 5G technology is moving to its final phase and the commercial deployment of 5G networks begins, it is predicted that trillions of IoT devices would be connected in the next decade[1], which enables the unprecedented proliferation of new IoT services. These emerging IoT services require end-to-end co-design of communication, control, computing, and consciousness functionalities[2-3]. However, such requirements have been largely overlooked in 5G, which is marketed to support basic IoT services, thereby making it debatable whether the current system design of 5G can support the future smart IoT applications.

Building on 5G, 6G aims to realize a ubiquitous communication network including human-machine-thing-genie[3-4], which will provide full-dimensional wireless coverage and integrate all functions, including sensing, communication, computing, caching, control, positioning, radar, navigation, and imaging, to support full-vertical applications. Our earlier work[5] proposed Ubiquitous-X, a novel architecture for 6G networks that supports the integration of human-machine-thing-genie communication with the aim of realizing ubiquitous artificial intelligence (AI) services from the network core to end devices. The "X" in "Ubiquitous-X" represents the evolution from traditional mobile networks to a future intelligent digital world that is full of possibilities. To support the paradigm shift from connected IoT devices to connected smart IoT devices, 6G will realize the concept of Ubiquitous-X by exploiting technologies such as AI, edge computing, end-to-end distributed security, and big data analytics[6-7]. With the merging of AI and IoT, the Artificial Intelligence of Things (AIoT) is considered the stepping stone to building the 2030 intelligent information society[8]. By forming highly sustainable, secure, and cost-effective networks, AIoT enables vendors to provide intelligent services for various industry vertical markets.

Currently, AIoT is still in its infancy and relies on single machine intelligence[9]. Unleashing the potential of AIoT services requires the massive deployment of IoT devices in industry applications. Without effective connections among the devices, each device would become an isolated data island, and the collected data could not be fully utilized to support AIoT services[10].

The important issues to be addressed in order to enable large-scale AIoT device deployments over 6G are as follows:

·What would be the new performance challenges posed by the massive deployment of diverse AIoT devices in 6G networks?

·How to realize efficient processing of large-scale data collected by AIoT devices?

·How to enhance the security and privacy of AIoT?

·How to customize the design of 6G network to support massive and intelligent connectivity in AIoT?

This paper aims to answer the above questions, highlighting the main challenges and potential enabling technologies. It is worth noting that efficiently supporting AIoT requires co-design of the enabling technologies, so that the benefit of each technology can be fully exploited through their convergence.

2 AIoT under 6G Ubiquitous-X

2.1 6G Ubiquitous-X

The combination of mobile networks, edge computing, and AI is the key enabler for IoT applications through cyber-physical spaces[11]. However, with the development of information technology, mobile communication has been transformed by novel applications. Recently, the International Telecommunication Union-Telecommunication Standardization Sector (ITU-T) reported seven representative use cases for Network 2030[12]. Some of the key network requirements go beyond the utmost performance of 5G. For example, human-size holographic communication requires 1 Tbps bandwidth, and some industrial IoT applications require 99.999999% availability. Current bandwidth, delay, reliability, and especially intelligence capabilities are insufficient to meet these requirements.
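To give a feel for what the availability figure above implies, the following minimal sketch converts an availability percentage into the maximum downtime it allows per year. The function name and the 365-day year are assumptions made for illustration, and the 99.999% value is included only as a comparison point that is not taken from the text. Under these assumptions, 99.999999% availability corresponds to roughly 0.3 seconds of downtime per year.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # assumes a non-leap year


def downtime_seconds_per_year(availability_percent: float) -> float:
    """Return the maximum yearly downtime implied by an availability target."""
    unavailability = 1.0 - availability_percent / 100.0
    return unavailability * SECONDS_PER_YEAR


if __name__ == "__main__":
    # 99.999% is a comparison value chosen for this sketch;
    # 99.999999% is the industrial IoT figure quoted in the text.
    for target in (99.999, 99.999999):
        seconds = downtime_seconds_per_year(target)
        print(f"{target}% availability -> {seconds:.3f} s of downtime per year")
```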

To address the aforementioned challenges, "genie" was introduced to represent autonomous agents[3]. Genie exists in the virtual world and can communicate and make decisions without human involvement. It has intelligent "consciousness" and can represent feelings, intuitions, emotions, ideas, rationality, sensibility, exploration, learning, and cooperation through characterization, extension, mixing, and even compilation[5]. Therefore, the communication objects of 5G, "human-machine-thing," will evolve to "human-machine-thing-genie" in 6G.

Ubiquitous-X is a fundamental architecture of the 6G network and a specific carrying form of "human-machine-thing-genie". Genie can reside on any communication and computing node or the virtual world formed around the user to enable the information exchange of all communication objects. It will definitely reshape the 6G network and solve the limitations of 5G networks, especially regarding endogenous intelligence, which is to achieve the ubiquity of communications, computing, control, and consciousness (UC4)[5]. Some key enabling techniques and challenges for Ubiquitous-X can be summarized as follows.

2.1.1 Multi-dimensional bearing resources

Ubiquitous-X coordinates multi-dimensional resources, namely communication (i.e., frequency, time, code, and space), computing, and caching resources. The incorporation of computing and caching functionality into communication systems enables information processing, distribution, and high-speed retrieval within the network. By utilizing multi-dimensional resources, it is possible for users to access services and information through any platform, any device, and any network. With the emergence of applications with rate requirements on the order of Tbps, such as holographic type communications (HTC) or brain-computer interactions (BCI), the frequency band should be extended from mmWave to terahertz (THz). The transmission characteristics of THz waves are quite different from those of the lower frequency bands. THz signals suffer from severe path loss and weak diffraction ability, as well as severe molecular absorption loss. Fortunately, due to the short wavelength, the application of ultra-large-scale multiple-input-multiple-output (MIMO) becomes possible, which can make full use of space resources and perform beamforming in multiple directions, thereby further improving the user's Signal to Interference and Noise Ratio (SINR). On the flip side, it will aggravate the non-stationary characteristics of the channel. The measurement and modelling of the propagation characteristics of THz channels still need further exploration[13]. To cope with massive URLLC scenarios which merge 5G URLLC with legacy mMTC, such as the Industrial IoT scenario, the Ubiquitous-X platform should be able to support smaller scheduling time units, non-orthogonal multiple access (NOMA) technology, and AI-based scheduling algorithms.
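The severity of path loss at THz frequencies can be illustrated with the standard free-space (Friis) path loss formula, FSPL = 20 log10(4πdf/c). The sketch below is only a rough illustration: the 28 GHz and 300 GHz carriers and the 10 m distance are assumed example values, not figures from this paper, and the model deliberately ignores the molecular absorption and diffraction effects mentioned above, which make the real THz channel worse. In free space alone, moving from 28 GHz to 300 GHz adds about 20.6 dB of loss at any fixed distance.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fspl_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss in dB (Friis model, isotropic antennas).

    Molecular absorption, which matters at THz, is NOT modelled here.
    """
    return 20.0 * math.log10(
        4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT
    )


if __name__ == "__main__":
    distance_m = 10.0  # assumed link distance for illustration
    # Carrier frequencies below are assumed examples, not values from the text.
    for label, f_hz in (("28 GHz (mmWave)", 28e9), ("300 GHz (THz)", 300e9)):
        print(f"{label}: {fspl_db(distance_m, f_hz):.1f} dB at {distance_m:.0f} m")
```

Because the extra loss grows with frequency while antenna elements shrink with wavelength, this is one way to see why large antenna arrays with beamforming gain are usually paired with THz links.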

2.1.2 End-to-end UC4 aggregation

Intelligence will become an inherent feature of Ubiquitous-X networks and will be deployed from the core network to edge computing nodes. With the increasing demand for a truly immersive user experience, the requirement of higher-dimensional perception, representation, transmission, and decision making emerges. Ubiquitous-X will orchestrate communication, computing, control, and consciousness to represent the traditional five senses, namely sight, hearing, taste, smell, and touch. It will also support the representation of human emotions, especially through wireless BCI, to provide a fully immersive experience. The relevant factors affecting immersive services, such as brain cognition, body physiology, and gestures, as well as new performance indicators like Quality-of-Physical-Experience (QoPE)[14], will also be considered. Meanwhile, thanks to the intelligent features of connected IoT devices, it is possible to support real-time control of physical entities to realize the tactile Internet.

2.1.3 Expansion of the communication dimension

Unmanned aerial vehicles (UAVs) are widely discussed as a supplement or extension to 5G coverage in academia and industry[15]. However, due to the limitations of battery capacity and complicated interference among transmissions, the vision of ubiquitous connection cannot be fully achieved. The 6G Space-Air-Ground-Sea Integrated Communication Networks (SAGSIN) consisting of satellite systems, aerial networks, terrestrial communications, and undersea wireless communications can support truly seamless full coverage communication by dynamically and adaptively converging various types of networks enabled by all kinds of communication technologies to meet the service requirements of complex and diverse application scenarios. The geographical communication dimension of intelligent IoT devices will be greatly expanded in the 6G era.

Different from geosynchronous satellites, Low Earth Orbit (LEO) satellites usually operate 500-2000 kilometres above the Earth. Therefore, the transmission delay is greatly reduced, which can provide a better user experience. By using Ka or Ku frequency bands, the transmission rate of a single LEO beam can reach the order of Gbps. With technological improvement, the manufacturing, launching, and maintenance costs of LEO satellites are becoming affordable. Meanwhile, with the rapid advancement of deep-sea manned and unmanned detectors, undersea wireless communication-related technologies will also be included as part of Ubiquitous-X networks[16].
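The delay advantage of LEO can be made concrete with a back-of-the-envelope calculation of one-way propagation delay, altitude divided by the speed of light. The sketch below assumes the satellite is directly overhead (the shortest possible path) and uses the commonly cited geostationary altitude of about 35 786 km as the comparison point; that altitude and the function name are assumptions of this example rather than values from the paper. Under these assumptions, the 500-2000 km LEO range gives roughly 1.7 to 6.7 ms one way, against roughly 119 ms for a geostationary satellite.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # km/s in vacuum
GEO_ALTITUDE_KM = 35_786.0         # assumed geostationary altitude (not from the text)


def one_way_delay_ms(altitude_km: float) -> float:
    """Propagation delay in ms for a satellite directly overhead.

    Real slant paths are longer, and processing/queuing delays are ignored.
    """
    return altitude_km / SPEED_OF_LIGHT_KM_S * 1000.0


if __name__ == "__main__":
    for label, altitude in (
        ("LEO, lower bound", 500.0),
        ("LEO, upper bound", 2000.0),
        ("GEO", GEO_ALTITUDE_KM),
    ):
        print(f"{label} ({altitude:.0f} km): {one_way_delay_ms(altitude):.2f} ms one way")
```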

2.1.4 Endogenous security

Security and privacy should be key features of 6G networks. Endogenous security aims to improve the reliability of the networks. In Ubiquitous-X, it can be achieved in both the network layer and the physical layer. Blockchain technologies are exploited to support distributed security services, as will be further elaborated in Section 4.2. To realize physical layer security, technologies including channel, code, power, and signal design[17], along with flexible key management mechanisms, should be adopted. Note that polar codes are considered a promising channel coding method for the control channel in 5G networks due to their low encoding and decoding complexity. Since polar codes can achieve the secrecy capacity of wiretap channels[18], they have good prospects in data channel coding and physical layer security in 6G.

2.2 AIoT Architecture

With the rapid evolution of AI and wireless infrastructure, their merging will realize the endogenous intelligence of 6G networks. Based on IoT, which provides ubiquitous connectivity to various end devices, AIoT takes a step further by integrating AI with IoT, aiming at providing intelligent connectivity, efficient computing, security, and high scalability for terminal devices. AIoT will construct an intelligent ecosystem, where the massive amount of data will be sensed by end devices, then stored and analysed using ubiquitous caching and computing resources at the network edge via big data analytics techniques to realize real-time cognition and reasoning. As the inevitable trend of the development of IoT, AIoT will become the main driver for the intelligent upgrading of traditional industries.

The development of AIoT can be envisioned as three stages: single machine intelligence, interconnection intelligence, and active intelligence. As for the stage of single machine intelligence, AIoT devices need to wait for interaction requirements initiated by users. Therefore, AIoT devices need to accurately perceive, recognize, and understand various instructions of users, such as voice and gesture and make correct decisions. Then at the stage of interconnection intelligence, AIoT devices are interconnected, adopting the mode of "one brain (cloud or central control), multiple terminals (perceptron)." At the stage of active intelligence, the AIoT system has the ability of self-learning, self-adaptability, and self-improvement. According to user's behavior preference and surrounding environment, it works like a private secretary and actively provides suitable services without waiting for instructions. Compared with the stage of interconnection intelligence, active intelligence achieves real intelligence and automation of AIoT.

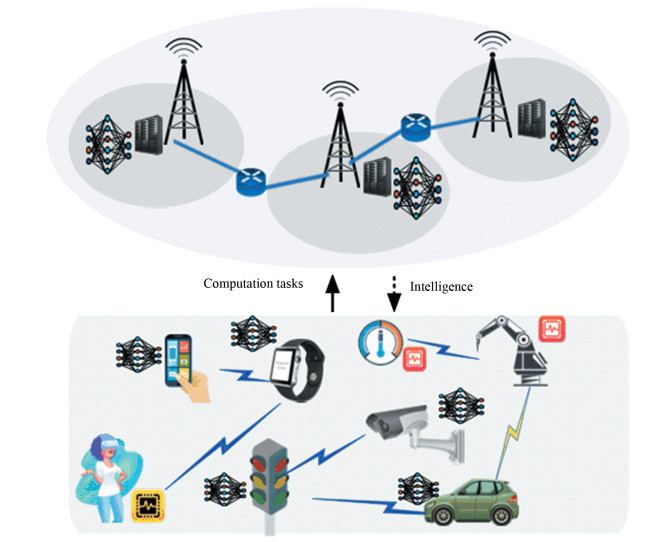

As shown in Fig. 1, the architecture of AIoT under 6G Ubiquitous-X includes four layers, namely perception layer, network layer, processing layer, and application layer. 6G Ubiquitous-X empowers AIoT devices with UC4. These AIoT devices work as the traditional five senses in the digital society and exchange feelings, intuitions, emotions, and ideas via exploration, learning, and cooperation. In this way, AIoT under 6G Ubiquitous-X supports the integration of human-machine-thing-genie communication with the aim of realizing ubiquitous AI services from the core network to AIoT devices. While AIoT could be the intelligent digital nervous system, genie acts like the brain of the overall system to give each unit entity in the four layers intelligent "consciousness".

Fig. 1 Four-layer architecture for AIoT under 6G Ubiquitous-X

The perception layer is the foundation of the upper layers, where sensing-type, communication-type, processing-type, and control-type IoT devices are widely deployed. These IoT devices function like human eyes, noses, ears, and skin to obtain information via identification, positioning, tracking, and monitoring. The network layer is the most important infrastructure of AIoT and serves as the link between the perception layer and the processing layer. It works like human nerves and transmits perception information and signaling information over unlicensed networks (WiFi/LoRa/Zigbee/Bluetooth) and licensed networks (2G/3G/4G/5G/6G/…/XG). Exploiting AI, big data analytics, cloud computing, multi-access edge computing (MEC), blockchain, and trusted computing, the processing layer will process the massive data generated from the perception layer. It works like the human brain and performs data storage, classification, security protection, and results acquisition. Other than the development of innovative supporting technologies for AIoT, the implementation of AIoT functionalities to support real-world applications ranging from smart city to smart factory is a key challenge. The implementation of AIoT can be considered as the human body, which carries out decisions made by the human brain (the processing layer of AIoT).

3 Challenges Moving Toward AIoT

The exponential growth of smart IoT devices and the fast expansion of applications pose significant challenges for the evolution of AIoT, including massive and intelligent connectivity, efficient computing, security, privacy, authentication, and high scalability and efficiency. In this section, these challenges in advancing toward AIoT will be discussed in detail.

3.1 Massive and Intelligent Connectivity

With the development of information and communication technologies, the network scale is being immensely extended and the number of smart IoT devices keeps increasing dramatically. Massive and intelligent connectivity is the combination of mobile networks, AI, and the linking of billions of devices through the IoT. To support flexible and reliable communication, the connectivity technologies among a variety of devices have been extensively investigated for many years, including cellular technology (2G/3G/4G), Bluetooth, Low Power Wide Area (LPWA), Wi-Fi, WiMAX, and Narrowband IoT (NB-IoT)[19-23]. Besides, to support the large number of intelligent IoT devices on limited bandwidth and maximize spectrum efficiency, some promising radio access techniques, such as massive MIMO, cognitive radio (CR), beamforming, and NOMA, are widely researched in IoT[24-27]. While massive connectivity and intelligent techniques are already being exploited in 5G networks, AIoT deployments will be much denser and more heterogeneous, with more diverse service requirements. Taking the massive and intelligent connectivity of AIoT into consideration, many challenges remain, as follows.

3.1.1 Multi-radio access challenges

In dynamic network environments, rapid and precise channel division and multiple-radio access are essential for massive IoT connections. For example, time division duplex (TDD), orthogonal frequency-division multiplexing (OFDM), NOMA, and MIMO, which utilize the time/frequency/power/code/space domain to support multiple access to massive IoT devices, have been extensively investigated in a variety of IoT scenarios supported by WiFi, WiMAX, NB-IoT, etc. The foundation of these multi-radio access techniques is accurate and real-time channel state information (CSI). However, in IoT, the network traffic and topology change frequently, i.e., out of all devices, only a few are active at any given moment. It is critical to exploit the sparsity in the IoT device activity pattern to deal with the high-dimensional channel estimation problem. Moreover, considering the huge computational and communication overhead, it is still challenging to embrace intelligent learning algorithms in IoT networks connecting massive numbers of diverse devices. In summary, conventional multi-radio access methods cannot satisfy the requirements of massive and intelligent connectivity. How to intelligently manage multi-radio access for massive connectivity is a significant challenge.

3.1.2 Radio and network configuration challenge

The radio configuration usually specifies the amount of wireless resources allocated to each group of AIoT devices for accessing the network and transmitting data. Considering the billions of connected, resource-constrained AIoT devices, light-weight and automated radio configuration of distinct communication functions, as well as network configuration on all layers of the network stack, become urgent research areas. Such configuration, however, is time-consuming and becomes infeasible for highly dynamic, large-scale AIoT. Software-defined networking (SDN) and network functions virtualization (NFV) are promoted to facilitate much more flexible configuration and handle the evolution of IoT services[28-29]. However, in SDN-enabled IoT, flow handling and the configuration performed by the controller may bring additional network overhead, take additional time, and add latency to end-to-end flow transactions. How to make radio and network configuration more feasible and intelligent for supporting massive and intelligent connectivity is thus an emerging topic for future AIoT.

3.1.3 Beamforming challenge

To support massive connectivity, beamforming combined with mmWave and massive MIMO technology becomes a promising solution, which can effectively improve energy efficiency. However, traditional beamforming methods may incur high overhead for mmWave in dynamic and complicated IoT communication. In order to make mmWave and the corresponding beamforming strategy intelligent, machine learning (ML) based beamforming optimization might be a potential solution.

In summary, 6G facilitates the deployment of massive IoT in ultra-wide areas with ultra-high density. By combining the cutting-edge AI and the linking of billions of devices of IoT, massive and intelligent connectivity are enabling transformational new capabilities in transport, entertainment, industry, public services, and so on. Therefore, to support a huge volume of low-cost, low-energy consumption devices on a network with excellent coverage, AIoT should support a huge number of connections and bring more intelligent interconnection of physical things on a massive scale.

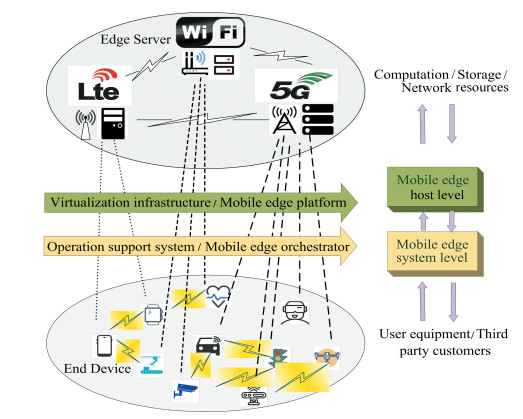

3.2 Efficient Computing

With the deep integration of AI and IoT, our time has witnessed a paradigm shift from using dedicated sensors to collect data for offline processing to collective intelligence, where end devices are no longer just data collectors but also intelligent agents. However, due to the limited battery capacity and computing capability at end devices, it is hard to meet the stringent requirements of intelligence acquisition. Therefore, it is of critical importance that the ubiquitous computing resources can be fully exploited to realize the evolution from connected things to connected intelligence. As illustrated in Fig. 2, end devices could offload their computing-intensive tasks to an edge server or a central cloud server for fast processing.

Fig. 2 Mobile computing enabled AIoT

3.2.1 Processing of computation intensive tasks

Mobile cloud computing (MCC) provides powerful computing capabilities at the cloud server to tackle the limited capabilities of end devices by offloading computation tasks to the cloud server[30]. By relieving the burden of computation at end devices, MCC decreases the manufacturing cost of end devices and extends the lifetime of end devices[31]. However, since the cloud server is located in the core network, the transmission and result feedback delay of cloud computing is in the order of tens to hundreds of milliseconds, which cannot meet the requirement of ultra-reliable and low latency communication in future 6G[32]. As predicted by Cisco[33], mobile data traffic will grow dramatically and nearly 850 ZB per year will be generated by people and machines by 2021. Therefore, the transmission and processing delay caused by network congestion at core network is a major drawback of MCC and calls for improved architecture design.

3.2.2 Highly responsive computing service

With the advancement in computing and storage technology, computing power at the network edge (e.g., base stations, access points (APs), and end devices) has increased multi-fold during the last decade. MEC is regarded as a promising technology to cope with the drawback of long delay in cloud computing by pushing the computing service to the close proximity of end devices[34-35]. This emerging technology leverages the computation resources at massive edge devices and edge servers for task processing, thus avoiding the overhead of uploading computation tasks to the core network as in MCC[36-37]. The highly responsive service provided by MEC has attracted widespread attention from both academia and industry. It has been applied to innovative applications such as IoT, autonomous driving, and real-time big data analytics[38-39]. Particularly, in AIoT, massive devices will generate a large amount of data which calls for real-time processing to enable novel applications such as smart cities and industrial automation. With the assistance of MEC, the collected data at end devices can be offloaded to nearby edge servers or other devices with idle computing resources for fast processing.

3.2.3 Joint multi-dimensional resource allocation

To realize efficient computing service, task offloading strategy design and resource management scheme design are the key issues that need to be addressed in MEC[40]. Task offloading strategy investigates the problems of when and where to process the computation tasks, including whether or not the task should be offloaded to edge server or a nearby device for processing, and the time and order of task offloading. Considering the limited computational and communication resources, resource management schemes must be carefully designed to coordinate the task offloading among multiple devices and edge servers to achieve efficient computing. Due to the highly dynamic environment of MEC system, such as the random arrival of tasks, the time-varying wireless channels, and the stochasticity of computational resources at edge servers, the task offloading and resource management scheme design is not trivial.
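The "whether to offload" part of this decision can be sketched with a deliberately simple latency-only model: local execution takes cycles divided by the device CPU frequency, while offloading takes upload time (data size over uplink rate) plus execution time at the edge server. This is a minimal sketch under stated assumptions, not a scheme from the literature cited above; the `Task` fields, function names, and all numeric values are hypothetical, and energy cost, queuing at the server, and result feedback delay are ignored.

```python
from dataclasses import dataclass


@dataclass
class Task:
    data_bits: float   # input data that must be uploaded if the task is offloaded
    cpu_cycles: float  # CPU cycles required to process the task


def local_latency_s(task: Task, local_hz: float) -> float:
    """Latency when the end device processes the task itself."""
    return task.cpu_cycles / local_hz


def offload_latency_s(task: Task, uplink_bps: float, edge_hz: float) -> float:
    """Latency when the task is uploaded and processed at an edge server."""
    return task.data_bits / uplink_bps + task.cpu_cycles / edge_hz


def should_offload(task: Task, local_hz: float, uplink_bps: float, edge_hz: float) -> bool:
    """Offload only if it is strictly faster than local processing."""
    return offload_latency_s(task, uplink_bps, edge_hz) < local_latency_s(task, local_hz)


if __name__ == "__main__":
    # Hypothetical numbers: 1 Mbit input, 1e9 cycles, 1 GHz device, 10 GHz edge server.
    task = Task(data_bits=1e6, cpu_cycles=1e9)
    for uplink_bps in (10e6, 0.5e6):  # good vs. poor wireless channel
        decision = should_offload(task, local_hz=1e9, uplink_bps=uplink_bps, edge_hz=10e9)
        print(f"uplink {uplink_bps / 1e6:.1f} Mbps -> offload: {decision}")
```

Even this toy model shows why the decision is coupled to the time-varying wireless channel: the same task is worth offloading on a good uplink and not on a poor one.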

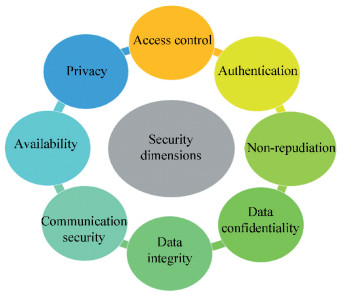

3.3 Security, Privacy, and Authentication

Security, privacy, and authentication are critical tasks for the standardization of any mobile communications system. ITU-T security recommendations explore a set of security dimensions to provide protection against all major security threats[41], as shown in Fig. 3. The eight security dimensions cover user information, network, and applications. Their relationships with the AIoT are introduced as follows.

Fig. 3 Security dimensions defined by ITU-T

·Access control is the basic and important strategy for AIoT security. It guarantees that only authorized AIoT devices can use network resources and prevents unauthorized devices from accessing the network elements, services, stored information, and information flows.

·Authentication confirms identities of AIoT devices, ensures validity of their claimed identities, and provides assurance against masquerade or replay attacks.

·Non-repudiation requires that neither the transmitter nor the receiver can deny the transmitted information.

·Data confidentiality protects data from disclosure to unauthorized AIoT devices and ensures that the data content cannot be understood by unauthorized AIoT devices.

·Data integrity guarantees the correctness or accuracy of data, and protects it from unauthorized creation, modification, deletion, and replication by AIoT devices. It also provides indications of unauthorized data-related activities of AIoT devices.

·Communication security ensures that information flows only between the authorized AIoT devices and is not diverted or intercepted while in transit.

·Availability makes sure that authorized AIoT devices are not denied access to network resources, stored information or its flow, services, and applications.

·Privacy protects the information of AIoT devices that might be derived from the observation of their activities.

Even though the computing power, energy capacity, and storage capabilities of AIoT have been significantly improved, the negative impact of potential attacks on AIoT is drastically increasing due to the insufficient enforcement of the security requirements shown in Fig. 3. For example, different from wired communication, wireless networks do not need physical connections and are open to outside intrusions. The broadcast nature of wireless transmissions makes wireless-enabled AIoT devices more vulnerable to potential attacks. In a smart factory, if confidentiality is not ensured, an adversary could discover the manufacturing process. If data are not integrity protected, an adversary could inject false data into the system, thus modifying the manufacturing process with a potential impact on safety. If availability is not guaranteed, the factory could stop all its machines in order to maintain a safe state. Moreover, due to the innate heterogeneity of AIoT systems, the conventional trust and authentication mechanisms are inapplicable. The non-negligible threats to AIoT devices make security, privacy, and authentication still the major challenges for AIoT in XG. Therefore, to simplify the development of secure AIoT, it is important to comprehend the potential solutions that can provide a secure, privacy-compliant, and trustworthy authentication mechanism for AIoT.
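As one concrete illustration of the data integrity dimension in the smart factory example, a sensor reading can carry a keyed message authentication code so that injected or modified data is detected by the receiver. The sketch below uses HMAC-SHA256 from the Python standard library; the choice of HMAC, the pre-shared key, and the message format are assumptions of this example and are not mechanisms proposed in the paper. Note that this provides integrity and origin authentication only, not confidentiality, and key distribution is out of scope.

```python
import hashlib
import hmac


def tag_message(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message with a pre-shared key."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_message(key: bytes, message: bytes, tag: bytes) -> bool:
    """Check the tag in constant time; False means the data was altered or forged."""
    return hmac.compare_digest(tag_message(key, message), tag)


if __name__ == "__main__":
    shared_key = b"hypothetical-pre-shared-key"  # illustrative only
    reading = b"line=3;temperature=71.5"         # hypothetical sensor payload
    tag = tag_message(shared_key, reading)

    print("genuine reading accepted:", verify_message(shared_key, reading, tag))
    forged = b"line=3;temperature=20.0"          # adversary injects false data
    print("forged reading accepted:", verify_message(shared_key, forged, tag))
```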

3.4 High Scalability and Efficiency

The unbounded increase of heterogeneous AIoT devices and the huge data they generate demand scalable and energy-efficient infrastructure for uninterrupted communication. In AIoT, ML coupled with AI can utilize these generated data to predict the network conditions and user behaviors to offer insight into which processes are redundant and time-consuming, and which tasks can be fine-tuned to enhance scalability and efficiency. The scalability of the AIoT refers to the capacity of a network or its potential to support increasing reach and number of users. It also requires the network to be able to add any device, service or function without negatively affecting the quality of existing services. In recent years, researchers have extensively investigated AI-based solutions for optimizing IoT in the context of lower communication overhead and higher energy and computational efficiency[42-46]. Leveraging the huge volume of data in various IoT applications, AI-based solutions could analyze and learn from the complicated network environments and then optimize the network and resource maintenance. Furthermore, using some online learning mechanisms, the learn-and-adapt network management schemes can greatly enhance AIoT user experience in terms of low service delay, high system resilience, and adaptivity[47]. However, AI-based applications are usually computationally intensive and may be unable to enhance themselves continuously and scalably from a flowing stream of massive AIoT data. Since AIoT applications are more bandwidth-hungry, computation-intensive, and delay-intolerant, the scalability and efficiency issues are critical to AIoT network optimization as well as the user experience. The challenges in terms of high scalability and efficiency are illustrated in the following sections.

3.4.1 Routing optimization challenge

In AIoT, massive devices may collect, process, and store data and information through the network via single-hop or multi-hop relays. The routing mechanism is one of the key components for enabling effective links and communication among different devices, and it has a significant impact on the scalability and efficiency of future AIoT. Generally, different AIoT services have distinct requirements for network resources and quality of service, so different routing solutions should be designed to handle different AIoT traffic effectively and support this variety of AIoT applications. Adaptive routing and shortest path routing algorithms are two of the most popular routing optimization approaches. However, the high computational complexity of the adaptive routing algorithm limits its performance in current AIoT. Meanwhile, shortest path routing is a best-effort routing protocol and may result in low resource utilization. Moreover, in ultra-dense heterogeneous AIoT, most devices are very close to each other and multiple networks mostly overlap. The interference issues among these AIoT devices cannot be ignored. Thus, how to optimize routing protocols in future AIoT to enhance system performance in terms of scalability, reliability, and efficiency is still a challenge to be tackled.
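For reference, shortest path routing over a multi-hop topology can be sketched with Dijkstra's algorithm, a standard choice for non-negative link costs. The code below is a generic illustration rather than a protocol from the cited works; the node names, link costs, and function name are hypothetical. Because it picks one minimum-cost path and ignores load and interference on the links, it also hints at why pure shortest path routing can leave other links under-utilized.

```python
import heapq
from typing import Dict, List, Tuple

Graph = Dict[str, Dict[str, float]]  # node -> {neighbor: non-negative link cost}


def shortest_path(graph: Graph, source: str, target: str) -> Tuple[float, List[str]]:
    """Dijkstra's algorithm; returns (total cost, path) or (inf, []) if unreachable."""
    dist = {source: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry for an already settled node
        visited.add(node)
        if node == target:
            break
        for neighbor, cost in graph.get(node, {}).items():
            candidate = d + cost
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                prev[neighbor] = node
                heapq.heappush(heap, (candidate, neighbor))
    if target not in dist:
        return float("inf"), []
    path = [target]
    while path[-1] != source:  # walk predecessors back to the source
        path.append(prev[path[-1]])
    path.reverse()
    return dist[target], path


if __name__ == "__main__":
    # Hypothetical topology: a sensor reaching a gateway through two relays.
    topology: Graph = {
        "sensor": {"relay_a": 1, "relay_b": 4},
        "relay_a": {"relay_b": 1, "gateway": 5},
        "relay_b": {"gateway": 1},
    }
    print(shortest_path(topology, "sensor", "gateway"))
```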

3.4.2 Network optimization challenge

Due to the diverse networking and communications protocols, it is critical to optimize AIoT to adaptively support new operations and services without negatively affecting the quality of existing services. Specifically, AIoT nodes and servers, which adopt heterogeneous networking technologies, convey a multitude of data types that correspond to different applications, and bring forward a stringent need for flexible network architecture and intelligent network optimization solutions. The frequent exchange of data across network boundaries may increase the difficulty of network management. Moreover, optimizing networks for the provision of AIoT services differs from the management of traditional cellular networks due to the different profile of AIoT traffic. As discussed in Section 3.1, AIoT data traffic is typically sporadic, so network optimization should not only handle the average throughput but also support the peaks. Then, to promote intelligent network management, it is necessary to introduce some autonomous mechanisms into the network, including planning, configuration, management, optimization, and self-repair. However, due to the limited computing and communication resources, it is still critical to predict the AIoT traffic accurately by learning from the huge volume of data. Therefore, how to design new network architecture and optimize network management to support scalable and efficient AIoT remains an open challenge.

3.4.3 Resource optimization challenge

The huge number of AIoT devices and the variety of AIoT applications will lead to increasing demands for storage, computing, and communication resources to handle data processing. Due to the heterogeneous and resource-limited AIoT devices, resource optimization for handling different types of devices becomes much more demanding. Considering the stochastic and time/space-varying features of the AIoT environment, real-time and accurate CSI plays a very important role in resource optimization. However, because of limited signaling resources, it may not be practical for AIoT devices to acquire real-time CSI from a wide range of heterogeneous AIoT devices. Thus, how to learn from the heterogeneous AIoT environment and schedule the multiple types of resources adaptively and intelligently to maximize resource efficiency and increase the scalability of AIoT systems remains a challenge.

To achieve high scalability and efficiency in AIoT with large-scale devices and a huge volume of data, it is imperative to develop new architectures and solutions by introducing enabling technologies, including big data analytics, MEC, ML, and blockchain. Future AIoT with highly sophisticated data computing and analytics capabilities can support autonomous network operation and maintenance, but these techniques still face many challenges in meeting the scalability and efficiency requirements of future AIoT.

4 AIoT under 6G Ubiquitous-X: Potential Enabling Technologies

Since AIoT, which combines AI and IoT, is still emerging, its development can be influenced by many other promising technologies, such as MEC, blockchain, AI, and physical layer security, while AIoT may affect them as well. This section explores the potential benefits and interactions when these technologies are applied to AIoT.

4.1 Multi-Access Edge Computing

With the fast merging of AI and IoT, massive numbers of end devices with computing capabilities will be connected to support delay-sensitive and computation-intensive applications. Mobile edge computing is envisioned as a promising technique to support these innovative applications on resource-limited end devices by providing cloud-computing capabilities at the edge of the network[48]. As networks shift toward heterogeneous architectures, the MEC Industry Specification Group of the European Telecommunications Standards Institute (ETSI) extended the concept of mobile edge computing to multi-access edge computing (MEC) to address their multi-access characteristics.

The MEC framework proposed by ETSI describes a hierarchical structure with three levels, from bottom to top: the network level, the mobile edge host level, and the mobile edge system level[49]. At the network level, various networks, including 3GPP mobile networks, local access networks, and other external networks, are interconnected. As illustrated in Fig. 4, the heterogeneous networks include LTE, WiFi, and 5G. At the mobile edge host level, by leveraging virtualization technologies including virtual machines (VM), SDN, and NFV, the mobile edge host offers a virtualization infrastructure that provides computation and storage resources. At the mobile edge system level, user equipment (UE) applications can directly exploit MEC via the customer facing service (CFS) portal.

Fig. 4 Illustration of multi-access edge computing scheme

To support AIoT, technology companies have released new hardware and software to realize AI at the network edge. In 2017, Microsoft introduced Azure IoT Edge, which supports workloads such as AI and big data analytics running on IoT edge devices via standard containers. In 2018, Google announced two products, namely Edge TPU and Cloud IoT Edge. Edge TPU is a hardware chip designed for ML models, and Cloud IoT Edge is a software stack that extends AI capability to gateways and connected devices. Nvidia developed the Jetson TX2 as a powerful embedded AI computing device, which can be deployed at the edge of the network to enable edge AI. There has been extensive research on the system design and resource optimization of MEC, and the following sections give a concise overview.

4.1.1 Computation offloading design

Due to the limited power and computation capability of end devices, it is hard to meet the service requirements of computation-intensive tasks using local computing resources only. Therefore, end devices can leverage the communication resources and the computing resources at the network edge to process their tasks. The convergence of computing and communication can enhance the system capability to support large-scale end devices[50-51]. Considering the application preference, the remaining power of the end device, and the availability of communication and computing resources at the network edge, a computation offloading scheme mainly solves the problem of when and where to offload computation tasks[52].

In single-user MEC systems, the authors in Ref. [53] investigated computation offloading schemes that exploit the time-varying channel and helper-CPU conditions to minimize the energy consumption of devices. The authors in Ref. [54] proposed a Lyapunov optimization-based dynamic computation offloading algorithm to minimize the task execution cost, which consists of the execution delay and task failure. In Ref. [55], the authors jointly optimized the computational speed of the device, the transmission power of the device, and the offloading ratio to minimize the energy consumption of the device and the latency of application execution.

In multi-user MEC systems, the computation offloading design is more complicated due to the resource competition among multiple users. In Ref. [56], the authors designed a decentralized tit-for-tat mechanism to incentivize selfish devices to participate in fog computing in distributed wireless networks. The authors in Ref. [57] proposed an alternating direction method of multipliers (ADMM) based task offloading algorithm to minimize the total system cost of energy and task execution delay in fog and cloud networks with NOMA. In Ref. [58], based on multi-armed bandit theory, the authors proposed an adaptive learning-based task offloading algorithm to minimize the average offloading delay in a distributed manner without requiring frequent state exchange.

Moreover, MEC has been employed to reveal, in a timely manner, the status information embedded in sampled data. The authors in Ref. [59] employed a new performance metric based on Age of Information to jointly optimize task generation, computation offloading, and resource allocation, so as to maintain the freshness of status updates obtained by executing computation tasks in MEC-enabled status update systems.
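The question of when to offload can be illustrated with a minimal sketch. It assumes a simple hypothetical cost model (a weighted sum of delay and device energy, a fixed uplink rate, and negligible result download time); the class names, parameters, and weights are illustrative assumptions and do not reproduce the schemes of Refs. [53-59].

```python
from dataclasses import dataclass


@dataclass
class Task:
    data_bits: float   # input data to upload if the task is offloaded (bits)
    cpu_cycles: float  # CPU cycles needed to finish the task


@dataclass
class Device:
    cpu_hz: float            # local CPU frequency (cycles per second)
    energy_per_cycle: float  # local energy per CPU cycle (joules), assumed constant
    tx_power_w: float        # transmit power while uploading (watts)


def local_cost(task, dev, w_delay=0.5, w_energy=0.5):
    """Weighted delay/energy cost of executing the task on the device."""
    delay = task.cpu_cycles / dev.cpu_hz
    energy = task.cpu_cycles * dev.energy_per_cycle
    return w_delay * delay + w_energy * energy


def offload_cost(task, dev, uplink_bps, edge_cpu_hz, w_delay=0.5, w_energy=0.5):
    """Weighted cost of uploading the input and executing at the edge server.

    Assumption: the device only spends energy on transmission, and the
    time to download the result is ignored.
    """
    tx_time = task.data_bits / uplink_bps
    delay = tx_time + task.cpu_cycles / edge_cpu_hz
    energy = dev.tx_power_w * tx_time
    return w_delay * delay + w_energy * energy


def should_offload(task, dev, uplink_bps, edge_cpu_hz):
    """Offload only when it is strictly cheaper than local execution."""
    return offload_cost(task, dev, uplink_bps, edge_cpu_hz) < local_cost(task, dev)


task = Task(data_bits=1e6, cpu_cycles=1e9)
dev = Device(cpu_hz=1e9, energy_per_cycle=1e-9, tx_power_w=0.5)
good_link = should_offload(task, dev, uplink_bps=10e6, edge_cpu_hz=10e9)
poor_link = should_offload(task, dev, uplink_bps=0.1e6, edge_cpu_hz=10e9)
```

With these illustrative numbers, offloading pays off over the fast uplink, whereas over the slow uplink the transmission time dominates and local execution is preferred. A practical scheme would additionally account for time-varying channels and competition among users, as in the works surveyed above.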

4.1.2 MEC with heterogeneous computation resources

With the proliferation of new applications, processing requirements exceed the capability of the central processing unit (CPU). The graphics processing unit (GPU) has evolved to accelerate the processing of computation tasks owing to its highly parallel computing capability[60]. Computing architectures are becoming hybrid systems in which GPUs work in tandem with CPUs. By leveraging heterogeneous computation resources, an end device can offload computation tasks to the best-suited processor to achieve better performance. Therefore, how to jointly design the computation task partitioning scheme and the resource allocation strategy in MEC systems with heterogeneous computation resources is an attractive research direction.

4.1.3 MEC with heterogeneous caching and communication resources

Incorporating computing and caching functionality into communication systems can support highly scalable and efficient content retrieval while significantly reducing duplicate content transmissions within the system[61]. The authors in Ref. [62] studied economically optimal mobile station (MS) association to trade off the cache-hit ratio against the ratio of MSs with satisfied quality of service (QoS) in cache-enabled heterogeneous cloud radio access networks. To address complex and dynamic control issues, the authors in Ref. [63] proposed a federated deep reinforcement learning-based cooperative edge caching (FADE) framework for IoT with mobile edge caching. They modeled the content replacement problem as a Markov decision process (MDP) and proposed a federated learning framework based on the double deep Q-network (DDQN) to reduce the performance loss, average delay, and offloaded backhaul traffic. The authors in Ref. [64] studied computation offloading with cached data and proposed a novel cache-aware computation offloading strategy for edge-cloud computing in IoT. They formulated the cache-aware computation offloading location problem to minimize the equivalent weighted response time of all jobs under computing power and cache capacity constraints. The authors in Ref. [65] proposed a software-defined information-centric Internet of Things (IC-IoT) architecture to bring caching and computing capabilities to the IoT network. Based on the proposed IC-IoT architecture, they designed a joint resource scheduling scheme to uniformly manage the computing and caching resources. The authors in Ref. [66] proposed an AI-enabled smart edge with a heterogeneous IoT architecture that combines edge computing, caching, and communication to minimize the total delay and determine the computation offloading decision.

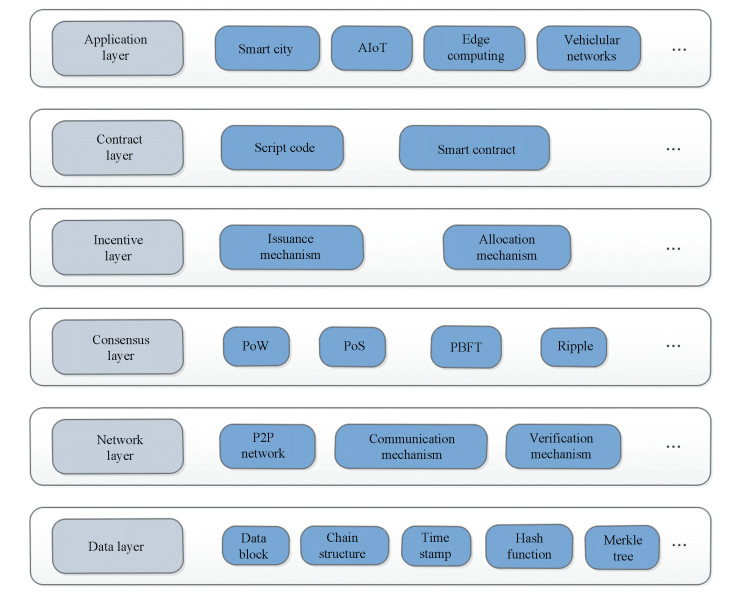

4.2 Blockchain

Blockchain, which was proposed as the underlying technology of digital cryptocurrency, has drawn widespread attention from both academia and industry. It is essentially a distributed and practically immutable ledger of transactions. Each block in a blockchain contains one or more transactions and points to the prior block[67]. The primary purpose of a blockchain is to securely save and validate transaction records in an untrustworthy peer-to-peer system by applying a consensus mechanism in a decentralized manner. In addition, transactions can invoke and execute operational code saved in the blockchain, enabling software services between untrustworthy devices. Since its first use in cryptocurrency[68], blockchain has attracted increasing interest with many use cases and applications. In particular, blockchain has been envisioned as a promising solution to many problems that arise when new technologies are integrated with AIoT.

The general hierarchical technical structure of the blockchain is shown in Fig. 5[69]. From the bottom to the top, there are six layers, including the data layer, network layer, consensus layer, incentive layer, contract layer, and application layer.

Fig. 5 Six-layer architecture in blockchain protocol stack

The data layer includes the basic data structure of the blockchain. Distributed ledgers are not limited to a chain of blocks and can also take other structures, such as a directed acyclic graph (DAG). In a chain of blocks, each block includes a number of transactions and is linked to the previous block, forming an ordered list of blocks. A block consists of the block header and the main data. The block header specifies the metadata, including the block version, the hashes of the previous and current blocks, the timestamp, the Merkle root, and other information. Specifically, the block version stores the relevant version of the blockchain system and protocol. Using hash pointers, all blocks can be linked together to form a chain based on the hash of the previous block. A Merkle tree is a binary hash tree whose nodes are linked to one another using hash pointers. Using the hash value of the Merkle tree root, all the transactions recorded in the current block can be checked easily and quickly. The block generation time is recorded in the timestamp. The main data of the block stores the transactions recorded in that block, and the data type depends on the service running on the blockchain.
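The roles of the hash pointer and the Merkle root can be sketched in a few lines of Python. This is a toy model under stated assumptions: SHA-256 is used throughout, transactions are plain strings, an odd number of nodes at a level is handled by duplicating the last node (one common convention), and the header fields and function names are illustrative rather than those of any particular blockchain system.

```python
import hashlib
import json
import time


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def merkle_root(transactions):
    """Hash transactions pairwise, level by level, until one root remains."""
    if not transactions:
        return sha256_hex(b"")
    level = [sha256_hex(tx.encode()) for tx in transactions]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # assumption: duplicate the last node
        level = [
            sha256_hex((level[i] + level[i + 1]).encode())
            for i in range(0, len(level), 2)
        ]
    return level[0]


def header_hash(header) -> str:
    return sha256_hex(json.dumps(header, sort_keys=True).encode())


def make_block(prev_hash, transactions, version=1, timestamp=None):
    """Build a block whose header carries the metadata listed in the text."""
    header = {
        "version": version,
        "prev_hash": prev_hash,  # hash pointer to the previous block
        "timestamp": time.time() if timestamp is None else timestamp,
        "merkle_root": merkle_root(transactions),
    }
    return {"header": header, "hash": header_hash(header),
            "transactions": list(transactions)}


def verify_chain(chain):
    """Check Merkle roots, block hashes, and links between blocks."""
    for i, block in enumerate(chain):
        header = block["header"]
        if header["merkle_root"] != merkle_root(block["transactions"]):
            return False
        if block["hash"] != header_hash(header):
            return False
        if i > 0 and header["prev_hash"] != chain[i - 1]["hash"]:
            return False
    return True


genesis = make_block("0" * 64, ["sensor-1 reports 21.5C"])
second = make_block(genesis["hash"], ["sensor-2 reports 40%", "sensor-3 reports OK"])
chain = [genesis, second]
valid_before = verify_chain(chain)

genesis["transactions"][0] = "sensor-1 reports 99.9C"  # tamper with stored data
valid_after = verify_chain(chain)
```

Because the Merkle root summarizes every transaction in the block and each header embeds the previous block's hash, changing a single stored transaction is detected when the chain is verified.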

The network layer, which is the basis of blockchain information interaction, is responsible for the consensus process and data transmission among the nodes, mainly including the P2P network and its security mechanism built on the basic network.

The consensus layer guarantees the consistency of node data and encapsulates various consensus algorithms together with the reward and punishment mechanisms that drive the nodes' consensus behavior. Many consensus protocols are applied in blockchain systems, and they can be roughly divided into proof-based consensus protocols (e.g., Proof of Work (PoW) and Proof of Stake (PoS)) and Byzantine fault-tolerant replication protocols (e.g., Practical Byzantine Fault Tolerance (PBFT) and Ripple). Different types of blockchains select different consensus protocols.
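As a concrete illustration of a proof-based protocol, the following sketch implements a toy Proof of Work puzzle: a node searches for a nonce such that the SHA-256 digest of the block data and nonce starts with a given number of zero hexadecimal digits. The difficulty rule, the string encoding, and the function names are simplifying assumptions and do not match the exact rules of any deployed blockchain.

```python
import hashlib


def pow_digest(block_data: str, nonce: int) -> str:
    return hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()


def mine(block_data: str, difficulty: int, max_nonce: int = 10_000_000):
    """Search for a nonce whose digest has `difficulty` leading zero hex digits."""
    prefix = "0" * difficulty
    for nonce in range(max_nonce):
        digest = pow_digest(block_data, nonce)
        if digest.startswith(prefix):
            return nonce, digest
    raise RuntimeError("no valid nonce found within max_nonce attempts")


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    """Verification needs a single hash, while mining needs many."""
    return pow_digest(block_data, nonce).startswith("0" * difficulty)


nonce, digest = mine("block-42|prev=abc123", difficulty=3)
accepted = verify("block-42|prev=abc123", nonce, difficulty=3)
```

The asymmetry between costly mining and cheap verification is what lets untrusted nodes check each other's work. It also suggests why PoW is demanding for resource-limited AIoT devices, since each additional zero digit multiplies the expected search effort by 16.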

The incentive layer introduces economic incentives that motivate nodes to contribute their efforts to verifying data in blockchain systems. It plays an important role in maintaining the decentralized blockchain system without any centralized authority.

The contract layer enables programmability in blockchain systems. Various script codes, smart contracts, and other programmable codes can be utilized to enable more complex programmable transactions.

The application layer includes relevant application scenarios and practical use cases of the blockchain. These applications may revolutionize their respective fields and provide efficient, secure, and decentralized management and optimization.

By leveraging blockchain, AIoT could be considerably improved from many perspectives. Blockchain could solve the single point of failure problem of the centralized AIoT management and control architecture, overcome the security and privacy challenges of data from ubiquitous sensing devices, provide solutions for data sharing and access control, and offer incentives for sharing valuable data and resources, thereby constructing a new type of Internet value ecology. The benefits of blockchain for AIoT are explored in the following sections.

4.2.1 Security and privacy

Existing works have discussed how to apply blockchain to improve security and privacy in the IoT from different points of view[70-73]. First, blockchain nodes are decentralized, which supports network robustness. Even if some nodes in the network are compromised by various attacks, other nodes can work normally and the data will not be lost. This feature of blockchain increases the overall network robustness compared with existing centralized and distributed data systems. Second, the transparency of blockchain can be utilized to make the data flow in the network completely open to users. This makes the usage of users' personal data traceable, and users are informed of how their data is used. Third, the immutability of data in the blockchain increases the reliability of activities in the network and enhances the mutual trust between users and service providers. Finally, the pseudonymity of blockchain helps users keep their real-world identities hidden for privacy protection.

4.2.2 Data and model sharing

Sharing data and information in a safe and effective manner is still challenging for many applications in AIoT. Blockchain can protect network data from tampering and effectively preserve the privacy of network users. Moreover, blockchain makes data sharing more transparent and secure. By better protecting the privacy of users' data and the security of users' applications, blockchain-empowered AIoT can provide better personalized services with more added value.

4.2.3 Credibility and malicious operation tracing

The application of blockchain can protect privacy and increase the credibility of the network at the same time. When a node disseminates malicious messages, the public availability of the blockchain means that the malicious node can be traced back by using the transaction records saved in the blockchain.

4.2.4 Enhancement of decentralized solutions

Blockchain is essentially a form of distributed ledger technology. Each node of the system runs a compatible consensus mechanism or protocol and can access the transaction records of nodes that participate in the network and send information. These nodes can interact with adjacent nodes but cannot practically change any records. The essence of the decentralized ledger is to protect it from network attacks, which is quite helpful for storing AIoT data in a safe and decentralized way.

4.3 AI Technologies: Big Data Analytics and Machine Learning

With the explosive growth of IoT applications, there are various requirements in different vertical domains, including health, transportation, smart home, smart city, agriculture, and education. AIoT is evolving toward highly complex systems driven by large volumes of data. Recently, the rapid development and widespread application of big data analytics and ML have brought promising opportunities to reshape network maintenance and architectures, especially with the assistance of analysis capabilities driven by the knowledge and statistical patterns hidden in massive data[74].

4.3.1 Big data analytics

Big data is one of the most important features of future networks: skyrocketing IoT traffic brings new requirements for data communication and, more importantly, the huge volume of data generated by the networks themselves contains a great deal of useful information for improving network management, resource allocation, security control, etc. Considering the huge amount and various characteristics of network data, big data analytics can perform comprehensive data fusion and analysis over the collected information, correlate various influencing factors with the network status, and uncover the causality and logic behind them with the help of ever-increasing computing capacity. Big data analytics, which processes large volumes of disparate and complex data comprehensively, is widely used to improve the performance of IoT applications and enhance IoT functions in various aspects, such as intelligent healthcare systems[75], smart home[76], smart city[77], and intelligent transportation systems[78].

4.3.2 Machine learning

Machine learning (ML), which possesses powerful data processing capabilities and provides feasible solutions to a variety of problems, is widely applied in IoT scenarios[79-81]. Current IoT typically involves a large number of network elements and IoT devices, which may generate a huge volume of data that can be used to analyze, learn, and train ML models to optimize the system performance. Moreover, multi-layer and multi-vendor IoT applications become highly complicated and need to be operated by more efficient solutions. Compared with conventional techniques, ML-based techniques, which train models and learn from large data sets, can substantially diminish human intervention and reduce the operation cost of handling large-scale complex IoT systems[82]. Generally, ML techniques can be classified into four categories, i.e., supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning (RL). Supervised learning uses a labeled training dataset to construct the model, while unsupervised learning uses a training dataset without labels. Semi-supervised learning combines a small amount of labeled data with a larger amount of unlabeled data. RL trains models through rewards and penalties. Leveraging ML techniques, AIoT applications can support predictive analysis to ensure that resource requirements are accurately met and to improve the efficiency of network operation. ML can also optimize network control and operation compared with other methods, especially when the network behavior is complex and involves massive numbers of parameters. Furthermore, applying ML in AIoT makes it possible to detect malicious behavior on the networks by deploying the trained models and algorithms.
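As a minimal illustration of supervised learning used for predictive analysis, the sketch below fits a straight line to labeled samples by ordinary least squares and uses it to predict the next value. The data (hour index versus traffic load) are invented for illustration, and a one-variable linear model is a deliberate simplification; practical AIoT traffic prediction would rely on richer models and real measurements.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    slope, intercept = model
    return slope * x + intercept


# Labeled training set (hypothetical): hour index -> observed traffic load
hours = [0, 1, 2, 3, 4]
load = [10.0, 12.0, 14.0, 16.0, 18.0]

model = fit_line(hours, load)
forecast = predict(model, 5)  # predicted load for the next hour
```

The labels (observed loads) are what make this supervised: the model parameters are chosen to minimize the squared error between predictions and labels on the training set.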

In summary, future AIoT with highly sophisticated data computing and analysis capabilities can support autonomous network operation and maintenance. With the help of virtualization and ever-increasing computing capacity, network elements become more intelligent and react quickly to changes in the network environment. Data from all network components at various levels could be stored and analyzed to produce an optimized solution, which can cut down the operating expense (OPEX) and capital expenditure (CAPEX). More intelligent self-maintaining scripts and algorithms will be developed for resource allocation and network operation to free engineers from cumbersome manual processes. The AIoT can then update the network parameters autonomously during network operation. With the advent of big data analytics and ML technologies, massive numbers of intelligent IoT devices will generate huge volumes of diverse data and, in turn, become smarter at learning, training, and making decisions.

4.4 Physical Layer Security

As a promising complement to higher-layer encryption techniques, physical layer security has received much attention in wireless communications. Without relying on upper-layer encryption and cryptographic approaches, physical layer security has a unique built-in security mechanism based on the characteristics of the wireless channel and offers a feasible path toward realizing the "one-time pad". With the development of new radio technologies in 5G, such as massive MIMO[83], mmWave[84], and NOMA[85-86], the channel spatial resolution will be greatly improved and the built-in security elements will become more abundant and achievable, which significantly enhances physical layer security with endogenous wireless security attributes. The basic idea of physical layer security is to use channel coding and signal processing techniques to transmit secret messages between the source and the destination while guaranteeing confidentiality against eavesdroppers. Depending on whether a key is generated, physical layer security is divided into two categories: information-theoretic security and physical layer encryption, both of which can be effective in resolving the boundary, efficiency, and link reliability issues.

4.4.1 Information-theoretic security

Information-theoretic security, which is associated with keyless physical layer security techniques, was initiated by Shannon[87] in 1949 and further strengthened in its theoretical basis by Wyner[88] in 1975 using an information-theoretic approach. In 1978, it was extended to Gaussian channels[89] and broadcast channels[90]. Information-theoretic security exploits differences in channel conditions and interference environments to boost the received signal at the intended receiver while degrading the received signal of unauthorized users. Early works in the field of information-theoretic security mostly measured performance by the secrecy capacity, which is the maximum rate at which the source can communicate reliably with the intended receiver while the eavesdropper obtains no information; for the Gaussian wiretap channel, it equals the difference between the capacities of the main channel and the eavesdropper's channel. A non-zero secrecy capacity can be achieved if the eavesdropper's channel is a degraded version of the main channel. The metrics for information-theoretic security were later extended to the secrecy outage probability, secrecy throughput, fractional-equivocation-based metrics, and BER-based and PER-based metrics[91]. However, due to the time-varying random fading of wireless channels, the secrecy capacity suffers a significant loss, especially when the main channel is in a deep fade. Hence, combined with new radio technologies in 5G and 6G, artificial-noise-aided security techniques and security-oriented beamforming techniques[92] will further enhance the security performance.
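The secrecy capacity and secrecy outage probability can be made concrete with a short numerical sketch. It assumes the standard expression for the Gaussian wiretap channel, C_s = max(0, log2(1 + SNR_main) - log2(1 + SNR_eve)), with linear (not dB) SNR values; the function names and the sample SNR values are illustrative assumptions.

```python
import math


def secrecy_capacity(snr_main: float, snr_eve: float) -> float:
    """Secrecy capacity in bits/s/Hz for a Gaussian wiretap channel.

    Both SNRs are linear ratios. The result is clipped at zero because no
    positive secrecy rate is possible when the eavesdropper's channel is
    at least as good as the main channel.
    """
    return max(0.0, math.log2(1 + snr_main) - math.log2(1 + snr_eve))


def secrecy_outage_probability(snr_main_samples, snr_eve_samples, target_rate):
    """Fraction of channel realizations whose secrecy capacity is below target."""
    pairs = list(zip(snr_main_samples, snr_eve_samples))
    outages = sum(1 for m, e in pairs if secrecy_capacity(m, e) < target_rate)
    return outages / len(pairs)


strong_main = secrecy_capacity(15, 3)  # log2(16) - log2(4) = 2 bits/s/Hz
deep_fade = secrecy_capacity(1, 7)     # main channel worse than eavesdropper -> 0

# Three hypothetical fading realizations of (main SNR, eavesdropper SNR)
outage = secrecy_outage_probability([15, 3, 1], [3, 3, 7], target_rate=1.0)
```

The second call shows the deep-fade effect described above: once the main channel is weaker than the eavesdropper's channel, the secrecy capacity collapses to zero, which is why artificial noise and beamforming are used to restore an advantage for the legitimate receiver.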

4.4.2 Channel-based secret key generation

Channel-based secret key generation is also known as physical layer encryption, in which wireless devices exploit the reciprocity and randomness of wireless fading channels to generate a shared secret key between the source and the destination. Combined with LDPC[93-94] and polar coding[95-96], the generated secret key is applied at the upper layer (i.e., bit level) or the lower physical layer (i.e., symbol level) to protect the entire physical layer packet. The approach is lightweight, adds little complexity, and relies only on the physical characteristics of wireless fading channels. A passive attacker located more than half a wavelength away from the legitimate users will experience independent fading and is unable to infer the generated secret key. A typical channel-based secret key generation scheme includes channel probing, randomness extraction, quantization, reconciliation, and privacy amplification, and is often measured by the key generation rate, the key entropy, and the key disagreement probability[91]. However, such schemes are often limited by the characteristics of the wireless channel, such as the channel coherence time and the channel quality. Furthermore, it is hard to measure the main channel and the eavesdropper's channel in the AIoT era because the amount of channel training is limited and a high-rate feedback channel is ruled out to avoid signaling overhead. Therefore, designing an efficient and provably secure key generation scheme in practice remains challenging.
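The main steps of such a scheme can be sketched with a toy simulation. It assumes a perfectly reciprocal real-valued channel gain observed by both parties with independent Gaussian measurement noise, a one-bit median-threshold quantizer, and SHA-256 as a stand-in for privacy amplification. Reconciliation is omitted, so only the key disagreement rate before reconciliation is reported. All names and parameter values are illustrative assumptions.

```python
import hashlib
import random
import statistics


def probe_channel(n, noise_std, rng):
    """Channel probing: both ends observe the same gains plus independent noise."""
    gains = [rng.gauss(0.0, 1.0) for _ in range(n)]
    alice = [g + rng.gauss(0.0, noise_std) for g in gains]
    bob = [g + rng.gauss(0.0, noise_std) for g in gains]
    return alice, bob


def quantize(samples):
    """One-bit quantization around the median of the local measurements."""
    threshold = statistics.median(samples)
    return [1 if s > threshold else 0 for s in samples]


def key_disagreement_rate(bits_a, bits_b):
    """Fraction of bit positions where the two raw keys differ."""
    return sum(a != b for a, b in zip(bits_a, bits_b)) / len(bits_a)


def privacy_amplification(bits):
    """Compress the raw bits into a fixed-length key (SHA-256 as a stand-in)."""
    return hashlib.sha256(bytes(bits)).hexdigest()


rng = random.Random(0)

# Noiseless probing: perfect reciprocity gives identical raw keys.
a0, b0 = probe_channel(256, 0.0, rng)
kdr_ideal = key_disagreement_rate(quantize(a0), quantize(b0))
same_key = privacy_amplification(quantize(a0)) == privacy_amplification(quantize(b0))

# Noisy probing: measurement noise causes some bit disagreements.
a1, b1 = probe_channel(256, 0.1, rng)
kdr_noisy = key_disagreement_rate(quantize(a1), quantize(b1))
```

In the noisy case the raw keys differ in a small fraction of positions, which is exactly what the reconciliation step must correct before privacy amplification; otherwise a single differing bit would produce completely different final keys.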

5 Conclusions

Current 5G networks mainly solve the problem of information interaction and connectivity, while 6G will further provide "Intelligent connectivity", "Deep connectivity", "Holographic connectivity", and "Ubiquitous connectivity", which can be summarized as "everything follows your heart wherever you are". The Ubiquitous-X architecture of 6G explores more possibilities and opportunities for AIoT at the application level. In 6G, AIoT is not just AI-enabled IoT, but "All in IoT". Meanwhile, AIoT faces various challenges. This paper laid out some technical challenges for AIoT in 6G and presented the major potential technologies. Since AIoT under the 6G network is still in its infancy, many technical challenges must be solved before it can be implemented. Undoubtedly, however, AIoT will enable the 6G network to support the intelligent society.

[1] Katz M, Pirinen P, Posti H. Towards 6G: getting ready for the next decade. Proceedings of the 16th International Symposium on Wireless Communication Systems (ISWCS 2019). Oulu, 2019. 714-718.

[2] Saad W, Bennis M, Chen M Z. A vision of 6G wireless systems: applications, trends, technologies, and open research problems. IEEE Network, 2019, 1-9. DOI:10.1109/MNET.001.1900287

[3] Zhang P, Niu K, Tian H, et al. Technology prospect of 6G mobile communications. Journal on Communications, 2019, 40(1): 141-148. (in Chinese) DOI:10.11959/j.issn.1000-436x.2019022

[4] Zhang Z Q, Xiao Y, Ma Z, et al. 6G wireless networks: vision, requirements, architecture, and key technologies. IEEE Vehicular Technology Magazine, 2019, 14(3): 28-41. DOI:10.1109/MVT.2019.2921208

[5] Zhang P, Zhang J H, Qi Q, et al. Ubiquitous-X: constructing the future 6G networks. SCIENTIA SINICA Informationis. DOI:10.1360/SSI-2020-0068 (in press)

[6] Letaief K B, Chen W, Shi Y M, et al. The roadmap to 6G: AI empowered wireless networks. IEEE Communications Magazine, 2019, 57(8): 84-90. DOI:10.1109/MCOM.2019.1900271

[7] Heath R W. Going toward 6G. IEEE Signal Processing Magazine, 2019, 36(3): 3-4.

[8] Latva-Aho M. Radio access networking challenges towards 2030. Proceedings of the 1st International Telecommunication Union Workshop on Network 2030. New York, 2018.

[9] Kato N, Mao B M, Tang F X, et al. Ten challenges in advancing machine learning technologies toward 6G. IEEE Wireless Communications. DOI:10.1109/MWC.001.1900476 (in press)

[10] Gui G, Liu M, Tang F X, et al. 6G: opening new horizons for integration of comfort, security and intelligence. IEEE Wireless Communications. DOI:10.1109/MWC.001.1900516 (in press)

[11] Ma N, Huang Y Z, Qin X Q. Information and Communications Technology (ICT) and Smart Manufacturing. Beijing: Chemical Industry Press, 2019.

[12] ITU-T. Representative Use Cases and Key Network Requirements for Network 2030. https://www.itu.int/md/T17-SG13-200313-TD-GEN-0391, 2020-02-13.

[13] Zhang J H, Tang P, Yu L, et al. Channel measurements and models for 6G: current status and future outlook. Frontiers of Information Technology & Electronic Engineering, 2020, 21(1): 39-61. DOI:10.1631/FITEE.1900450

[14] Saad W, Bennis M, Chen M Z. A vision of 6G wireless systems: applications, trends, technologies, and open research problems. IEEE Network. DOI:10.1109/MNET.001.1900287 (in press)

[15] Zhang L, Liang Y C, Niyato D. 6G visions: mobile ultra-broadband, super internet-of-things, and artificial intelligence. China Communications, 2019(8): 1-14.

[16] Zhao Y J, Yu G H, Xu H Q. 6G mobile communication networks: vision, challenges, and key technologies. SCIENTIA SINICA Informationis, 2019, 49(8): 963-987. DOI:10.1360/N112019-00033

[17] Shiu Y S, Chang S Y, Wu H C, et al. Physical layer security in wireless networks: a tutorial. IEEE Wireless Communications, 2011, 18(2): 66-74. DOI:10.1109/MWC.2011.5751298

[18] Mahdavifar H, Vardy A. Achieving the secrecy capacity of wiretap channels using polar codes. IEEE Transactions on Information Theory, 2011, 57(10): 6428-6443. DOI:10.1109/TIT.2011.2162275

[19] Liberg O, Sundberg M, Wang E, et al. Cellular Internet of Things: Technologies, Standards, and Performance. London: Academic Press, 2017.

[20] Nieminen J, Gomez C, Isomaki M, et al. Networking solutions for connecting bluetooth low energy enabled machines to the internet of things. IEEE Network, 2014, 28(6): 83-90. DOI:10.1109/MNET.2014.6963809

[21] Raza U, Kulkarni P, Sooriyabandara M. Low power wide area networks: an overview. IEEE Communications Surveys & Tutorials, 2017, 19(2): 855-873. DOI:10.1109/COMST.2017.2652320

[22] Gubbi J, Buyya R, Marusic C, et al. Internet of Things (IoT): a vision, architectural elements, and future directions. Future Generation Computer Systems, 2013, 29(7): 1645-1660. DOI:10.1016/j.future.2013.01.010

[23] Ratasuk R, Vejlgaard B, Mangalvedhe N, et al. NB-IoT system for M2M communication. Proceedings of the 2016 IEEE Wireless Communications and Networking Conference Workshops. Piscataway: IEEE, 2016. 1-5. DOI:10.1109/WCNCW.2016.7552737

[24] Liu M, Song T C, Gui G. Deep cognitive perspective: resource allocation for NOMA-based heterogeneous IoT with imperfect SIC. IEEE Internet of Things Journal, 2019, 6(2): 2885-2894. DOI:10.1109/JIOT.2018.2876152

[25] Ye N, Li X M, Yu H X, et al. Deep learning aided grant-free NOMA toward reliable low-latency access in tactile Internet of Things. IEEE Transactions on Industrial Informatics, 2019, 15(5): 2995-3005. DOI:10.1109/TII.2019.2895086

[26] Goudos S K, Deruyck M, Plets D, et al. A novel design approach for 5G massive MIMO and NB-IoT green networks using a hybrid jaya-differential evolution algorithm. IEEE Access, 2019(7): 105687-105700. DOI:10.1109/ACCESS.2019.2932042

[27] Li T, Yuan J, Torlak M. Network throughput optimization for random access narrowband cognitive radio Internet of Things (NB-CR-IoT). IEEE Internet of Things Journal, 2018, 5(3): 1436-1448. DOI:10.1109/JIOT.2017.2789217

[28] Bera S, Misra S, Roy S K, et al. Soft-WSN: software-defined WSN management system for IoT applications. IEEE Systems Journal, 2018, 12(3): 2074-2081. DOI:10.1109/JSYST.2016.2615761

[29] Ojo M, Davide A, Stefano G. A SDN-IoT architecture with NFV implementation. Proceedings of the 2016 IEEE Globecom Workshops (GC Wkshps). Piscataway: IEEE, 2016. 1-6. DOI:10.1109/GLOCOMW.2016.7848825

[30] Mao Y Y, You C S, Zhang J, et al. A survey on mobile edge computing: the communication perspective. IEEE Communications Surveys & Tutorials, 2017, 19(4): 2322-2358. DOI:10.1109/COMST.2017.2745201

| [31] |

Ren J, Zhang Y X, Zhang K, et al. Exploiting mobile crowdsourcing for pervasive cloud services: challenges and solutions. IEEE Communications Magazine, 2015, 53(3): 98-105. DOI:10.1109/MCOM.2015.7060488 (  0) 0) |

| [32] |

Shafi M, Molisch A F, Smith P J, et al. 5G: a tutorial overview of standards, trials, challenges, deployment and practice. IEEE Journal on Selected Areas in Communications, 2017, 35(6): 1201-1221. DOI:10.1109/JSAC.2017.2692307 (  0) 0) |

| [33] |

Cisco. Cisco (2018) Cisco Global Cloud Index: Forecast and Methodology, 2016-2021, White Paper. https://www.cisco.com/c/en/us/solutions/collateral/service-provider/global-cloud-index-gci/white-paper-c11-738085.html, 2020-05-21.

(  0) 0) |

| [34] |

Mukherjee M, Shu L, Wang D. Survey of fog computing: fundamental, network applications, and research challenges. IEEE Communications surveys & tutorials, 2018, 20(3): 1826-1857. DOI:10.1109/COMST.2018.2814571 (  0) 0) |

| [35] |

Zhang P, Liu J K, Yu F R, et al. A survey on access control in fog computing. IEEE Communications Magazine, 2018, 56(2): 144-149. DOI:10.1109/MCOM.2018.1700333

| [36] |

Abbas N, Zhang Y, Taherkordi A, et al. Mobile edge computing: a survey. IEEE Internet of Things Journal, 2017, 5(1): 450-465. DOI:10.1109/JIOT.2017.2750180

| [37] |

Taleb T, Samdanis K, Mada B, et al. On multi-access edge computing: a survey of the emerging 5G network edge architecture and orchestration. IEEE Communications Surveys & Tutorials, 2017, 19(3): 1657-1681. DOI:10.1109/COMST.2017.2705720

| [38] |

Porambage P, Okwuibe J, Liyanage M, et al. Survey on multi-access edge computing for Internet of Things realization. IEEE Communications Surveys & Tutorials, 2018, 20(4): 2961-2991. DOI:10.1109/COMST.2018.2849509

| [39] |

Zhang J, Letaief K B. Mobile edge intelligence and computing for the Internet of vehicles. Proceedings of the IEEE, 2020, 108(2): 246-261. DOI:10.1109/JPROC.2019.2947490

| [40] |

Mach P, Becvar Z. Mobile edge computing: a survey on architecture and computation offloading. IEEE Communications Surveys & Tutorials, 2017, 19(3): 1628-1656. DOI:10.1109/COMST.2017.2682318

| [41] |

International Telecommunication Union-Telecommunication Standardization Sector (ITU-T). Security architecture for systems providing end-to-end communications. International Telecommunication Union, Tech. Rep. X.805, 2003.

| [42] |

Zhang P N, Liu Y N, Wu F, et al. Low-overhead and high-precision prediction model for content-based sensor search in the Internet of Things. IEEE Communications Letters, 2016, 20(4): 720-723. DOI:10.1109/LCOMM.2016.2521735

| [43] |

Kaur N, Sood S K. An energy-efficient architecture for the Internet of Things (IoT). IEEE Systems Journal, 2017, 11(2): 796-805. DOI:10.1109/JSYST.2015.2469676

| [44] |

Li P, Chen Z K, Yang L T, et al. Deep convolutional computation model for feature learning on big data in Internet of Things. IEEE Transactions on Industrial Informatics, 2017, 14(2): 790-798. DOI:10.1109/TII.2017.2739340

| [45] |

Chen T Y, Barbarossa S, Wang X, et al. Learning and management for Internet of Things: accounting for adaptivity and scalability. Proceedings of the IEEE, 2019, 107(4): 778-796. DOI:10.1109/JPROC.2019.2896243

| [46] |

Anisha G, Christie R, Manjula P R. Scalability in Internet of Things: features, techniques and research challenges. International Journal of Computational Intelligence Research, 2017, 13(7): 1617-1627.

| [47] |

Hassan T, Aslam S, Jang J W. Fully automated multi-resolution channels and multithreaded spectrum allocation protocol for IoT based sensor nets. IEEE Access, 2018, 6: 22545-22556. DOI:10.1109/ACCESS.2018.2829078

| [48] |

Hu Y C, Patel M, Sabella D, et al. Mobile edge computing: a key technology towards 5G. ETSI White Paper, 2015, 11: 1-16.

| [49] |

ETSI. ETSI GS MEC 003 V1.1.1. Mobile edge computing (MEC): framework and reference architecture. Nice: ETSI, 2016.

| [50] |

Zhou Y Q, Tian L, Liu L, et al. Fog computing enabled future mobile communication networks: a convergence of communication and computing. IEEE Communications Magazine, 2019, 57(5): 20-27. DOI:10.1109/MCOM.2019.1800235

| [51] |

Zhou Y Q, Li G J. Convergence of communication and computing in future mobile communication systems. Telecommunications Science, 2018, 3: 1-7. DOI:10.11959/j.issn.1000-0801.2018120

| [52] |

ur Rehman Khan A, Othman M, Madani S A, et al. A survey of mobile cloud computing application models. IEEE Communications Surveys & Tutorials, 2014, 16(1): 393-413. DOI:10.1109/SURV.2013.062613.00160

| [53] |

Tao Y Z, You C S, Zhang P, et al. Stochastic control of computation offloading to a helper with a dynamically loaded CPU. IEEE Transactions on Wireless Communications, 2019, 18(2): 1247-1262. DOI:10.1109/TWC.2018.2890653

| [54] |

Mao Y Y, Zhang J, Letaief K B. Dynamic computation offloading for mobile-edge computing with energy harvesting devices. IEEE Journal on Selected Areas in Communications, 2016, 34(12): 3590-3605. DOI:10.1109/JSAC.2016.2611964

| [55] |

Wang Y T, Sheng M, Wang X J, et al. Mobile-edge computing: partial computation offloading using dynamic voltage scaling. IEEE Transactions on Communications, 2016, 64(10): 4268-4282. DOI:10.1109/TCOMM.2016.2599530

| [56] |

Lyu X C, Ni W, Tian H, et al. Distributed online optimization of fog computing for selfish devices with out-of-date information. IEEE Transactions on Wireless Communications, 2018, 17(11): 7704-7717. DOI:10.1109/TWC.2018.2869764

| [57] |

Liu Y M, Yu F R, Li X, et al. Distributed resource allocation and computation offloading in fog and cloud networks with non-orthogonal multiple access. IEEE Transactions on Vehicular Technology, 2018, 67(12): 12137-12151. DOI:10.1109/TVT.2018.2872912

| [58] |

Sun Y X, Guo X Y, Song J H, et al. Adaptive learning-based task offloading for vehicular edge computing systems. IEEE Transactions on Vehicular Technology, 2019, 68(4): 3061-3074. DOI:10.1109/TVT.2019.2895593

| [59] |

Liu L, Qin X Q, Zhang Z, et al. Joint task offloading and resource allocation for obtaining fresh status updates in multi-device MEC systems. IEEE Access, 2020, 8: 38248-38261. DOI:10.1109/ACCESS.2020.2976048

| [60] |

Gamatie A, Devic G, Sassatelli G, et al. Towards energy-efficient heterogeneous multicore architectures for edge computing. IEEE Access, 2019, 7: 49474-49491. DOI:10.1109/ACCESS.2019.2910932

| [61] |

Wang C, He Y, Yu F R, et al. Integration of networking, caching, and computing in wireless systems: a survey, some research issues, and challenges. IEEE Communications Surveys & Tutorials, 2018, 20(1): 7-38. DOI:10.1109/COMST.2017.2758763

| [62] |

Liu L, Zhou Y, Yuan J, et al. Economically optimal MS association for multimedia content delivery in cache-enabled heterogeneous cloud radio access networks. IEEE Journal on Selected Areas in Communications, 2019, 37(7): 1584-1593. DOI:10.1109/JSAC.2019.2916280

| [63] |

Wang X F, Wang C Y, Li X H, et al. Federated deep reinforcement learning for Internet of Things with decentralized cooperative edge caching. IEEE Internet of Things Journal. DOI:10.1109/JIOT.2020.2986803 (in press)

| [64] |

Wei H, Luo H, Sun Y, et al. Cache-aware computation offloading in IoT systems. IEEE Systems Journal, 2020, 14(1): 61-72. DOI:10.1109/JSYST.2019.2903293

| [65] |

Xu F M, Yang F, Bao S J, et al. DQN inspired joint computing and caching resource allocation approach for software defined information-centric Internet of Things network. IEEE Access, 2019, 7: 61987-61996. DOI:10.1109/ACCESS.2019.2916178

| [66] |

Hao Y X, Miao Y M, Hu L, et al. Smart-edge-CoCaCo: AI-enabled smart edge with joint computation, caching, and communication in heterogeneous IoT. IEEE Network, 2019, 33(2): 58-64. DOI:10.1109/MNET.2019.1800235

| [67] |

Gao W C, Hatcher W G, Yu W. A survey of blockchain: techniques, applications, and challenges. Proceedings of the 2018 27th International Conference on Computer Communication and Networks (ICCCN). Piscataway: IEEE, 2018. 1-11. DOI:10.1109/ICCCN.2018.8487348

| [68] |

Nakamoto S. Bitcoin: A Peer-to-Peer Electronic Cash System. http://bitcoin.org/bitcoin.pdf, 2020-03-24.

| [69] |

Wu M L, Wang K, Cai X Q, et al. A comprehensive survey of blockchain: from theory to IoT applications and beyond. IEEE Internet of Things Journal, 2019, 6(5): 8114-8154. DOI:10.1109/JIOT.2019.2922538

| [70] |

Xie L X, Ding Y, Yang H Y, et al. Blockchain-based secure and trustworthy Internet of Things in SDN-enabled 5G-VANETs. IEEE Access, 2019, 7: 56656-56666. DOI:10.1109/ACCESS.2019.2913682

| [71] |

Wan J F, Li J P, Imran M, et al. A blockchain-based solution for enhancing security and privacy in smart factory. IEEE Transactions on Industrial Informatics, 2019, 15(6): 3652-3660. DOI:10.1109/TII.2019.2894573

| [72] |

Yu Y, Li Y N, Tian J F, et al. Blockchain-based solutions to security and privacy issues in the Internet of Things. IEEE Wireless Communications, 2018, 25(6): 12-18. DOI:10.1109/MWC.2017.1800116

| [73] |

Gai K K, Wu Y L, Zhu L H, et al. Permissioned blockchain and edge computing empowered privacy-preserving smart grid networks. IEEE Internet of Things Journal, 2019, 6(5): 7992-8004. DOI:10.1109/JIOT.2019.2904303

| [74] |

Yin H, Jiang Y, Lin C, et al. Big data: transforming the design philosophy of future Internet. IEEE Network, 2014, 28(4): 14-19. DOI:10.1109/MNET.2014.6863126

| [75] |

Chen M, Yang J, Zhou J H, et al. 5G-smart diabetes: toward personalized diabetes diagnosis with healthcare big data clouds. IEEE Communications Magazine, 2018, 56(4): 16-23. DOI:10.1109/MCOM.2018.1700788

| [76] |

Zhou P, Zhong G H, Hu M L, et al. Privacy-preserving and residential context-aware online learning for IoT-enabled energy saving with big data support in smart home environment. IEEE Internet of Things Journal, 2019, 6(5): 7450-7468. DOI:10.1109/JIOT.2019.2903341

| [77] |

Moreno M V, Terroso-Sáenz F, González-Vidal A, et al. Applicability of big data techniques to smart cities deployments. IEEE Transactions on Industrial Informatics, 2017, 13(2): 800-809. DOI:10.1109/TII.2016.2605581

| [78] |

Zhao J J, Qu Q, Zhang F, et al. Spatio-temporal analysis of passenger travel patterns in massive smart card data. IEEE Transactions on Intelligent Transportation Systems, 2017, 18(11): 3135-3146. DOI:10.1109/TITS.2017.2679179

| [79] |

Samie F, Bauer L, Henkel J. From cloud down to things: an overview of machine learning in Internet of Things. IEEE Internet of Things Journal, 2019, 6(3): 4921-4934. DOI:10.1109/JIOT.2019.2893866

| [80] |

Park T, Saad W. Distributed learning for low latency machine type communication in a massive Internet of Things. IEEE Internet of Things Journal, 2019, 6(3): 5562-5576. DOI:10.1109/JIOT.2019.2903832

| [81] |

Zhu J, Song Y H, Jiang D D, et al. A new deep-Q-learning-based transmission scheduling mechanism for the cognitive Internet of Things. IEEE Internet of Things Journal, 2018, 5(4): 2375-2385. DOI:10.1109/JIOT.2017.2759728

| [82] |

Liu Y M, Yu F R, Li X, et al. Blockchain and machine learning for communications and networking systems. IEEE Communications Surveys & Tutorials. DOI:10.1109/COMST.2020.2975911 (in press)

| [83] |

Kapetanovic D, Zheng G, Rusek F. Physical layer security for massive MIMO: an overview on passive eavesdropping and active attacks. IEEE Communications Magazine, 2015, 53(6): 21-27. DOI:10.1109/MCOM.2015.7120012

| [84] |

Ju Y, Wang H M, Zheng T X, et al. Secure transmissions in millimeter wave systems. IEEE Transactions on Communications, 2017, 65(5): 2114-2127. DOI:10.1109/TCOMM.2017.2672661

| [85] |

Han S J, Xu X D, Fang S D, et al. Energy efficient secure computation offloading in NOMA-based mMTC networks for IoT. IEEE Internet of Things Journal, 2019, 6(3): 5674-5690. DOI:10.1109/JIOT.2019.2904741

| [86] |

Han S J, Xu X D, Tao X F, et al. Joint power and sub-channel allocation for secure transmission in NOMA-based mMTC networks. IEEE Systems Journal, 2019, 13(3): 2476-2487. DOI:10.1109/JSYST.2018.2890039

| [87] |

Shannon C E. Communication theory of secrecy systems. The Bell System Technical Journal, 1949, 28(4): 656-715. DOI:10.1002/j.1538-7305.1949.tb00928.x

| [88] |

Wyner A D. The wire-tap channel. The Bell System Technical Journal, 1975, 54(8): 1355-1387. DOI:10.1002/j.1538-7305.1975.tb02040.x

| [89] |

Leung-Yan-Cheong S, Hellman M. The Gaussian wire-tap channel. IEEE Transactions on Information Theory, 1978, 24(4): 451-456. DOI:10.1109/TIT.1978.1055917

| [90] |

Csiszár I, Körner J. Broadcast channels with confidential messages. IEEE Transactions on Information Theory, 1978, 24(3): 339-348. DOI:10.1109/TIT.1978.1055892

| [91] |

Hamamreh J M, Furqan H M, Arslan H. Classifications and applications of physical layer security techniques for confidentiality: a comprehensive survey. IEEE Communications Surveys & Tutorials, 2019, 21(2): 1773-1828. DOI:10.1109/COMST.2018.2878035

| [92] |

Zou Y L, Zhu J, Wang X B, et al. A survey on wireless security: technical challenges, recent advances, and future trends. Proceedings of the IEEE, 2016, 104(9): 1727-1765. DOI:10.1109/JPROC.2016.2558521

| [93] |

Thangaraj A, Dihidar S, Calderbank A R, et al. Applications of LDPC codes to the wiretap channel. IEEE Transactions on Information Theory, 2007, 53(8): 2933-2945. DOI:10.1109/TIT.2007.901143

| [94] |

Klinc D, Ha J, McLaughlin S W, et al. LDPC codes for the Gaussian wiretap channel. IEEE Transactions on Information Forensics and Security, 2011, 6(3): 532-540. DOI:10.1109/TIFS.2011.2134093

| [95] |

Wang H W, Tao X F, Li N, et al. Polar coding for the wiretap channel with shared key. IEEE Transactions on Information Forensics and Security, 2017, 13(6): 1351-1360. DOI:10.1109/TIFS.2017.2774499

| [96] |

Chou R A, Bloch M R. Polar coding for the broadcast channel with confidential messages: a random binning analogy. IEEE Transactions on Information Theory, 2016, 62(5): 2410-2429. DOI:10.1109/TIT.2016.2539145

2020, Vol. 27
