2. People's Liberation Army Air Force 93756, Tianjin 300131, China

In wireless communication system, antenna is usually adopted to receive radio waves and can transfer information between locations without any equipment in the middle. Antenna array pattern synthesis is the technology that the characteristics of antennas are changed to meet the requirements of the antenna by using multiple optimization algorithms, which plays a vital role in the fields of traditional antenna and smart antenna. In the research of pattern synthesis of homogeneous array, numerous algorithms for low sidelobe pattern synthesis in homogeneous linear array have been proposed by Dolph[1], Taylor[2] and other scholars. In the same research area, different kinds of self-adaptive algorithms were proposed, such as LMS[3], SMI[4], RLS[5] and so on. However, there is an obligation of sufficient homogeneous array elements to achieve the requirements of antenna in these studies, which significantly increases the complexity and the cost of equipment.

In the design of antenna array, the method of sparse array has been utilized to construct an antenna array of low-gain and strong-directivity to meet the requirements of antenna with fewer elements and substantially reduce production cost[6-9].

As early as the 1960s, some researchers carried out considerable research on the problem of non-uniform arrays and sparse arrays. Harrington[10] obtained a lower sidelobe level than a uniform array of the same array elements by optimizing the element position. Andreasen[11]demonstrated the relation of sidelobe level with the number of array elements and the average array spacing. Later, Steinberg[12] made a summary of random arrays and aperiodic arrays in his work. Domestically, Zhang Yuhong[13] of Xidian University deduced an optimal array element distribution equation based on the work of Ishimaru. The equation comprehensively analyzes the sidelobe level and the estimation problem of the exponentially spaced array over the entire viewing area, and the lower limit of the peak sidelobe level of the sparse array and the estimation formula of beam width is theoretically derived.

New methods have sprung up over the years. In 2008, based on matrix pencil method (MPM), Liu proposed a sparse array synthesis method and applied it for one-dimensional linear array synthesis[14]. Since 2011, Prisco[15]of the Italy ELEDIA research center and Fuchs[16]of Ryan University in France drew on the latest research results of the theory of compressive sensing (CS) and sparse reconstruction in the field of signal processing to propose a new method for comprehensive sparse array. The method transforms the array synthesis problem into the sparse signal recovery problem under linear constraint and uses the sparse reconstruction algorithm to quickly gain the maximum sparse array layout. Compared with traditional methods, the method is fast in speed and can intelligently determine the minimum number of elements to satisfy the array characteristics. Moreover, the method is not limited by the topological structure of the array and is applicable to linear, planar, and conformal arrays.

On the basis of the previous research, the pattern synthesis technology based on the sparse perception theory is discussed in this paper. By further improving the compressed sensing reconstruction algorithm and the low rank matrix recovery (LRMR) algorithm, a more effective recovery algorithm was obtained and applied to the synthesis of sparse array pattern. Finally, with less sparse array elements, the desired pattern effect was achieved, which was beneficial for the miniaturization and economy of the equipment.

2 MethodThis paper considers an improved compressed sensing reconstruction method andapplies the LRMR technique to sparse array pattern synthesis. The two methods are described below:

(1) Improved Orthogonal Matching Pursuit (OMP) Algorithm.

Matching tracking (MP)algorithm is a commonly used algorithm for signal sparse decomposition, and OMP is an improved algorithm for matching tracking algorithm, which selects the best atom. Similar to MP, OMP can find the atom that best matches the signal to be decomposed from the over-complete library. The difference is that OMP needs to use the Gram-Schmidt orthogonalization method for the selected atom. The signal is then projected onto the space formed by these orthogonal bases. The signal component and the residual component can be obtained, and the residual component is decomposed in the same way.

In this paper, the operating principle of the OMP algorithm is analyzed in detail. An improved OMP algorithm, Orthogonal Multi-Matching Pursuit (OMMP) algorithm, is proposed by introducing multi-matching principle. For OMMP, let Λ be the index set formed by measuring the column vectors in the matrix Φ, and then Λk is the index set composed of the atoms selected after k iterations. In the iterative process, the new atom comes from two stages of calculation, and the correlation of the residual with the remaining atoms comes from the first stage to calculate the inner product of the current residual and the remaining atoms.

The specific steps of the improved algorithm are shown in Table 1.

| Table 1 Steps of OMMP algorithm |

According to the description of the basic steps of the algorithm, it can be found that the selection method of the new algorithm atom was changed due to the introduction of the multi-matching principle. Since the correlation was large in two successive iterations, the selected atom was more robust and faster for selection than that of the OMP algorithm.

(2) Low Rank Matrix Recovery(LRMR) Technique.

When the original matrix is low rank, LRMR can automatically identify the damaged element and recover the original matrix. Therefore, the algorithm is also called Low Rank and Sparse Matrix Decomposition (LRSMD) or Robust Principal Component Analysis (RPCA). n represents the number of the iteration steps[17].

Assume that matrix D is composed of low rank A and noise matrix E, where E is a sparse matrix. In other words, the non-zero elements in the matrix E is few. Then the problem can be resolved by the LRMR technique, which is described by the optimization problem as follows:

| $ {\rm{min}}\;{\rm{rank}}\left( \mathit{\boldsymbol{A}} \right) + \lambda \parallel \mathit{\boldsymbol{E}}{\parallel _0}{\rm{s}}.{\rm{t}}.\mathit{\boldsymbol{A}} + \mathit{\boldsymbol{E}} = \mathit{\boldsymbol{D}} $ | (1) |

where ‖E‖0 is the l0 norm which represents the number of non-zero elements in the matrix. Since Eq.(1) is an NP-hard problem with a large amount of calculation, it is necessary to find a suitable method to solve the optimization problem. Candès[17] proved that the solution obtained by l1 norm (the sum of absolute values of elements in the matrix) minimization is very close to the solution of l0 norm minimization, so the l0 norm minimization problem can be relaxed to the l1norm minimization problem. Function rank() in Eq. (1) is a non-convex and discontinuous function, whose value is the l0 norm of singular value, and the l1 norm of singular value is the nuclear norm, so the function rank() of the matrix can be approximated to the nuclear norm as

| $ {\rm{min}}\parallel \mathit{\boldsymbol{A}}\parallel {\;_*} + \mathit{\lambda }\parallel \mathit{\boldsymbol{E}}\parallel {\;_1}\;{\rm{s}}.{\rm{t}}.\mathit{\boldsymbol{A}} + \mathit{\boldsymbol{E}} = \mathit{\boldsymbol{D}} $ | (2) |

where

The low rank matrix restoration algorithm contains three classical algorithms, i.e., ALM, EALM, and IALM, where EALM algorithm has high accuracy, good sparsity, but long operation time. The reason for the long operation time of the EALM algorithm is that in each iteration, it takes too much time in the internal cycle to obtain the approximate value of the low rank matrix A and the noise matrix E. Therefore, in each iteration of the improved Semi-Exact Augmented Lagrange Multiplier algorithm (SEALM), the maximum iteration number of the internal cycle of A and E was established. When the number of iterations exceeds a certain range, the inner loop will jump out into the next iteration process.

The steps of the algorithm for SEALM are as follows:

Step 1 Y0*, E0*=0, μ0, k=0, NUM=C, where C is a constant

Step 2 while not converged do

Step 3

Step 4 while not converged do

Step 5 if j > NUM, break

Step 6

Step 7

Step 8

Step 9 j=j+1

Step 10 end while

Step 11

Step 12 k=k+1

Step 13 end while

According to the above steps, the main difference between the SEALM algorithm and the EALM algorithm lies in the fact that the EALM algorithm continuously iterates in the inner loop each time until A and E meet the accuracy requirements. However, the SEALM algorithm has a maximum number of iterations for each inner loop that whether or not A and E meet the accuracy requirements, the inner loop will jump out of the loop after a certain number of iterations. Therefore, in the computation of A and E, SEALM algorithm satisfies the accuracy requirements of A and E to a certain extent and can significantly improve the speed of the program operation. In the SEALM algorithm, through experimental analysis, it can be found that when ρ and NUM are 2 and 600, the algorithm has a better balance between recovery precision and running time.

3 Simulation ExperimentIn this section, a typical array synthesis example is selected for simulation analysis and compared with other existing integrated methods. In order to objectively analyze the combined results, the matching error ε is defined as

| $ \varepsilon = \frac{{\int\limits_{ - 90^\circ }^{90^\circ } | {F_d}\left( \theta \right) - \sum\limits_{n = 1}^N {{\omega _n}{\rm{exp}}\left( {2{\rm{ \mathsf{ π} }}i{d_n}{\rm{sin}}\theta /\lambda } \right){|^2}{\rm{d}}\theta } }}{{\int\limits_{ - 90^\circ }^{90^\circ } | {F_d}\left( \theta \right){|^2}{\rm{d}}\theta }} $ | (3) |

Results of the numerical simulation examples in this section took the average of 100 trials. The simulation experiment environment of the example is Lenovo G460, Intel(R) Core(TM) i3-380M 2.53 GHz, 2.00 GB, Windows 7, Matlab 2014.

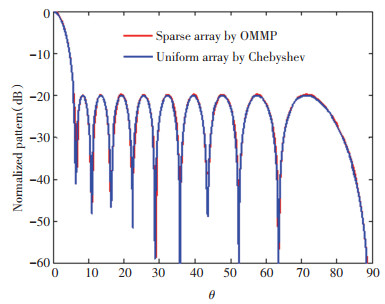

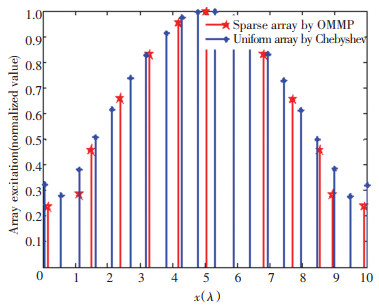

Example 1: Chebyshev pattern synthesis.

First, it is required to synthesize a thin-line linear array and implement a Chebyshev pattern with a sidelobe level of -20 dB using as few array elements as possible. The desired pattern was obtained by a Chebyshev uniform array of 20 array elements, the array aperture was 9.5λ, and all array elements were non-directional point sources. The array synthesis was performed by the proposed OMMP method. Fig. 1 and Fig. 2 show the results of the final synthesis. Table 2 reveals the comparison between the results obtained in this paper and those in previous studies. By comparing with the algorithms such as CVX[21] and FOCUSS[22], it can be found that the matching error between the final integrated pattern and the expected pattern of the OMMP method was the smallest, and the time spent was less than those of the CVX and the FOCUSS algorithms.

|

Fig.1 Comparison of the pattern obtained by OMMP with the expected pattern |

|

Fig.2 Element distribution of OMMP synthesizing Chebyshev pattern |

| Table 2 Comparison of OMMP and other algorithms in synthesizing Chebyshev pattern |

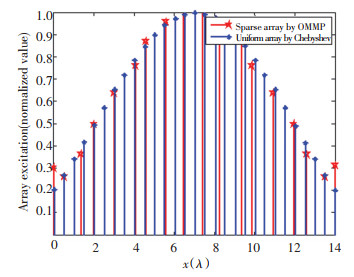

Example 2: Taylor pattern synthesis.

Next, it is required to synthesize a thin-line linear array to realize a 29-yuan Taylor pattern with a sidelobe level of -25 dB using as few array elements as possible. Fig. 3 and Fig. 4 show the results of array synthesis using the proposed method. Table 3 illustrates the comparison between the results obtained by the method proposed in this paper and those in previous literature. The research in Ref. [21] used the CVX method to optimize and successfully combined an 18-element thin cloth array with a matching error of ε=2.43×10-6. In Ref. [22], the FOCUSS method was adopted and an 18-element array with matching error of ε=6.7×10-5 was successfully synthesized.

|

Fig.3 Comparison of the pattern synthesized by OMMP with the expected Taylor pattern |

|

Fig.4 Element distribution of OMMP synthesizing Taylor pattern |

| Table 3 Comparison of OMMP and other algorithms for synthesizing Taylor pattern |

By comparison, the OMMP method ultimately gained 18 array elements, but the matching accuracy with the desired pattern was the highest with the least operation time.

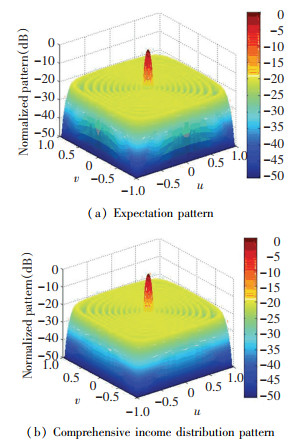

Example 3: Chebyshev plane array synthesis.

Assume that the desired pattern is produced by an inseparable Chebyshev plane array. The array aperture is 5.5λ×5.5λ, the array element spacing is half wavelength, which is uniformly distributed on the rectangular grid, and the peak sidelobe level is -20 dB. In Ref. [21], CVX algorithm was successfully used to reconstruct the desired pattern with 96 array elements, and the matching error is 3.65×10-3. The SEALM method is used in this paper for synthesis and compared with that in Ref. [21]. In the process of turning this comprehensive problem into a sparse signal recovery problem, a completely consistent strategy was adopted. That is, the symmetry of the array was fully considered. Only a virtual uniform array was constructed in the first quadrant, and the sampling point of the desired pattern was taken as 10×20. The reconstruction was then performed by the SEALM method. Simulation results show that the synthetic sparse array finally succeeded in reconstructing the desired pattern with 92 array elements, and the matching error was 8.1 s. Compared with CVX algorithm, the SEALM-based pattern synthesis method could achieve the desired pattern with fewer array elements and better matching accuracy. The combined results are shown in Fig. 5 and Fig. 6.

|

Fig.5 Comparison of the three-dimensional pattern of Example 3 |

|

Fig.6 Final optimization of the planar array for Example 3 |

4 Conclusions

Aiming at the problems in antenna design, the perception matrix was constructed by setting virtual uniform spaced array, and the measurement vector was obtained by low-dimensional sampling of the desired pattern. The problem of pattern synthesis was transformed into the problem of sparse signal recovery. An improved OMP algorithm and an improved EALM algorithm were introduced to recover sparse signals. Finally, the radiation pattern which is very close to the original uniform array could be obtained by saving 20%-30% of the array elements. The paper provides a new method for the miniaturization and economy of array antenna.

| [1] |

Dolph C L. A current distribution for broadside arrays which optimizes the relationship between beamwidth and side-lobe level. Proceedings of the IRE, 1946, 34(6): 335-348. DOI:10.1109/JRPROC.1946.225956 (  0) 0) |

| [2] |

Taylor T T. Design of line-source antennas for narrow beamwidth and low side lobes. Antennas andPropagation, Transactions of the IRE Professional Group on, 1955, 3(1): 16-28. DOI:10.1109/TPGAP.1955.5720407 (  0) 0) |

| [3] |

Shi Qingyan, Zhong Lunlong, Wu Renbiao. Adaptive interference suppression method of smart antenna based on ls-lms. Signal processing, 2010, 26(5): 677-681. (in Chinese) (  0) 0) |

| [4] |

Shubair R, Jimaa S A, Omar A A. Enhanced adaptive beamforming using LMMN algorithm with SMI initialization. Proceedings of 2009 Antennas and Propagation Society International Symposium. APSURSI '09. Piscataway: IEEE, 2009. DOI:10.1109/APS.2009.5171469 (  0) 0) |

| [5] |

Deng Chengwang, Tang bin, Xu Jing. Active correction of antenna array based on RLS algorithm in CDMA mobile communication system. Signal Processing, 2005(6): 681-683. (in Chinese) (  0) 0) |

| [6] |

Cen L, Ser W, Yu Z L, et al. Linear sparse array synthesis with minimum number of sensors. IEEE Transactions on Antennas and Propagation, 2010, 58(3): 720-726. DOI:10.1109/TAP.2009.2039292 (  0) 0) |

| [7] |

Unz H. Linear arrays with arbitrarily distributed elements. IRE Transactions on Antennas and Propagation, 1960, 8(2): 222-223. DOI:10.1109/TAP.1960.1144829 (  0) 0) |

| [8] |

Lo Y, Lee S. A study of space-tapered arrays. IEEE Transactions on Antennas and Propagation, 1966, 14(1): 22-30. DOI:10.1109/TAP.1966.1138612 (  0) 0) |

| [9] |

Ishimaru A. Theory of unequally-spaced arrays. IRE Transactions on Antennas and Propagation, 1962, 10(6): 691-702. DOI:10.1109/TAP.1962.1137952 (  0) 0) |

| [10] |

Harrington R. Sidelobe reduction by nonuniform element spacing. IRE Transactions on Antennas and Propagation, 1961, 9(2): 187-192. DOI:10.1109/TAP.1961.1144961 (  0) 0) |

| [11] |

Andreasen M. Linear arrays with variable interelement spacings. IRE Transactions on Antennas and Propagation, 1962, 10(2): 137-143. DOI:10.1109/TAP.1962.1137832 (  0) 0) |

| [12] |

Zhu Q, Steinberg B D. Wavefront amplitude distortion and image sidelobe levels. I. Theory and computer simulations. IEEE Transactions on Ultrasonics Ferroelectrics & Frequency Control, 40(6): 747-753. (  0) 0) |

| [13] |

Zhang Yuhong. Sidelobe level limitation of nonuniform spaced sparse array. Journal of Xi'an University of Electronic Science and Technology, 1992(4): 45-49. (in Chinese) (  0) 0) |

| [14] |

Liu Y H, Nie Z P, Liu Q H. Reducing the number of elements in a linear antenna array by the matrix pencil method. IEEE Transactions on Antennas and Propagation, 2008, 56(9): 2955-2962. DOI:10.1109/TAP.2008.928801 (  0) 0) |

| [15] |

Prisco G, D'Urso M. Maximally sparse arrays via sequential convex optimizations. IEEE Antennas & Wireless Propagation Letters, 2012, 11: 192-195. DOI:10.1109/LAWP.2012.2186626 (  0) 0) |

| [16] |

Fuchs B. Antenna selection for array synthesis problems. IEEE Antennas and Wireless Propagation Letters, 2016, 16: 868-871. DOI:10.1109/LAWP.2016.2612762 (  0) 0) |

| [17] |

Candès E, Romberg J. Sparsity and incoherence in compressive sampling. Inverse Problems, 2007, 23(3): 969-985. (  0) 0) |

| [18] |

Candès E J, Recht B. Exact matrix completion via convex optimization. Foundations of Computational Mathematics, 2009, 9: 717. DOI:10.1007/s10208-009-9045-5 (  0) 0) |

| [19] |

Werner K, Jansson M. Reduced rank linear regression and weighted low rank approximations. IEEE Transactions on Signal Processing, 2006, 54(6): 2063-2075. DOI:10.1109/TSP.2006.873502 (  0) 0) |

| [20] |

Zhang C C, Liu R S, Qiu T S, et al. Robust visual tacking via incremental low-rank features learning. Neurocomputing, 2014, 131(4): 237-247. DOI:10.1016/j.neucom.2013.10.020 (  0) 0) |

| [21] |

Zhao X W. Study of Sparse Array Antenna Synthesis based on Compressed Sensing and Invasive Weed Optimization. Beijing: Chinese Academy of Sciences, 2016. (in Chinese) (  0) 0) |

| [22] |

Yan F. Synthesis of Large Antenna Arrays with Sparse Reconstruction. Chengdu: University of Electronic Science and Technology of China, 2017. (in Chinese) (  0) 0) |

2020, Vol. 27

2020, Vol. 27