Isolating each speech signal from a mixture in a noisy environment is easy for humans but difficult for machines. This is known as the "cocktail party" problem, and solving it is useful in many applications, such as automatic meeting transcription, automatic captioning of audio/video recordings (e.g., on YouTube), applications that need human-machine interaction (e.g., the Internet of Things (IoT)), and advanced hearing aids.

The cocktail party problem was formalized by Cherry in 1953 [1], and many solutions have since been proposed. Most of them try to mimic the human auditory system by filtering or extracting auditory properties from the mixture and then grouping the time-frequency (T-F) bins that belong to the same speaker. These methods belong to Computational Auditory Scene Analysis (CASA) [2], but CASA methods alone have proven insufficient. Another approach to multi-speaker separation is Non-negative Matrix Factorization (NMF) [3], which decomposes the spectrogram matrix into two non-negative matrices (templates and activations); the separated signals can then be approximated from the activations and the non-negative dictionaries. Statistical methods have also been used for speech separation, such as Independent Component Analysis (ICA) [4], which assumes that the speech and interference signals are statistically independent, so that separation can be achieved by maximizing their independence. However, ICA requires a determined or overdetermined setting (at least as many microphones as sources), whereas the cocktail party problem is underdetermined [5].

Deep learning, which has been applied to speech enhancement and separation [6-9] as well as to music separation [10-11], performs significantly better than the other methods. However, deep learning faces two obstacles [12]: a fixed number of outputs and the permutation of sources. A network trained to separate n signals does not work for any other number of sources, hence the fixed number of outputs. The permutation problem arises from the order of the sources at the network outputs: training the network on two different orderings of the same sources increases the training error and leads to convergence problems. Permutation Invariant Training (PIT) [13] solves the permutation problem by computing the error between the separated signals and the targets under every possible assignment and choosing the assignment with the minimum error. However, PIT removes only the permutation obstacle, while the fixed number of outputs remains. Deep clustering (DC) [14] outperforms PIT by addressing both problems. The main idea of DC is to map the input into a new embedding space whose properties make speaker segmentation much easier. Each T-F bin of the spectrogram corresponds to an embedding vector, so the embedding space is dense and represents the T-F bins in a separable way. A clustering algorithm is then applied to the embeddings to obtain each speaker, and because the number of clusters can be chosen freely, the number of outputs can vary. In this way, DC solves the problem of the fixed number of outputs. The loss function of DC is the squared Frobenius norm of the difference between the affinity matrix of the embeddings and that of the target binary masks; since affinity matrices are unaffected by the order of the sources, the permutation problem disappears. The drawback of DC is that it is not an end-to-end system, because mask generation is done separately after the neural network [12].
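
The two training criteria described above can be sketched in NumPy. `pit_mse` evaluates a mean squared error under every assignment of network outputs to targets and keeps the smallest, as PIT does [13]; `dc_loss` evaluates the deep clustering objective $\|VV^{\mathrm{T}}-YY^{\mathrm{T}}\|_F^2$ [14], where $V$ holds one embedding per T-F bin and $Y$ is the one-hot speaker assignment of each bin. The function names, the choice of MSE, and the array layouts are illustrative assumptions, not the implementations of [13] or [14].

```python
import itertools

import numpy as np


def pit_mse(estimates, targets):
    """Permutation invariant MSE.

    estimates, targets: arrays of shape (N, D), one row per source.
    Returns (min_error, perm), where estimate i is paired with target perm[i].
    """
    n = estimates.shape[0]
    best_err, best_perm = np.inf, None
    for perm in itertools.permutations(range(n)):
        err = np.mean((estimates - targets[list(perm)]) ** 2)
        if err < best_err:
            best_err, best_perm = err, perm
    return float(best_err), best_perm


def dc_loss(V, Y):
    """Deep clustering loss ||V V^T - Y Y^T||_F^2.

    V: (TF, K) embeddings, one row per T-F bin.
    Y: (TF, N) one-hot speaker assignment of each T-F bin.
    The expansion below avoids building the TF x TF affinity matrices.
    """
    return float(
        np.sum((V.T @ V) ** 2)
        - 2.0 * np.sum((V.T @ Y) ** 2)
        + np.sum((Y.T @ Y) ** 2)
    )
```

Reordering the columns of `Y` leaves `dc_loss` unchanged, which is why DC needs no explicit permutation search, whereas the cost of the PIT search grows as N!.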
The Deep Attractor Network (DANet) [12] builds on DC: after the embeddings are extracted, a central point of each speaker's embeddings, called an "attractor", is formed. The mask for each speaker is then generated from the distance between each embedding vector and the attractors. DANet is trained on a reconstruction error between the reconstructed and ideal signals, which makes it an end-to-end system. The disadvantage of DANet is its complexity: it takes a long time to train and a relatively long time to estimate the masks. The Gated Recurrent Unit (GRU) [15] is a more recent recurrent neural network (RNN) unit that gives better results than LSTM in many cases [16]. In this paper, a new version of DANet is proposed that is less complex and more accurate. The embeddings are created by a bidirectional GRU (BGRU) instead of a bidirectional LSTM (BLSTM), which makes the model less complex, so the network can be trained on an ordinary workstation. In addition, the Gaussian Mixture Model (GMM) clustering algorithm is used instead of k-means to obtain a more accurate model.

The rest of the paper is organized as follows. Section 1 introduces the speech separation problem, Section 2 explains the proposed system, Section 3 discusses the experimental results, and Section 4 concludes the paper.

1 Single Channel Speech Separation Problem

The single-channel speech separation problem is defined as follows:

Estimate the signals $s_i(t)$, $i=1, 2, \ldots, N$, given only the signal $x(t)=\sum_{i=1}^{N} s_i(t)$, the mixture of $N$ speech signals.

To express $x(t)$ in the time-frequency (T-F) domain, the Short-Time Fourier Transform (STFT) is computed, giving

| $ \boldsymbol{X}(f, t)=\sum\limits_{i=1}^{N} \boldsymbol{S}_{i}(f, t) $ | (1) |

where $\boldsymbol{X}$ and $\boldsymbol{S}_{i}$ are the STFTs of $x$ and $s_i$, respectively.

2 The Proposed Model

The proposed method is very similar to DANet [12], except that the hidden layers are BGRU rather than BLSTM, and GMM replaces the k-means algorithm.

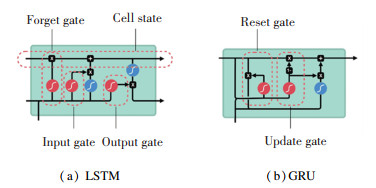

2.1 GRU

Fig. 1 shows the architecture of LSTM and GRU [17].

Fig. 1 Architecture of LSTM and GRU

An RNN uses a hidden state to process a sequence of inputs. There are three categories of RNN:

1) Vanilla RNN: the simplest type of RNN, which suffers from vanishing/exploding gradients during training.

2) LSTM: introduced in 1997, it has three gates (input, forget, and output). It mitigates the vanishing gradient problem, but at the cost of high complexity.

3) GRU: introduced in 2014 [15], it can be viewed as a simplified version of LSTM that replaces the forget and input gates with a single update gate and adds a reset gate.
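
To make the gate structure concrete, here is a minimal NumPy sketch of one GRU time step following the formulation in [15], together with one bidirectional layer of the kind stacked in a BGRU. The parameter names, the small hidden size, and the random initialization are assumptions for illustration only; a practical system would use a framework layer such as PyTorch's `torch.nn.GRU` with `bidirectional=True`.

```python
import numpy as np


def sigmoid(x):
    return 0.5 * (1.0 + np.tanh(0.5 * x))  # numerically stable logistic function


def init_gru(input_dim, hidden_dim, rng, scale=0.1):
    """Hypothetical random parameters for one GRU direction."""
    params = {}
    for g in ("z", "r", "n"):  # update gate, reset gate, candidate state
        params[f"W_{g}"] = rng.normal(scale=scale, size=(hidden_dim, input_dim))
        params[f"U_{g}"] = rng.normal(scale=scale, size=(hidden_dim, hidden_dim))
        params[f"b_{g}"] = np.zeros(hidden_dim)
    return params


def gru_step(x, h_prev, p):
    """One GRU time step in the formulation of Cho et al. [15]."""
    z = sigmoid(p["W_z"] @ x + p["U_z"] @ h_prev + p["b_z"])  # update gate
    r = sigmoid(p["W_r"] @ x + p["U_r"] @ h_prev + p["b_r"])  # reset gate
    n = np.tanh(p["W_n"] @ x + p["U_n"] @ (r * h_prev) + p["b_n"])  # candidate
    # No separate cell state: the new hidden state interpolates old state and candidate.
    return z * h_prev + (1.0 - z) * n


def bgru_layer(xs, fwd, bwd, hidden_dim):
    """Run one bidirectional GRU layer over frames xs of shape (T, input_dim)."""
    h_f, h_b = np.zeros(hidden_dim), np.zeros(hidden_dim)
    out_f, out_b = [], []
    for x in xs:  # left to right
        h_f = gru_step(x, h_f, fwd)
        out_f.append(h_f)
    for x in xs[::-1]:  # right to left
        h_b = gru_step(x, h_b, bwd)
        out_b.append(h_b)
    out_b.reverse()  # realign backward states with time
    return np.concatenate([np.stack(out_f), np.stack(out_b)], axis=1)  # (T, 2*hidden)


rng = np.random.default_rng(0)
frames = rng.normal(size=(5, 129))  # 5 frames of a 129-bin spectrogram
out = bgru_layer(frames, init_gru(129, 8, rng), init_gru(129, 8, rng), 8)
```

Because each new state is a convex combination of the previous state and a tanh candidate, the outputs stay within [-1, 1] when the state starts at zero.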

LSTM has achieved satisfactory results in speech processing tasks, but its complexity remains a problem, since a more complex network needs more data to learn. Recently, GRU has become a strong competitor to LSTM: in some cases the two give nearly the same results, but GRU outperformed the standard LSTM on most of the tasks studied in [16]. One reason given for the superiority of GRU is that it merges the input and forget gates into a single gate and combines the cell state with the hidden state [18].

2.2 GMM

k-means is a special case of GMM with the restriction that it is suitable only when the clusters are spherical. Probably the biggest limitation of k-means is that it implicitly assumes every cluster has the same isotropic covariance matrix (a scaled identity). GMM is more flexible and usually more accurate than k-means. In this paper, each cluster resulting from the GMM has its own general (full) covariance matrix.
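
A GMM in which every component keeps its own full covariance matrix can be fitted with expectation-maximization (EM). The NumPy sketch below is illustrative only, under simplifying assumptions (means initialized at random data points, a fixed number of iterations, no covariance regularization); it is not the authors' implementation, and in practice a library routine such as scikit-learn's `GaussianMixture` with `covariance_type="full"` would normally be used.

```python
import numpy as np


def log_gaussian(X, mean, cov):
    """Log density of N(mean, cov) at every row of X, with a full covariance."""
    d = X.shape[1]
    L = np.linalg.cholesky(cov)  # cov = L L^T
    z = np.linalg.solve(L, (X - mean).T)  # whitened residuals, shape (d, n)
    log_det = 2.0 * np.sum(np.log(np.diag(L)))
    return -0.5 * (d * np.log(2.0 * np.pi) + log_det + np.sum(z ** 2, axis=0))


def fit_gmm(X, n_components, n_iter=50, seed=0):
    """EM for a GMM in which every component has its own full covariance."""
    n = X.shape[0]
    rng = np.random.default_rng(seed)
    means = X[rng.choice(n, n_components, replace=False)]  # random data points
    covs = np.stack([np.cov(X.T)] * n_components)  # start from the data covariance
    weights = np.full(n_components, 1.0 / n_components)
    history = []  # total log-likelihood before each M-step
    for _ in range(n_iter):
        # E-step: log p(x, k) for every point and component, then responsibilities.
        log_joint = np.stack(
            [np.log(weights[k]) + log_gaussian(X, means[k], covs[k])
             for k in range(n_components)],
            axis=1,
        )
        log_px = np.logaddexp.reduce(log_joint, axis=1)
        history.append(float(log_px.sum()))
        resp = np.exp(log_joint - log_px[:, None])
        # M-step: weights, means, and one full covariance per component.
        nk = resp.sum(axis=0)
        weights = nk / n
        means = (resp.T @ X) / nk[:, None]
        covs = np.stack([
            ((resp[:, k, None] * (X - means[k])).T @ (X - means[k])) / nk[k]
            for k in range(n_components)
        ])
    return weights, means, covs, history
```

Each component's covariance is estimated separately, so elongated or tilted clusters are modeled directly instead of being forced into spheres.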

Fig. 2 shows the flow chart of the whole method. The steps can be summarized as preprocessing, training phase, and testing phase.

Fig. 2 The proposed model using BGRU and GMM

2.2.1 Preprocessing

1) Compute the magnitude spectrogram of the mixture with the STFT and flatten it into a vector $\boldsymbol{X} \in \mathbf{R}^{1 \times FT}$, which is the input to the neural network.

2) Build an ideal binary mask for each speaker from a Wiener-filter-like mask (WFM):

| $ \mathrm{WFM}_{i, f t}=\frac{\left|s_{i, f t}\right|^{2}}{\sum\limits_{j=1}^{N}\left|s_{j, f t}\right|^{2}} $ | (2) |

| $ m_{i, f t}=\left\{\begin{array}{ll} 1, & \mathrm{WFM}_{i, f t}>\tau \\ 0, & \text { otherwise } \end{array}\right. $ | (3) |

where $\boldsymbol{m}_{i} \in \mathbf{R}^{1 \times FT}$ stacks the mask values of all T-F bins.

The threshold is set to $\tau=0.5$, so with two speakers each T-F bin is assigned to the dominant speaker. The ideal masks are used only in the training phase.
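
Eqs. (2) and (3) can be computed directly from the source STFTs, as in the NumPy sketch below. The function name, the (N, F, T) array layout, and the small constant `eps` that avoids 0/0 in silent T-F bins are assumptions not stated in the paper.

```python
import numpy as np


def ideal_masks(source_stfts, tau=0.5, eps=1e-12):
    """Wiener-filter-like masks (Eq. 2) and ideal binary masks (Eq. 3).

    source_stfts: complex array of shape (N, F, T), the STFT of each source.
    Returns (wfm, m), both of shape (N, F*T): one flattened row per speaker.
    eps (an assumption, not in the paper) avoids 0/0 in silent T-F bins.
    """
    power = np.abs(source_stfts) ** 2
    wfm = power / (power.sum(axis=0, keepdims=True) + eps)  # Eq. (2)
    m = (wfm > tau).astype(np.float32)  # Eq. (3)
    n = source_stfts.shape[0]
    return wfm.reshape(n, -1), m.reshape(n, -1)
```

Since the WFM values of a bin sum to one over the speakers, at most one speaker can exceed $\tau=0.5$ in any bin.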

2.2.2 Training phase

1) Generate the embedding matrix $\boldsymbol{V}$ using four BGRU layers and a fully connected layer, which map each T-F bin of the magnitude spectrogram to a $K$-dimensional vector:

| $ \boldsymbol{V}=f(\boldsymbol{X}), \quad \boldsymbol{V} \in \bf{R}^{K \times F T} $ | (4) |

The fully connected layer projects the BGRU output into the dense embedding space.

2) Form an attractor $\boldsymbol{a}_{i} \in \mathbf{R}^{1 \times K}$ for each speaker as the mask-weighted average of the embeddings:

| $ \boldsymbol{a}_{i}=\frac{\boldsymbol{m}_{i} \cdot \boldsymbol{V}^{\mathrm{T}}}{\sum\nolimits_{f, t} \boldsymbol{m}_{i}}, i=1,2, \cdots, N $ | (5) |

3) Measure the distance $\boldsymbol{d}_{i}$ between each embedding vector and the attractor $\boldsymbol{a}_{i}$, computed as an inner product:

| $ \boldsymbol{d}_{i}=\boldsymbol{a}_{i} \boldsymbol{V}, i=1,2, \cdots, N $ | (6) |

where $\boldsymbol{d}_{i} \in \mathbf{R}^{1 \times FT}$. The sigmoid function then maps these values to (0, 1), giving the estimated mask of each speaker:

| $ \hat{\boldsymbol{m}}_{i}=\operatorname{sigmoid}\left(\boldsymbol{d}_{i}\right) $ | (7) |

4) Update the network weights by minimizing the reconstruction error, where $\odot$ denotes element-wise multiplication:

| $ L=\frac{1}{N} \sum\limits_{i}\left\|\boldsymbol{X} \odot\left(\boldsymbol{m}_{i}-\hat{\boldsymbol{m}}_{i}\right)\right\|_{2}^{2} $ | (8) |
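
The forward computations of Eqs. (5)-(8) can be written compactly with matrix products, as in this NumPy sketch. It covers only the forward pass given fixed embeddings; during training, the gradient of the loss would flow back into the BGRU network that produced $\boldsymbol{V}$. The function name and the `eps` guard for a speaker with no active bins are assumptions.

```python
import numpy as np


def danet_forward(X, V, m, eps=1e-12):
    """Attractors, estimated masks, and loss following Eqs. (5)-(8).

    X: (1, FT) mixture magnitude, V: (K, FT) embeddings,
    m: (N, FT) ideal binary masks. Forward pass only.
    """
    # Eq. (5): mask-weighted mean embedding of each speaker -> (N, K).
    # eps (an assumption) guards against a speaker with no active bins.
    A = (m @ V.T) / (m.sum(axis=1, keepdims=True) + eps)
    # Eq. (6): inner product of each attractor with every embedding -> (N, FT)
    D = A @ V
    # Eq. (7): sigmoid, written via tanh for numerical stability
    m_hat = 0.5 * (1.0 + np.tanh(0.5 * D))
    # Eq. (8): squared error of the masked magnitudes, averaged over speakers
    loss = np.mean(np.sum((X * (m - m_hat)) ** 2, axis=1))
    return A, m_hat, float(loss)
```

Stacking all attractors in one matrix `A` lets Eq. (6) be evaluated for every speaker with a single product.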

2.2.3 Testing phase

1) Compute the phase of the mixture spectrogram;

2) Generate the embeddings using the previously trained model;

3) Cluster the embedding vectors output by the last layer using GMM;

4) Take the attractors to be the centers (means) of the clusters;

5) Estimate the mask for each speaker by computing the distance $\boldsymbol{d}_{i}$ as in Step 3 of the training phase;

6) Reconstruct the speech signal of each speaker by multiplying the magnitude spectrogram of the mixture by the corresponding estimated mask, and then applying the inverse STFT with the mixture phase computed in Step 1.
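
The masking and reconstruction steps of the testing phase can be sketched as follows, assuming the embeddings come from the trained network and the attractors are the component means of a GMM fitted to them (the GMM fit itself is omitted, so `centers` is simply passed in). The function name and the row-major (F, T) flattening of the embeddings are assumptions. The final inverse STFT is left to a library routine such as `scipy.signal.istft`, called with the same window and overlap as the analysis STFT.

```python
import numpy as np


def separate(mixture_stft, V, centers):
    """Test-phase masking and reconstruction (Steps 1, 5, and 6).

    mixture_stft: complex (F, T) STFT of the mixture.
    V: (K, F*T) embeddings from the trained network (Step 2), assumed to be
       flattened in row-major (F, T) order.
    centers: (N, K) cluster centers used as attractors (Steps 3-4).
    Returns complex (N, F, T) spectrograms, one per speaker.
    """
    F, T = mixture_stft.shape
    magnitude = np.abs(mixture_stft)
    phase = np.angle(mixture_stft)  # Step 1: mixture phase
    D = centers @ V  # Step 5: Eq. (6) for all speakers -> (N, F*T)
    masks = 0.5 * (1.0 + np.tanh(0.5 * D))  # Eq. (7), sigmoid
    masks = masks.reshape(-1, F, T)
    # Step 6: masked magnitude combined with the mixture phase
    return masks * magnitude[None] * np.exp(1j * phase)[None]
```

Because every mask lies in (0, 1), each separated spectrogram has a magnitude no larger than that of the mixture in every T-F bin.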

3 Experimental Results

3.1 Network Architecture

The network is similar to that of DANet but differs in how the embeddings are extracted and in the clustering algorithm. The dimension of the input feature is 129. The optimizer is Adam with a learning rate starting at $10^{-3}$, which is halved if the validation error does not decrease for 3 epochs. The number of epochs was set to 150. Four bidirectional GRU layers with 600 units each were used. The embedding dimension was set to 20 [14], so each T-F bin of the spectrogram is represented by a 20-dimensional vector in the embedding space, which is therefore 20 times larger than the spectrogram. The BGRU layers are followed by a fully connected feed-forward layer that maps each 129-bin frame to a 2580-dimensional output (20×129=2580).
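
To see where the complexity saving comes from, the parameters of the recurrent stack can be counted. An LSTM direction has four weight blocks (three gates plus the cell candidate) while a GRU direction has three (two gates plus the candidate), so the recurrent layers shrink by 25%. This back-of-the-envelope sketch assumes one bias vector per block and assumes the fully connected layer takes the 1200-dimensional output of the last bidirectional layer; both are assumptions, and the estimate is not expected to reproduce the figure reported in Table 1.

```python
def rnn_layer_params(input_dim, hidden, gate_blocks):
    """Weights plus one bias per gate block for a single direction.

    LSTM has 4 blocks (input, forget, output gates and cell candidate);
    GRU has 3 (update gate, reset gate, candidate).
    """
    return gate_blocks * (hidden * (input_dim + hidden) + hidden)


def stacked_bidirectional_params(input_dim, hidden, layers, gate_blocks):
    total, d = 0, input_dim
    for _ in range(layers):
        total += 2 * rnn_layer_params(d, hidden, gate_blocks)  # forward + backward
        d = 2 * hidden  # the next layer sees both directions concatenated
    return total


F, K, H, LAYERS = 129, 20, 600, 4
# Assumed: the FC layer maps the 2H-dimensional recurrent output of each frame to K*F values.
fc = (2 * H) * (K * F) + K * F
blstm = stacked_bidirectional_params(F, H, LAYERS, gate_blocks=4) + fc
bgru = stacked_bidirectional_params(F, H, LAYERS, gate_blocks=3) + fc
print(f"BLSTM: {blstm:,}  BGRU: {bgru:,}  reduction: {100 * (1 - bgru / blstm):.1f}%")
```

Because the fully connected layer is identical in both models, the overall reduction is smaller than the 25% saved inside the recurrent layers. Frameworks differ in bias conventions (PyTorch, for example, keeps two bias vectors per gate), so counts from a real implementation will differ somewhat.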

3.2 Dataset

Since the WSJ0 corpus is not freely available, the TIMIT speech corpus was used instead. A new challenging dataset, TIMIT-2mix, was constructed by randomly choosing utterances from different speakers, down-sampling them to 8 kHz to reduce computation and save memory, and mixing them at randomly selected SNRs within [-3 dB, 3 dB]. TIMIT-2mix is similar to WSJ0-2mix [14] and contains 30 h of training data, 10 h of validation data, and 5 h of test data. The input feature is the logarithm of the magnitude spectrogram, computed by the Short-Time Fourier Transform (STFT) with a 32 ms square-root Hanning window and 75% overlap between windows.
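
The mixing and feature extraction described above can be sketched as follows. At 8 kHz a 32 ms window spans 256 samples, so the one-sided STFT has 256/2 + 1 = 129 frequency bins, matching the 129-dimensional input of Section 3.1, and 75% overlap gives a hop of 64 samples. Random noise stands in for TIMIT utterances here; the uniform SNR distribution, the periodic Hann variant, and the log offset of 1e-8 are assumptions not stated in the paper.

```python
import numpy as np


def mix_at_snr(s1, s2, snr_db):
    """Scale s2 so that 10*log10(P(s1) / P(scaled s2)) equals snr_db, then add."""
    p1, p2 = np.mean(s1 ** 2), np.mean(s2 ** 2)
    gain = np.sqrt(p1 / (p2 * 10.0 ** (snr_db / 10.0)))
    s2_scaled = gain * s2
    return s1 + s2_scaled, s2_scaled


def stft(x, fs=8000, win_ms=32, overlap=0.75):
    """STFT with a square-root Hann window; returns shape (n_fft//2 + 1, frames)."""
    n_fft = int(fs * win_ms / 1000)  # 256 samples at 8 kHz
    hop = int(n_fft * (1 - overlap))  # 64 samples for 75% overlap
    n = np.arange(n_fft)
    window = np.sqrt(0.5 - 0.5 * np.cos(2 * np.pi * n / n_fft))  # periodic Hann (assumed)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1).T  # 129 frequency bins for n_fft = 256


rng = np.random.default_rng(0)
s1, s2 = rng.normal(size=8000), rng.normal(size=8000)  # stand-ins for 1 s of speech
snr_db = rng.uniform(-3.0, 3.0)  # assumed uniform in [-3, 3] dB
mixture, s2_scaled = mix_at_snr(s1, s2, snr_db)
features = np.log(np.abs(stft(mixture)) + 1e-8)  # log-magnitude network input
```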

3.3 Evaluation Metrics

The proposed model was evaluated with objective measures that are widely used in the speech separation field [19], including the Signal to Distortion Ratio (SDR), Signal to Interference Ratio (SIR), Signal to Artifact Ratio (SAR), and the Perceptual Evaluation of Speech Quality (PESQ) score [20].
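
As a rough intuition for the SDR, the sketch below computes a plain energy ratio between the reference and the estimation error, in dB. This simplified measure is a stand-in and is NOT the BSS Eval SDR of [19], which first decomposes the estimate into target, interference, and artifact components by projecting it onto the true sources; for the SDR, SIR, and SAR of [19], an existing implementation such as `mir_eval.separation.bss_eval_sources` is normally used.

```python
import numpy as np


def simple_sdr(reference, estimate, eps=1e-12):
    """Plain SDR in dB: 10*log10(||s||^2 / ||s - s_hat||^2).

    Simplified stand-in, NOT the BSS Eval SDR of [19], which first splits the
    estimate into target, interference, and artifact components.
    """
    error = reference - estimate
    return 10.0 * np.log10((np.sum(reference ** 2) + eps) / (np.sum(error ** 2) + eps))
```

For example, an estimate that is the reference scaled by 1.1 leaves an error with 1% of the reference energy, which gives 20 dB.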

3.4 Results

3.4.1 Results of complexity decrease

Two models were trained, one using BLSTM and the other using BGRU. Training was performed on a computer with an Intel Core i5-8250U CPU at 1.8 GHz, an NVIDIA MX130 GPU, and 8 GB of RAM, running 64-bit Windows 10 and Python 3.5 in the web-based Jupyter Notebook environment. Table 1 compares the two models on the same dataset, TIMIT-2mix.

Table 1 Comparison of the two models in terms of complexity

Table 1 indicates that the BGRU model is less complex than the BLSTM model: it reduces the number of parameters by about 20.7% and the training time by about 17.9%.

The validation error also decreased more for the proposed model, as shown in Table 2.

Table 2 Reduction of validation error versus epochs

It can be seen in Fig. 3 that BGRU converged faster than BLSTM.

Fig. 3 Comparison of error loss between GRU and LSTM

3.4.2 Results of accuracy increase

Both the network architecture (BGRU or BLSTM) and the clustering algorithm (k-means or GMM) affect accuracy. Their effects were first studied individually and then jointly.

First, the clustering algorithm was changed to evaluate its effect. Table 3 shows the separation results of a BLSTM network with k-means and a BLSTM network with GMM.

Table 3 Comparison of [BLSTM+k-means, BLSTM+GMM] in terms of separation accuracy on the TIMIT-2mix dataset

Then, the network architecture was changed to check its effect. Table 4 shows the separation results of a BLSTM network with k-means and a BGRU network with k-means.

Table 4 Comparison of [BLSTM+k-means, BGRU+k-means] in terms of separation accuracy on the TIMIT-2mix dataset

Third, the effect of using BGRU with GMM instead of BLSTM with k-means was studied by comparing the proposed model with DANet. For a fair comparison the two models should be trained on the same dataset, but WSJ0-2mix is not freely available. Therefore, DANet (BLSTM with k-means) was rebuilt and trained on TIMIT-2mix, with all parameters as given in Ref. [12]. Because both models were trained on TIMIT-2mix, the comparison is fair.

As can be seen in Table 5, the proposed model, which combines a BGRU network with GMM, outperformed the DANet model.

Table 5 Comparison of DANet with the proposed model in terms of separation accuracy on the TIMIT-2mix dataset

The results of our model can be seen clearly in Fig. 4, where Fig. 4(a) and Fig. 4(b) show the two separated speakers in the time domain and in the spectrogram domain, respectively.

Fig. 4 Speech separation results by the model

The system is language independent: although it was trained on English, it also works on Arabic, because it relies on features of the human voice rather than on the language. In our experiments, Arabic mixtures were separated better than English ones, possibly because separation depends on speaking rate and Arabic is often spoken more slowly than English. Table 6 shows the performance of the proposed model on Arabic and English mixtures.

Table 6 Comparison of separation results between Arabic and English mixtures

3.5 Discussion

Table 1 shows how GRU reduces complexity. GRU is increasingly used and is simpler than LSTM: LSTM has three gates and an internal memory (cell state), whereas GRU has only two gates (update and reset) and no separate cell state, which requires less computation and gives faster training. This makes GRU attractive for building deeper networks.

As shown in Table 3, GMM improved the separation accuracy, and Table 4 shows that BGRU was more useful than BLSTM for separating speech. In Table 5, the BGRU network with GMM outperformed BLSTM with k-means. The network architecture and the clustering algorithm contributed differently to the accuracy gain.

In our task (speech separation), GRU performed better than LSTM in the experiments. Possible reasons for the superiority of GRU over LSTM in speech separation are as follows:

1) GRU does not limit the amount of information added to its state at each time step, whereas in LSTM this is controlled by the forget gate, which sometimes leads to the loss of some features.

2) GRU exposes its entire hidden state as output, unlike LSTM, whose output is limited by a hyperbolic tangent and a sigmoid output gate. This may help the GRU network find patterns more easily.

3) GRU can capture the overall sequence, which makes it strong in classification and clustering tasks, whereas the added complexity of LSTM (more gates and a cell state) lets it learn complicated relationships, such as those between words, in addition to classification and clustering.

In general, k-means did not perform better than GMM because it assumes that all clusters are spherical with the same covariance. In our case, the clusters need not be spherical. GMM is much more flexible than k-means in terms of cluster covariance: with a full covariance matrix, each cluster can take an ellipsoidal shape of any orientation and extent rather than being restricted to a sphere. In fact, k-means is the limiting case of a GMM with equal mixture weights and a shared isotropic covariance $\sigma^{2}\boldsymbol{I}$ as $\sigma \to 0$.
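
The limiting relation between GMM and k-means can be checked numerically. With equal weights and a shared isotropic covariance $\sigma^{2}\boldsymbol{I}$, the GMM posteriors depend only on squared distances to the means; as $\sigma$ shrinks they approach the hard nearest-mean assignments of k-means, and as $\sigma$ grows they become nearly uniform. The data and means in this sketch are arbitrary illustrative choices.

```python
import numpy as np


def responsibilities(X, means, sigma):
    """GMM posteriors with equal weights and shared isotropic covariance sigma^2 I."""
    sq_dist = ((X[:, None, :] - means[None, :, :]) ** 2).sum(axis=2)  # (n, K)
    logits = -sq_dist / (2.0 * sigma ** 2)  # normalizing constants cancel
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
X = rng.normal(size=(50, 2))
means = np.array([[-1.0, 0.0], [1.0, 0.0]])
hard = ((X[:, None, :] - means[None]) ** 2).sum(axis=2).argmin(axis=1)  # k-means assignment
soft_small = responsibilities(X, means, sigma=1e-3)
soft_large = responsibilities(X, means, sigma=10.0)
```

With `sigma=1e-3` almost every row of `soft_small` is one-hot and agrees with `hard`, while with `sigma=10.0` every posterior stays close to 0.5.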

4 Conclusions

In this work, a new version of DANet was proposed that is less complex and more accurate, using BGRU to generate the embeddings and GMM with a general covariance matrix for each cluster. These modifications of the DANet structure improved the training speed and the separation accuracy, which makes it possible to train the neural network on an ordinary PC instead of a high-performance one. The proposed system was also found to be language independent, although it performed better on Arabic than on English. Another contribution of this study is a new challenging dataset, TIMIT-2mix, which may serve as an alternative to WSJ0-2mix.

Acknowledgement

The authors would like to thank Dr. Jonathan Le Roux of Mitsubishi Electric Research Laboratories for his valuable advice.

[1] Cherry E C. Some experiments on the recognition of speech, with one and with two ears. The Journal of the Acoustical Society of America, 1953, 25(5): 975-979. DOI:10.1121/1.1907229

[2] Hu K, Wang D L. An unsupervised approach to cochannel speech separation. IEEE Transactions on Audio, Speech, and Language Processing, 2013, 21(1): 122-131. DOI:10.1109/TASL.2012.2215591

[3] Schmidt M N, Olsson R K. Single-channel speech separation using sparse non-negative matrix factorization. Proceedings of Interspeech 2006-ICSLP, Ninth International Conference on Spoken Language Processing. Baixas, France: ISCA, 2006. 2614-2617.

[4] Chien J T, Chen B C. A new independent component analysis for speech recognition and separation. IEEE Transactions on Audio, Speech, and Language Processing, 2006, 14(4): 1245-1254. DOI:10.1109/TSA.2005.858061

[5] Gu F L, Zhang H, Wang W W, et al. An expectation-maximization algorithm for blind separation of noisy mixtures using Gaussian mixture model. Circuits, Systems, and Signal Processing, 2017, 36(7): 2697-2726. DOI:10.1007/s00034-016-0424-2

[6] Lu X G, Tsao Y, Matsuda S, et al. Speech enhancement based on deep denoising autoencoder. Proceedings of Interspeech 2013. Baixas, France: ISCA, 2013. 436-440.

[7] Chen Z, Watanabe S, Erdogan H, et al. Speech enhancement and recognition using multi-task learning of long short-term memory recurrent neural networks. Proceedings of Interspeech 2015, the Sixteenth Annual Conference of the International Speech Communication Association. Baixas, France: ISCA, 2015. 3274-3278.

[8] Hori T, Chen Z, Erdogan H, et al. The MERL/SRI system for the 3RD CHiME challenge using beamforming, robust feature extraction, and advanced speech recognition. Proceedings of 2015 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU). Piscataway: IEEE, 2015. 475-481. DOI:10.1109/ASRU.2015.7404833

[9] Huang P S, Kim M J, Hasegawa-Johnson M, et al. Joint optimization of masks and deep recurrent neural networks for monaural source separation. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2015, 23(12): 2136-2147. DOI:10.1109/TASLP.2015.2468583

[10] Huang P S, Kim M, Hasegawa-Johnson M, et al. Singing-voice separation from monaural recordings using deep recurrent neural networks. ISMIR, 2014. 477-482.

[11] Luo Y, Chen Z, Hershey J R, et al. Deep clustering and conventional networks for music separation: Stronger together. Proceedings of 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Piscataway: IEEE, 2017. 61-65. DOI:10.1109/ICASSP.2017.7952118

[12] Luo Y, Chen Z, Mesgarani N. Speaker-independent speech separation with deep attractor network. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2018, 26(4): 787-796. DOI:10.1109/TASLP.2018.2795749

[13] Yu D, Kolbæk M, Tan Z H, et al. Permutation invariant training of deep models for speaker-independent multi-talker speech separation. Proceedings of 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Piscataway: IEEE, 2017. 241-245. DOI:10.1109/ICASSP.2017.7952154

[14] Hershey J R, Chen Z, Le Roux J, et al. Deep clustering: Discriminative embeddings for segmentation and separation. Proceedings of 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Piscataway: IEEE, 2016. 31-35. DOI:10.1109/ICASSP.2016.7471631

[15] Cho K, van Merriënboer B, Gulcehre C, et al. Learning phrase representations using RNN encoder-decoder for statistical machine translation. Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP). Doha, Qatar: Association for Computational Linguistics, 2014. 1724-1734. DOI:10.3115/v1/D14-1179

[16] Jozefowicz R, Zaremba W, Sutskever I. An empirical exploration of recurrent network architectures. Proceedings of the 32nd International Conference on Machine Learning. Lille, France: JMLR, 2015. 2342-2350.

[17] Nguyen M. Illustrated guide to LSTM's and GRU's: A step by step explanation. Towards Data Science. https://towardsdatascience.com/illustrated-guide-to-lstms-and-gru-s-a-step-by-step-explanation-44e9eb85bf21, 2019-09-27.

[18] Andermatt S, Pezold S, Cattin P. Multi-dimensional gated recurrent units for the segmentation of biomedical 3D-data. Deep Learning and Data Labeling for Medical Applications. Cham: Springer, 2016. 142-151. DOI:10.1007/978-3-319-46976-8_15

[19] Vincent E, Gribonval R, Fevotte C. Performance measurement in blind audio source separation. IEEE Transactions on Audio, Speech, and Language Processing, 2006, 14(4): 1462-1469. DOI:10.1109/TSA.2005.858005

[20] Rix A W, Beerends J G, Hollier M P, et al. Perceptual evaluation of speech quality (PESQ) - A new method for speech quality assessment of telephone networks and codecs. Proceedings of 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Piscataway: IEEE, 2001. 749-752. DOI:10.1109/ICASSP.2001.941023

2021, Vol. 28
