Computer-assisted education can make world-class teaching resources openly available and reduce the rising cost of learning. Knowledge tracing models students over time so that it can accurately predict their next performance and capture their specific needs. On this basis, learning resources can be recommended according to each student's needs, and material predicted to be too easy or too difficult can be skipped or postponed.

A student's learning process is often described by a latent knowledge state. This state is updated according to the student's responses to new problems, and the model then uses the sequence of questions the student has attempted, together with the correctness of each answer, to predict the student's performance on a new problem. Two well-known models are Bayesian Knowledge Tracing (BKT)[1] and Performance Factors Analysis (PFA)[2]. Because they capture students' progress with reliable accuracy, both have been successful at predicting performance and have been extensively explored and applied in tutoring systems[3-5]. BKT uses a Bayesian network to learn four parameters for each skill, while PFA uses logistic regression to make predictions.
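To make the per-skill BKT computation concrete, the following is a minimal sketch of the standard formulation: a Bayesian posterior update of the mastery probability after each answer, followed by a learning transition. The class and function names and the parameter values are illustrative assumptions, not taken from this paper.

```python
from dataclasses import dataclass


@dataclass
class BKTParams:
    """The four per-skill BKT parameters."""
    p_init: float   # P(L0): probability the skill is already known
    p_learn: float  # P(T): probability of learning the skill at each opportunity
    p_guess: float  # P(G): probability of a correct answer without mastery
    p_slip: float   # P(S): probability of a wrong answer despite mastery


def predict_correct(p_know: float, prm: BKTParams) -> float:
    """P(correct) given the current probability that the skill is mastered."""
    return p_know * (1 - prm.p_slip) + (1 - p_know) * prm.p_guess


def update(p_know: float, correct: bool, prm: BKTParams) -> float:
    """Bayesian posterior on mastery after one observed answer, then the learning transition."""
    if correct:
        num = p_know * (1 - prm.p_slip)
        den = num + (1 - p_know) * prm.p_guess
    else:
        num = p_know * prm.p_slip
        den = num + (1 - p_know) * (1 - prm.p_guess)
    posterior = num / den
    # Standard BKT has no forgetting term: the transition step never lowers mastery.
    return posterior + (1 - posterior) * prm.p_learn


def trace(responses, prm: BKTParams):
    """Predicted P(correct) before each response in one skill's answer sequence."""
    p_know = prm.p_init
    preds = []
    for correct in responses:
        preds.append(predict_correct(p_know, prm))
        p_know = update(p_know, correct, prm)
    return preds


# Illustrative parameter values, not fitted to any data set.
params = BKTParams(p_init=0.3, p_learn=0.1, p_guess=0.2, p_slip=0.1)
print([round(p, 3) for p in trace([True, True, False, True], params)])
```

Each skill keeps its own four parameters and its own mastery estimate, which is why BKT cannot say anything about a skill the student has not yet attempted.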

In recent years, deep learning has achieved success in many fields of machine learning[6]. However, because of their complexity, the features extracted by deep learning are largely difficult to interpret, unlike BKT and PFA, which try to combine interpretability with estimation. Based on deep learning, the deep knowledge tracing model (DKT)[7] was proposed. DKT uses the hidden state of a recurrent neural network (RNN), a low-dimensional dense vector, to represent the student's knowledge state. DKT can therefore represent students' knowledge more richly than BKT, which improves knowledge tracing performance. In the field of knowledge tracing, only a few studies address forgetting[8-10], while others use richer student information[11]. These studies simplify forgetting to a dependence on the past sequence. Existing extensions that model forgetting consider only the number of past attempts[8] or the time delay between two interactions[9].

In this article, we use the large number of features collected by an Intelligent Tutoring System (ITS), such as teaching aids, time spent on individual tasks, and the number of attempts, incorporate information related to forgetting, and propose an extended DKT model.

The main contributions of this work include:

1) Multiple features are used to model forgetting behavior explicitly, so that both the learning sequence and forgetting are taken into account, and we study how combinations of different kinds of past information affect performance.

2) More features are incorporated to improve the accuracy of DKT predictions, including exercise tags, response time, number of attempts, and first action.

The rest of this paper is organized as follows. Section 1 reviews related work on student modeling techniques. Section 2 presents the proposed DKT model, which combines rich features and forgetting features. Section 3 describes the experimental details, the datasets used, and the results. Section 4 concludes the paper.

1 Related Work

1.1 Knowledge Tracing

Traditional knowledge tracing models such as BKT and PFA have been extensively explored and applied in real tutoring systems. BKT uses a Hidden Markov Model (HMM) to model students' knowledge: the knowledge state is expressed as a set of binary variables indicating whether the student has mastered each skill. PFA calculates the probability of a correct answer from the numbers of the student's past successes and failures. PFA considers only interactions involving the same knowledge components, not the entire interaction sequence.
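The PFA prediction can be sketched as a logistic model over per-skill success and failure counts, following the usual formulation m = Σ_j (β_j + γ_j s_j + ρ_j f_j), p = 1 / (1 + e^(-m)). This is a minimal illustration; the function name, the dictionary-based inputs, and the parameter values are assumptions for the example.

```python
import math


def pfa_probability(skills, beta, gamma, rho, successes, failures):
    """Performance Factors Analysis prediction for one item.

    skills: skills (knowledge components) involved in the item
    beta: per-skill easiness; gamma / rho: per-skill weights for prior successes / failures
    successes / failures: the student's prior counts on each skill
    """
    m = 0.0
    for j in skills:
        m += beta[j] + gamma[j] * successes.get(j, 0) + rho[j] * failures.get(j, 0)
    return 1.0 / (1.0 + math.exp(-m))  # logistic link


# Illustrative parameter values (not fitted to any data set).
beta = {"add": -0.5}
gamma = {"add": 0.4}
rho = {"add": 0.1}
p = pfa_probability(["add"], beta, gamma, rho, successes={"add": 3}, failures={"add": 1})
print(round(p, 3))
```

Only the counts for the item's own skills enter the sum, which is the sense in which PFA ignores the rest of the interaction sequence.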

Just as deep learning models outperform traditional models in areas such as pattern recognition, DKT models students' knowledge more accurately than the traditional models above. DKT uses an RNN, which is well suited to time-series tasks, to model students' knowledge. Like BKT, DKT is a sequence model, but it represents the student's knowledge state with the hidden variables of the RNN, a low-dimensional dense vector, whereas BKT represents the knowledge state as binary (a knowledge point is either mastered or not mastered). DKT can therefore express students' knowledge more richly than BKT, which improves the accuracy of predicting students' future performance. Since DKT was introduced, research on deep-learning-based knowledge tracing models has grown steadily[10, 12-16]. Although these methods extend DKT, they do not use feature engineering to process the extra information, and they do not address forgetting behavior across time. DKT uses a recurrent neural network to represent the latent state and its temporal dynamics: when a student completes a task, the model uses information from previous time steps (questions) to better predict future performance.
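A minimal sketch of a DKT forward pass with an Elman RNN may help make the contrast with BKT concrete. Each interaction (skill, correct) is one-hot encoded into a vector of length 2M, the hidden state h_t is a dense vector updated at every step, and a sigmoid output layer gives a probability of answering each of the M skills correctly next. The weights are untrained random placeholders, biases are omitted for brevity, and all names are hypothetical.

```python
import math
import random


def dkt_forward(interactions, num_skills, hidden=8, seed=0):
    """Minimal DKT forward pass using an Elman RNN with untrained random weights.

    interactions: list of (skill_id, correct) pairs with skill_id in range(num_skills)
    Returns one vector y_t per step; y_t[j] is the predicted probability of
    answering skill j correctly at the next step.
    """
    rng = random.Random(seed)
    d_in = 2 * num_skills  # one slot per (skill, correctness) pair
    w_xh = [[rng.gauss(0, 0.1) for _ in range(d_in)] for _ in range(hidden)]
    w_hh = [[rng.gauss(0, 0.1) for _ in range(hidden)] for _ in range(hidden)]
    w_hy = [[rng.gauss(0, 0.1) for _ in range(hidden)] for _ in range(num_skills)]
    h = [0.0] * hidden  # knowledge state: a low-dimensional dense vector
    outputs = []
    for q, a in interactions:
        idx = q + num_skills * int(a)  # position of the 1 in the one-hot input
        # With a one-hot input, W_xh @ x_t reduces to column idx of W_xh.
        h = [math.tanh(w_xh[i][idx] + sum(w_hh[i][k] * h[k] for k in range(hidden)))
             for i in range(hidden)]
        y = [1.0 / (1.0 + math.exp(-sum(w_hy[j][k] * h[k] for k in range(hidden))))
             for j in range(num_skills)]
        outputs.append(y)
    return outputs


seq = [(0, 1), (2, 0), (0, 1)]
ys = dkt_forward(seq, num_skills=3)
print(ys[-1][2])  # predicted P(correct) if the next question uses skill 2
```

Unlike BKT, every output y_t covers all skills at once, including skills the student has never attempted.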

1.2 Knowledge Tracing Considering Forgetting Behavior

There are several directions for improving knowledge tracing[17], and modeling forgetting behavior is one of them. An early study on forgetting[18] showed that memory retention decreases exponentially with time and that forgetting can be slowed by increasing the number of repetitions. In knowledge tracing, ignoring the fact that students' knowledge states change over time is clearly unreasonable.
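The exponential decay described above is often idealized as a retention curve R = exp(-t / S), where t is the elapsed time and S is a memory-stability constant. In the following sketch, the rule that each repetition multiplies S by a fixed factor is a hypothetical choice used only to illustrate how repetition slows forgetting.

```python
import math


def retention(elapsed: float, stability: float) -> float:
    """Exponential forgetting curve: R = exp(-t / S)."""
    return math.exp(-elapsed / stability)


def stability_after(repetitions: int, base: float = 1.0, growth: float = 2.0) -> float:
    """Hypothetical rule: each repetition multiplies memory stability by `growth`."""
    return base * growth ** repetitions


# Retention three days after study, with no review vs. two earlier reviews.
print(retention(3.0, stability_after(0)), retention(3.0, stability_after(2)))
```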

The original BKT model does not consider forgetting behavior. Khajah et al.[8] extended BKT to estimate probabilities from the number of a student's past attempts, but their algorithm does not consider the time interval between attempts. Among PFA-based studies, two models address forgetting: Pelánek added a time factor and used it to determine the probability[9], and Settles and Meeder[14] extended the model with a half-life forgetting curve. Although these methods address forgetting, they ignore the student's interactions with other knowledge components in the sequence.
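The half-life model of Settles and Meeder[14] can be summarized in two lines: an estimated half-life h = 2^(θ·x) computed from a feature vector x, and a recall probability p = 2^(-Δ/h) after a delay Δ. The sketch below implements only this prediction step; the feature names, weights, and counts are hypothetical, and fitting θ is not shown.

```python
def hlr_recall(theta, features, delta_days):
    """Half-life regression prediction: h = 2**(theta . x), p = 2**(-delta / h).

    theta, features: dicts mapping feature name -> weight / value
    delta_days: time since the item was last practiced
    Returns (predicted recall probability, estimated half-life in days).
    """
    half_life = 2.0 ** sum(theta.get(name, 0.0) * value for name, value in features.items())
    return 2.0 ** (-delta_days / half_life), half_life


# Hypothetical weights and feature counts, for illustration only.
theta = {"bias": 1.0, "right": 0.5, "wrong": -0.5}
x = {"bias": 1, "right": 2, "wrong": 0}
p, h = hlr_recall(theta, x, delta_days=4.0)
print(p, h)
```

When the delay equals the estimated half-life, the predicted recall probability is exactly one half, which is what the name refers to.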

The proposed method extends DKT so that it considers both the features extracted through feature engineering with dimensionality reduction and the student's entire interaction sequence.

1.3 Rich Feature Model

Existing public datasets such as ASSISTments include clickstream records and student profiles. Whenever a student answers a question in the ASSISTments system, a clickstream record is generated; the student profile describes the student's background information and summarizes his or her clickstream data. Each clickstream record contains 64 attributes. These include the student's knowledge state estimated with the BKT model[1], which is recalculated for each cognitive skill every time a question is answered. The records also reflect students' affective states[19], such as confusion, frustration, boredom, and concentration.

The student profile contains 11 attributes. It provides students' educational background and summarizes their use of the assistance and learning indicators mentioned above, including student ability and affective state. Although clickstream data provide a large amount of interactive learning information, analyzing how these records change over time and how they relate to students' choices is extremely complicated. Our model therefore analyzes students' profile data rather than clickstream data.

2 The Proposed Model

Since STEM and non-STEM students can be distinguished well, our main task is to extract the state of every indicator for each student comprehensively, rather than only an average indicator. To fully understand a student's knowledge state, BKT is not a good choice, because it can only evaluate the knowledge state of skills the student has already answered; it cannot be applied to skills the student has not yet attempted. Using BKT to extract each student's expected knowledge state for every skill would therefore produce missing values. We therefore use the deep knowledge tracing model (DKT), which can estimate the knowledge state of all skills at the same time and, without requiring many features, outperforms many traditional KT models such as BKT and PFA. The DKT knowledge states and the student profile are combined as the features of the prediction model.

Our model is based on DKT, which underlies most current deep knowledge tracing models and is flexible enough to merge multiple input sources easily and to model their interrelationships.

The traditional DKT task can be formulated as a supervised learning problem: given a student's past interactions $x_0, \ldots, x_t$, predict the student's performance on the next interaction. An interaction $x_t = (q_t, a_t)$ is a tuple containing the skill ID $q_t$ of the question the student answers at time t and a label $a_t$ indicating whether the answer is correct. In our model, the first response time (the time of the first attempt or request for help in the ASSISTments data), the number of attempts required for each item, and whether hints were received are added as features. Feature selection and dimensionality reduction are then applied. After these features are converted into categorical data, they are represented as one-hot codes in sparse vectors. Autoencoders are used to convert the high-dimensional data into low-dimensional codes from which the high-dimensional input can be reconstructed, reducing the dimensionality without losing too much important information.
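The conversion of raw features into categorical data and then into concatenated one-hot vectors can be sketched as follows. The paper does not report its discretization, so the time bucket edges, the cap on attempts, and the function names here are hypothetical.

```python
import bisect

# Hypothetical discretization; the paper does not report its bucket boundaries.
TIME_EDGES = [5, 15, 30, 60]   # first-response time (s) -> 5 categories
ATTEMPT_CAP = 4                # attempts 1, 2, 3, 4+ -> 4 categories


def one_hot(index, size):
    vec = [0] * size
    vec[index] = 1
    return vec


def encode_features(first_response_s, attempts, used_hint):
    """Categorize each raw feature, one-hot encode it, and concatenate the blocks."""
    t = one_hot(bisect.bisect_right(TIME_EDGES, first_response_s), len(TIME_EDGES) + 1)
    n = one_hot(min(max(attempts, 1), ATTEMPT_CAP) - 1, ATTEMPT_CAP)
    h = one_hot(int(used_hint), 2)
    return t + n + h


print(encode_features(12.0, 1, False))
```

The result is a sparse vector whose length is the sum of the category counts; with many features (and crossed with skill IDs) this grows quickly, which is what motivates the autoencoder compression.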

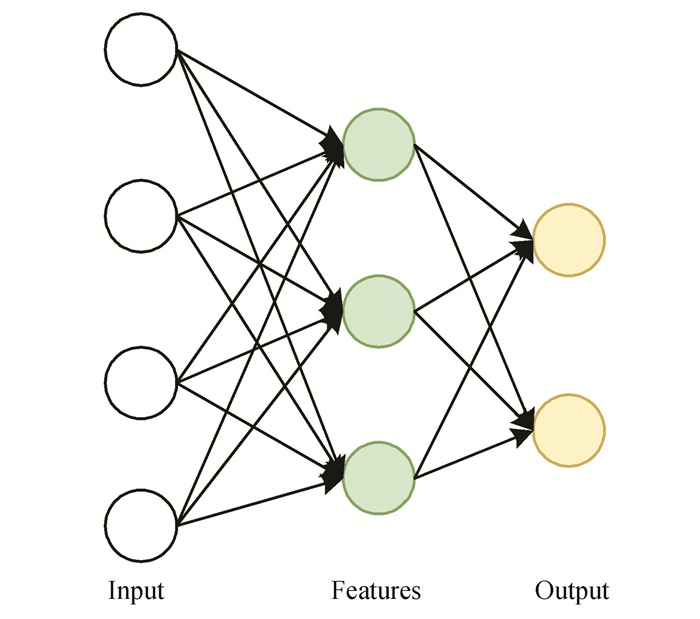

2.1 Model Aggregation

Feature engineering is a highly creative step that helps extract effective information. To keep the input consistent with the representation used in DKT, features were transformed into categorical data, which simplifies the representation without losing too much information. Each categorical feature was then represented as a one-hot sparse vector, and all features were concatenated to construct the input vector. Because DKT is built on RNNs, training time increases sharply if the input dimension is too high. Stacked autoencoders were therefore adopted to solve this problem without loss of performance: they reduce the dimension and extract features layer by layer without losing too much important information, so the final features are more representative. The hidden layer of each autoencoder was used as the input to the next, and the layers were stacked in this way. In the experiment, the dimension was reduced to half of the input size; the structure is shown in Fig. 1.
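The encoding pass of a stacked autoencoder can be sketched as a chain of fully connected sigmoid layers, each narrower than the last. In this minimal sketch the layer sizes (16 → 12 → 8, ending at half the input size) and the random weights are placeholders; in the described model each layer would first be pre-trained as the hidden layer of its own autoencoder, a step not shown here.

```python
import math
import random


def make_encoder(sizes, seed=0):
    """One (weights, biases) pair per encoder layer.

    Weights here are random placeholders; in the described model each layer would
    first be pre-trained as the hidden layer of its own autoencoder.
    """
    rng = random.Random(seed)
    return [([[rng.gauss(0, 0.1) for _ in range(n_in)] for _ in range(n_out)], [0.0] * n_out)
            for n_in, n_out in zip(sizes, sizes[1:])]


def encode(x, layers):
    """Feed x through the stacked encoder layers (sigmoid activations)."""
    for weights, biases in layers:
        x = [1.0 / (1.0 + math.exp(-(sum(w * xi for w, xi in zip(row, x)) + b)))
             for row, b in zip(weights, biases)]
    return x


sizes = [16, 12, 8]  # hypothetical: the final code is half the 16-dim input
layers = make_encoder(sizes)
code = encode([1, 0] * 8, layers)
print(len(code))
```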

Fig. 1 Representation of a stacked autoencoder that compresses features into a smaller dimension

Forgetting behavior was introduced into the DKT model by modifying the embedding vector and state vector of the original DKT model according to the associated forgetting features. When a student keeps answering correctly over a long period, the model updates the skill state toward greater mastery.

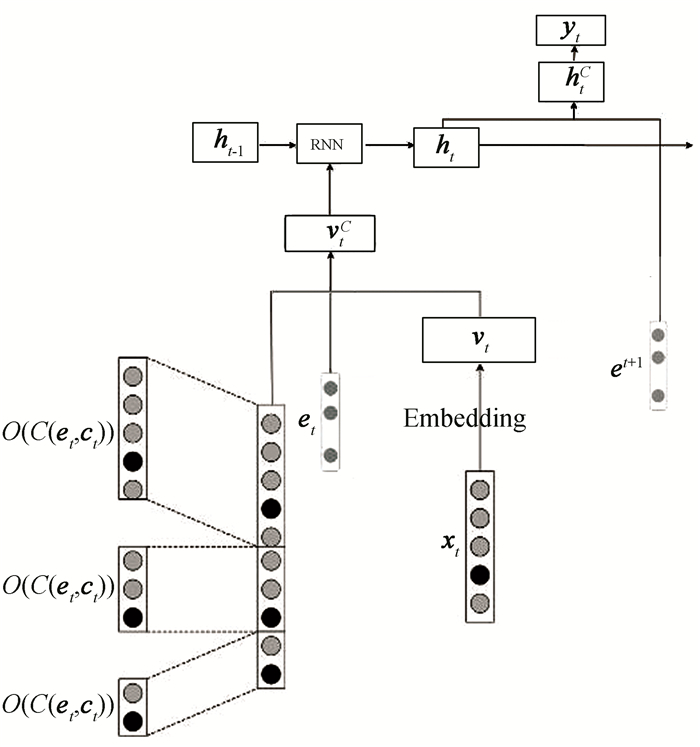

The new DKT LSTM model (Fig. 2) incorporates more features together with the autoencoder, connects the encoded feature $o$ to the input layer, and adds the forgetting feature $e_t$, which quantifies related past information: the repeated time interval, the sequence time interval, and an integrated component. $y_t$ represents the prediction for the next step.
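The raw inputs to the forgetting feature can be computed from a student's time-ordered log. In this sketch the repeated time interval is the time since the student's previous interaction with the same skill, and the sequence time interval is the time since the previous interaction of any kind. The paper does not define its third, integrated component; the count of past trials on the same skill, as used by Nagatani et al.[10], is assumed here. The function name and log format are hypothetical.

```python
def forgetting_features(log):
    """log: list of (timestamp_seconds, skill_id) for one student, in time order.

    Returns per-step tuples (repeated_gap, sequence_gap, past_trials); a gap is
    None when there is no previous interaction to measure from. past_trials is an
    assumed stand-in for the paper's unspecified third component.
    """
    last_seen = {}   # skill -> timestamp of its most recent interaction
    counts = {}      # skill -> number of earlier trials
    prev_time = None
    feats = []
    for ts, skill in log:
        repeated_gap = ts - last_seen[skill] if skill in last_seen else None
        sequence_gap = ts - prev_time if prev_time is not None else None
        feats.append((repeated_gap, sequence_gap, counts.get(skill, 0)))
        last_seen[skill] = ts
        counts[skill] = counts.get(skill, 0) + 1
        prev_time = ts
    return feats


print(forgetting_features([(0, "a"), (60, "b"), (600, "a")]))
```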

Fig. 2 The new DKT LSTM model

2.2 Model Formula

DKT uses RNN variants such as the Elman RNN[20] and long short-term memory (LSTM)[21] to model how students' knowledge changes. The student's knowledge state is represented by the RNN hidden state $h_t$, and the procedure can be divided into two processes: modeling students' knowledge and predicting students' performance.

The input vector was constructed by one-hot encoding the individual features, where $v_t$ is the input vector for the student's response at time t, $q_t$ is the exercise (skill) tag, and $a_t$ is the correctness. The tag and correctness are crossed into a single index:

| $ C\left(q_t, a_t\right)=q_t+(\max (q)+1) \cdot a_t $ | (1) |
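Equation (1) maps each (exercise tag, correctness) pair to a unique index, so that a correct and an incorrect answer on the same skill occupy different positions of the one-hot input. A minimal sketch, with hypothetical function names:

```python
def cross_index(tag: int, correct: int, max_tag: int) -> int:
    """Eq. (1): tag + (max_tag + 1) * correct, a unique id per (tag, correct) pair."""
    return tag + (max_tag + 1) * correct


def one_hot_interaction(tag: int, correct: int, max_tag: int):
    """One-hot vector of length 2 * (max_tag + 1) with a 1 at the crossed index."""
    vec = [0] * (2 * (max_tag + 1))
    vec[cross_index(tag, correct, max_tag)] = 1
    return vec


print(cross_index(2, 0, 4), cross_index(2, 1, 4))
```

With tags 0 to 4, incorrect answers fall in positions 0 to 4 and correct answers in positions 5 to 9.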

The model also incorporates prior information. To model the student's learning process, a trainable embedding matrix $A$ is used to compute $v_t$ instead of assigning random values. The forgetting feature $e_t$ is a multi-hot vector that combines three features: each is expressed as a one-hot vector, and the three vectors are concatenated. Before entering the RNN module, the embedding vector $v_t$ is first integrated with the additional information $o$:

| $ \boldsymbol{v}_t^C=\boldsymbol{\vartheta}^{\text {in }}\left(\boldsymbol{v}_t, \boldsymbol{e}_t, o\right) $ | (2) |

where $v_t^C$ stores the combined forgetting and feature information. The recurrent unit $\phi$ then updates the hidden state:

| $ \boldsymbol{h}_t=\phi\left(\boldsymbol{v}_t^C, \boldsymbol{h}_{t-1}\right) $ | (3) |

Thus the student's knowledge state is updated by taking into account both the response to the skill and the forgetting-related information. If the student answers correctly, the model moves toward better mastery of that skill.
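Equation (2) leaves the integration function $\vartheta^{in}$ abstract. The sketch below assumes plain concatenation and shows how the multi-hot forgetting vector $e_t$ could be assembled: each of the three forgetting quantities is bucketed (time gaps on a log2 scale), one-hot encoded, and the blocks are concatenated. The bucket scheme, the block size of 16, the toy values of $v_t$ and $o$, and all function names are assumptions for illustration.

```python
import math


def one_hot(index, size):
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def log2_bucket(gap, num_buckets):
    """Log-scale bucket for a time gap; bucket 0 is reserved for 'no previous interaction'."""
    if gap is None:
        return 0
    return min(1 + int(math.log2(1 + gap)), num_buckets - 1)


def forgetting_vector(repeated_gap, sequence_gap, past_trials, num_buckets=16):
    """Multi-hot e_t: three one-hot blocks concatenated."""
    return (one_hot(log2_bucket(repeated_gap, num_buckets), num_buckets)
            + one_hot(log2_bucket(sequence_gap, num_buckets), num_buckets)
            + one_hot(min(past_trials, num_buckets - 1), num_buckets))


def integrate(v_t, e_t, o):
    """Assumed integration function for Eq. (2): plain concatenation."""
    return list(v_t) + list(e_t) + list(o)


e_t = forgetting_vector(repeated_gap=600, sequence_gap=540, past_trials=1)
v_c = integrate([0.1, -0.3], e_t, [0.7, 0.2])  # toy embedding v_t and encoded features o
print(len(e_t), len(v_c))
```

Concatenation is the simplest choice; other integration functions (for example, element-wise multiplication after a projection) would change only `integrate`.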

3 Experiment

3.1 Experimental Details

The training details are as follows. The stacked autoencoder was pre-trained layer by layer in advance. A prediction was made for every skill at each step, but only one was supervised, because there is only one label per time step. A single LSTM layer with 200 hidden units was used, with a dropout probability of 0.4 during training. When modeling students' knowledge and predicting their performance, the integration function was added to the model to account for forgetting behavior.

The learnable parameters of our model are the embedding matrix $A$ of the interaction vector $x_t$, the weights of the RNN module, the prediction weight matrix, and other parameters. They are learned jointly by minimizing the standard cross-entropy between the predicted probability that the student answers the next question (skill ID $q_{t+1}$) correctly and the true label $a_{t+1}$:

| $ \begin{aligned} L=&-\sum\left(\boldsymbol{a}_{t+1} \log \left(\boldsymbol{y}_t^{\mathrm{T}} \delta\left(q_{t+1}\right)\right)+\left(1-\boldsymbol{a}_{t+1}\right) \cdot\right.\\ &\left.\log \left(1-\boldsymbol{y}_t^{\mathrm{T}} \delta\left(q_{t+1}\right)\right)\right) \end{aligned} $ | (4) |

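where $a_{t+1} \in \{0, 1\}$ is the true correctness label at step t+1, $y_t$ is the vector of predicted probabilities of answering each skill correctly, and $\delta(q_{t+1})$ is the one-hot encoding of $q_{t+1}$.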
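Equation (4) can be computed directly: the dot product with the one-hot vector $\delta(q_{t+1})$ simply selects the predicted probability for the skill that is actually asked next. A minimal sketch, with a hypothetical function name and a small clamp to avoid log(0):

```python
import math


def dkt_loss(preds, next_skills, next_labels, eps=1e-12):
    """Eq. (4): binary cross-entropy on y_t^T delta(q_{t+1}), summed over steps.

    preds: list of y_t vectors (per-skill probabilities) for t = 0..T-1
    next_skills: q_{t+1} for each t;  next_labels: a_{t+1} in {0, 1}
    """
    loss = 0.0
    for y_t, q_next, a_next in zip(preds, next_skills, next_labels):
        p = min(max(y_t[q_next], eps), 1 - eps)  # y_t^T delta(q) selects one entry
        loss -= a_next * math.log(p) + (1 - a_next) * math.log(1 - p)
    return loss


print(dkt_loss([[0.9, 0.2], [0.6, 0.3]], next_skills=[0, 1], next_labels=[1, 0]))
```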

3.2 Data Set

The two public datasets used, ASSISTments and Open Learning Initiative (OLI), were recorded by learning platforms during the learning process. Table 1 shows the statistics of these two datasets. The ASSISTments 2009-2010 dataset is built from skill-builder problem sets. In the initially reported results, three problems were found that inadvertently inflated the performance of DKT, so a newer version was used here. The OLI Statics F2011 dataset comes from a college-level engineering statics course. Since this is a time-series algorithm, students with fewer than two recorded time steps were excluded.
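The preprocessing described above, grouping records into per-student sequences and excluding students with fewer than two records, together with a student-level split for cross-validation, can be sketched as follows. The row layout, function names, and round-robin fold assignment are assumptions; the point is that each student's whole sequence lands in exactly one fold.

```python
from collections import defaultdict


def build_sequences(rows, min_len=2):
    """rows: iterable of (student_id, timestamp, skill_id, correct).

    Returns {student: [(skill, correct), ...]} in time order, dropping students
    with fewer than min_len records.
    """
    by_student = defaultdict(list)
    for student, ts, skill, correct in rows:
        by_student[student].append((ts, skill, correct))
    seqs = {}
    for student, recs in by_student.items():
        if len(recs) < min_len:
            continue
        recs.sort(key=lambda r: r[0])
        seqs[student] = [(skill, correct) for _, skill, correct in recs]
    return seqs


def student_folds(students, k=5):
    """Assign whole students to k folds so no student is in both train and test."""
    ordered = sorted(students)
    return [ordered[i::k] for i in range(k)]


rows = [("s1", 2, "a", 1), ("s1", 1, "b", 0), ("s2", 5, "a", 1)]
seqs = build_sequences(rows)
print(seqs, student_folds(seqs.keys(), k=5))
```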

Table 1 Statistics of the ASSISTments 2009-2010 and Statics2011 datasets

3.3 Results

Five-fold cross-validation was used, and results were evaluated by AUC. There are many possible feature combinations; only some of the most effective are discussed here. On both datasets in Table 1, incorporating features or introducing forgetting behavior outperforms the original DKT and BKT models. On the ASSISTments 2009-2010 dataset, the AUC increased from 0.7235 to 0.7309 when forgetting behavior was added, and to 0.7306 when the input features time/correct, action (time of the first attempt), and attempt (whether help was requested) were encoded with the autoencoder. Training directly on one-hot encodings was also attempted: once the three features were introduced at the same time, it was extremely inefficient or even infeasible.
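AUC can be computed as the probability that a randomly chosen correct response receives a higher predicted score than a randomly chosen incorrect one, counting ties as one half. The quadratic loop below is a minimal sketch chosen for clarity; library implementations use a sort-based method instead.

```python
def auc(labels, scores):
    """ROC AUC: P(random positive outscores random negative), ties counted as 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```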

4 Conclusions

In this article, the traditional DKT model was extended, and a new DKT model is proposed that fully considers forgetting characteristics and multiple features. The models were evaluated with student-level cross-validation using AUC and R². Multiple feature combinations were tested, and the forgetting features were added to the combined features. On both datasets in Table 2, the model with combined features outperforms the original DKT model, and the use of autoencoders yields higher performance still, which supports reducing the dimensionality. Without autoencoders, combining all the features is almost impossible.

Table 2 Test results

Compared with the previous DKT model, our model better reflects the actual learning process, using more effective feature information and forgetting features to improve prediction accuracy.

[1] Corbett A T, Anderson J R. Knowledge tracing: modeling the acquisition of procedural knowledge. User Modeling and User-Adapted Interaction, 1994, 4(4): 253-278. DOI:10.1007/BF01099821

[2] Pavlik Jr P I, Cen H, Koedinger K R. Performance factors analysis-a new alternative to knowledge tracing. Frontiers in Artificial Intelligence and Applications, 2009, 200: 531-538. DOI:10.3233/978-1-60750-028-5-531

[3] Kasurinen J, Nikula U. Estimating programming knowledge with Bayesian knowledge tracing. ACM SIGCSE Bulletin, 2009, 41(3): 313-317. DOI:10.1145/1595496.1562972

[4] Rowe J P, Lester J C. Modeling user knowledge with dynamic Bayesian networks in interactive narrative environments. AIIDE'10: Proceedings of the Sixth AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment. Palo Alto, California: AAAI, 2010.57-62.

[5] Schodde T, Bergmann K, Kopp S. Adaptive robot language tutoring based on Bayesian knowledge tracing and predictive decision-making. Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction. New York: ACM, 2017.128-136. DOI:10.1145/2909824.3020222

[6] Xu D L, Tian Z H, Lai R F, et al. Deep learning based emotion analysis of microblog texts. Information Fusion, 2020, 64: 1-11. DOI:10.1016/j.inffus.2020.06.002

[7] Piech C, Bassen J, Huang J, et al. Deep Knowledge Tracing. https://sc.panda321.com/scholar?cluster=7245594791529377875&hl=zh-CN&as_sdt=0, 5, 2021-01-01.

[8] Khajah M, Lindsey R V, Mozer M C. How Deep Is Knowledge Tracing? https://arxiv.org/abs/1604.02416, 2021-01-01.

[9] Pelánek R. Modeling students' memory for application in adaptive educational systems. Proceedings of the 8th International Conference on Educational Data Mining. Massachusetts: International Educational Data Mining Society, 2015.480-483.

[10] Nagatani K, Zhang Q, Sato M, et al. Augmenting knowledge tracing by considering forgetting behavior. The World Wide Web Conference. Geneva: International World Wide Web Conference Committee, 2019.3101-3107. DOI:10.1145/3308558.3313565

[11] Zhang L, Xiong X L, Zhao S Y, et al. Incorporating rich features into deep knowledge tracing. Proceedings of the Fourth (2017) ACM Conference on Learning@Scale. New York: ACM, 2017.169-172. DOI:10.1145/3051457.3053976

[12] Cheung L P, Yang H Q. Heterogeneous features integration in deep knowledge tracing. International Conference on Neural Information Processing. Berlin: Springer, 2017.653-662. DOI:10.1007/978-3-319-70096.

[13] Qiu Y M, Qi Y M, Lu H Y, et al. Does Time Matter? Modeling the Effect of Time with Bayesian Knowledge Tracing. https://www.researchgate.net/publication/221570540_Does_Time_Matter_Modeling_the_Effect_of_Time_with_Bayesian_Knowledge_Tracing, 2021-01-01.

[14] Settles B, Meeder B. A trainable spaced repetition model for language learning. Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics. Berlin: Association for Computational Linguistics, 2016.1848-1858.

[15] Sonkar S, Waters A E, Lan A S, et al. qDKT: Question-Centric Deep Knowledge Tracing. https://arxiv.org/abs/2005.12442?context=cs.LG, 2021-01-01.

[16] Zhao J J, Bhatt S, Thille C, et al. Cold start knowledge tracing with attentive neural turing machine. L@S'20: Seventh (2020) ACM Conference on Learning@Scale. New York: ACM, 2020.333-336.

[17] Pelánek R. Bayesian knowledge tracing, logistic models, and beyond: an overview of learner modeling techniques. User Modeling and User-Adapted Interaction, 2017, 27(3-5): 313-350. DOI:10.1007/s11257-017-9193-2

[18] Ebbinghaus H. Memory: a contribution to experimental psychology. Annals of Neurosciences, 2013, 20(4): 155-156. DOI:10.5214/ans.0972.7531.200408

[19] Ocumpaugh J, Baker R, Gowda S, et al. Population validity for educational data mining models: a case study in affect detection. British Journal of Educational Technology, 2014, 45(3): 487-501. DOI:10.1111/bjet.12156

[20] Elman J L. Finding structure in time. Cognitive Science, 1990, 14(2): 179-211. DOI:10.1016/0364-0213(90)90002-E

2022, Vol. 29
