With the continuous development of clients and servers and the support of network communication protocols, network communication has become increasingly easy. However, the corresponding network security problems are also emerging, which brings instability to cyberspace.

Cross-site scripting (XSS) is one of the most dangerous cyber attacks. The attacker creates malicious scripts and delivers them to ordinary users' clients through the web server. The scripts are then executed in the browser to serve the attacker's purpose.

Cross-site scripting may lead to the following types of attacks: 1) stealing users' identity; 2) denial of service attack; 3) tampering with the web page; 4) simulating user identity to initiate requests or execute command worms, and so on[1].

According to the network attack and defense data released by ChuangYu Cloud Defense Platform[2], among the serious and high-risk vulnerabilities in the network security (cloud security) situation in 2018, cross-site scripting accounted for 27.24%. Therefore, new defense methods are needed to quickly and accurately identify and distinguish malicious cross-site scripting to reduce the harm to web pages[3].

In this paper, malicious cross-site scripts are detected and classified from the perspective of natural language processing. Prediction and classification of malicious scripts are realized by exploiting the learning and prediction ability of the long short-term memory (LSTM) neural network.

The rest of the paper is organized as follows. Section 1 introduces basic information about XSS. Section 2 presents related work. Section 3 describes browser parsing for cross-site scripting. Section 4 introduces cross-site scripting detection techniques. Section 5 introduces the data processing and neural network classification models. Section 6 gives the experimental results and analysis. Section 7 summarizes the paper and outlines future work.

1 Cross-Site Scripting

A cross-site scripting attack exploits a script that returns the user's input value in the HTML page. If the script returns input consisting of JavaScript code in the response page, the browser can execute the entered code. An attacker can therefore craft links to the site in which one of the parameters consists of malicious JavaScript code. This code is executed by the user's browser in the context of the site and can then access the user's cookies and other web pages. The attacker entices legitimate users to click on the crafted link. When the user clicks the link, a request to the website is generated whose parameter value contains the malicious JavaScript code. If the website embeds this parameter value in the response HTML page, the malicious code executes in the user's browser. An example is shown in Fig. 1.

Fig. 1 Cross-site scripting schematic
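The reflection step described above can be illustrated with a minimal Python sketch. The host `example.test`, the parameter name `q`, and both function names are hypothetical and do not come from the paper; the sketch only contrasts embedding a parameter value verbatim with embedding it after HTML escaping.

```python
from html import escape
from urllib.parse import parse_qs, urlparse


def render_unsafe(url: str) -> str:
    """Embed the 'q' parameter in the response page verbatim (vulnerable)."""
    value = parse_qs(urlparse(url).query).get("q", [""])[0]
    return f"<p>Results for {value}</p>"


def render_escaped(url: str) -> str:
    """Embed the 'q' parameter after HTML-escaping it."""
    value = parse_qs(urlparse(url).query).get("q", [""])[0]
    return f"<p>Results for {escape(value)}</p>"


# Hypothetical crafted link whose parameter carries a script payload.
link = "http://example.test/search?q=%3Cscript%3Ealert(1)%3C/script%3E"
print(render_unsafe(link))   # the script tag reaches the page unchanged
print(render_escaped(link))  # the angle brackets are turned into entities
```

In the first case the browser would parse the returned value as a script element; in the second it would display it as text.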

Here are three representations of cross-site scripting:

1) Reflective cross-site scripting

The attacker places the malicious script in a Uniform Resource Locator (URL), so that the malicious information is carried in the URL. When a user accesses the URL link, the backend server responds to the user's request and returns the script. When the response is rendered in the user's browser, the malicious script collects the victim's sensitive information and sends it to the attacker.

2) Storage cross-site scripting

This type of attack lasts for a long time and is harmful. The attacker will save the malicious script to the vulnerable server or database in advance. Due to insufficient filtering, the malicious script can be stored for a long period of time. When users browse the web page containing this malicious script code and interact with the content, the malicious code is extremely easy to trigger and then executes a malicious attack.

3) Document object model (DOM)-based cross-site scripting

This attack utilizes the document object model, a platform-neutral and language-neutral interface that allows programs and scripts to dynamically access and update the content, structure, and style of documents. The malicious code is extracted and executed in the user's browser.

2 Related Work

In recent years, scholars in the field of security have studied cross-site scripting extensively.

From the perspective of network communication security, XSS vulnerability detection methods are mainly divided into static detection methods and dynamic detection methods[4]. Jovanovic et al.[5] designed and implemented a cross-site scripting vulnerability detection tool called Pixy using static analysis, which detects whether tainted data has been sanitized before it is output, thereby determining whether XSS vulnerabilities exist. Pan et al.[6] combined static code analysis based on a taint propagation model with dynamic detection of the sanitization unit, which worked well for detecting XSS vulnerabilities. Parameshwaran et al.[7] dynamically replaced suspicious strings in the client browser, a patch-style defense that prevents XSS attacks from reaching the client.

From the natural language processing perspective, the following work is relevant to preventing cross-site scripting. Le and Mikolov[8] proposed sentence vector and paragraph vector representations in 2014. The sentence vector took one-hot encodings as input, multiplied them by the word matrix, and used averaging and concatenation to obtain the representation of the entire sentence. Finally, the representations were classified to obtain the complete word vector matrix. Paragraph vectors were represented by a paragraph matrix: when related words are entered into a paragraph, the corresponding paragraph representation can be retrieved from the matrix by the number of the paragraph. Liu et al.[9] applied natural language processing ideas to the classification of malicious web pages, performing data cleaning and filling operations and then using a machine learning method to predict malicious samples, which obtained better results. However, the sample data was relatively small, so better results were not easy to obtain.

According to the text characteristics of malicious cross-site scripts, this paper proposes an analysis method based on URL attributes and YARA rules. By using the two methods to preprocess the malicious script data, more irrelevant and invalid information is removed to ensure the validity of data and improve the success rate of analysis.

This paper analyzed the text characteristics of cross-site script statements from the perspective of natural language processing, and vectorized the cross-site script statements according to the semantic sequence of URL by using the method of word vector processing. Finally, the encoding set of word vectors was obtained, which was used to represent the semantic relationship of words in vector space.

3 Cross-Site Scripting for Browser Parsing

Ordinary users communicate via the Internet through a browser. In order to ensure security during communication, the browser filters all parameters before displaying the web page to the user. The filtering methods include: 1) escaping tags containing "html"; 2) removing sensitive "html" scripts.

At the same time, the browser performs three kinds of parsing when processing the HTML document: HTML parsing, JS parsing, and URL parsing. Each parser handles the corresponding content in the HTML document. Generally speaking, after receiving an HTML document requested by a user, the browser performs two steps: tag parsing and DOM tree construction.

3.1 Tag Parsing

The first step in tag parsing is lexical analysis of the HTML document. When the user's browser parses the HTML, it scans the document in the order of the web page tags. When it encounters a file that must be requested from the server, the parser does not always wait for the request to complete at that location but may continue to parse the HTML. Once the requested file has loaded, the parser goes back and parses that file.

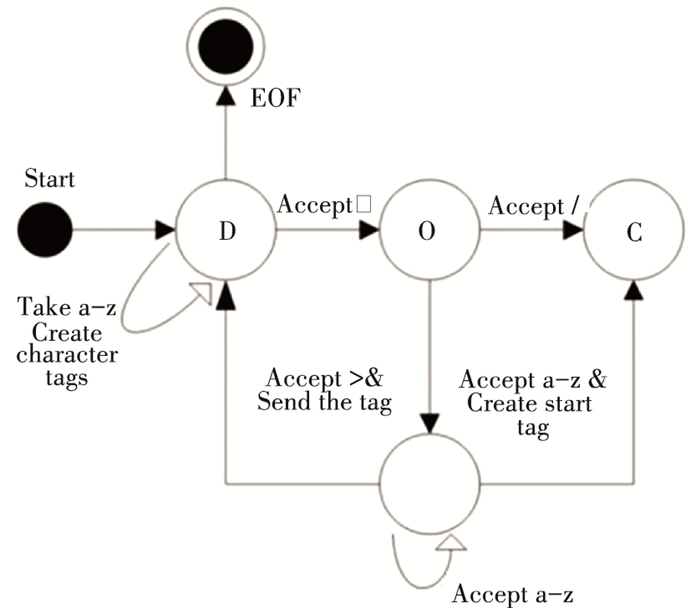

A finite automaton can be used to represent the parsing process of HTML, as shown in Fig. 2.

Fig. 2 Finite automaton representation for HTML parsing

When the parser is in the initial state, the "data state (D)", the following occurs:

1) If the character is "<", the state changes to the "tag open state (O)":

a) The parser begins searching for a tag name. If an a-z character is found, scanning from left to right, a "start tag" token is created and the state changes to the "tag name state (N)". The state does not change until the ">" character is found. When ">" is found, the new token is sent to the tree constructor and the state changes back to the "data state (D)".

b) If the character "/" is found, the state changes to the "close tag open state (C)". If an a-z character follows, the state changes to the "tag name state (N)". When the ">" character is found, the new token is sent to the tree constructor and the state changes back to the "data state (D)".

2) When a-z characters are encountered in the data state, the parser creates a character token for each character and sends it to the tree constructor.

3) If the tags are nested, a recursive search is performed and the above parsing steps are repeated.
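The state transitions above can be sketched as a small tokenizer. This is a simplified illustration, not a standards-compliant HTML tokenizer: the function name `tokenize` and the token labels `start`, `end`, and `char` are assumptions, attributes after the tag name are skipped, and every non-"<" character in the data state is emitted as a character token.

```python
from typing import List, Tuple


def tokenize(html: str) -> List[Tuple[str, str]]:
    """Tokenize HTML with the four states D, O, C, and N described above."""
    tokens = []
    state = "D"          # data state
    name = ""
    closing = False      # True when the current tag began with "</"
    in_name = False      # True while characters still belong to the tag name
    for ch in html:
        if state == "D":
            if ch == "<":
                state = "O"                      # tag open state
            else:
                tokens.append(("char", ch))      # character token
        elif state == "O":
            if ch == "/":
                closing = True
                state = "C"                      # close tag open state
            elif ch.isalpha():
                closing = False
                name, in_name = ch.lower(), True
                state = "N"                      # tag name state
        elif state == "C":
            if ch.isalpha():
                name, in_name = ch.lower(), True
                state = "N"
        else:  # state == "N"
            if ch == ">":
                tokens.append(("end" if closing else "start", name))
                state = "D"                      # back to the data state
            elif in_name and ch.isalnum():
                name += ch.lower()
            else:
                in_name = False                  # attributes are skipped
    return tokens


print(tokenize("<p>hi</p>"))
```

Each `start` or `end` token would be handed to the tree constructor in a full parser.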

3.2 DOM Tree Construction

The DOM tree is constructed while tag parsing is in progress.

A tag stack is used when building the DOM tree. Each HTML tag is pushed onto this stack. When an end tag of the same type as the tag on top of the stack is detected, the pop condition is satisfied and the tag pair at the top of the stack is popped. The popped tags are then added to the DOM tree. The DOM tree builder thus generates tree nodes according to the state of each parsing step.

Each tag therefore appears at its corresponding position in the DOM tree.
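A minimal sketch of stack-based tree construction follows, using Python's standard `html.parser.HTMLParser` for tokenization. The classes `Node` and `TreeBuilder`, the helper `outline`, and the sample markup are hypothetical. As a simplification, a node is attached to its parent when its start tag is seen and popped when the matching end tag arrives; void elements such as `<br>` are not handled.

```python
from html.parser import HTMLParser


class Node:
    def __init__(self, tag):
        self.tag = tag
        self.children = []


class TreeBuilder(HTMLParser):
    def __init__(self):
        super().__init__()
        self.root = Node("#document")
        self.stack = [self.root]          # tag stack; top is the open element

    def handle_starttag(self, tag, attrs):
        node = Node(tag)
        self.stack[-1].children.append(node)   # child of the current open tag
        self.stack.append(node)                # push the new tag

    def handle_endtag(self, tag):
        # Pop only when the end tag matches the tag on top of the stack.
        if len(self.stack) > 1 and self.stack[-1].tag == tag:
            self.stack.pop()


def outline(node, depth=0):
    """Return the tree as indented lines, one tag per line."""
    lines = ["  " * depth + node.tag]
    for child in node.children:
        lines.extend(outline(child, depth + 1))
    return lines


builder = TreeBuilder()
builder.feed("<html><head><title>t</title></head><body><p>x</p></body></html>")
print("\n".join(outline(builder.root)))
```

In the resulting outline, `head` and `body` appear as sibling children of `html`.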

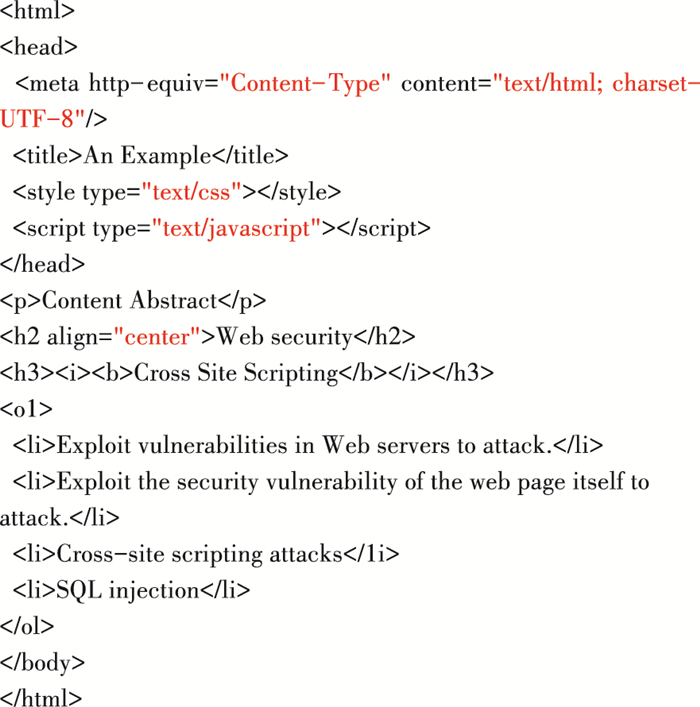

The specific process is shown in Fig. 3, and Fig. 4 illustrates an HTML code example.

Fig. 3 DOM tree construction process

Fig. 4 HTML code

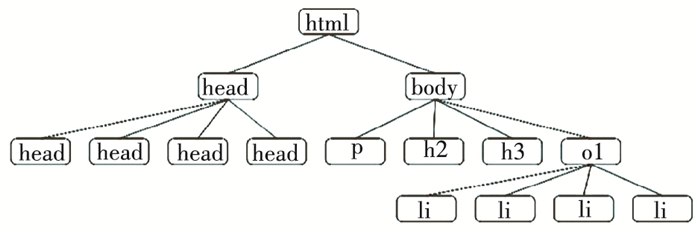

The HTML code shown in Fig. 4 is analyzed from the perspective of building a DOM tree. The first tag is <html>, which contains all other tags and code, so it can be identified as the root of the DOM tree model. Second, it is found that the <head> tag and the <body> tag take up all the space within the <html>, so they are child tags of <html> and are siblings in the tree model. Their tags also contain multiple elements, so they are the parent of other elements. A clear DOM tree model is shown in Fig. 5.

Fig. 5 DOM tree model for HTML code

4 Cross-Site Scripting Detection Techniques

This section analyzes the textual characteristics of cross-site scripts. By analyzing the collected data, the characteristics of cross-site scripting attack are analyzed from three aspects: code injection, code bypass, and code attribute.

This section starts from malicious cross-site scripting attacks and proposes URL-based attribute analysis and YARA rule-based analysis. The sentence structure and attack characteristics of malicious XSS attacks are defined. According to the rule descriptions, malicious cross-site scripts were successfully identified and classified, and good results were achieved in data sorting and cleaning.

4.1 Analysis Based on URL Attributes

According to the types of XSS attacks described in Section 1, attackers usually exploit URL vulnerabilities to perform XSS attacks. Among them, reflective cross-site scripting attacks and DOM-based cross-site scripting attacks are mostly implemented through URL links, including:

1) The attacker uses malicious URL links built by social engineering methods to induce users to click;

2) The attacker uses URL property to call remote malicious files to carry out malicious attacks in the background;

3) The attacker will lurk after stealing user's login information. According to the user's operation, the attacker continuously obtains the user's private information and the user's usage habits data.

In the high-risk areas of an XSS vulnerability, the attacker can host malicious JavaScript on an external host and directly use the "eval()" function that contains the main attack information, such as

eval(<script src="http://hacker.com/xss-malicious.js"></script>)

In the external script, the attacker can write a variety of attack code, which can harm users in multiple ways.

Therefore, continuously detecting and finding suspicious URLs in web pages can effectively prevent cross-site scripting attacks.
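One way to look for suspicious URLs is to decode each query parameter and test it against a few patterns. The sketch below is an assumption-laden illustration: the pattern list `SUSPICIOUS` and the function `suspicious_params` are hypothetical and are not the attribute set used in this paper, and simple patterns like these will produce false positives and miss obfuscated payloads.

```python
import re
from urllib.parse import parse_qsl, unquote, urlparse

# Hypothetical indicators of script injection in a parameter value.
SUSPICIOUS = [
    re.compile(r"<\s*script", re.IGNORECASE),      # script tag
    re.compile(r"javascript\s*:", re.IGNORECASE),  # javascript: URI
    re.compile(r"\bon\w+\s*=", re.IGNORECASE),     # inline event handler
    re.compile(r"\beval\s*\(", re.IGNORECASE),     # eval call
]


def suspicious_params(url: str) -> list:
    """Return the names of query parameters whose values look like scripts."""
    hits = []
    for name, value in parse_qsl(urlparse(url).query, keep_blank_values=True):
        decoded = unquote(value)  # parse_qsl decodes once; decode again for double encoding
        if any(pattern.search(decoded) for pattern in SUSPICIOUS):
            hits.append(name)
    return hits


bad = "http://example.test/?id=7&q=%3Cscript%20src%3Dhttp%3A%2F%2Fhacker.com%2Fxss-malicious.js%3E"
good = "http://example.test/?id=7&q=shoes"
print(suspicious_params(bad))
print(suspicious_params(good))
```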

4.2 Analysis Based on YARA Rule

YARA is an open source tool developed by Victor Alvarez. It is mainly used for malware research and detection and is designed to help information security personnel identify and classify malware samples. It provides a rule-based approach for describing a malware family in terms of textual or binary patterns. YARA identifies malicious files or processes based on the expression logic in the rules and performs pattern matching on suspected software or files based on rules written by information security personnel. If some features of the scanned software or file conform to the description in the rules, the software or file can be initially considered malicious and needs to be isolated.

A YARA rule consists of a rule name, a string (or a hexadecimal string, or a regular expression), and a condition.

The rule name is the name researchers give to a rule for a particular type of malicious file. A brief description of the rule can be written in the meta area, including author information, rule source, rule usage, and sample examples. The string area can hold either hexadecimal strings or text strings. The condition area defines the logical expression of the current YARA rule over the strings in the string area, so it must contain a Boolean expression that determines whether the target file meets the rule. Fig. 6 shows YARA rules based on cross-site scripting data.

Fig. 6 Example of YARA rule

After data collation and analysis, it was found that the number of high-frequency combinations of dangerous keywords and characters in malicious script code is limited. Therefore, combining high-frequency malicious script data with high-frequency dangerous words is considered sufficient for creating YARA rules.
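The rule structure (name, meta area, string area, Boolean condition) can be mimicked in plain Python to show how a condition combines string matches. This is not YARA syntax and does not use the YARA engine; the `Rule` class, the string identifiers, and the example rule are hypothetical and are not the rules compiled for this paper.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class Rule:
    name: str
    strings: Dict[str, str]                   # identifier -> regular expression
    condition: Callable[[Set[str]], bool]     # Boolean expression over matched identifiers
    meta: Dict[str, str] = field(default_factory=dict)

    def matches(self, sample: str) -> bool:
        found = {
            key
            for key, pattern in self.strings.items()
            if re.search(pattern, sample, re.IGNORECASE)
        }
        return self.condition(found)


# Hypothetical rule: a script tag together with alert( or document.cookie.
xss_rule = Rule(
    name="xss_script_injection",
    meta={"description": "illustrative example only"},
    strings={
        "$tag": r"<\s*script",
        "$alert": r"alert\s*\(",
        "$cookie": r"document\.cookie",
    },
    condition=lambda found: "$tag" in found and ("$alert" in found or "$cookie" in found),
)

print(xss_rule.matches("<script>alert(1)</script>"))
print(xss_rule.matches("<b>hello</b>"))
```

Keeping the condition separate from the strings mirrors how a rule can require a combination of high-frequency dangerous keywords rather than any single one.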

5 Data Processing and Neural Network Model

5.1 Data Set

The data set of malicious XSS scripts used in this paper comes from the </xssed> website, which contains more than 40000 samples of malicious XSS attacks. More than 20000 pieces of malicious XSS data were collected for this paper.

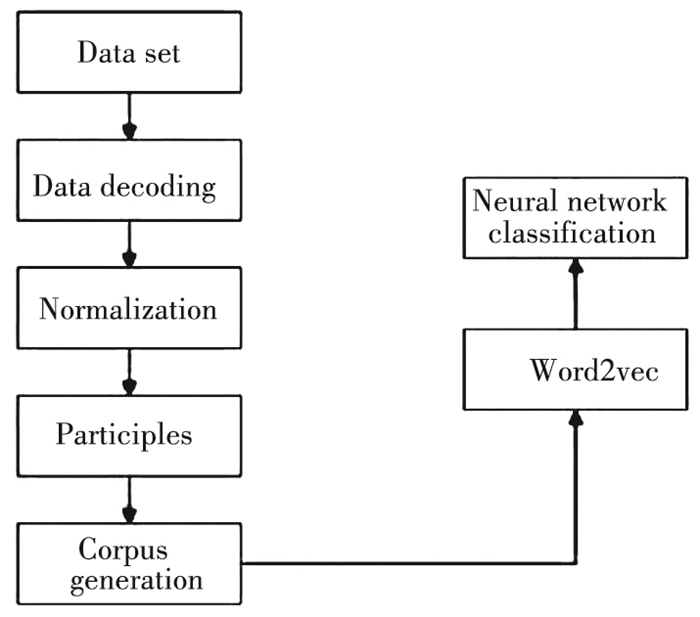

This paper filtered the data using URL attribute analysis and YARA rule analysis combined with manual filtering. At the same time, in order to ensure data security, user data and path information were removed, while the payload portion and the overall statement representation of the malicious script were preserved, as shown in Fig. 7.

Fig. 7 Data processing flow
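The removal of host and path information can be sketched with the standard library. The function `extract_payload` and the sample URL are hypothetical; the exact cleaning steps used for the data set are only summarized in Fig. 7, so this is one plausible reading in which the query string and fragment are treated as the payload.

```python
from urllib.parse import unquote_plus, urlparse


def extract_payload(url: str) -> str:
    """Drop scheme, host, and path; keep the decoded query and fragment."""
    parts = urlparse(url)
    payload = parts.query
    if parts.fragment:
        payload = f"{payload}#{parts.fragment}" if payload else parts.fragment
    return unquote_plus(payload)


sample = "http://victim.test/app/search.php?q=%3Cscript%3Ealert(1)%3C/script%3E"
print(extract_payload(sample))
```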

5.2 Word Vector Processing

The Skip-gram model proposed by Mikolov, combined with the Word2vec word vector processing method, was used in this paper. Vectorization was used to reduce the computational dimension while preserving the interpretability of the script.

Considering that the number of words processed was large, the word vector window size was set to 5 in the process of embedding word vectors. In order to obtain a better word representation, a 128-dimensional vector was used to represent each word. At the same time, to avoid the overfitting problem, the data was reduced. A stochastic gradient descent algorithm was used to optimize the calculation results.
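To make the window setting concrete, the sketch below tokenizes a payload and lists the (center, context) pairs that a Skip-gram model with a window of 5 would train on. The tokenization pattern and the names `split_tokens` and `skipgram_pairs` are assumptions; the paper does not specify its tokenizer, and the embedding training itself (128-dimensional vectors optimized with stochastic gradient descent) is not shown here.

```python
import re

# Hypothetical token pattern: identifiers, numbers, or single punctuation marks.
TOKEN = re.compile(r"[A-Za-z_]\w*|\d+|[^\w\s]")


def split_tokens(payload: str) -> list:
    """Split a script payload into lowercase tokens."""
    return TOKEN.findall(payload.lower())


def skipgram_pairs(tokens: list, window: int = 5) -> list:
    """Pair each token with every neighbor at most `window` positions away."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


tokens = split_tokens("<script>alert(1)</script>")
print(tokens)
print(len(skipgram_pairs(tokens, window=5)))
```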

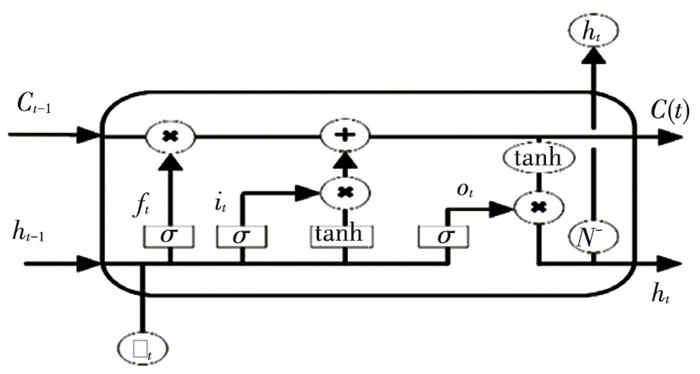

5.3 Neural Network Model Classification

Word2vec was used to vectorize the data and weaken the context representation of cross-site scripts. At the same time, the context relationship between words was preserved, which benefits modeling with the long short-term memory neural network. In this paper, the calculation of the weight of the word set was added to the LSTM neural network, i.e.:

| $ N^{-}=\frac{1}{n} \cdot \sum\limits_{i=1}^n \boldsymbol{X}_t(i) $ | (1) |

where $n$ represents the number of words adjacent to the input word at the current moment and $\boldsymbol{X}_t(i)$ represents the $i$-th word vector adjacent to the input word. The model is shown in Fig. 8, where $\sigma \in [0, 1]$ is the sigmoid activation function used to control the gate units.

Fig. 8 LSTM neural network model

The input status in the current state is

| $ \widetilde{C}_t=\tanh \left(\boldsymbol{W}_C \cdot\left[h_{t-1}, \boldsymbol{x}_t\right]+\boldsymbol{b}_c\right) $ | (2) |

where $\boldsymbol{W}_C$ is the weight matrix of the current input state, $\boldsymbol{x}_t$ is the input value of the LSTM at the current moment, and $\boldsymbol{b}_c$ is the bias of the current input state.

The unit output status at this time is

| $ C_t=f_t \times C_{t-1} \cdot N^{-}+i_t \times \widetilde{C}_t $ | (3) |

After the above calculations, the final output value of LSTM is

| $ h_t=o_t \cdot \operatorname{softmax}\left(C_t\right) $ | (4) |

A basic LSTM unit receives three input signals: the output $h_{t-1}$ of the previous LSTM unit, the memory state $C_{t-1}$ of the previous LSTM unit, and the input value $\boldsymbol{x}_t$ at the current time. The three gates in the LSTM unit control these states as follows.

The forget gate $f_t$ determines how much of the state $C_{t-1}$ is retained in the current state $C_t$;

The input gate $i_t$ determines how much of the input value $\boldsymbol{x}_t$ at the current moment is saved to the current state $C_t$;

The output gate $o_t$ determines how much of the current state $C_t$ is output to the current result value $h_t$.
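Equations (1), (3), and (4) can be traced numerically with a short pure-Python sketch. It assumes that all products in Eqs. (3) and (4) are element-wise and that $N^{-}$ has the same dimension as the cell state; the paper does not state either point. The gate values and the candidate state are passed in as given numbers rather than computed from weights, and the function names are hypothetical.

```python
import math


def neighbor_mean(vectors):
    """Eq. (1): average of the n word vectors adjacent to the input word."""
    n = len(vectors)
    dim = len(vectors[0])
    return [sum(v[k] for v in vectors) / n for k in range(dim)]


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def cell_update(f_t, i_t, o_t, c_prev, c_tilde, n_bar):
    """Eq. (3) and Eq. (4), with element-wise products (an assumption)."""
    c_t = [f * c * n + i * g
           for f, c, n, i, g in zip(f_t, c_prev, n_bar, i_t, c_tilde)]
    h_t = [o * s for o, s in zip(o_t, softmax(c_t))]
    return c_t, h_t


n_bar = neighbor_mean([[0.0, 1.0], [1.0, 0.0]])   # two hypothetical neighbor vectors
c_t, h_t = cell_update(
    f_t=[1.0, 1.0], i_t=[0.0, 0.0], o_t=[1.0, 1.0],
    c_prev=[2.0, 2.0], c_tilde=[0.0, 0.0], n_bar=n_bar,
)
print(n_bar, c_t, h_t)
```

With the forget gate fully open and the input gate closed, the new state is simply the previous state scaled by the neighbor average, which makes the effect of the added term easy to see.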

6 Experiment and Analysis

6.1 Evaluation Method

Cross-site scripting detection is a typical binary classification problem. The evaluation methods used in this paper are shown in Table 1.

Table 1 Experimental evaluation methods

TN indicates the case where normal scripts are classified as normal scripts, FN indicates a case where a malicious script is classified as a normal script, TP indicates the case where a malicious script is classified into a malicious script, FP indicates a case where a normal script is classified into a malicious script.

Classification accuracy (Precision):

| $ P=\frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FP}} $ | (5) |

The recall rate (Recall):

| $ R=\frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FN}} $ | (6) |

Comprehensive representation classification accuracy (F1-score):

| $ \mathrm{F} 1=\frac{2 \times P \times R}{P+R} $ | (7) |

The LSTM neural network was used to classify the data, and the accuracy, recall, and F1-score of the classification were calculated.
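Equations (5) to (7) translate directly into code. The function name and the counts in the usage line are hypothetical and are not results from this paper; a zero denominator is mapped to 0.0 as a convention.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """Compute Eq. (5), Eq. (6), and Eq. (7) from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical counts: 8 malicious scripts caught, 2 false alarms, 2 missed.
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
print(p, r, f1)
```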

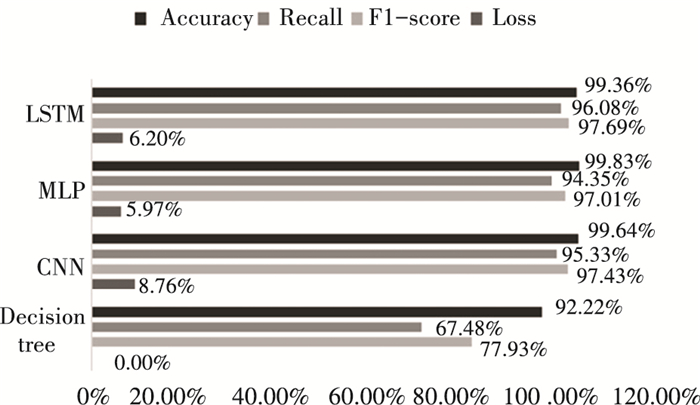

The data in this paper were used in combination with convolutional neural networks and multi-layer perceptron detection methods for experiments. The methods in this paper were compared with the machine learning decision tree method in Ref.[6].

By observing the experimental process and combining with the experimental results shown in Fig. 9, the following findings were obtained:

Fig. 9 Experimental results

1) The LSTM model achieved 98% accuracy and 96% recall in about 100 rounds of experiments. Under the same condition, it is about 2 times faster than both MLP model and CNN model;

2) The decision tree method of machine learning takes less time for model training than neural network model training. But the accuracy of neural network model is generally higher than that of machine learning decision tree algorithm;

3) The decision tree method of machine learning takes less time for model training than neural network model training. But the decision tree method cannot reflect the loss value;

4) The LSTM method in this paper has a low accuracy rate, but a low loss value, and the highest score in recall rate and F1-score. Moreover, recall rate and F1-score can indicate the ability of the model to identify malicious scripts, so it can be proved that the method adopted in this paper has a good effect.

5) The decision tree method of machine learning, combined with the research of Liu et al.[9], shows that the ability of decision tree to process large samples is weak, and the tree structure formed when the data volume is too large is not suitable for the data set calculation in this paper. Therefore, deep learning can train big data sample and obtain more accurate results.

7 Conclusions

This paper analyzed cross-site scripting by attribute analysis and YARA rule classification, and examined it from the perspective of natural language processing. In addition, the calculation of the word encoding set was added to the LSTM neural network model to classify the experimental data. Good results were obtained, which benefits secure network data communication.

The YARA rules compiled in this paper are not comprehensive. Aiming at the collected data, it is necessary to summarize and refine the attack forms of malicious script statements in the subsequent research, continuously optimize the statement processing logic, and improve the script detection capability.

[1] OWASP. OWASP Top 10 2017. http://www.owasp.org.cn/owasp-project/OWASPTop102017v1.1.pdf, 2021-02-09.

[2] KNOWNSEC. KNOWNSEC 2018 Network Security (Cloud Security) Situation Report. https://www.freebuf.com/articles/paper/196002.html.

[3] CNCERT/CC. A Review of China's Internet Security Situation in 2019. https://www.cert.org.cn/publish/main/upload/File/2019-year.pdf, 2021-02-09.

[4] Avancini A, Ceccato M. Towards security testing with taint analysis and genetic algorithms. SESS '10: Proceedings of the 2010 ICSE Workshop on Software Engineering for Secure Systems. New York: Association for Computing Machinery, 2010.65-71. DOI: 10.1145/1809100.1809110.

[5] Jovanovic N, Kruegel C, Kirda E. Pixy: a static analysis tool for detecting web application vulnerabilities. Proceedings of the 2006 IEEE Symposium on Security and Privacy (S&P'06). Piscataway: IEEE, 2006.1042585. DOI: 10.1109/SP.2006.29.

[6] Pan G B, Zhou Y H. XSS vulnerability detection based on static analysis and dynamic analysis. Computer Science, 2012, 39(6A): 51-53+85.

[7] Parameshwaran I, Budianto E, Shinde S, et al. Auto-Patching DOM-Based XSS at Scale. https://www.comp.nus.edu.sg/~hungdang/papers/autopatching.pdf, 2021-02-09.

[8] Le Q, Mikolov T. Distributed representations of sentences and documents. ICML'14. Proceedings of the 31st International Conference on International Conference on Machine Learning. Beijing: Microtome Publishing, 2014. Ⅱ-1188-Ⅱ-1196.

[9] Liu J H, Xu M D, Wang X, et al. A Markov detection tree-based centralized scheme to automatically identify malicious webpages on cloud platforms. IEEE Access, 2018, 6(1): 74025-74038. DOI: 10.1109/ACCESS.2018.2882742.

2022, Vol. 29
