In data mining[1-3], pattern recognition[4-5], and machine learning[6-7], feature selection (FS) has been intensively investigated. Feature selection is the process of picking useful features from a dataset and deleting unnecessary ones. The goal of FS is to retain the features that are most informative for the prediction model, making the model more discriminative and hence more efficient[8].

Big data refers to a combination of techniques that enable large-scale data processing, a context in which traditional knowledge extraction techniques do not work because they were not designed for it. Big data has been described by five key characteristics of data: volume, velocity, variety, veracity, and value[9]. The first three concern the data generation process and how the data are acquired and stored. The last two, veracity and value, relate to data quality and utility. The primary challenges in big data involve computing expertise, domain knowledge, data and its privacy, and data mining. Because of these challenges, data processing and mining procedures are crucial to its development[10-11].

In big data mining, the purpose of the feature selection (FS) method is to select a subset of significant features from the original set while eliminating redundant, irrelevant, and noisy features. Removing ineffective features from the data source lets researchers construct a better model, because such features can confuse the learning algorithm; their removal also reduces memory and computational costs.

Feature selection is a dimensionality reduction approach for high-dimensional data that picks a subset of suitable features for model construction. Because it retains a subset of the original features, their practical interpretations are preserved, which can increase comprehensibility and accessibility[12]. Feature selection keeps the necessary features while deleting irrelevant and unneeded ones, reducing cost without sacrificing performance. Depending on how the search is organized, feature selection techniques are divided into wrapper[13], filter[14], and embedded[15] approaches. The wrapper technique assesses the relevance of features by using the learning model itself: it repeatedly chooses subsets of features and estimates the model's training performance on each chosen subset until a high level of performance is reached. Because it searches the whole search space[16-17], it is slow and therefore less frequently used. Filter techniques do not rely on any learning algorithm; instead, they use intrinsic properties of the data to assess the importance of features[18].
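To make the filter/wrapper distinction concrete, the following minimal Python sketch (standard library only) ranks features with a filter criterion, the absolute Pearson correlation with the class label, and selects features with a wrapper, greedy sequential forward selection driven by a caller-supplied evaluation function. Both criteria are illustrative choices rather than methods prescribed by the cited works, and the function names are hypothetical.

```python
import math
from typing import Callable, List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)


def filter_rank(X: List[List[float]], y: List[float]) -> List[int]:
    """Filter method: rank feature indices by |corr(feature, label)|.

    Only data properties are used; no learning algorithm is trained.
    """
    n_features = len(X[0])
    scores = [abs(pearson([row[j] for row in X], y)) for j in range(n_features)]
    return sorted(range(n_features), key=lambda j: scores[j], reverse=True)


def wrapper_forward(n_features: int,
                    evaluate: Callable[[List[int]], float]) -> List[int]:
    """Wrapper method: sequential forward selection.

    `evaluate(subset)` is assumed to train a model on the given feature
    indices and return a score (higher is better). It is called
    O(n_features^2) times, which is why wrappers are slow on
    high-dimensional data.
    """
    selected: List[int] = []
    best_score = float("-inf")
    while len(selected) < n_features:
        candidates = [j for j in range(n_features) if j not in selected]
        score, j = max((evaluate(selected + [j]), j) for j in candidates)
        if score <= best_score:  # stop when no remaining feature improves the score
            break
        selected.append(j)
        best_score = score
    return selected
```

On the toy data `X = [[1.0, 5.0, 0.0], [2.0, 3.0, 1.0], [3.0, 4.0, 0.0], [4.0, 1.0, 1.0]]` with labels `[0.0, 0.0, 1.0, 1.0]`, `filter_rank` returns `[0, 1, 2]` without training anything, whereas `wrapper_forward` needs a model-based `evaluate` call for every candidate subset it considers.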

Embedded methods perform selection as part of model development and thereby combine the benefits of the filter and wrapper techniques. First, unlike filter methods, they take interactions with the learning process into account; second, because candidate feature subsets do not have to be evaluated repeatedly, they are more efficient than wrapper approaches[19]. Classification benefits data mining because it assigns data to structured groups or classes, which helps with knowledge extraction and planning and allows users to make sensible decisions. Classification proceeds in two stages: first, a learning stage examines a large training data collection; second, the accuracy of the learned classification patterns is tested[20]. A classifier uses a model built from objects with known class labels to determine the class of an unknown object. Several types of classifiers have been used, including neural networks, classification rules, decision trees, and mathematical formulae, as well as KNN and Naïve Bayes classifiers[21]. In this work, a new method is presented to improve the analysis of big data.
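To illustrate the two classification stages, the sketch below uses a k-nearest-neighbor (KNN) classifier, one of the classifiers listed above: the learning stage simply stores labelled training samples, and the testing stage measures the fraction of held-out samples whose predicted class matches the known label. Euclidean distance, majority voting, and k = 3 are our illustrative choices; `knn_predict` and `accuracy` are hypothetical helper names.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Sample = Tuple[List[float], str]  # (feature vector, class label)


def knn_predict(train: Sequence[Sample], x: List[float], k: int = 3) -> str:
    """Predict the class of x by majority vote among its k nearest training samples."""
    # Stage 1 ("learning") for KNN is just keeping `train`; the work happens here.
    neighbors = sorted(train, key=lambda s: math.dist(s[0], x))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


def accuracy(train: Sequence[Sample], test: Sequence[Sample], k: int = 3) -> float:
    """Stage 2: fraction of test samples whose predicted class matches the true class."""
    correct = sum(knn_predict(train, x, k) == label for x, label in test)
    return correct / len(test)
```

Because KNN defers all computation to prediction time, every irrelevant feature directly distorts the distances it relies on, which is one reason feature selection matters for this kind of classifier.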

1 Motivation

Today's datasets are crammed with data from a wide variety of sources. If a dataset contains irrelevant, redundant, and duplicated features that do not benefit the development of a predictive model, the high dimensionality of the data raises the computing cost and reduces the effectiveness of a machine learning framework. With a large number of features, learning models also face the problem of overfitting. Because selecting a suitable feature selection technique is a difficult undertaking, the motivation of this research is to select the subset of features from the dataset that is best suited to the task. By testing alternative combinations of features in a dataset, feature selection algorithms can minimize the number of features needed to build a machine learning model. Feature selection has been widely employed in a variety of applications, including spam email classification, disease diagnosis, fraudulent claim detection, credit risk assessment, text categorization, and microarray data analysis. Preparing high-quality, relevant features that allow the classifier to achieve the highest accuracy is the most important task in building an effective decision-making model. Wrapper-based, filter-based, and embedded feature selection, along with a variety of other traditional strategies, are often used to improve classification performance by selecting an appropriate subset from the feature space. However, these deterministic strategies have significant disadvantages, including high computational cost, limited discriminative power, long training times, classifier-dependent selection, and a greater risk of over-fitting. Consequently, sophisticated feature selection approaches increasingly rely on optimization algorithms to choose a subset of important features for improved classification results.
In most optimization methods, such as the genetic algorithm and particle swarm optimization, several control parameters are tuned for greater efficiency. Optimization is a powerful tool for obtaining the required design parameters and the optimal set of operating conditions: it guides the experimental effort, limits the chance of using irrelevant data, and assists in the difficult process of discovering a good combination of features[22-28]. During the search, optimization algorithms typically rely on a combination of algorithm-specific parameters and common control parameters. These parameters are important in the feature selection process and affect the performance of machine learning models.

The major contributions of this paper are as follows. The paper designs a novel hybrid optimization model, termed quantum leaping GWO with nearest-neighbor memeplexes (QLGWONM), for feature selection. It presents an ablation study showing its efficiency over other existing algorithms and analyzes results on datasets from different domains, which show the improvement achieved by QLGWONM. Finally, the paper compares the designed model with the state of the art to show its efficiency.

The article is organized as follows. Section 2 describes related work and researchers' contributions to feature extraction and reduction. Section 3 describes the system methodology and presents the proposed flowchart and algorithms. Section 4 describes the implementation details, the result analysis, and the comparison with the state of the art. Finally, Section 5 presents the conclusion and future work.

2 Related Work

Fahy and Yang[22] proposed dynamic feature masking for clustering large volumes of high-dimensional data. Redundant features are masked, and clustering is performed on the unmasked, useful features. The mask changes as the perceived significance of each feature varies: formerly insignificant features that become significant are unmasked, and features that lose significance are masked. In each scenario, the proposed dynamic feature mask increases clustering efficiency while reducing the processing time of the underlying algorithm. The reported F-score is 0.62, CMM is 0.87, and purity is 0.93.

Manoj et al.[23] combined ant colony optimization (ACO) and an artificial neural network (ANN) to construct a feature selection method for text classification. The effectiveness of this hybrid technique was demonstrated on the Reuters dataset, where the ACO-ANN hybrid successfully solved the feature selection problem. It has also been applied in a big data context, and the results have been examined. The reported F-1 score is 89.87 and the precision is 81.35%. Precision and recall are 77.34 and 80.14, respectively.

Barddal et al.[24] presented Adaptive Boosting for Feature Selection (ABFS), a novel dynamic FS approach for data streams. It extends feature-selection-specific statistics from batch learning to streaming contexts. The reported accuracy of ABFS-HAT is 89.01%.

Kushwaha and Pant[25] proposed link-based particle swarm optimization (LBPSO) as a novel feature selection method for unsupervised text clustering. To select important features, this technique introduces a novel neighbor selection mechanism in binary PSO (BPSO). According to their assessment metrics, LBPSO performs better than other PSO-based algorithms.

Rashid et al.[26] investigated the influence of the cooperative co-evolutionary technique for feature selection on six commonly used ML classification algorithms. The performance of the classifiers was measured with and without feature selection. When all of the dataset's features were retained, SVM outperformed LR in most situations, whereas LR surpassed SVM in others.

Rashid et al.[26] also examined the use of cooperative co-evolution (CC) with dynamic decomposition for FS. They presented a random feature grouping approach for feature selection using CC and tested six machine learning classifiers on seven datasets. The trials revealed that the FS procedure had no substantial impact on the classifiers' performance. On the QSAR Oral Toxicity Dataset, the proposed NB+CCEAFS achieves an accuracy, specificity, and sensitivity of 87.79%, 91.20%, and 49.80%, respectively.

AlFarraj et al.[27] presented a strategy that combines feature selection optimization with soft computing techniques to reduce the dimensionality of the dataset. The firefly gravitational ant colony optimization (FGACO) technique was then used to choose the optimal features. During the selection process, this optimized FS analyzes both the quality and the relevance of each feature. The reported average effectiveness of the feature selection approach is 98.4625%.

Onan[29] proposed a new deep neural network architecture that combines convolutional and recurrent neural networks. Its group-wise enhancement mechanism differs from other attention mechanisms in that it seeks to promote the learning of diverse sub-features within each group while also expanding their spatial distribution. To extract high-level features and reduce the dimensionality of the feature space, the proposed scheme uses convolution and pooling layers.

Onan[30] introduced an approach to highly imbalanced learning based on consensus clustering and under-sampling. Five clustering algorithms (k-means, k-modes, k-means++, self-organizing maps, and the DIANA algorithm) and their combinations are considered in the consensus clustering schemes.

Onan[31] analyzed the performance of five statistical keyword extraction methods (most-frequent-measure-based keyword extraction, term frequency-inverse sentence frequency-based keyword extraction, co-occurrence statistical information-based keyword extraction, eccentricity-based keyword extraction, and the TextRank algorithm) combined with classification algorithms and ensemble methods for scientific text classification. The study compares five widely used ensemble techniques (AdaBoost, Bagging, Dagging, Random Subspace, and Majority Voting) with five base learning algorithms (Naïve Bayes, support vector machines, logistic regression, and Random Forest).

Onan[32] proposed an enhanced word embedding scheme that combines word vectors obtained by the word2vec, POS2vec, word-position2vec, and LDA2vec schemes. The scheme employs a two-stage procedure in which word embedding schemes are combined with cluster analysis.

Previtali et al.[33] proposed an ensemble strategy for feature selection that combines the individual feature lists obtained by various feature selection methods to produce a more robust and efficient feature subset. A genetic algorithm is used to aggregate the individual feature lists.

Onan et al.[34] proposed a hybrid ensemble pruning framework based on clustering and randomized search. To deal with the instability of clustering results, a consensus clustering scheme is also presented. The ensemble's classifiers are first grouped according to their predictive characteristics.

Onan[35] presented an architecture that combines a CNN-LSTM model with TF-IDF-weighted GloVe word embeddings. The combination of CNN with LSTM proved effective.

Onan[36] developed an efficient sentiment classification scheme with high predictive performance for MOOC reviews. Selecting a suitable representation scheme is a crucial challenge in developing machine-learning-based sentiment classification schemes.

Onan[37] performed an extensive comparison of different feature extraction strategies (such as authorship attribution features, linguistic features, character n-grams, part-of-speech n-grams, and the frequency of the most discriminative words) with five base learners (Naïve Bayes, support vector machines, logistic regression, k-nearest neighbor, and Random Forest) in conjunction with ensemble learning methods (such as Boosting, Bagging, and Random Subspace).

Following the paradigms of neural language models and deep neural networks, Onan and Tocoglu[38] proposed an effective sarcasm detection framework for social media data. A term-weighted word embedding scheme based on inverse gravity moment is introduced.

Onan[39] proposed a deep learning-based method for detecting sarcasm, in which the predictive performance of a topic-enriched word embedding scheme is compared with that of conventional word embedding schemes (such as word2vec, fastText, and GloVe). Word embedding-based representations combined with lexical, pragmatic, implicit incongruity, and explicit incongruity-based features can produce impressive results.

Onan et al.[40] proposed an efficient multiple classifier system for text classification based on swarm-optimized topic modeling. Latent Dirichlet allocation (LDA) can address the high dimensionality of the vector space model, but finding the right parameter values is crucial to its success.

Jiménez et al.[41] proposed a novel multimodal feature selection approach based on multi-objective evolutionary algorithms for both the search strategy and the classification algorithm.

Xu et al.[42] presented a duplication analysis-based evolutionary algorithm (DAEA) for bi-objective feature selection in classification. They improved on the basic dominance-based EA framework in three ways. The approach was compared with five state-of-the-art multi-objective EAs (MOEAs) on 20 classification datasets using two commonly used performance indicators.

Xue et al.[43] gave a comprehensive survey of the state of the art in evolutionary computation (EC) for feature selection, identifying the contributions of the various methods. In addition, current issues and challenges are discussed to identify opportunities for future research.

Xue et al.[44] presented multi-objective particle swarm optimization (PSO) for feature selection, with the goal of building a Pareto front of nondominated solutions. The researchers studied two PSO-based multi-objective feature selection methods. On 12 benchmark datasets, the two multi-objective techniques were compared with two conventional feature selection methods, a single-objective feature selection method, a two-stage feature selection method, and three well-known evolutionary multi-objective algorithms.

Liang and Ma[45] presented a feature selection technique based on a many-objective optimization algorithm (FS-MOEA) for intrusion detection systems (IDSs) in VANETs, where the many-objective optimizer is an adaptive non-dominated sorting genetic algorithm-III (A-NSGA-III). Two enhancements are developed in FS-MOEA: Bias and Weighted (B&W) niche preservation and Information Gain (IG)-Analytic Hierarchy Process (AHP) prioritization.

Tian et al.[46] proposed an evolutionary algorithm for solving large-scale sparse multi-objective optimization problems (MOPs). To ensure the sparsity of the obtained solutions, the approach introduces a new population initialization strategy and genetic operators that take the sparse nature of the Pareto-optimal solutions into account. Table 1 summarizes contributions reported for different optimization algorithms.

| Table 1 Contribution of researchers for optimization based feature selection methods |

3 Proposed Methodology

FS is the process of creating a new dataset that is free of duplicated or unneeded features[52-55]. It also preserves the structure of the initial data, so that no critical information is lost or compromised. When there are many samples and many features, feature selection techniques become especially important. Practitioners use these strategies extensively because they effectively reduce dimensionality. FS is a way to find features that quickly and accurately characterize the original dataset.
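Because the proposed method searches over feature subsets, it helps to fix how a subset and its quality are represented. The sketch below encodes a subset as a binary mask over the original features and scores it with a weighted wrapper fitness (lower is better). This section does not state QLGWONM's fitness function, so the weighted error-plus-subset-size form and the weight alpha = 0.99 are assumptions taken from common practice in metaheuristic feature selection; `apply_mask` and `fs_fitness` are hypothetical names.

```python
from typing import List, Sequence


def apply_mask(X: Sequence[Sequence[float]], mask: Sequence[int]) -> List[List[float]]:
    """Return the reduced dataset: keep only the columns whose mask bit is 1."""
    return [[v for v, keep in zip(row, mask) if keep] for row in X]


def fs_fitness(error_rate: float, mask: Sequence[int], alpha: float = 0.99) -> float:
    """Assumed feature-selection fitness (lower is better).

    Combines the classifier's error rate on the reduced data with the
    fraction of features kept; alpha (an assumption) weights accuracy
    far above subset size.
    """
    n_selected = sum(mask)
    if n_selected == 0:
        return float("inf")  # an empty subset cannot be used to train a classifier
    return alpha * error_rate + (1 - alpha) * n_selected / len(mask)
```

For example, `fs_fitness(0.1, [1, 0, 1, 0])` evaluates to 0.99 × 0.1 + 0.01 × 0.5 = 0.104, so between two subsets with equal error the smaller one is preferred.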

Particle swarm optimization (PSO), artificial bee colony (ABC), and the genetic algorithm (GA) are three prominent meta-heuristic optimization approaches that have been used to search for relevant and optimal features, although they have limitations such as becoming trapped in local optima[56]. The Grey Wolf Optimizer (GWO), developed in Ref.[57], is a newer optimization algorithm that mimics the leadership hierarchy of grey wolves, which are well known for hunting in groups. The PSO[57] and GA[58] algorithms converge slowly during the iterative process and easily fall into local optima in high-dimensional spaces. By contrast, GWO requires only a few parameters and is simple to implement, which gives it an advantage over these earlier algorithms. Thanks to its conceptual simplicity, fast search speed, high search accuracy, and ease of implementation, GWO is more readily applied to practical engineering problems, and it therefore has significant theoretical research value[59]. Because GWO is a relatively new bio-inspired intelligence algorithm, research on it is still at an early stage and its theory is not yet mature. Further exploration and research are needed to make the algorithm perform even better[59].

This research aims to provide a framework for addressing the processing challenges of massive datasets through dimensionality reduction based on quantum leaping GWO with nearest-neighbor memeplexes.

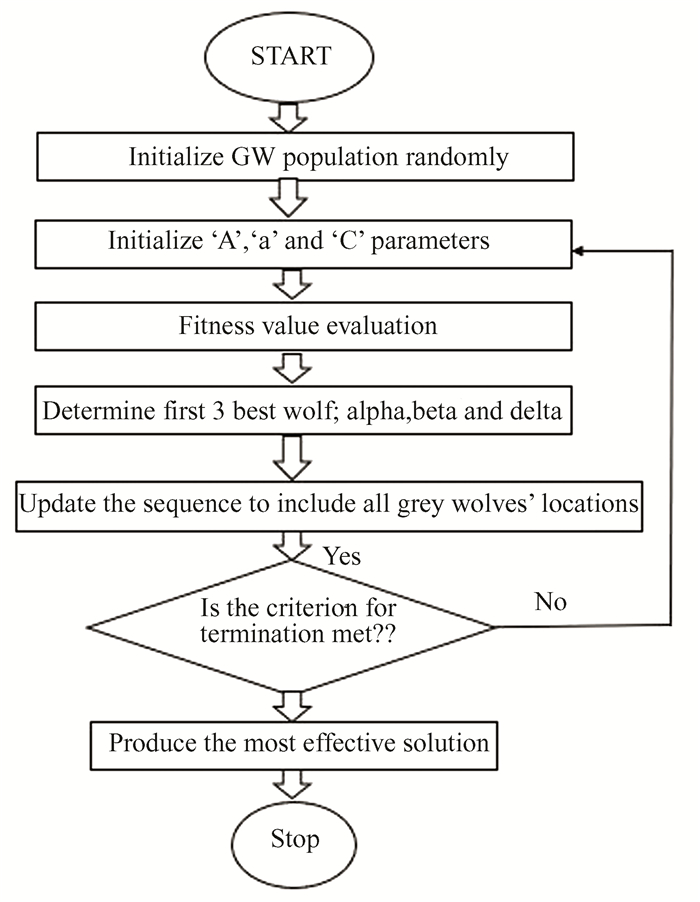

Because of overexploitation, many enhanced GWO methods tend to become trapped in local optima as the dimensionality of the search space grows, which reduces their efficiency. Exploration is an effective way to learn more about the global optimum; extensive exploration, however, degrades the quality of the chosen wolf's search. We therefore present quantum leaping GWO with nearest-neighbor memeplexes (QLGWONM) to better balance exploration and exploitation in GWO for the fuzzy attribute reduction of complex big data. The baseline GWO procedure on which QLGWONM builds is shown in Fig. 1. QLGWONM uses a coordinate rotation gate and a dynamic rotation angle technique to explore the search space, locate the global best region during fuzzy attribute reduction, and avoid premature convergence.

Fig. 1 Flowchart of Algorithm 1: GWO
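The text names a rotation gate and a dynamic rotation angle but gives neither formula. The sketch below shows the rotation gate as it is commonly used in quantum-inspired evolutionary algorithms: each Q-bit (alpha, beta) is rotated by a 2 × 2 rotation matrix, which preserves alpha² + beta² = 1, in the direction that moves it toward a target bit (for example, the corresponding bit of the best wolf). The linearly decreasing angle schedule and its bounds (0.05π down to 0.001π) are our assumptions, not values from the paper, and the function names are hypothetical.

```python
import math
from typing import Tuple

THETA_MAX = 0.05 * math.pi   # assumed largest rotation angle (early iterations)
THETA_MIN = 0.001 * math.pi  # assumed smallest rotation angle (late iterations)


def dynamic_angle(t: int, max_iter: int) -> float:
    """Hypothetical linear schedule: large rotations early, small rotations late."""
    return THETA_MAX - (THETA_MAX - THETA_MIN) * t / max_iter


def rotation_sign(alpha: float, beta: float, target_bit: int) -> int:
    """Direction (+1 counterclockwise, -1 clockwise, 0 none) that moves the Q-bit toward target_bit."""
    if target_bit == 1 and alpha == 0:
        return 0  # already |beta|^2 = 1
    if target_bit == 0 and beta == 0:
        return 0  # already |alpha|^2 = 1
    s = 1 if alpha * beta >= 0 else -1  # +theta raises |beta|^2 when alpha*beta > 0
    return s if target_bit == 1 else -s


def rotate_qbit(alpha: float, beta: float, theta: float) -> Tuple[float, float]:
    """Apply the rotation gate [[cos, -sin], [sin, cos]]; alpha^2 + beta^2 is preserved."""
    c, s = math.cos(theta), math.sin(theta)
    return c * alpha - s * beta, s * alpha + c * beta


def update_qbit(alpha: float, beta: float, target_bit: int,
                t: int, max_iter: int) -> Tuple[float, float]:
    """Rotate one Q-bit toward target_bit by the angle scheduled for iteration t."""
    theta = rotation_sign(alpha, beta, target_bit) * dynamic_angle(t, max_iter)
    return rotate_qbit(alpha, beta, theta)
```

With this schedule, early iterations move Q-bits quickly (exploration) and later iterations make only fine adjustments (exploitation), which is the balance the dynamic rotation angle is meant to provide.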

Fuzzy attribute reduction has received a lot of attention during the last decade. Most big data now exists in expanding databases with extremely high-dimensional features and dynamic, diversified structures. While fuzzy attribute reduction methods have shown promise, fuzzy attribute reduction for big data has received significantly less attention. When large volumes of new data are added to a single database at the same time, the required computing effort and storage space grow far more sharply. This can jeopardize existing attribute reduction procedures. As a result, typical fuzzy attribute reduction techniques are inaccurate when dealing with enormous, constantly evolving datasets with more complicated fuzzy structures.

The following goals must be met for optimal control of dynamic feature selection: maximizing accuracy and precision, minimizing time, minimizing overall computational cost, and minimizing the error rate. To meet these objectives, quantum leaping Grey Wolf Optimization (GWO) with nearest-neighbor memeplexes (QLGWONM) is proposed. The steps of the underlying GWO algorithm and of the proposed quantum leaping-based GWO feature selection methodology are given below:

Algorithm 1: GWO

1: Initialize the generation counter t and the grey wolf population Xi (i = 1, 2, ..., n)

2: Initialize the parameters a, A, and C

3: Compute the fitness of every wolf

4: Xα = the best wolf

5: Xβ = the second-best wolf

6: Xδ = the third-best wolf

7: while (t < Max_iterations)

8: for each wolf in the population, ranked by fitness

9: Update the position of the current wolf using X(t + 1) = (X1 + X2 + X3)/3, where X1, X2, and X3 are the positions guided by Xα, Xβ, and Xδ, respectively

10: end for; update the parameters a, A, and C

11: Compute the fitness of all wolves

12: Update Xα, Xβ, and Xδ

13: Replace the worst-fitting wolf with the best-fitting wolf

14: t = t + 1

15: end while

16: Return Xα
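For readers who want to experiment, the following Python sketch implements the standard GWO position update behind Algorithm 1 for continuous minimization, including the worst-wolf replacement of step 13. It is an illustrative sketch, not the authors' MATLAB implementation: the search bounds, population size, seed, and the sphere test function mentioned below are our assumptions, and applying it to feature selection would additionally require a binarization step (for example, thresholding each coordinate) that Algorithm 1 does not specify.

```python
import random
from typing import Callable, List


def gwo(cost: Callable[[List[float]], float], dim: int, n_wolves: int = 20,
        max_iter: int = 100, lb: float = -10.0, ub: float = 10.0,
        seed: int = 0) -> List[float]:
    """Minimize `cost` over [lb, ub]^dim with the standard Grey Wolf Optimizer."""
    rng = random.Random(seed)
    wolves = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_wolves)]
    for t in range(max_iter):
        wolves.sort(key=cost)  # rank by fitness (lower cost is better)
        alpha, beta, delta = wolves[0][:], wolves[1][:], wolves[2][:]
        a = 2.0 - 2.0 * t / max_iter  # a decreases linearly from 2 toward 0
        new_wolves = []
        for x in wolves:
            new_x = []
            for j in range(dim):
                guided = []
                for leader in (alpha, beta, delta):
                    A = 2 * a * rng.random() - a
                    C = 2 * rng.random()
                    D = abs(C * leader[j] - x[j])
                    guided.append(leader[j] - A * D)  # X1, X2, X3
                xj = sum(guided) / 3.0  # X(t + 1) = (X1 + X2 + X3) / 3
                new_x.append(min(max(xj, lb), ub))  # keep inside the search bounds
            new_wolves.append(new_x)
        # Step 13: replace the worst wolf with a copy of the current best wolf.
        new_wolves.sort(key=cost)
        new_wolves[-1] = new_wolves[0][:]
        wolves = new_wolves
    return min(wolves, key=cost)
```

Calling `gwo(lambda x: sum(v * v for v in x), dim=5)` minimizes the sphere function. The linear decrease of `a` shifts the pack from exploration (|A| > 1, wolves may move away from the leaders) to exploitation (|A| < 1, wolves close in on them).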

In QLGWONM, the worst wolf is instead updated with probabilistic weights through quantum leaping within its nearest-neighbor memeplex (NNM).
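The paper does not spell out the leaping rule, so the sketch below is only one plausible reading under stated assumptions: it borrows the worst-member leap of the shuffled frog leaping algorithm (SFLA), where memeplexes originate, and adapts it to binary wolf positions. The Hamming-distance neighborhood, the neighborhood size k, the uniform random weight, and greedy acceptance are all our assumptions, and `memeplex_leap` is a hypothetical helper, not the authors' implementation.

```python
import random
from typing import Callable, List

Bits = List[int]  # binary wolf position: 1 = feature selected


def hamming(a: Bits, b: Bits) -> int:
    """Number of positions where two binary wolves differ."""
    return sum(x != y for x, y in zip(a, b))


def memeplex_leap(wolves: List[Bits], cost: Callable[[Bits], float],
                  k: int = 3, seed: int = 0) -> List[Bits]:
    """Hypothetical SFLA-style leap of the worst wolf inside its nearest-neighbor memeplex."""
    rng = random.Random(seed)
    worst_idx = max(range(len(wolves)), key=lambda i: cost(wolves[i]))
    worst = wolves[worst_idx]
    # Memeplex: the k wolves closest to the worst one in Hamming distance.
    others = [w for i, w in enumerate(wolves) if i != worst_idx]
    memeplex = sorted(others, key=lambda w: hamming(w, worst))[:k]
    leader = min(memeplex, key=cost)
    weight = rng.random()  # assumed probabilistic weight in [0, 1)
    # Each differing bit is copied from the leader with probability `weight`,
    # a binary analogue of SFLA's X_new = X_worst + r * (X_best - X_worst).
    candidate = [b if a != b and rng.random() < weight else a
                 for a, b in zip(worst, leader)]
    result = [w[:] for w in wolves]
    if cost(candidate) < cost(worst):  # greedy acceptance (assumption)
        result[worst_idx] = candidate
    return result
```

Restricting the leader to the worst wolf's nearest neighbors, rather than the global best, keeps the leap local, which is one way such a scheme could preserve diversity while still pulling weak wolves toward better regions.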

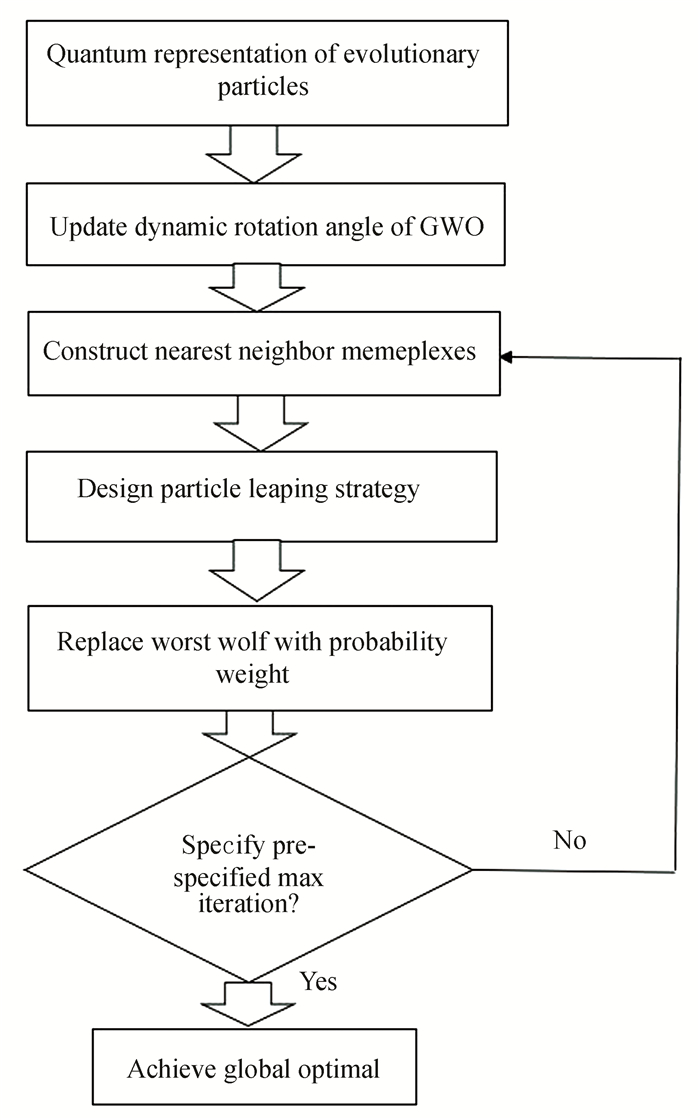

We propose QLGWONM to construct reduced fuzzy attribute subsets by means of quantum representations, rotation mechanisms, and the wolf leaping approach described above. Algorithm 2 describes the basic steps (as shown in Fig. 2). QLGWONM uses the nearest-neighbor memeplex leaping search to find the globally optimal wolf position with a proper balance of exploration and exploitation. Each quantum particle, or wolf, in QLGWONM can obtain sufficient information about all quantum particles in its nearest-neighbor memeplex (NNM), which helps improve its search efficiency and the reduction of fuzzy attribute subsets.

Fig. 2 QLGWONM steps (Algorithm 2)

Algorithm 2: QLGWONM

1. Initialize the positions and the Q-bits. Set the initial probability amplitudes $\alpha_{ij}^{0}$ and $\beta_{ij}^{0}$ of the states of the $j$-th element of the $i$-th quantum particle to $1/\sqrt{2}$, so that each Q-bit represents an equal-probability superposition of its states.

2. Generate a random number $R_{ij}^{t} \in [0, 1]$. Set the binary value $x_{ij}^{0}$ to 1 if $R_{ij}^{t} < 1/2$; otherwise, set it to 0. Initialize Bestfitwolf as the position of the quantum particle with the lowest cost, and Initfitwolf as the starting position of every quantum particle (wolf).

3. Apply the particle leaping technique in the NNM and use the probabilistic weight $\omega_i$ to obtain the position vector $X_i^{k}$ of the $i$-th weakest quantum particle at the $k$-th iteration.

4. Adjust the magnitude of the quantum rotation angle and update each Q-bit with its rotation angle.

5. In the updated NNM, if the cost of $X_i^{k+1}$ is lower than that of $\text{Initfitwolf}_i^{k}$, set $\text{Initfitwolf}_i^{k+1} = X_i^{k+1}$; otherwise, keep $\text{Initfitwolf}_i^{k+1} = \text{Initfitwolf}_i^{k}$.

6. If the current iteration reaches the predetermined maximum, stop; otherwise, go to Step 3.
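Steps 1 and 2 of Algorithm 2 can be sketched as follows, assuming the usual quantum-inspired convention that a Q-bit collapses to 1 with probability beta²; with the initial amplitudes 1/√2 this reduces to the rule in Step 2 (x = 1 if R < 1/2). Here a 1 is read as "feature selected"; `init_qbits` and `observe` are hypothetical names.

```python
import math
import random
from typing import List, Tuple

QBit = Tuple[float, float]  # (alpha, beta) amplitudes with alpha^2 + beta^2 = 1


def init_qbits(n_particles: int, n_features: int) -> List[List[QBit]]:
    """Step 1: every Q-bit starts at alpha = beta = 1/sqrt(2) (equal superposition)."""
    amp = 1 / math.sqrt(2)
    return [[(amp, amp) for _ in range(n_features)] for _ in range(n_particles)]


def observe(qbits: List[List[QBit]], rng: random.Random) -> List[List[int]]:
    """Step 2: collapse each Q-bit to a binary feature flag.

    A bit becomes 1 (feature kept) with probability beta^2, i.e. when a
    uniform random number falls below beta^2.
    """
    return [[1 if rng.random() < beta ** 2 else 0 for _, beta in particle]
            for particle in qbits]
```

After the rotation gate of Step 4 shifts the amplitudes, the same `observe` call yields binary positions biased toward the bits of better wolves, which is how the quantum representation turns into candidate feature subsets.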

4 Results and Discussion

This section presents the implementation details and result analysis. Subsection 4.1 describes the implementation details of the designed algorithm, 4.2 describes the datasets used in this study, 4.3 presents the ablation study, and 4.4 presents the comparison with the state of the art.

4.1 Implementation Details

For performance evaluation of the proposed model, we simulated the scenario on the MATLAB platform. We also implemented the optimization algorithms used for comparison in this paper, namely PSO, SMA, WOA, GWO, SSA, ABA, Jaya, and CSA. Performance is evaluated with accuracy, recall, precision, and F-1 score, calculated as follows:

| $\text{Accuracy}=\frac{\text{True\_Positive}+\text{True\_Negative}}{\text{True\_Positive}+\text{True\_Negative}+\text{False\_Positive}+\text{False\_Negative}}$ | (1) |

| $\text{Recall}=\frac{\text{True\_Positive}}{\text{True\_Positive}+\text{False\_Negative}}$ | (2) |

| $\text{Precision}=\frac{\text{True\_Positive}}{\text{True\_Positive}+\text{False\_Positive}}$ | (3) |

| $\text{F-1 score}=\frac{2 \cdot \text{Recall} \cdot \text{Precision}}{\text{Recall}+\text{Precision}}$ | (4) |
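Equations (1)-(4) translate directly into code. The minimal Python sketch below computes all four metrics from confusion-matrix counts; returning 0.0 when a denominator is zero is our convention, not something the paper specifies, and `classification_metrics` is a hypothetical name.

```python
from typing import Dict


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Accuracy, recall, precision, and F-1 score from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)        # Eq. (1)
    recall = tp / (tp + fn) if tp + fn else 0.0       # Eq. (2)
    precision = tp / (tp + fp) if tp + fp else 0.0    # Eq. (3)
    f1 = (2 * recall * precision / (recall + precision)
          if recall + precision else 0.0)             # Eq. (4)
    return {"accuracy": accuracy, "recall": recall,
            "precision": precision, "f1": f1}
```

For instance, `classification_metrics(tp=8, tn=5, fp=2, fn=1)` gives accuracy 0.8125, precision 0.8, recall ≈ 0.889, and F-1 ≈ 0.842.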

4.2 Dataset Description

In this paper, five datasets from different domains are selected: brain tumor, central nervous system (CNS), lung cancer, ionosphere, and Network Security Laboratory Knowledge Discovery in Databases (NSL_KDD). The lung cancer dataset contains gene expression data for two classes, adenocarcinoma (ADCA) and malignant pleural mesothelioma (MPM), with 150 and 31 samples, respectively, described by 12533 genes[60]. The CNS dataset contains 60 samples with 7129 features[61]. The ionosphere dataset contains radar data collected from the ionosphere[62]. The NSL-KDD dataset is used for intrusion detection and was obtained from [63]. The brain tumor dataset includes five first-order features and eight texture features with a class label and was obtained from [64].

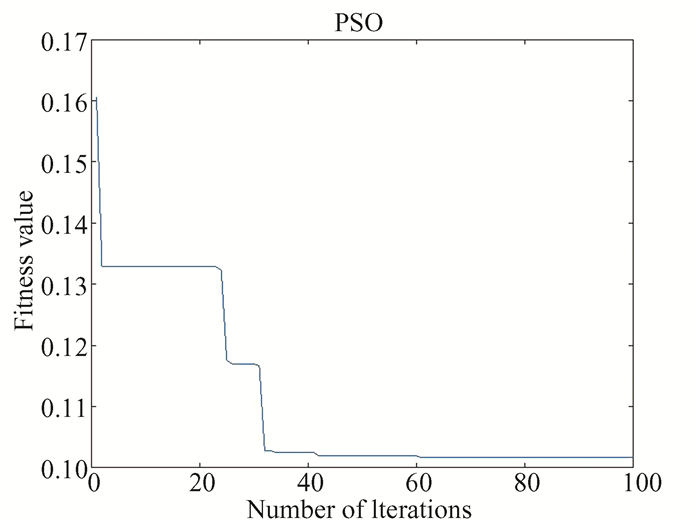

4.3 Ablation Study

This subsection analyzes how the fitness value changes with the number of iterations for each algorithm, together with its accuracy on the five datasets. Fig. 3 shows the fitness value (FV) versus the number of iterations for the PSO algorithm. The fitness decreases from 0.16 to 0.10 over 100 iterations and remains almost constant after 30 iterations. The accuracy of particle swarm optimization (PSO) is evaluated on five datasets: brain tumor, central nervous system (CNS), lung cancer, ionosphere, and NSL_KDD. PSO achieves an accuracy of 0.8333 for brain tumor, 0.975 for lung cancer, 0.975 for ionosphere, and 0.970 for NSL_KDD. Table 2 compares the accuracy of all methods. The proposed method achieved the highest or joint-highest accuracy on four of the five datasets. Compared with the PSO, SMA, WOA, SSA, ABA, Jaya, and CSA models, the proposed model achieved an accuracy of 100.0% on the brain tumor, CNS, and lung cancer datasets, 97.1% on the ionosphere dataset, and 99.0% on NSL-KDD.

Fig. 3 FV vs. number of iterations for the PSO algorithm

| Table 2 Comparison of accuracy evaluation |

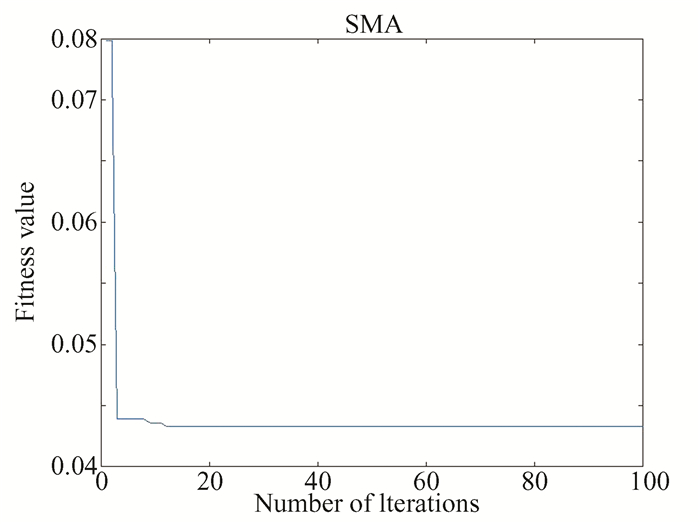

Fig. 4 shows FV versus the number of iterations for the SMA model. The fitness decreases from 0.08 to 0.05 over 100 iterations and remains almost constant after 5 iterations. The accuracy of SMA is evaluated on the five datasets: brain tumor, CNS, lung cancer, ionosphere, and NSL_KDD. SMA achieves an accuracy of 0.888 for brain tumor, 0.916 for CNS, 0.95 for lung cancer, 0.928 for ionosphere, and 0.96 for NSL_KDD.

Fig. 4 FV vs. number of iterations for the SMA algorithm

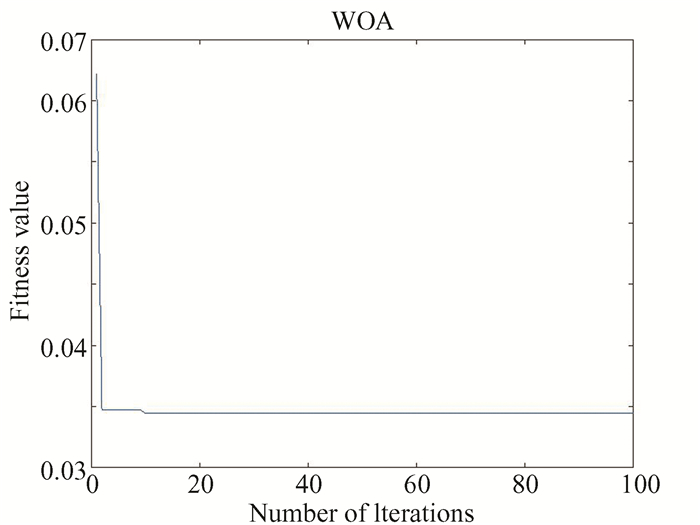

Fig. 5 shows FV versus the number of iterations for the WOA model. The fitness decreases from 0.065 to 0.03 over 100 iterations and remains almost constant after 5 iterations. The accuracy of WOA is evaluated on the five datasets: brain tumor, CNS, lung cancer, ionosphere, and NSL_KDD. WOA achieves an accuracy of 0.888 for brain tumor, 0.916 for CNS, 0.950 for lung cancer, 0.975 for ionosphere, and 0.95 for NSL_KDD.

Fig. 5 FV vs. number of iterations for the WOA model

Fig. 6 shows FV versus the number of iterations for the GWO model. The fitness decreases from 0.135 to 0.03 over 100 iterations and remains almost constant after 20 iterations. The accuracy of GWO is evaluated on the five datasets: brain tumor, CNS, lung cancer, ionosphere, and NSL_KDD. GWO achieves an accuracy of 0.944 for brain tumor, 1.000 for CNS, 0.975 for lung cancer, 0.957 for ionosphere, and 0.980 for NSL_KDD.

Fig. 6 FV vs. number of iterations for the GWO algorithm

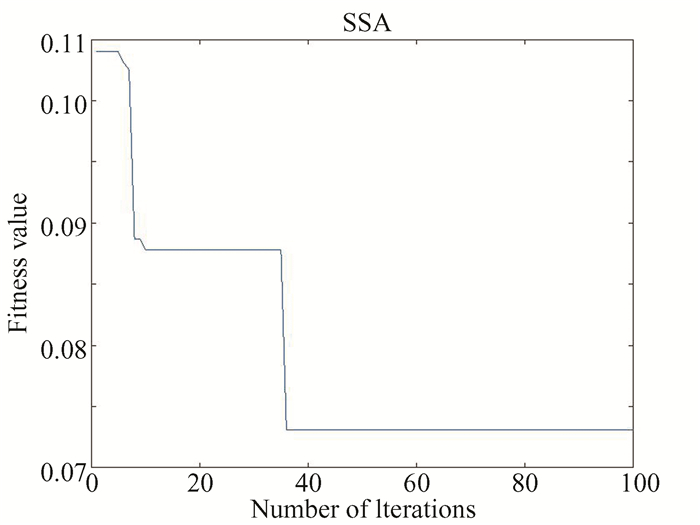

Fig. 7 shows FV versus the number of iterations for the SSA model. The fitness decreases from 0.11 to 0.06 over 100 iterations and remains almost constant after 40 iterations. The accuracy of SSA is evaluated on the five datasets: brain tumor, CNS, lung cancer, ionosphere, and NSL_KDD. SSA achieves an accuracy of 0.777 for brain tumor, 0.833 for CNS, 0.975 for lung cancer, 0.928 for ionosphere, and 0.96 for NSL_KDD.

Fig. 7 FV vs. number of iterations for the SSA model

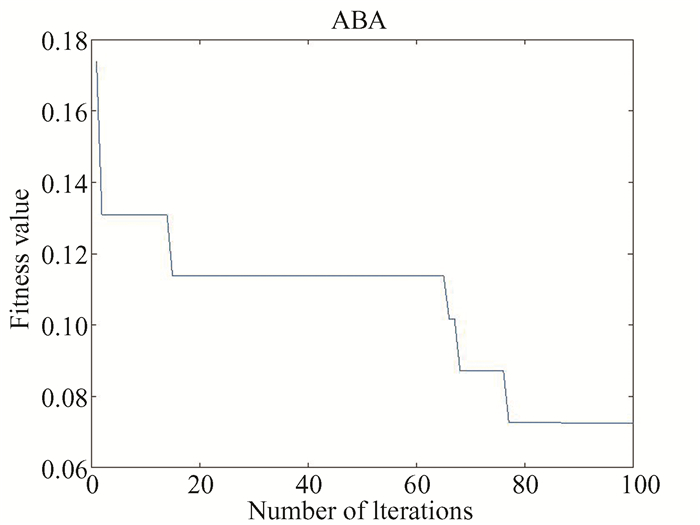

Fig. 8 shows FV versus the number of iterations for the ABA model. The fitness decreases from 0.18 to 0.07 over 100 iterations and remains almost constant after 80 iterations. The accuracy of ABA is evaluated on the five datasets: brain tumor, CNS, lung cancer, ionosphere, and NSL_KDD. ABA achieves an accuracy of 0.944 for brain tumor, 0.9160 for CNS, 0.9550 for lung cancer, 0.9285 for ionosphere, and 0.9800 for NSL_KDD.

Fig. 8 FV vs. number of iterations for the ABA model

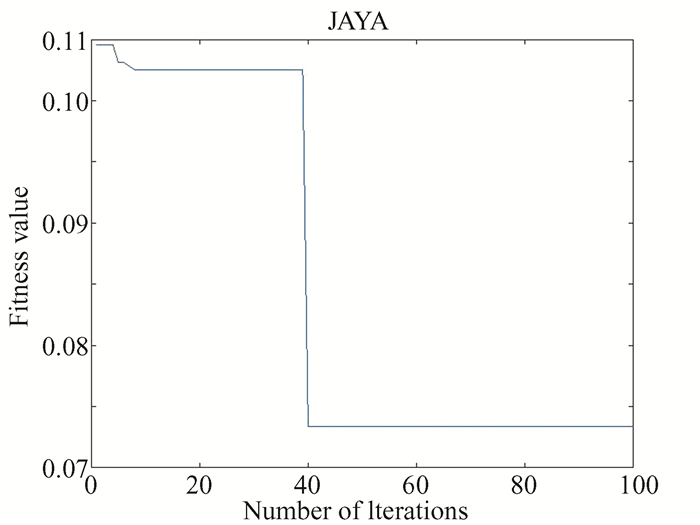

Fig. 9 shows FV versus the number of iterations for the JAYA model. The fitness decreases from 0.11 to 0.060 over 100 iterations and remains almost constant after 40 iterations. The accuracy of JAYA is evaluated on the five datasets: brain tumor, CNS, lung cancer, ionosphere, and NSL_KDD. JAYA achieves an accuracy of 0.888 for brain tumor, 0.916 for CNS, 0.975 for lung cancer, 0.957 for ionosphere, and 0.960 for NSL_KDD.

Fig. 9 FV vs. number of iterations for the JAYA algorithm

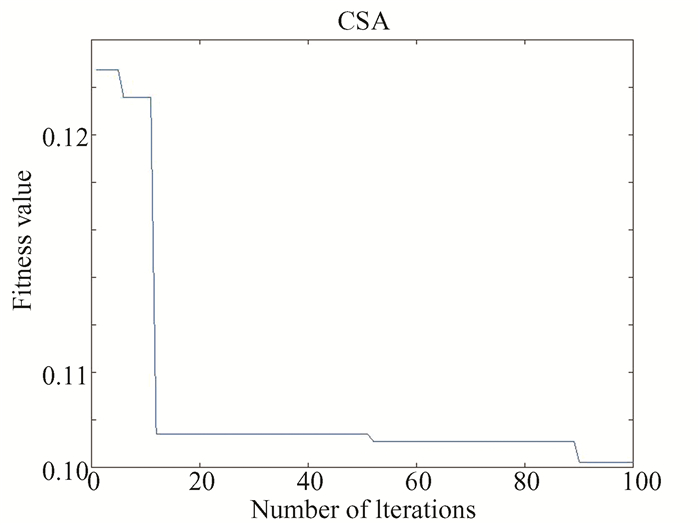

Fig. 10 shows FV versus the number of iterations for the CSA model. The fitness decreases from 0.12 to 0.10 over 100 iterations. The accuracy of CSA is evaluated on the five datasets: brain tumor, CNS, lung cancer, ionosphere, and NSL_KDD. CSA achieves an accuracy of 0.8333 for brain tumor, 0.8330 for CNS, 0.9750 for lung cancer, 0.9280 for ionosphere, and 0.9700 for NSL_KDD.

Fig. 10 FV vs. number of iterations for the CSA algorithm

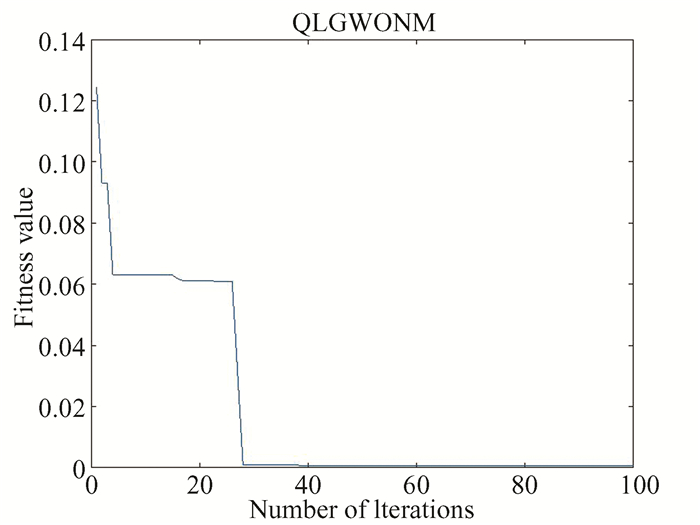

Fig. 11 shows FV versus the number of iterations for the QLGWONM model. The fitness decreases from 0.12 to 0.01 over 100 iterations and remains almost constant after 30 iterations. The accuracy of QLGWONM is evaluated on the five datasets: brain tumor, CNS, lung cancer, ionosphere, and NSL_KDD. QLGWONM achieves an accuracy of 1.000 for brain tumor, 1.000 for CNS, 1.000 for lung cancer, 0.971 for ionosphere, and 0.990 for NSL_KDD.

Fig. 11 FV vs. number of iterations for the QLGWONM algorithm

Table 3 presents the t-test comparison of the evolutionary optimization techniques; QLGWONM has the highest t-test value, 0.93. Table 4 presents the performance evaluation of the QLGWONM classifier.

| Table 3 T-test evaluation of feature selection and reduction techniques |

| Table 4 Performance evaluation of QLGWONM |
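The text does not specify which t-test variant produced Table 3. As an illustration only, the sketch below computes a paired t statistic, one common way to compare two algorithms' accuracies measured on the same datasets; the choice of the paired variant and the name `paired_t_statistic` are our assumptions. Converting t to a p-value additionally needs the t distribution with n - 1 degrees of freedom (for example, SciPy's `scipy.stats.ttest_rel`), which is omitted here to keep the example dependency-free.

```python
import math
from typing import Sequence


def paired_t_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Paired t statistic for the mean of (a - b), with n - 1 degrees of freedom."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    mean = sum(d) / n
    var = sum((v - mean) ** 2 for v in d) / (n - 1)  # sample variance of differences
    if var == 0:
        raise ValueError("differences are constant; t is undefined")
    return mean / math.sqrt(var / n)
```

To compare two algorithms, pass their per-dataset accuracies in the same dataset order; a positive t indicates that the first algorithm scores higher on average.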

4.4 Comparison of the State-of-Art

Table 5 compares the performance of different evolutionary optimization techniques, such as dragonfly optimization[52], ant colony optimization[53-54], differential evolution[54], and the genetic algorithm[55]. The performance parameters for the feature selection techniques are accuracy, precision, recall, and F-1 score. For the dragonfly optimization discussed in Ref.[52], the accuracy is 81.2%, precision is 81.45%, recall is 80.84%, and F-1 score is 81.14%. The ant colony optimization technique discussed in Ref.[53] is integrated with a support vector machine, and its results improve on dragonfly optimization: the precision, accuracy, recall, and F-1 score are 94.3%, 94.1%, 94.5%, and 94.2%, respectively. For the differential evolution optimization in Ref.[54], the accuracy of 82.81% is higher than that of dragonfly optimization but lower than that of ant colony optimization; precision is 92.4%, recall is 84.3%, and F-1 score is 85.3%. Ant colony optimization with fuzzy-set feature selection evaluation achieves an accuracy of 87.41%, precision of 87.3%, recall of 87.5%, and F-1 score of 87.4%. Another evolutionary optimization technique, discussed in Ref.[55], is the genetic algorithm, which is used in numerous applications including feature selection; its accuracy is 89.7%, precision 89.5%, recall 90.7%, and F-1 score 90.1%. By comparison, the proposed QLGWONM achieved the highest accuracy, 99%, which we attribute to its better convergence toward the optimal solution.

Table 5 Comparative performance evaluation
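All four metrics in Table 5 derive from the confusion matrix, and the F-1 score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below (plain Python; the class and function names are illustrative, and the confusion counts are hypothetical) computes the metrics for the binary case and recomputes F-1 from the precision and recall quoted above for the dragonfly[52] and genetic algorithm[55] methods, both of which reproduce their reported F-1 scores. Note that a macro-averaged F-1 need not equal the harmonic mean of macro-averaged precision and recall, so this check only applies when all three are aggregated the same way.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Counts from a binary confusion matrix (positive class = 1)."""
    tp: int
    fp: int
    fn: int
    tn: int


def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; works for fractions or percentages."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def metrics(c: Confusion) -> dict[str, float]:
    """Accuracy, precision, recall and F-1 from confusion counts (0 when undefined)."""
    total = c.tp + c.fp + c.fn + c.tn
    accuracy = (c.tp + c.tn) / total if total else 0.0
    precision = c.tp / (c.tp + c.fp) if (c.tp + c.fp) else 0.0
    recall = c.tp / (c.tp + c.fn) if (c.tp + c.fn) else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1_from(precision, recall),
    }


if __name__ == "__main__":
    print(metrics(Confusion(tp=45, fp=5, fn=3, tn=47)))
    # Recompute F-1 (percent) from precision/recall pairs quoted in the text.
    print(round(f1_from(81.45, 80.84), 2))  # dragonfly [52]: 81.14
    print(round(f1_from(89.5, 90.7), 1))    # genetic algorithm [55]: 90.1
```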

5 Conclusions

Big data has gained increasing attention in a variety of fields, including deep learning, pattern classification, healthcare, commerce, and infrastructure. Data analysis is essential for transforming raw data into more precise information that can feed decision-making processes. Information retrieval becomes increasingly challenging as databases grow more varied and complex. One way to meet this challenge is to apply attribute (feature) selection and preprocessing, which reduces the size of the problem and makes computation and interpretation easier. Any data-mining technique benefits from preprocessing, since it provides a reliable and suitable data source. Selecting appropriate features can help reveal the properties and underlying structure of complex data, as well as improve the model's performance. This paper proposes a hybrid feature selection model based on the QLGWONM technique and evaluates it on five datasets. Compared with the PSO, SMA, WO, SSA, ABA, Jaya, and CSA models, the proposed model performed well, with an accuracy of 100% on the brain tumor, CNS, and lung cancer datasets, 97.1% on the ionosphere dataset, and 99% on NSL-KDD. Compared with the weighted nearest neighbor approach, the experimental results also provided useful insights into both time utilization and feature weights.

[1] Zaffar M, Hashmani M A, Savita K S. Performance analysis of feature selection algorithm for educational data mining. 2017 IEEE Conference on Big Data and Analytics. Piscataway: IEEE, 2018. 7-12. DOI: 10.1109/ICBDAA.2017.8284099

[2] Verma A K, Pal S, Kumar S. Prediction of skin disease using ensemble data mining techniques and feature selection method-a comparative study. Applied Biochemistry and Biotechnology, 2020, 190(2): 341-359. DOI: 10.1007/S12010-019-03093-Z

[3] Abualigah L, Dulaimi A J. A novel feature selection method for data mining tasks using hybrid Sine Cosine Algorithm and Genetic Algorithm. Cluster Computing, 2021, 24(3): 2161-2176. DOI: 10.1007/S10586-021-03254-Y

[4] Harb H M, Desuky A S. Feature selection on classification of medical datasets based on particle swarm optimization. International Journal of Computer Applications, 2014, 104(5): 14-17. DOI: 10.5120/18197-9118

[5] Nagarajan S M, Muthukumaran V, Murugesan R, et al. Innovative feature selection and classification model for heart disease prediction. Journal of Reliable Intelligent Environments, 2021, 8: 333-343. DOI: 10.1007/s40860-021-00152-3

[6] Ghosh P, Azam S, Jonkman M, et al. Efficient prediction of cardiovascular disease using machine learning algorithms with relief and lasso feature selection techniques. IEEE Access, 2021, 9: 19304-19326. DOI: 10.1109/ACCESS.2021.3053759

[7] Aydin H M, Ali M A, Soyak E G. The analysis of feature selection with machine learning for indoor positioning. Proceedings of the 2021 29th Signal Processing and Communications Applications Conference (SIU). Piscataway: IEEE, 2021. 21172953. DOI: 10.1109/SIU53274.2021.9478012

[8] Kaur A, Guleria K, Trivedi N K. Feature selection in machine learning: methods and comparison. Proceedings of the 2021 International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE). Piscataway: IEEE, 2021. 20632367. DOI: 10.1109/ICACITE51222.2021.9404623

[9] BenSaid F, Alimi A M. Online feature selection system for big data classification based on multi-objective automated negotiation. Pattern Recognition, 2021, 110: 107629. DOI: 10.1016/J.PATCOG.2020.107629

[10] Rong M, Gong D, Gao X. Feature selection and its use in big data: challenges, methods, and trends. IEEE Access, 2019, 7: 19709-19725. DOI: 10.1109/ACCESS.2019.2894366

[11] Meera S, Sundar C. A hybrid metaheuristic approach for efficient feature selection methods in big data. Journal of Ambient Intelligence and Humanized Computing, 2021, 12: 3743-3751. DOI: 10.1007/S12652-019-01656-W

[12] Bazlur Rashid A N M, Choudhury T. Knowledge management overview of feature selection problem in high-dimensional financial data: Cooperative co-evolution and Map Reduce perspectives. Problems and Perspectives in Management, 2019, 17(4): 340-359. DOI: 10.21511/PPM.17(4).2019.28

[13] Nugroho A, Fanani A Z, Shidik G F. Evaluation of Feature Selection Using Wrapper for Numeric Dataset with Random Forest Algorithm. https://ieeexplore.ieee.org/document/9573249

[14] Jiang Y, Liu X, Yan G, et al. Modified binary cuckoo search for feature selection: a hybrid filter-wrapper approach. Proceedings of the 2017 13th International Conference on Computational Intelligence and Security (CIS). Piscataway: IEEE, 2017. 488-491. DOI: 10.1109/CIS.2017.00113

[15] Suchetha N K, Nikhil A, Hrudya P. Comparing the wrapper feature selection evaluators on twitter sentiment classification. Proceedings of the 2019 International Conference on Computational Intelligence in Data Science (ICCIDS). Piscataway: IEEE, 2019. 19046454. DOI: 10.1109/ICCIDS.2019.8862033

[16] Fong S, Wong R, Vasilakos A V. Accelerated PSO swarm search feature selection for data stream mining big data. IEEE Transactions on Services Computing, 2016, 9(1): 33-45. DOI: 10.1109/TSC.2015.2439695

[17] Moslehi F, Haeri A. A novel hybrid wrapper-filter approach based on genetic algorithm, particle swarm optimization for feature subset selection. Journal of Ambient Intelligence and Humanized Computing, 2019, 11: 1105-1127. DOI: 10.1007/S12652-019-01364-5

[18] Reddy G T, Praveen Kumar Reddy M, Lakshmanna K, et al. Analysis of dimensionality reduction techniques on big data. IEEE Access, 2020, 8: 54776-54788. DOI: 10.1109/ACCESS.2020.2980942

[19] Mucherino A, Papajorgji P J, Pardalos P M. K-Nearest Neighbor Classification. New York: Springer, 2009: 83-106

[20] Khalid S, Khalil T, Nasreen S. A survey of feature selection and feature extraction techniques in machine learning. Proceedings of the 2014 Science and Information Conference. Piscataway: IEEE, 2014. 14651028. DOI: 10.1109/SAI.2014.6918213

[21] Chakraborty B, Kawamura A. A new penalty-based wrapper fitness function for feature subset selection with evolutionary algorithms. Journal of Information and Telecommunication, 2018, 2(2): 163-180. DOI: 10.1080/24751839.2018.1423792

[22] Fahy C, Yang S. Dynamic feature selection for clustering high dimensional data streams. IEEE Access, 2019, 7: 127128-127140. DOI: 10.1109/ACCESS.2019.2932308

[23] Manoj J R, Anto Praveena M D, Vijayakumar K. An ACO-ANN based feature selection algorithm for big data. Cluster Computing, 2018, 22: 3953-3960. DOI: 10.1007/S10586-018-2550-Z

[24] Barddal J P, Enembreck F, Gomes H M, et al. Boosting decision stumps for dynamic feature selection on data streams. Information Systems, 2019, 83: 13-29. DOI: 10.1016/J.IS.2019.02.003

[25] Kushwaha N, Pant M. Link based BPSO for feature selection in big data text clustering. Future Generation Computer Systems, 2018, 82: 190-199. DOI: 10.1016/J.FUTURE.2017.12.005

[26] Rashid A N M B, Ahmed M, Sikos L F, et al. Cooperative co-evolution for feature selection in big data with random feature grouping. Journal of Big Data, 2020, 7: 107. DOI: 10.1186/S40537-020-00381-Y

[27] AlFarraj O, AlZubi A, Tolba A. Optimized feature selection algorithm based on fireflies with gravitational ant colony algorithm for big data predictive analytics. Neural Computing and Applications, 2019, 31: 1391-1403. DOI: 10.1007/S00521-018-3612-0

[28] Pomeroy S L, Tamayo P, Gaasenbeek M, et al. Prediction of central nervous system embryonal tumour outcome based on gene expression. Nature, 2002, 415(6870): 436-442. DOI: 10.1038/415436a

[29] Onan A. Bidirectional convolutional recurrent neural network architecture with group-wise enhancement mechanism for text sentiment classification. Journal of King Saud University-Computer and Information Sciences, 2022, 34(5): 2098-2117. DOI: 10.1016/J.JKSUCI.2022.02.025

[30] Onan A. Consensus clustering-based undersampling approach to imbalanced learning. Scientific Programming, 2019, 2019: 5901087. DOI: 10.1155/2019/5901087

[31] Onan A, Korukoǧlu S, Bulut H. Ensemble of keyword extraction methods and classifiers in text classification. Expert Systems with Applications, 2016, 57: 232-247. DOI: 10.1016/J.ESWA.2016.03.045

[32] Onan A. Two-stage topic extraction model for bibliometric data analysis based on word embeddings and clustering. IEEE Access, 2019, 7: 145614-145633. DOI: 10.1109/ACCESS.2019.2945911

[33] Previtali F, Arrieta A F, Ermanni P. Double-walled corrugated structure for bending-stiff anisotropic morphing skins. Journal of Intelligent Material Systems and Structures, 2014, 26: 599-613. DOI: 10.1177/1045389X14554132

[34] Onan A, Korukoǧlu S, Bulut H. A hybrid ensemble pruning approach based on consensus clustering and multi-objective evolutionary algorithm for sentiment classification. Information Processing & Management, 2017, 53(4): 814-833. DOI: 10.1016/J.IPM.2017.02.008

[35] Onan A. Sentiment analysis on product reviews based on weighted word embeddings and deep neural networks. Concurrency and Computation: Practice and Experience, 2021, 33(23): e5909. DOI: 10.1002/CPE.5909

[36] Onan A. Sentiment analysis on massive open online course evaluations: a text mining and deep learning approach. Computer Applications in Engineering Education, 2021, 29(3): 572-589. DOI: 10.1002/CAE.22253

[37] Onan A. An ensemble scheme based on language function analysis and feature engineering for text genre classification. Journal of Information Science, 2016, 44(1): 28-47. DOI: 10.1177/0165551516677911

[38] Onan A, Tocoglu M A. A term weighted neural language model and stacked bidirectional LSTM based framework for sarcasm identification. IEEE Access, 2021, 9: 7701-7722. DOI: 10.1109/ACCESS.2021.3049734

[39] Onan A. Topic-enriched word embeddings for sarcasm identification. Advances in Intelligent Systems and Computing, 2019, 984: 293-304. DOI: 10.1007/978-3-030-19807-7_29

[40] Onan A. Biomedical text categorization based on ensemble pruning and optimized topic modelling. Computational and Mathematical Methods in Medicine, 2018, 2018: 2497471. DOI: 10.1155/2018/2497471

[41] Jimenez F, Martinez C, Marzano E, et al. Multiobjective evolutionary feature selection for fuzzy classification. IEEE Transactions on Fuzzy Systems, 2019, 27(5): 1085-1099. DOI: 10.1109/TFUZZ.2019.2892363

[42] Xu H, Xue B, Zhang M. A duplication analysis-based evolutionary algorithm for bi-objective feature selection. IEEE Transactions on Evolutionary Computation, 2021, 25(2): 205-218. DOI: 10.1109/TEVC.2020.3016049

[43] Xue B, Zhang M, Browne W N, et al. A survey on evolutionary computation approaches to feature selection. IEEE Transactions on Evolutionary Computation, 2016, 20(4): 606-626. DOI: 10.1109/TEVC.2015.2504420

[44] Xue B, Zhang M, Browne W N, et al. Particle swarm optimization for feature selection in classification: a multi-objective approach. IEEE Transactions on Cybernetics, 2013, 43(6): 1656-1671. DOI: 10.1109/TSMCB.2012.2227469

[45] Liang J, Ma J. FS-MOEA: a novel feature selection algorithm for IDSs in vehicular networks. IEEE Transactions on Intelligent Transportation Systems, 2022, 23(1): 368-382. DOI: 10.1109/TITS.2020.3011452

[46] Tian Y, Zhang X, Wang C, et al. An evolutionary algorithm for large-scale sparse multiobjective optimization problems. IEEE Transactions on Evolutionary Computation, 2020, 24(2): 380-393. DOI: 10.1109/TEVC.2019.2918140

[47] Mistry K, Zhang L, Neoh S C, et al. A micro-GA embedded PSO feature selection approach to intelligent facial emotion recognition. IEEE Transactions on Cybernetics, 2017, 47(6): 1496-1509. DOI: 10.1109/TCYB.2016.2549639

[48] Manoj R J, Anto Praveena M D, Vijayakumar K. An ACO-ANN based feature selection algorithm for big data. Cluster Computing, 2019, 22: 3953-3960. DOI: 10.1007/S10586-018-2550-Z

[49] Sun Y, Xue B, Zhang M, et al. Automatically designing CNN architectures using the genetic algorithm for image classification. IEEE Transactions on Cybernetics, 2020, 50(9): 3840-3854. DOI: 10.1109/TCYB.2020.2983860

[50] Xu H, Xue B, Zhang M. A duplication analysis-based evolutionary algorithm for bi-objective feature selection. IEEE Transactions on Evolutionary Computation, 2021, 25(2): 205-218. DOI: 10.1109/TEVC.2020.3016049

[51] Karizaki A A, Tavassoli M. A novel hybrid feature selection based on ReliefF and binary dragonfly for high dimensional datasets. Proceedings of the 2019 9th International Conference on Computer and Knowledge Engineering (ICCKE). Piscataway: IEEE, 2019. 300-304. DOI: 10.1109/ICCKE48569.2019.8965106

[52] Sayed G I, Tharwat A, Hassanien A E. Chaotic dragonfly algorithm: an improved metaheuristic algorithm for feature selection. Applied Intelligence, 2019, 49(1): 188-205. DOI: 10.1007/S10489-018-1261-8

[53] Kalita D J, Singh V P, Kumar V. Two-way threshold-based intelligent water drops feature selection algorithm for accurate detection of breast cancer. Soft Computing, 2022, 26: 2277-2305. DOI: 10.1007/S00500-021-06498-3

[54] Meenachi L, Ramakrishnan S. Differential evolution and ACO based global optimal feature selection with fuzzy rough set for cancer data classification. Soft Computing, 2020, 24: 18463-18475. DOI: 10.1007/S00500-020-05070-9

[55] Iqbal F, Hashmi J, Fung B, et al. A hybrid framework for sentiment analysis using genetic algorithm based feature reduction. https://ieeexplore.ieee.org/ielx7/6287639/8600701/08620527.pdf

[56] Xue B, Zhang M, Browne W N, et al. A survey on evolutionary computation approaches to feature selection. IEEE Transactions on Evolutionary Computation, 2016, 20(4): 606-626. DOI: 10.1109/TEVC.2015.2504420

[57] Song X F, Zhang Y, Gong D W, et al. A fast hybrid feature selection based on correlation-guided clustering and particle swarm optimization for high-dimensional data. IEEE Transactions on Cybernetics, 2022, 52(9): 9573-9586. DOI: 10.1109/TCYB.2021.3061152

[58] Amini F, Hu G. A two-layer feature selection method using genetic algorithm and elastic net. Expert Systems with Applications, 2021, 166: 114072. DOI: 10.1016/j.eswa.2020.114072

[59] Wang J S, Li S X. An improved grey wolf optimizer based on differential evolution and elimination mechanism. Scientific Reports, 2019, 9: 7181. DOI: 10.1038/s41598-019-43546-3

[60] Gordon G J, Jensen R V, Hsiao L L, et al. Translation of microarray data into clinically relevant cancer diagnostic tests using gene expression ratios in lung cancer and mesothelioma. Cancer Research, 2002, 62(17): 4963-4967

[61] Pomeroy S L, Tamayo P, Gaasenbeek M, et al. Prediction of central nervous system embryonal tumour outcome based on gene expression. Nature, 2002, 415(6870): 436-442. DOI: 10.1038/415436a

[62] Sigillito V G, Wing S P, Hutton L V, et al. Classification of radar returns from the ionosphere using neural networks. Johns Hopkins APL Technical Digest (Applied Physics Laboratory), 1989, 10(3): 262-266

[63] Tavallaee M, Bagheri E, Lu W, et al. A detailed analysis of the KDD CUP 99 data set. 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications. Piscataway: IEEE, 2009. DOI: 10.1109/CISDA.2009.5356528

[64] Bohaju J. Brain Tumor. https://www.kaggle.com/datasets/jakeshbohaju/brain-tumor

2023, Vol. 30
