2. Zhejiang Xingsuan Technology Co., Ltd, Hangzhou 310023, China

Artificial intelligence technology is widely used and has brought great convenience to people's lives. It also has many applications in the field of education. For a document image containing both printed and handwritten texts, removing the handwritten texts by artificial intelligence has broad and important applications. One example is the test papers completed by primary and secondary school students, from which the questions answered incorrectly are collected. If the handwritten texts can be removed and the document returned to its original blank state, students can practice the questions again. The goal of this work was to realize a handwritten texts removal function on a smart phone.

A key step for handwritten texts removal is the classification of printed and handwritten texts (PHT). The problem of PHT classification can be defined as: for a document image containing both printed texts and handwritten texts, it is desired to implement pixel level classification for the printed texts, handwritten texts and background in the image. This problem belongs to semantic segmentation.

For a paper document, the image scanned by the scanner is regular. However, when taking a picture with a smart phone, the image may be distorted or tilted due to the different positions and angles of the smart phone. In addition, the desktop background of a paper document may be complex, with part of the background potentially captured in the image when taking a picture. Thus, an image taken by a smart phone is quite different from a scanned image. It is more difficult to remove handwritten texts from images taken by a smart phone. The accuracy of PHT classification needs to be improved. A better algorithm model needs to be established.

The contribution of this article is as follows. For document images taken with a smart phone, an algorithm model combining dewarping with FCN-AC-ASPP is proposed to achieve better handwritten texts removal. The experimental results show that this method improves the classification accuracy of PHT and removes handwritten texts effectively.

The structure of this paper is as follows. Section 1 briefly reviews the relevant methods of PHT classification. Section 2 focuses on the main architecture of the proposed FCN-AC-ASPP. Section 3 presents the detailed experimental results, analysis, and application. Finally, the conclusion is given in Section 4.

1 Related Work

Paper documents contain a lot of important information, and the processing of document images has important applications. Research on document images typically includes text segmentation [1], optical character recognition (OCR) [2-3], document classification [4], and separation of PHT [5]. Handwritten texts removal and the separation of PHT are essentially the same problem, because both require the classification of PHT. This article mainly focuses on the classification of PHT.

The classification methods of PHT include traditional and deep learning methods. The traditional methods typically include four steps: (1) Image preprocessing. Image preprocessing methods include noise removal and conversion to gray-scale or binary images [6-9]. (2) Identification of areas that are likely to contain texts. The methods used for area identification include region[10-12] and texture methods[13-15]. (3) Extraction of features. Feature extraction can include horizontal and vertical projection profiles, character height, number of baseline pixels, and inter-character gap length [16]. (4) Classification of PHT. PHT classification methods include multi-layer perceptron (MLP) [14], rule-based approach[15], discriminant analysis[16], and hidden Markov models (HMMs)[17].

In recent years, deep learning algorithms have been used to classify PHT. In Ref. [18], the authors used fully convolutional neural networks (FCN) [19] to classify PHT. Ref. [20] applied U-Net [21] to classify PHT. These two methods are better than the traditional methods, but because of the small number of training samples, the generalization ability of the two models is weak, and the classification accuracy is not high enough. In Ref. [22], an improved DeeplabV3+ model was applied to classify PHT, and the dataset PHTD2021 with 3000 samples was produced. The improved DeeplabV3+ model has higher classification accuracy, but the model is complex. The DeeplabV3+ [23] algorithm includes atrous convolution (AC), atrous spatial pyramid pooling (ASPP), and other modules [24-35].

There is abundant research on handwritten texts removal, but few studies focus on removing handwritten texts from images taken by a smart phone. Handwritten texts removal from an image taken by a smart phone requires dewarping. In Ref. [36], the authors proposed an approach to rectify a distorted document image by estimating control points and reference points, but they discussed only image dewarping and did not address handwritten texts removal. In this paper, the image dewarping method of Ref. [36] is applied in the image preprocessing stage. After dewarping, the image taken by the smart phone becomes more regular and resembles an image from a scanner. The FCN-AC-ASPP model can then be applied to classify PHT.

A handwritten texts removal function on a smart phone requires a model with high classification accuracy that is not overly complex. In this work, the FCN-AC-ASPP model is proposed and applied to images taken by a smart phone after dewarping in the preprocessing stage.

2 Methods

For a picture taken by a smart phone, the image is first transformed into a regular image by the dewarping algorithm. Second, FCN-AC-ASPP is used to classify printed texts and handwritten texts. Last, the handwritten texts are removed by a simple algorithm that replaces the pixels in the handwritten texts category with 255, the pixel value that corresponds to white.
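The removal step described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the class indices (`BACKGROUND`, `PRINTED`, `HANDWRITTEN`) and the function name `remove_handwriting` are assumptions introduced here, since the paper does not specify how the label map is encoded.

```python
import numpy as np

# Hypothetical label convention; the paper does not state the class indices.
BACKGROUND, PRINTED, HANDWRITTEN = 0, 1, 2

def remove_handwriting(image: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Return a copy of `image` with every handwritten pixel set to white (255)."""
    if image.shape[:2] != labels.shape:
        raise ValueError("image and label map must share height and width")
    cleaned = image.copy()
    # A 2-D boolean mask also works for (H, W, 3) images: all channels are set.
    cleaned[labels == HANDWRITTEN] = 255
    return cleaned

# Tiny 2x3 grayscale example with a per-pixel label map.
image = np.array([[0, 40, 255],
                  [10, 200, 30]], dtype=np.uint8)
labels = np.array([[PRINTED, HANDWRITTEN, BACKGROUND],
                   [HANDWRITTEN, BACKGROUND, PRINTED]])
cleaned = remove_handwriting(image, labels)
print(cleaned)
```

Printed and background pixels are left untouched, so the quality of the result depends entirely on the accuracy of the per-pixel classification.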

The FCN-AC-ASPP model proposed in this paper is an improvement of the FCN model. The FCN can take input of arbitrary size and produce a correspondingly sized output with efficient inference and learning. FCN-AC-ASPP improves on FCN by replacing the traditional convolution with atrous convolution (AC) and following it with atrous spatial pyramid pooling (ASPP). Atrous convolution supports exponential expansion of the receptive field and improves network performance. ASPP probes convolutional features at multiple scales. When a paper document is photographed from different distances and angles, the resulting images have different scales. Therefore, atrous convolution and ASPP are adopted.
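To make the receptive-field argument concrete, the sketch below computes the span covered by a single dilated kernel along one axis, using the standard relation k_eff = k + (k - 1)(d - 1). The function name is hypothetical; the kernel and dilation pairs are the ones the paper lists for the FCN-AC and ASPP modules.

```python
def effective_kernel_size(kernel: int, dilation: int) -> int:
    """Span, in pixels, covered by a dilated (atrous) kernel along one axis."""
    return kernel + (kernel - 1) * (dilation - 1)

# (kernel, dilation) pairs taken from the model description in this paper.
settings = [(5, 2), (5, 3), (3, 8), (3, 16), (3, 32)]
spans = {kd: effective_kernel_size(*kd) for kd in settings}
for (k, d), span in spans.items():
    print(f"kernel {k}x{k}, dilation {d:>2} -> covers {span}x{span} pixels")
```

The number of weights stays at k×k in every case, which is why dilation enlarges the context seen by a layer without adding parameters.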

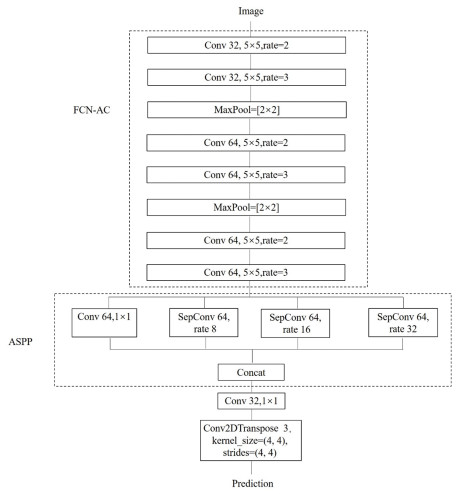

The FCN-AC-ASPP algorithm flow is as follows: for the input image, features are extracted using AC and ASPP, and the classification results are output after a deconvolution operation. The FCN-AC-ASPP is a pixels-to-pixels prediction algorithm. The structure of the FCN-AC-ASPP model is shown in Fig. 1 and the details of the model are described below.

Fig.1 Network structure of the FCN-AC-ASPP model

(1) Input layer: the input image is 1024×1024, with 3 channels.

(2) FCN-AC module

(2.1) Atrous convolutional layer: there are 32 channels, the kernel is 5×5, dilation is 2.

(2.2) Dropout layer: dropout probability is 20%.

(2.3) Atrous convolutional layer: there are 32 channels, the kernel is 5×5, dilation is 3.

(2.4) Pool layer: the down-sample ratio is 2×2.

(2.5) Atrous convolutional layer: there are 64 channels, the kernel is 5×5, dilation is 2.

(2.6) Dropout layer: dropout probability is 20%.

(2.7) Atrous convolutional layer: there are 64 channels, the kernel is 5×5, dilation is 3.

(2.8) Pool layer: the down-sample ratio is 2×2.

(2.9) Atrous convolutional layer: there are 64 channels, the kernel is 5×5, dilation is 2.

(2.10) Dropout layer: dropout probability is 20%.

(2.11) Atrous convolutional layer: there are 64 channels, the kernel is 5×5, dilation is 3.

(3) ASPP module

(3.1) ASPP branch 1 is the convolution layer: there are 64 channels, the kernel is 1×1, dilation is 1.

(3.2) ASPP branch 2 is the depthwise separable convolution layer: there are 64 channels, the kernel is 3×3, dilation is 8.

(3.3) ASPP branch 3 is the depthwise separable convolution layer: there are 64 channels, the kernel is 3×3, dilation is 16.

(3.4) ASPP branch 4 is the depthwise separable convolution layer: there are 64 channels, the kernel is 3×3, dilation is 32.

(3.5) Concatenate 4 ASPP branches.

(4) Convolution layer: there are 32 channels, and the kernel is 1×1.

(5) Deconvolution layer: there are 3 channels, the kernel is 4×4, and the stride is 4×4.
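The layer list above can be checked with a small shape trace, which shows why a single deconvolution with stride 4 restores the input resolution after two 2×2 pooling layers. This is a sketch under one stated assumption: every convolution uses "same" padding, which the paper does not specify. The `LAYERS` table and `trace_shapes` function are illustrative names, not part of the original model code.

```python
# Assumption: all convolutions use "same" padding, so only pooling and the
# deconvolution change the spatial size. Dropout layers are omitted because
# they never change shapes.
LAYERS = [
    ("2.1 atrous conv", "conv", 32),
    ("2.3 atrous conv", "conv", 32),
    ("2.4 pool 2x2", "pool", None),
    ("2.5 atrous conv", "conv", 64),
    ("2.7 atrous conv", "conv", 64),
    ("2.8 pool 2x2", "pool", None),
    ("2.9 atrous conv", "conv", 64),
    ("2.11 atrous conv", "conv", 64),
    ("3 ASPP concat (4 x 64)", "conv", 4 * 64),
    ("4 conv 1x1", "conv", 32),
    ("5 deconv 4x4, stride 4", "deconv", 3),
]

def trace_shapes(size=1024, channels=3):
    """Return (layer name, spatial size, channels) after each layer."""
    shapes = [("1 input", size, channels)]
    for name, kind, out_channels in LAYERS:
        if kind == "pool":
            size //= 2                      # 2x2 down-sampling
        elif kind == "deconv":
            size = (size - 1) * 4 + 4       # (in - 1) * stride + kernel
            channels = out_channels
        else:
            channels = out_channels         # "same" padding keeps the size
        shapes.append((name, size, channels))
    return shapes

shapes = trace_shapes()
for name, size, channels in shapes:
    print(f"{name:<26} {size} x {size} x {channels}")
```

Under this assumption the output is 1024×1024 with 3 channels, one score per class (printed text, handwritten text, background) for every input pixel.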

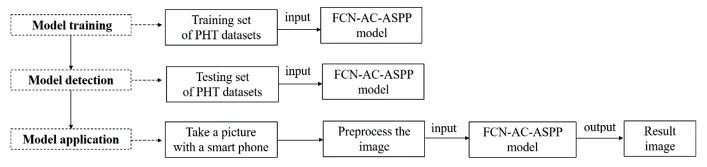

3 Experimental Design and Application

3.1 Technical Route

The technical route of this research is shown in Fig. 2. It includes model training, model detection, and model application.

Fig.2 Technical route

Model training: establish the FCN-AC-ASPP model and input the training set of the PHT dataset into the model for training.

Model detection: input the testing set of the PHT dataset into the trained FCN-AC-ASPP model for detection, and calculate the classification accuracy of the model.

Model application: take a picture with a smart phone of a paper document including printed and handwritten texts, preprocess the image, and then input the image into the trained FCN-AC-ASPP model to obtain the resulting classified image. After classification, the handwritten texts can be removed by algorithm processing, leaving only the printed texts. The image preprocessing methods include dewarping and binarization. A previously described method [36] was adopted for dewarping, and the adaptive threshold algorithm was adopted for binarization.
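The binarization step can be illustrated with a mean-based adaptive threshold written in plain NumPy. The paper names only "the adaptive threshold algorithm", so the specific variant (local mean minus an offset), the function name, and the `block` and `offset` parameters below are assumptions chosen for illustration.

```python
import numpy as np

def adaptive_mean_threshold(gray: np.ndarray, block: int = 15, offset: float = 10.0) -> np.ndarray:
    """Set a pixel to 255 if it is brighter than (local mean - offset), else 0.

    `block` is the odd side length of the square neighbourhood.
    """
    if block % 2 == 0:
        raise ValueError("block must be odd")
    pad = block // 2
    h, w = gray.shape
    # Replicate the border so that every pixel has a full neighbourhood.
    padded = np.pad(gray.astype(np.float64), pad, mode="edge")
    # Integral image with a leading zero row and column.
    integral = np.pad(padded, ((1, 0), (1, 0)), mode="constant").cumsum(axis=0).cumsum(axis=1)
    # Sum over each block x block window via four integral-image lookups.
    window_sum = (integral[block:block + h, block:block + w]
                  - integral[:h, block:block + w]
                  - integral[block:block + h, :w]
                  + integral[:h, :w])
    local_mean = window_sum / (block * block)
    return np.where(gray > local_mean - offset, 255, 0).astype(np.uint8)

# Synthetic page: light paper (200) with one dark horizontal stroke (50).
page = np.full((9, 9), 200, dtype=np.uint8)
page[4, 2:7] = 50
binary = adaptive_mean_threshold(page, block=5, offset=10)
print(binary)
```

Because the threshold follows the local mean, uneven lighting across a photographed page affects the result less than a single global threshold would.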

3.2 Dataset

We used the dataset PHTD2021 [22]. The PHTD2021 dataset was created by us in 2021 specifically to study handwritten texts removal. At present, we have not found a better publicly available PHT dataset. The specific details of this dataset are listed in Table 1.

Table 1 Distribution for dataset PHTD2021

3.3 Model Training

For experimental comparison, the FCN model, the DeeplabV3+ model, the fully convolutional network with atrous convolutional (FCN-AC) model, and the FCN-AC-ASPP model were established. The training samples of PHTD2021 were input into the four models respectively for training.

3.4 Experimental Results

After training of the FCN, DeeplabV3+, FCN-AC, and FCN-AC-ASPP models, each model was tested on the testing set of PHTD2021, and the results are shown in Table 2. The FCN-AC-ASPP model had the highest classification accuracy, with a total mean intersection over union (mIoU) of 96.10%. The total mIoU values of the FCN, DeeplabV3+, and FCN-AC models were 78.63%, 87.78%, and 94.01%, respectively. The total mIoU of the improved DeeplabV3+ model obtained previously [22] was 95.06%. Therefore, the FCN-AC-ASPP model is superior to the improved DeeplabV3+ model.

Table 2 Classification accuracy achieved by the FCN, DeeplabV3+, FCN-AC, and FCN-AC-ASPP models (%)
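For readers unfamiliar with the metric, the following sketch shows one common way to compute mIoU for a three-class label map. It is illustrative only: the function name and class indices are assumptions, and the paper does not state whether its "total mIoU" is averaged per image or accumulated over the whole testing set.

```python
import numpy as np

def mean_iou(pred: np.ndarray, target: np.ndarray, num_classes: int = 3) -> float:
    """Average the per-class intersection over union of two label maps."""
    ious = []
    for c in range(num_classes):
        p, t = pred == c, target == c
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # class absent from both maps: skip it
        ious.append(np.logical_and(p, t).sum() / union)
    return float(np.mean(ious))

# Hypothetical indices: 0 = background, 1 = printed, 2 = handwritten.
target = np.array([[0, 0, 1, 1],
                   [0, 2, 2, 1]])
pred = np.array([[0, 0, 1, 1],
                 [0, 2, 1, 1]])   # one handwritten pixel mislabeled as printed
score = mean_iou(pred, target)
print(f"mIoU = {score:.4f}")
```

In this toy case a single wrong pixel lowers the IoU of both the printed and the handwritten class, which is why mIoU is a stricter measure than plain pixel accuracy.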

In terms of calculation speed, the time each model requires to process a 3000×2000 pixel image is shown in Table 3. The FCN, DeeplabV3+, FCN-AC, and FCN-AC-ASPP models require 1.25, 4.17, 1.68, and 2.06 s, respectively. Compared with DeeplabV3+, the FCN-AC-ASPP model is both more accurate and faster.

Table 3 Time consumed by the FCN, DeeplabV3+, FCN-AC, and FCN-AC-ASPP models

3.5 Model Application

The previous experiments showed that the FCN-AC-ASPP model exhibits the highest classification accuracy, so it was used in the following experiments to remove handwritten texts. Using an image of a document taken by a smart phone, the process was performed without and with dewarping, as described below. Not using dewarping was called Method 1, and using dewarping was called Method 2.

For Method 1, only binarization was used during preprocessing, FCN-AC-ASPP was used to classify the image at the pixel level, and then the handwritten texts were removed. Fig. 3(a) shows an image of a document taken by a smart phone. The result after binarization is shown in Fig. 3(b) and the result of classification is shown in Fig. 3(c). The green, blue, and white represent handwritten texts, printed texts, and backgrounds, respectively. The result of handwritten texts removal is shown in Fig. 3(d).

Fig.3 Handwritten texts removal method based on FCN-AC-ASPP
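A classification image with this color coding can be produced from per-pixel class scores as sketched below. The class order and the names `PALETTE` and `colorize` are assumptions made for illustration; only the color assignment (green for handwritten, blue for printed, white for background) comes from the text.

```python
import numpy as np

# Hypothetical class order; colors follow the convention used in Fig. 3(c).
BACKGROUND, PRINTED, HANDWRITTEN = 0, 1, 2
PALETTE = np.array([[255, 255, 255],   # background  -> white
                    [0, 0, 255],       # printed     -> blue
                    [0, 255, 0]],      # handwritten -> green
                   dtype=np.uint8)     # RGB channel order

def colorize(scores: np.ndarray):
    """Turn (H, W, 3) class scores into a label map and an RGB visualization."""
    labels = scores.argmax(axis=-1)    # most likely class for each pixel
    return labels, PALETTE[labels]     # fancy indexing maps labels to colors

# One row of three pixels, each with scores for the three classes.
scores = np.array([[[0.8, 0.1, 0.1],
                    [0.2, 0.7, 0.1],
                    [0.1, 0.3, 0.6]]])
labels, rgb = colorize(scores)
print(labels)
print(rgb)
```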

For Method 2, which this study adopted, preprocessing included dewarping and binarization, FCN-AC-ASPP was used to classify the image at the pixel level, and then the handwritten texts were removed. Fig. 4(a) shows an image of a document taken by a smart phone. The image after dewarping is shown in Fig. 4(b). The image after binarization is shown in Fig. 4(c). The image after classification is shown in Fig. 4(d). The green, blue, and white represent handwritten texts, printed texts, and backgrounds, respectively. The final result after the removal of the handwritten texts is shown in Fig. 4(e).

Fig.4 Our handwritten texts removal method based on dewarping and FCN-AC-ASPP

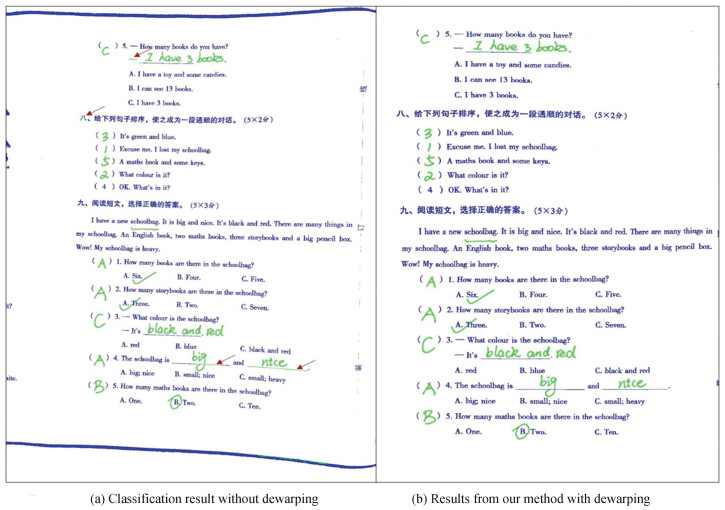

The classification results of the two methods were compared. Fig. 5(a) shows the classification result without dewarping. The arrows in the figure indicate classification errors. Fig. 5(b) shows the classification result when dewarping is used. With dewarping, the results are more accurate and unnecessary information is removed. With the same classification algorithm, FCN-AC-ASPP, using dewarping in the preprocessing yields better classification and better handwritten texts removal. Moreover, the resulting image is visually cleaner.

Fig.5 Comparison of classification results

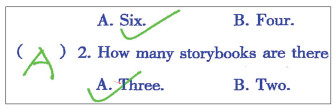

Even when the handwritten texts overlap with the printed texts, the two kinds of texts can be distinguished by the FCN-AC-ASPP model, as indicated by the arrow in Fig. 6. This is an advantage of the FCN-AC-ASPP. For an input image of any size, the output after convolution and deconvolution is restored to the original size of the input image, so a classification prediction is obtained for each pixel. Thus, FCN-AC-ASPP is a pixels-to-pixels prediction algorithm. Overall, for an image of a document taken by a smart phone, this method combining dewarping with the FCN-AC-ASPP model removes handwritten texts effectively.

Fig.6 Handwritten texts overlapping with printed texts can be classified accurately

3.6 Deployment as a Service

The developed handwritten texts removal function based on dewarping and FCN-AC-ASPP has been made available on our website: http://www.algstar.com/Upload?type=8, as shown in Fig. 7. An application (APP) was also developed, and can be downloaded from the website and installed on Android phones. Currently, images with English and Chinese texts can be processed.

Fig.7 Web interface of handwritten texts removal

4 Conclusions

For handwritten texts removal from an image taken by a smart phone, a method combining dewarping and FCN-AC-ASPP was proposed. Images taken by a smart phone may be distorted and tilted, so the dewarping algorithm was used to convert the image into a standard image. Then, the standard image was input to FCN-AC-ASPP for classification. The total mIoU of the FCN-AC-ASPP model is 96.10%, which is better than that of the FCN, DeeplabV3+, FCN-AC, and improved DeeplabV3+ models. After classification, the handwritten texts can be removed by a simple algorithm. The experimental results show that the proposed handwritten texts removal method performs well on document images taken by a smart phone.

The method proposed in this paper has two limitations: (a) The handwritten texts removal effect for blurry and unclear images is weaker than for clear images; (b) Because the training samples only included English and Chinese characters, it is only suitable for handling English and Chinese document images.

[1] Xu X, Zhan Z, Wang Z, et al. Rethinking text segmentation: A novel dataset and a text-specific refinement approach. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Ithaca: Cornell University, 2021: 12045-12055. DOI:10.48550/arXiv.2011.14021

[2] Du Y, Li C, Guo R, et al. PP-OCR: A Practical Ultra Lightweight OCR System. Ithaca: Cornell University, 2020. DOI:10.48550/arXiv.2009.09941

[3] Du Y, Li C, Guo R, et al. PP-OCRv2: Bag of Tricks for Ultra Lightweight OCR System. Ithaca: Cornell University, 2021. DOI:10.48550/arXiv.2109.03144

[4] Nasir I M, Khan M A, Yasmin M, et al. Pearson correlation-based feature selection for document classification using balanced training. Sensors, 2020, 20(23): 6793. DOI:10.3390/s20236793

[5] Huang B, Lin J, Liu J, et al. Separating Chinese character from noisy background using GAN. Wireless Communications and Mobile Computing, 2021, 2021: Article ID 9922017. DOI:10.1155/2021/9922017

[6] da Silva L F, Conci A, Sanchez A. Automatic discrimination between printed and handwritten text in documents. Proceedings of SIBGRAPI 2009-22nd Brazilian Symposium on Computer Graphics and Image Processing. Rio de Janeiro, Brazil, 2009, 261-267. DOI:10.1109/SIBGRAPI.2009.40

[7] Garlapati B M, Chalamala S R. A system for handwritten and printed text classification. Proceedings of 2017 UKSim-AMSS 19th International Conference on Modelling and Simulation (UKSim). Piscataway: IEEE, 2017: 50-54. DOI:10.1109/UKSim.2017.37

[8] Jindal A, Amir M. Automatic classification of handwritten and printed text in ICR boxes. Souvenir of the 2014 IEEE International Advance Computing Conference, IACC 2014. Piscataway: IEEE, 2014: 1028-1032. DOI:10.1109/IAdCC.2014.6779466

[9] Malakar S, Das R K, Sarkar R, et al. Handwritten and printed word identification using gray-scale feature vector and decision tree classifier. Procedia Technology, 2014, 10: 831-839. DOI:10.1016/J.PROTCY.2013.12.428

[10] Saidani A, Echi A K. Pyramid histogram of oriented gradient for machine-printed/handwritten and Arabic/Latin word discrimination. Proceedings of 2014 6th International Conference on Soft Computing and Pattern Recognition (SoCPaR). Tunis, 2014, 267-272. DOI:10.1109/SOCPAR.2014.7008017

[11] Kavallieratou E, Stamatatos S, Antonopoulou H. Machine-printed from handwritten text discrimination. Proceedings of Ninth International Workshop on Frontiers in Handwriting Recognition. Piscataway: IEEE, 2004: 312-316. DOI:10.1109/IWFHR.2004.65

[12] Saba T, Almazyad A S, Rehman A. Language independent rule based classification of printed & handwritten text. Proceedings of 2015 IEEE International Conference on Evolving and Adaptive Intelligent Systems. Piscataway: IEEE, 2015: 1-4. DOI:10.1109/EAIS.2015.7368806

[13] Perveen N, Kumar D, Bhardwaj I. An overview on template matching methodologies and its applications. International Journal of Research in Computer and Communication Technology, 2013, 2(10): 988-995.

[14] Jain A K, Bhattacharjee S. Text segmentation using gabor filters for automatic document processing. Machine Vision and Applications, 1992, 5(3): 169-184. DOI:10.1007/BF02626996

[15] Kumar S, Gupta R, Khanna N, et al. Text extraction and document image segmentation using matched wavelets and MRF model. IEEE Transactions on Image Processing, 2007, 16(8): 2117-2128. DOI:10.1109/TIP.2007.900098

[16] Guo J K, Ma M Y. Separating handwritten material from machine printed text using hidden Markov models. Proceedings of the Sixth International Conference on Document Analysis and Recognition. Piscataway: IEEE, 2001: 439-443. DOI:10.1109/ICDAR.2001.953828

[17] Barlas P, Adam S, Chatelain C, et al. A typed and handwritten text block segmentation system for heterogeneous and complex documents. Proceedings of 2014 11th IAPR International Workshop on Document Analysis Systems. Tours, 2014, 46-50. DOI:10.1109/DAS.2014.39

[18] Dutly N, Slimane F, Ingold R. PHTI-WS: A printed and handwritten text identification web service based on FCN and CRF post-processing. Proceedings of 2019 International Conference on Document Analysis and Recognition Workshops. Sydney, 2019, 20-25. DOI:10.1109/ICDARW.2019.10033

[19] Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 39(4): 640-651. DOI:10.1109/TPAMI.2016.2572683

[20] Jo J, Koo H I, Soh J W, et al. Handwritten text segmentation via end-to-end learning of convolutional neural networks. Multimedia Tools and Applications, 2020, 79: 32137-32150. DOI:10.1007/s11042-020-09624-9

[21] Ronneberger O, Fischer P, Brox T. U-Net: Convolutional networks for biomedical image segmentation. Proceedings of International Conference on Medical Image Computing and Computer-Assisted Intervention. Ithaca: Cornell University, 2015: 234-241. DOI:10.48550/arXiv.1505.04597

[22] Fang H. Semantic segmentation of PHT based on improved DeeplabV3+. Mathematical Problems in Engineering, 2022. Article ID 6228532. DOI:10.1155/2022/6228532

[23] Chen L C, Zhu Y, Papandreou G, et al. Encoder-decoder with atrous separable convolution for semantic image segmentation. Proceedings of the 2018 European Conference on Computer Vision. Ithaca: Cornell University, 2018: 833-851. DOI:10.48550/arXiv.1802.02611

[24] Chen L C, Papandreou G, Kokkinos I, et al. Semantic image segmentation with deep convolutional nets and fully connected CRFs. Computer Science, 2014, 4: 357-361. DOI:10.48550/arXiv.1412.7062

[25] Chen L C, Papandreou G, Kokkinos I, et al. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018, 40(4): 834-848. DOI:10.1109/TPAMI.2017.2699184

[26] Chen L C, Papandreou G, Schroff F, et al. Rethinking atrous convolution for semantic image segmentation. Ithaca: Cornell University, 2017. DOI:10.48550/arXiv.1706.05587

[27] Chollet F. Xception: Deep learning with depthwise separable convolutions. Proceedings of IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2017: 1800-1807. DOI:10.48550/arXiv.1610.02357

[28] Howard A G, Zhu M, Chen B, et al. Mobilenets: Efficient Convolutional Neural Networks for Mobile Vision Applications. Ithaca: Cornell University, 2017. DOI:10.48550/arXiv.1704.04861

[29] Wang M, Liu B, Foroosh H. Design of efficient convolutional layers using single intra-channel convolution, topological subdivisioning and spatial "bottleneck" structure. Computer Vision and Pattern Recognition, 2016.

[30] He K, Zhang X, Ren S, et al. Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2016: 770-778.

[31] Yu F, Koltun V. Multi-scale contexts aggregation by dilated convolutions. International Conference on Learning Representations. Ithaca: Cornell University, 2016: 1-13. DOI:10.48550/arXiv.1511.07122

[32] Sermanet P, Eigen D, Zhang X, et al. Overfeat: Integrated recognition, localization and detection using convolutional networks. Ithaca: Cornell University, 2013. DOI:10.48550/arXiv.1312.6229

[33] Giusti A, Ciresan D, Masci J, et al. Fast image scanning with deep max-pooling convolutional neural networks. Proceedings of the IEEE International Conference on Image Processing. Piscataway: IEEE, 2013: 4034-4038. DOI:10.1109/ICIP.2013.6738831

[34] Papandreou G, Kokkinos I, Savalle P A. Modeling local and global deformations in deep learning: Epitomic convolution, multiple instance learning, and sliding window detection. Proceedings of 2015 IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2015: 390-399. DOI:10.1109/CVPR.2015.7298636

[35] He K, Zhang X, Ren S, et al. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Transactions on Pattern Analysis & Machine Intelligence, 2015, 37(9): 1904-1916. DOI:10.1109/TPAMI.2015.2389824

[36] Xie G, Yin F, Zhang X, et al. Document dewarping with control points. Proceedings of International Conference on Document Analysis and Recognition. Switzerland: Springer, Cham, 2022: 466-480. DOI:10.1007/978-3-030-86549-8_30

2024, Vol. 31
