2. Department of Information Technology, Gokaraju Rangaraju Institute of Engineering and Technology, Kukatpally, Hyderabad 500090, Telangana, India;

3. Department of Computer Science and Engineering, Koneru Lakshmaiah Education Foundation, Vaddeswaram, Guntur 522302, Andhra Pradesh, India

Recommendation systems play a significant role in e-commerce websites. A recommender system serves the user's interests, since many products are now bought online and user demand is growing for the enormous number of items available across websites. These websites use recommender systems to filter items so that specific items can be found more easily according to the user's interests. A recommendation system is a form of information filtering technique employed to predict the rating or preference a user might assign to a product. User actions and feedback can be stored in the recommender database. Recommender data is gathered from the user either implicitly or explicitly. Implicit acquisition in a movie recommendation system uses the user's behavior while watching movies. Explicit acquisition in a movie recommendation system uses the user's previous ratings or history. Several companies have deployed recommendation systems to guide their customers. Recommender systems have contributed to the economy of e-commerce websites such as Amazon, Netflix, Pandora, and Yahoo. Machine learning is an application of artificial intelligence that enables systems to learn and improve from experience without explicit programming. For instance, a computer program is said to learn from experience (E) with respect to a set of tasks (T) if its performance on those tasks, as measured by P, improves with accumulated experience. Machine learning uses its algorithms to carry out these tasks. Data processing is the task of converting raw data into a usable and desired form such as graphs, tables, images, charts, animations, and more, depending on the requirements of the machine. This whole process should be carried out in a very structured way. 
The basic steps in data processing are data collection, data exploration and treatment, data analysis and transformation, data preparation, and data experimentation. Data visualization uses a variety of static and interactive visuals in a specific context to help people understand and make use of large amounts of data. Data that is usually shown in text form is visualized to reveal patterns, trends, and relationships. Data visualization uses statistical graphics, plots, information graphics, and other tools for clear and effective communication. It helps to identify the areas that need more attention. Visualization influences modeling in several ways, for example in the EDA (Exploratory Data Analysis) stage. The KNN algorithm is one of the simplest algorithms for classification. It is a non-parametric method, which means that when classifying data points we make no assumptions about the distribution of the data (unlike methods such as linear regression or the Gaussian Mixture Model). KNN is also referred to as a lazy learning algorithm. Regression, on the other hand, is a supervised learning technique employed to identify relationships among variables, allowing us to predict a continuous output variable from one or more predictor variables. Regression fits a line or curve to the data points on a scatter plot so that the vertical distance between the data points and the regression line is minimized. Regression depends on the hypothesis, which may be linear, quadratic, polynomial, non-linear, and so forth. In the training stage, the hidden parameters are optimized with respect to the input values presented during training. The optimization procedure is called the gradient descent algorithm. 
Once the hypothesis parameters are trained, the same hypothesis with the trained parameters is used to predict the output for real input values. By performing regression, we can determine the most important factor, the least important factor, and how each factor affects the others. Linear regression is a statistical regression method. It is one of the simplest algorithms and shows the relationship between continuous variables. Linear regression models a linear relationship between the independent variable and the dependent variable. Simple linear regression has a single input, and multiple linear regression has more than one input. The logistic regression algorithm works with categorical variables such as 0 or 1, Yes or No, and True or False. It is a predictive analysis algorithm that works on the concept of probability. Logistic regression uses the sigmoid function, which maps a real-valued score to a probability between 0 and 1 and is used to model the data. Polynomial regression is a type of regression that models non-linear datasets using a linear model. It is similar to multiple linear regression. In polynomial regression, the original features are transformed into polynomial features of a given degree and then modeled using a linear model.
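The polynomial feature transformation described above can be illustrated with a minimal sketch. The function names and the coefficient values are hypothetical and chosen only for illustration; the point is that the prediction remains a weighted sum of the expanded features.

```python
def polynomial_features(x, degree):
    """Expand a single feature x into [1, x, x**2, ..., x**degree]."""
    return [x ** d for d in range(degree + 1)]


def predict(coefficients, x):
    """Linear model on the expanded features: sum of w_d * x**d."""
    features = polynomial_features(x, len(coefficients) - 1)
    return sum(w * f for w, f in zip(coefficients, features))


# Hypothetical coefficients for y = 1 + 0*x + 2*x**2 (illustration only)
coefficients = [1.0, 0.0, 2.0]
print(polynomial_features(3.0, 2))
print(predict(coefficients, 3.0))
```

Because `predict` is a plain dot product between the coefficients and the expanded features, any linear fitting procedure can be reused once the features have been expanded.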

The model is still linear because it is linear in the coefficients, even though the features are quadratic or of higher degree. It differs from multiple linear regression in that, in polynomial regression, a single feature appears with several degrees rather than several variables appearing with the same degree. The support vector machine is a supervised learning algorithm that can be used for both regression and classification problems. Support vector regression works with continuous variables. Some keywords used in support vector regression are the following: kernel, hyperplane, boundary line, and support vectors. The main goal of support vector regression is to include as many data points as possible within the boundary lines, and the hyperplane (best-fit line) should contain the maximum number of data points. Decision trees can also be used to solve both classification and regression problems, and they handle both categorical and numerical data. Decision tree regression builds a tree-like structure in which each internal node represents a test on an attribute. A decision tree is built starting from the root node (the dataset), which splits into left and right child nodes (subsets of the dataset). For example, such a model might try to predict a person's choice between sports cars and luxury cars. Random forest regression is an ensemble learning method that combines multiple decision trees and predicts the final output as the average of the tree outputs. The combined trees are called base models. Random forest regression uses the bagging (bootstrap aggregation) technique of ensemble learning, in which the decision trees run in parallel and do not interact with one another. Overfitting can be reduced by building the trees on random subsets of the dataset. Classification is the process of assigning a given set of data to given classes. It can be performed on either structured or unstructured data. 
The process starts with predicting the class of given data points. Classification specifies the class to which data elements belong and is best used when the output has finite and discrete values. It also predicts a class for an input variable. Clustering is a form of unsupervised learning. It is a process for finding meaningful structure, explanatory underlying processes, generative features, and groupings. Clustering is the task of dividing the data points into several groups such that data points in the same group are more similar to one another than to data points in other groups. It is essentially a grouping of objects based on similarity and dissimilarity. Various deep learning applications like forgery detection[1], text recognition[2], agriculture disease prediction[3], cancer prediction[4], and regression techniques[5-6] play a vital role in the research community. The scope of this approach is content-based recommendations, user engagement, scalability, and simplicity.

Motivation:

1) Enhanced user experience: A well-designed video recommendation system powered by KNN can significantly improve user experience by offering personalized content, leading to increased user satisfaction and prolonged platform engagement.

2) Content diversity and discovery: Recommending videos based on similarities can expose users to a variety of content they might not have discovered otherwise, expanding their viewing horizons.

3) Addressing cold start problem: KNN can help address the 'cold start' problem for new users with limited viewing history. It can suggest videos based on their content without needing extensive user data.

4) Robustness in sparse data environments: KNN is relatively robust in handling sparse data, making it suitable for scenarios where user interaction data is limited or sparse.

5) Explainability: KNN offers transparency in its recommendations. Users can understand recommendations based on the similarity of video content, contributing to better trust and transparency in the recommendation process.

6) Faster prototyping and testing: Its simplicity and ease of implementation enable quicker prototyping and testing of recommendation strategies before deploying more complex models.

The KNN algorithm has many advantages, including simplicity and intuitiveness, no training phase, content-agnostic operation, a non-parametric model, dynamic adaptability, and sparse data handling. It also has limitations, such as scalability issues on large datasets and sensitivity to the choice of distance metric. Therefore, the choice of recommendation algorithm should be based on the specific needs and characteristics of the recommendation system and dataset.

1 Literature Survey

Mohamed et al.[7] described three approaches: content-based filtering, collaborative filtering, and hybrid filtering. These draw on the user's past behavior, interests, and similarities with other user profiles. A recommender system requires a very large dataset, such as MovieLens-like data. The paper examines recommender system challenges such as cold start, scalability, privacy, shilling attacks, and novelty. It also gives solutions to overcome these challenges and discusses their benefits. Some of the sites that use recommender systems are Netflix, Amazon, Pandora, and Yahoo. Ahuja et al.[8] built a movie recommender system using K-means clustering and K-Nearest Neighbor algorithms. A recommendation system collects data about the user's preferences either implicitly or explicitly. The dataset was taken from Kaggle, and the system was implemented in Python. The output was obtained as various values of Root Mean Squared Error (RMSE). The RMSE of the proposed technique was better than that of existing techniques, with fewer clusters. Sentiment analysis could be used in the future to improve the efficiency of the movie recommendation system. Kumar et al.[9] presented a movie recommender system based on K-means clustering, content-based filtering, and collaborative filtering. The recommended movie list is sorted by user rating. The ratings and votes of users are divided into three levels: minimum, medium, and maximum. The paper applies pre-filtering by genre, actor, director, year, and rating before applying the K-means algorithm. For data collection, they provided a questionnaire. K-means clustering is used to increase the precision of the recommender system. The system was developed in PHP using Dreamweaver 6.0 and Apache Server 2.0. 
Lenhart et al.[10] proposed a sports news recommender system that used three filtering techniques: content-based, collaborative, and hybrid filtering. Sports news readers often have a strong emotional attachment to a chosen team or player. The authors developed a dashboard and integrated it into a website. The system builds user profiles from the implicit feedback given by users when reading articles. This approach is accurate but lacks diversity. Hybrid filtering provides the needed diversity. The paper also discusses recommender system challenges such as cold start and gives a solution. Ponnam et al.[11] proposed a movie recommender system using the item-based collaborative filtering technique, which is very popular because of its effectiveness. In this technique, the user-item rating matrix is examined first to find the relationships among different items. These relationships are then used to compute recommendations for the user. Recommender systems are used to predict the rating a user would tend to give an item. Traditional collaborative filtering systems can produce good recommendations even for wide-ranging problems, whereas other techniques such as content-based filtering suffer from poor accuracy, scalability, data sparsity, and large prediction errors. Luo et al.[12] elaborated on an efficient approach to collaborative filtering for recommender systems using non-negative matrix factorization. Collaborative filtering is one of the most efficient techniques for building recommender systems. Matrix Factorization (MF)-based approaches have proven to be highly accurate and scalable. This study focuses on developing a collaborative filtering model based on Non-Negative Matrix Factorization (NMF) using a single-component approach. 
The concept involves exploring the non-negative update process dependent on each latent component rather than global feature matrices. RSNMF (Restricted Single-component Non-negative Matrix Factorization) proves particularly suitable for addressing collaborative filtering challenges with the constraint of non-negativity. Experimental results on extensive industrial datasets demonstrate that RSNMF achieves high accuracy with low computational complexity. Halder et al.[13] proposed a movie swarm mining concept. In this paper, a cloud-based environment generates a list of recommended movies based on the user's profile information. This is used to address the cold start problem of recommending items to new users, and to manage and increase viewings of the newest and most popular movies. The algorithm uses two pruning rules and frequent item mining. The system is implemented using content-based filtering and collaborative filtering techniques. The result underscores the importance of recommendation systems, which address the choice overload problem by giving users the most relevant items from a wide range of choices. Portugal et al.[14] introduced a novel recommender system based on personalized sentiment mining. They considered users' opinions and choices to make the recommendations. Several strategies, including unigrams, bigrams, Bernoulli, Naive Bayes, support vector machines, and random forests, were employed on two datasets (Yelp and MovieLens). The ALS (Alternating Least Squares) method and sentiment generation scheme proposed in this study resulted in lower RMSE compared to other strategies. However, as the number of users and items to recommend increases, there is a corresponding increase in time consumption, leading to computational overhead. 
Govind et al.[15] noted that the rise of online media sharing sites has introduced new challenges for program recommendation in online networks. However, there is a bottleneck in that the available viewing logs and user friendship networks are too limited to design effective recommendation algorithms. Accordingly, implementing an intelligent program recommendation system is important for these sites. The work proposes a novel model that turns to social networks and mines user preference information expressed in microblogs to evaluate the similarity between online movies and TV episodes. Moreover, the approach can address the cold start problem in movie and TV recommendation systems. Similar programs found from the social network are also used to suggest programs on other media devices. This work can be readily applied to online media streaming sites, where intelligent program recommendations can be made to users by mining microblogs. A sophisticated film recommendation system was put forth through cluster-level sentiment analysis on microblogs[16]. This study evaluated the resemblance between TV episodes and online movies. The proposed intelligent approach made strides in bridging the gap between TV and film audiences. The practical applications of this work extended to various domains, including recommendations for online TV and film programs, advertising and service suggestions, customization for home and mobile devices, and an insightful TV system integrated with social interaction. Ahmed et al.[17] developed a smart recommender system that leverages IoT data, user interactions, and textual reviews to provide personalized recommendations for IoT device usage. This can enhance user experiences, encourage device adoption, and optimize device settings to align with user preferences. 
Additionally, it aims to address the unique challenges and opportunities presented by IoT data and user feedback in the context of recommendation systems. Kumar et al.[18] presented a comprehensive overview of the development and implementation of the ML-APS for HR appraisal, including data collection and preprocessing, model selection, integration, ethical considerations, and evaluation. The author aims to demonstrate the potential benefits of using machine learning in HR appraisal while addressing its challenges and implications. Zhu et al.[19] designed, implemented, and evaluated a novel data security detection algorithm specifically tailored to IoT environments, leveraging the K-means clustering approach. The research aims to address the growing concerns surrounding data security in the context of IoT and provide a practical solution to enhance security measures in IoT systems. Gaddam et al.[20] designed and executed a comprehensive study comparing the performance of optimization algorithms on a generated dataset. The goal is to provide insights into the strengths and weaknesses of these algorithms under specific conditions, contributing to the field of optimization and aiding researchers and practitioners in selecting the most suitable algorithms for their particular tasks.

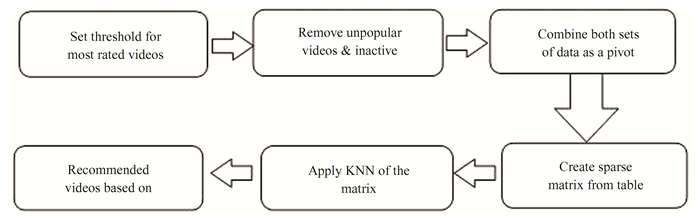

2 Methodology

To use a dataset in machine learning, the dataset is first divided into a training set and a test set. The training set is used to train the model, and the test set is used to test the accuracy of the model. Typically, the split is 80% training and 20% test. The data collected from users is real-world data, which requires preprocessing steps such as cleaning, integration, and transformation. For building this recommendation system, several preprocessing steps are used, such as word segmentation, stop-word removal, and representation of reviews. These are applied to preprocess the raw video reviews. Important features are extracted from the reviews given by different users. For each feature, aspect-oriented opinion mining is used to assess whether the review opinions are positive, neutral, or negative. In this step, data is collected from various users and stored in the database. Reviews from the review database are evaluated and classified as expressing an implicit or an explicit opinion. The opinions are identified from the reviews collected from the users and are classified into three types: positive, negative, and neutral. For instance, consider the movie Batman, regarded as one of the best movies. Some users value content such as technology and knowledge, find it useful, and give the movie a positive review. Some users object to crude language and violence and do not recommend the movie, which is a negative review. Some users judge the movie by its cast, whether good or bad; such reviews are treated as neutral. The opinions are detected based on several such attributes. The similarity among users is computed using KNN classification. Then, the matrix of ratings and users is constructed. 
Reviews are ranked in descending order. Fig. 1 shows the process flow diagram for recommending videos.

Fig.1 Process flow diagram for recommending videos
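The 80%/20% division into training and test sets mentioned at the start of this section can be sketched as follows. This is a minimal illustration that assumes the ratings are held as a list of (userId, movieId, rating) tuples; the function name, the seed, and the sample records are hypothetical and are not taken from the paper.

```python
import random


def train_test_split(records, test_fraction=0.2, seed=42):
    """Shuffle a copy of the records and hold out test_fraction of them."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    split_at = len(shuffled) - n_test
    return shuffled[:split_at], shuffled[split_at:]


# Hypothetical (userId, movieId, rating) records, for illustration only
ratings = [(u, m, 3.0) for u in range(10) for m in range(10)]
train, test = train_test_split(ratings)
print(len(train), len(test))
```

Fixing the seed keeps the split reproducible between runs, which makes accuracy figures comparable.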

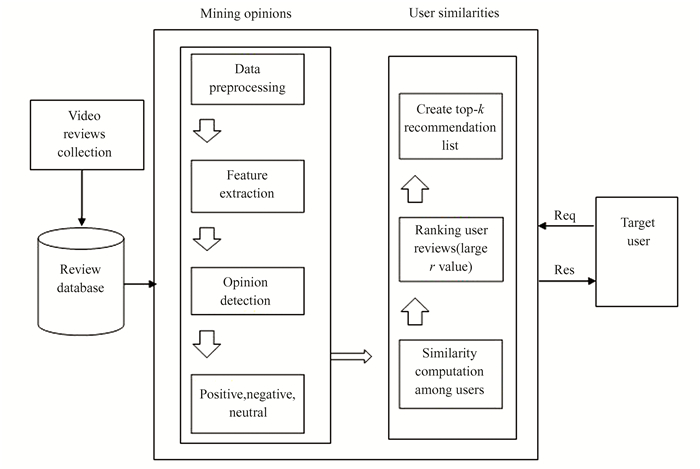

A larger value indicates that the users are more similar in terms of movie reviews. Then, the top-k recommendation list is created for the target user and returned to them. In this way, videos are recommended to the target user. The review database is the collection of reviews of several videos given by different users. These reviews are passed to opinion mining for data preprocessing, feature extraction, opinion detection, and similarity computation among users. Sparse matrices turn up a great deal in applied machine learning. SciPy provides tools for creating sparse matrices using various data structures, as well as tools for converting a dense matrix to a sparse matrix. Fig. 2 shows the main architecture of the video recommendation system. The following are the steps included in designing the recommendation system.

Fig.2 Architecture of the system

1) Mean Squared Error. Mean Squared Error (MSE) serves as a metric for assessing the accuracy of an estimator. A lower MSE value indicates more accurate predictions. Mathematically, MSE is defined as the mean of the squared deviations between predicted values and true values, with 'n' representing the total number of samples in the data.

$ \operatorname{MSE}=\frac{1}{n} \sum\limits_{i=1}^n\left(Y_i-\hat{Y}_i\right)^2 $
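The MSE definition above translates directly into code. The following is a minimal sketch; the function name and the sample ratings are assumptions made for illustration.

```python
def mean_squared_error(y_true, y_pred):
    """Mean of the squared deviations between true and predicted values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical true and predicted ratings, for illustration only
y_true = [4.0, 3.5, 5.0, 2.0]
y_pred = [3.5, 3.5, 4.0, 3.0]
print(mean_squared_error(y_true, y_pred))  # (0.25 + 0 + 1 + 1) / 4 = 0.5625
```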

2) Cross-Entropy. Cross entropy, rooted in information theory, is a widely utilized concept. It measures the number of bits needed to encode outcomes from the true distribution $y^{\prime}$ using a code built for the predicted distribution $y$.

$ H_{y^{\prime}}(y):=-\sum\limits_i\left[y_i^{\prime} \log \left(y_i\right)+\left(1-y_i^{\prime}\right) \log \left(1-y_i\right)\right] $

In binary classification, the second term, $\left(1-y_i^{\prime}\right) \log \left(1-y_i\right)$, penalizes false positives, that is, high predicted probabilities for samples whose true label is 0. When the targets are one-hot encoded over several classes, the loss can alternatively be expressed as:

$ H_{y^{\prime}}(y):=-\sum\limits_i y_i^{\prime} \log \left(y_i\right) $
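Both forms of the cross-entropy loss can be sketched in a few lines. This sketch assumes natural logarithms (so the result is in nats rather than bits) and clips predictions with a small `eps` to avoid `log(0)`; both choices, and the function names, are assumptions made for illustration.

```python
import math


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Two-term form: sums over samples with labels in {0, 1}."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # keep log() finite
        total += t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return -total


def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """One-term form: y_true is a one-hot vector, y_pred a probability vector."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


print(binary_cross_entropy([1, 0], [0.5, 0.5]))
print(categorical_cross_entropy([0, 1, 0], [0.2, 0.7, 0.1]))
```

With one-hot targets only the predicted probability of the true class contributes, which is why the second function reduces to a single logarithm.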

3) Hinge Loss. The hinge loss function is commonly associated with Support Vector Machines (SVMs) and is employed in the training of classifiers.

$ l(y)=\max (0, 1-t \cdot y) $

Here, t represents the desired output (+1 or -1), and y denotes the classifier score. Although hinge loss is a convex function, it is not differentiable at $t \cdot y=1$, which limits the available optimization methods; subgradient methods are commonly used instead.
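A minimal sketch of the hinge loss and one valid subgradient follows. The function names are hypothetical, and the choice of 0 as the subgradient at the kink is one of several valid options.

```python
def hinge_loss(t, y):
    """Hinge loss for target t in {-1, +1} and raw classifier score y."""
    return max(0.0, 1.0 - t * y)


def hinge_subgradient(t, y):
    """A subgradient of the hinge loss with respect to y (0 is chosen at the kink)."""
    return -t if t * y < 1 else 0.0


print(hinge_loss(1, 2.0))   # correct side of the margin: no loss
print(hinge_loss(1, 0.3))   # inside the margin: small loss
print(hinge_loss(-1, 0.5))  # wrong side: larger loss
```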

4) Huber loss. In statistics, the Huber loss serves as a loss function in robust regression, offering reduced sensitivity to outliers compared to the squared error loss. A modified version is occasionally utilized for classification purposes.
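The text does not give the Huber formula, so the sketch below uses the standard textbook definition: quadratic for residuals up to a threshold `delta` and linear beyond it. The parameter name `delta` and its default value are assumptions.

```python
def huber_loss(y_true, y_pred, delta=1.0):
    """Standard Huber loss: 0.5*r**2 if |r| <= delta, else delta*(|r| - 0.5*delta)."""
    residual = abs(y_true - y_pred)
    if residual <= delta:
        return 0.5 * residual ** 2
    return delta * (residual - 0.5 * delta)


print(huber_loss(0.0, 0.5))  # small residual: quadratic branch
print(huber_loss(0.0, 3.0))  # large residual: linear branch
```

The linear branch is what reduces the influence of outliers relative to the squared error, which grows quadratically without bound.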

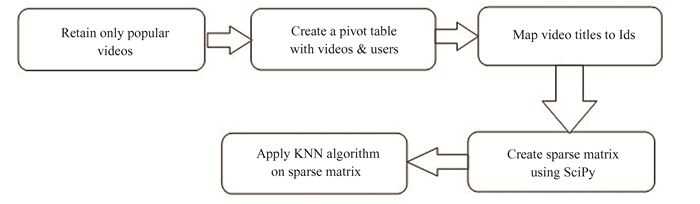

5) Processing the dataset. The datasets are processed by the phases shown in Fig. 3.

Fig.3 Processing of dataset

By setting a threshold, the dataset is divided into popular and unpopular videos based on the ratings given by the users. The pivot table is created after separating popular from unpopular videos and active from inactive users. The pivot table consists of a large number of rows and columns, indexed by video titles and userIds. A sparse matrix is then created from the pivot table using SciPy, an open-source Python library for technical computing. A sparse matrix is a matrix with few non-zero elements and a large number of zero elements. Next, video titles are associated with userIds and KNN classification is applied. The K-Nearest Neighbors algorithm is a straightforward approach that stores all existing instances and categorizes new instances by their similarity to the stored ones, measured with a distance function. Neighbors can be measured using the Minkowski distance of order p: p=1 gives the Manhattan distance and p=2 gives the Euclidean distance. Based on the distances, the nearest data points are identified. From those data points, videos are recommended to the user based on the videos the user searched for.
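The relationship between the Minkowski, Manhattan, and Euclidean distances, and the way nearest neighbors are picked from them, can be sketched as follows. The function names and sample points are hypothetical; the order of the Minkowski distance is written `p` here to keep it distinct from the number of neighbors `k`.

```python
def minkowski_distance(a, b, p=2):
    """Minkowski distance of order p (p=1: Manhattan, p=2: Euclidean)."""
    if p < 1:
        raise ValueError("p must be at least 1")
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)


def k_nearest(query, points, k, p=2):
    """Return the indices of the k points closest to query."""
    order = sorted(range(len(points)),
                   key=lambda i: minkowski_distance(query, points[i], p))
    return order[:k]


print(minkowski_distance([0, 0], [3, 4], p=1))  # Manhattan: 3 + 4
print(minkowski_distance([0, 0], [3, 4], p=2))  # Euclidean: sqrt(9 + 16)

# Hypothetical rating vectors, for illustration only
points = [[0, 0], [1, 1], [5, 5], [0.5, 0]]
print(k_nearest([0, 0], points, k=2))
```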

3 Results and Discussion

The results of this paper show that the recommender system performs well in summarizing the findings and similarities for an active user based on his or her interests. Our results cast new light on the use of recommender systems in business applications. Table 1 lists the Top 5 movies. Table 2 shows the Top 5 ratings for the respective movieIds.

Table 1 List of results: Top 5 movies

Table 2 Top 5 ratings for the respective movieIds

Unique ratings in sorted order:

array([0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]);

Total number of movies: 9742;

Total number of ratings: 100836;

Total number of unique users who provided ratings: 610.

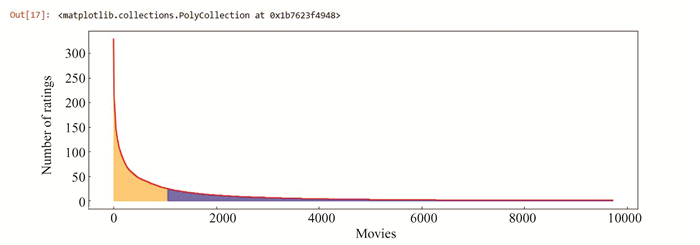

Parameters 1:

On the X-axis: Movies;

1 unit = 2000 movies;

On the Y-axis: Number of ratings;

1 unit = 50 ratings;

Threshold values: popular_movies_thresh = 25;

active_users_thresh = 100.

From Fig. 4, we can differentiate between popular and unpopular movies with the help of a divider point obtained by passing the threshold values. The head of the graph, shown in orange, indicates popular movies, and the long tail of the graph, shown in red, indicates unpopular movies.

Fig.4 Long-tail graph for visualizing popular and unpopular movies based on parameters 1

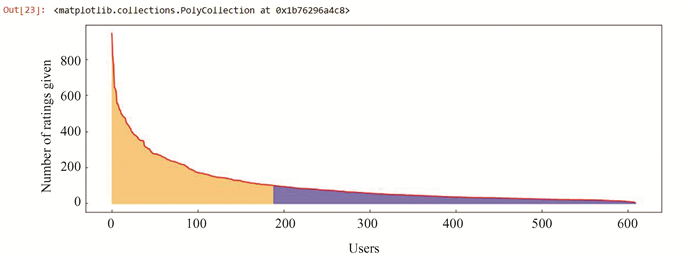

Parameters 2:

On the Y-axis: Number of ratings given;

1 unit = 200 ratings;

On the X-axis: Users;

1 unit = 100 users;

Threshold values: popular_movies_thresh = 25;

active_users_thresh = 100.

From Fig. 5, we can differentiate between active and inactive users with the help of a divider point obtained by passing the threshold values. The head of the graph, shown in orange, indicates active users, and the long tail of the graph, shown in red, indicates inactive users.

Fig.5 Long-tail graph for visualizing active and inactive users based on parameters 2

Total number of ratings before discarding unpopular movies: 100836;

Total number of ratings for popular movies: 62518;

Number of movies before discarding unpopular movies: 9724;

Number of movies after discarding unpopular movies: 1050;

Number of rows in ratings data after removing unpopular movies: 62518;

Number of rows in ratings data after removing unpopular movies and inactive users: 44588;

Number of users in data before removing inactive users: 610;

Number of users in data after removing inactive users: 188;

Number of movies (rows) in the pivot table: 1050;

Number of users in the pivot table: 188.
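The filtering steps behind the counts above can be sketched in plain Python. This is an illustrative reconstruction, not the authors' code: it assumes that a movie is popular and a user is active when their rating count is at least the threshold, and that user activity is counted after unpopular movies have been removed. The function names and the sample data are hypothetical.

```python
from collections import Counter


def filter_ratings(ratings, popular_movies_thresh, active_users_thresh):
    """Keep ratings of popular movies, then keep ratings by active users.

    ratings is a list of (userId, movieId, rating) tuples.
    """
    movie_counts = Counter(movie for _, movie, _ in ratings)
    popular = [r for r in ratings if movie_counts[r[1]] >= popular_movies_thresh]
    user_counts = Counter(user for user, _, _ in popular)
    return [r for r in popular if user_counts[r[0]] >= active_users_thresh]


def build_pivot(ratings):
    """Build a movie-by-user table; missing ratings are filled with 0."""
    movies = sorted({m for _, m, _ in ratings})
    users = sorted({u for u, _, _ in ratings})
    row = {m: i for i, m in enumerate(movies)}
    col = {u: j for j, u in enumerate(users)}
    table = [[0.0] * len(users) for _ in movies]
    for u, m, r in ratings:
        table[row[m]][col[u]] = r
    return movies, users, table


# Tiny hypothetical dataset with thresholds of 2 instead of 25 and 100
sample = [(1, 10, 4.0), (2, 10, 5.0), (3, 10, 3.0),
          (1, 20, 2.0), (2, 20, 4.5), (3, 30, 1.0)]
kept = filter_ratings(sample, popular_movies_thresh=2, active_users_thresh=2)
movies, users, table = build_pivot(kept)
print(movies, users, table)
```

Most entries of such a table are zero on a real dataset, which is why the pivot table is converted to a sparse matrix before the neighbor search.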

Applying the K-Nearest Neighbor algorithm:

NearestNeighbors(algorithm='brute', leaf_size=30, metric='cosine', metric_params=None, n_jobs=None, n_neighbors=5, p=2, radius=1.0).

Table 3 shows the top 5 rows of the pivot table obtained after applying the KNN algorithm.

Table 3 Top 5 rows in a pivot table
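A brute-force cosine neighbor search of the kind configured above can be sketched with SciPy and scikit-learn. The 4x4 rating matrix, the placeholder titles, and the `recommend` helper are hypothetical and far smaller than the 1050x188 pivot table reported in the results; the sketch only shows how the pieces fit together.

```python
import numpy as np
from scipy.sparse import csr_matrix
from sklearn.neighbors import NearestNeighbors

# Hypothetical movie-by-user rating matrix (rows: movies, columns: users);
# 0 means "not rated".
pivot = np.array([
    [5.0, 4.0, 0.0, 0.0],
    [4.5, 4.0, 0.0, 1.0],
    [0.0, 0.0, 5.0, 4.0],
    [0.0, 1.0, 4.5, 5.0],
])
titles = ["Movie A", "Movie B", "Movie C", "Movie D"]  # placeholder titles

# Store only the non-zero entries
sparse_ratings = csr_matrix(pivot)

# Same metric and search strategy as in the reported configuration
model = NearestNeighbors(metric="cosine", algorithm="brute", n_neighbors=2)
model.fit(sparse_ratings)


def recommend(movie_index, n_recommendations=1):
    """Return (title, cosine distance) pairs for the movies closest to movie_index."""
    distances, indices = model.kneighbors(
        sparse_ratings[movie_index], n_neighbors=n_recommendations + 1)
    pairs = [(titles[i], float(d))
             for d, i in zip(distances[0], indices[0]) if i != movie_index]
    return pairs[:n_recommendations]


print(recommend(0))
```

One extra neighbor is requested because the query movie is its own nearest neighbor at distance zero and has to be dropped from the result.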

4 Conclusions

A recommender system allows a user to select choices from a given set of attributes and then recommends a movie list based on an information filtering process, which is used to predict the "rating" or "preference" using the user's past ratings, history, interests, IMDB rating, etc. The proposed work introduces various concepts related to machine learning, and the K-Nearest Neighbor algorithm is implemented on the MovieLens dataset. This approach uses the information provided by users, analyzes it, and then recommends the movies best suited to the user at that time to obtain the best-optimized result. Recommender systems have contributed to the economy of e-commerce websites such as Amazon.com and Netflix. However, recommender systems are not restricted to movies; they are also widely used in business applications such as food ordering, stock market investing, website recommendation, and point of sale. In the near future, datasets will be updated continuously, enabling online real-time rating prediction for users whose habits change day by day. Thus, the system will meet the expectations of an active user's preferences.

[1] Santhosh Kumar B, Cristin R, Karthick K, et al. Study of shadow and reflection based image forgery detection. Proceedings of the 2019 International Conference on Computer Communication and Informatics. Coimbatore, 2020: 1-5. DOI: 10.1109/ICCCI.2019.8822057.

[2] Santhosh Kumar B, Daniya T, Ajayan J. Breast cancer prediction using machine learning algorithms. International Journal of Advanced Science and Technology, 2020, 29(3): 7819-7828.

[3] Daniya T, Kumar S, Cristin R. Least square estimation of parameters for linear regression. International Journal of Control and Automation, 2020, 13(2): 447-452.

[4] Daniya T, Geetha M, Suresh Kumar K. Classification and regression trees with gini index. Advances in Mathematics: Scientific Journal, 2020, 9(10): 8237-8247. DOI: 10.37418/amsj.9.10.53.

[5] Daniya T, Vigneshwari S. A review on machine learning techniques for rice plant disease detection in agricultural research. International Journal of Advanced Science and Technology, 2019, 28(13): 49-62.

[6] Geetha M, Pooja R C, Swetha J, et al. Implementation of text recognition and text extraction on formatted bills using deep learning. International Journal of Control and Automation, 2020, 13(2): 646-651.

[7] Mohamed M H, Khafagy M H, Ibrahim M H. Recommender systems challenges and solutions survey. Proceedings of the 2019 International Conference (ITCE'2019). Piscataway: IEEE, 2019: 149-155. DOI: 10.1109/ITCE.2019.8646645.

[8] Ahuja R, Solanki A, Nayyar A. Movie recommender system using K-Means clustering and K-Nearest neighbour. Proceedings of the 2019 9th International Conference on Cloud Computing. Noida, 2019: 263-268. DOI: 10.1109/CONFLUENCE.2019.8776969.

[9] Kumar M, Yadav D K, Singh A. A movie recommender system: MOVREC. International Journal of Computer Applications, 2015, 124(3): 7-11. DOI: 10.5120/ijca2015904111.

[10] Lenhart P, Herzog D. Combining content-based and collaborative filtering for personalized sports news recommendations. CBRecSys@RecSys, 2016, 2016: 45-91.

[11] Ponnam L T, Deepak Punyasamudram S, Nallagulla S N, et al. Movie recommender system using item-based collaborative filtering technique. Proceedings of the 2016 International Conference on Emerging Trends in Engineering, Technology and Science (ICETETS). Piscataway: IEEE, 2016: 1-5. DOI: 10.1109/ICETETS.2016.7602983.

[12] Luo X, Zhou M, Xia Y, et al. An efficient non-negative matrix-factorization-based approach to collaborative filtering for recommender systems. IEEE Transactions on Industrial Informatics, 2014, 10(2): 1273-1284. DOI: 10.1109/TII.2014.2308433.

[13] Halder S, Sarkar A M J, Lee Y-K. Movie recommendation system based on movie swarm. 2012 Second International Conference on Cloud and Green Computing. Xiangtan, 2012: 804-809. DOI: 10.1109/CGC.2012.121.

[14] Portugal I, Alencar P, Cowan D. The use of machine learning algorithms in recommender systems. arXiv: 1511.05263v4. DOI: 10.48550/arXiv.1511.05263.

[15] Govind B S S, Tene R, Saideep K L. Novel recommender systems using personalized sentiment mining. 2018 IEEE International Conference on Electronics, Computing, and Communication Technologies. Bangalore, 2018: 1-5. DOI: 10.1109/CONECCT.2018.8482394.

[16] Wang J-H, Liu T-W. Improving sentiment rating of movie review comments for recommendation. 2017 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-TW). Taipei, China, 2017: 433-434. DOI: 10.1109/ICCE-China.2017.7991181.

[17] Ahmed M, Ansari M D, Singh N, et al. Rating-based recommender system based on textual reviews using IoT smart devices. Mobile Information Systems, 2022, 2022: Article ID 2854741. DOI: 10.1155/2022/2854741.

[18] Kumar M R, Gunjan V K, Ansari M D, et al. Machine learning-based-HR appraisal system (ML-APS). International Journal of Applied Management Science, 2023, 15(2): 102-116. DOI: 10.1504/IJAMS.2023.131669.

[19] Zhu J X, Huo L N, Ansari M D, et al. Research on data security detection algorithm in IoT based on K-means. Scalable Computing Practice and Experience, 2021, 22(2): 149-159. DOI: 10.12694/scpe.v22i2.1880.

[20] Gaddam D K R, Ansari M D, Vuppala S, et al. A performance comparison of optimization algorithms on a generated dataset. ICDSMLA, 2020, 1407-1415. DOI: 10.1007/978-981-16-3690-5_135.

2024, Vol. 31
