2. Department of Computer Science and Engineering, Anil Neerukonda Institute of Technology & Sciences, Vishakhapatnam 531162, India

Effort estimation is a crucial component of software development projects, informing risk management, project scheduling, and resource allocation[1]. An accurate assessment of the required effort is essential to project success and to avoiding cost and schedule overruns[2]. Traditional effort estimation methodologies often fail to capture the full complexity of software projects, which can lead to inaccurate estimates[3]. Machine learning techniques have emerged as valuable tools for improving the accuracy of effort estimation models[4]. These methods analyze historical project data to find trends and relationships that support more accurate predictions. To improve software effort estimation, this paper presents a hybrid model that combines two well-known machine learning methods, Random Forest (RF) and Long Short-Term Memory (LSTM). LSTM, a recurrent neural network architecture, is notable for its capacity to identify temporal dependencies in sequential data. This makes it well suited to the time-based aspects of software development data, such as project metrics gathered over several periods[5]. LSTM uses the temporal correlations between these features to extract meaningful representations that support more precise effort estimates.

In contrast, RF is an ensemble learning technique that combines several decision trees to manage non-linear relationships and feature interactions. RF is quite good at identifying intricate patterns and dependencies in data[6]. Our hybrid model combines the benefits of both techniques and increases overall estimation accuracy by fusing the Random Forest ensemble of decision trees with the LSTM outputs. A large dataset of past project data, including attributes like lines of code, complexity, team size, and project duration, is used to assess the suggested hybrid model. We evaluate the model's performance against widely used conventional estimation techniques by training and evaluating it on this dataset. Among the evaluation criteria are accuracy, dependability, and the capacity to convey the fundamental dynamics of software projects. This study's primary goal is to advance the field of software effort estimation by presenting a hybrid model that blends RF with LSTM. We aim to improve the precision and dependability of effort estimation by utilizing machine learning techniques. This will help stakeholders and project managers make better decisions about risk management, resource allocation, and project planning. The rest of this paper is structured as follows: Section 1 addresses the drawbacks of conventional methods and summarizes relevant work in software effort estimation. The technique and architecture of the suggested hybrid model are explained in Section 2. The experimental setup, dataset, and assessment measures are shown in Section 3. In Section 4, the analysis and results are presented. The work is finally concluded in Section 5, which also suggests directions for future research.

1 Related Work and Limitations of Traditional Techniques

Effort estimation has been studied extensively in software engineering, and many methods and strategies have been proposed over time. To estimate the work needed for software development projects, traditional methods frequently depend on mathematical models, historical data, and expert judgment[7]. However, these methods have limitations that can reduce their accuracy and usefulness. The primary drawback of traditional methods is their dependence on expert opinion, which can introduce subjective biases and variation[8]. Expert judgment-based estimation depends heavily on the expertise and experience of the individuals involved, and their predictions may not always align with the actual effort requirements. Moreover, that expertise may be limited to specific domains or contexts, leading to inaccurate estimates for unfamiliar or complex projects. Another limitation of traditional techniques is the assumption of linearity and simplicity in the relationships between effort and project features[9]. Software development projects are inherently complex and exhibit non-linear relationships between effort and factors such as lines of code, complexity, and team size. Traditional techniques often struggle to model these non-linear relationships, resulting in inaccurate estimates. Furthermore, traditional techniques often overlook the temporal nature of software development data. They treat historical project data as static inputs, disregarding any time-based dependencies and patterns, and so forgo temporal information that could improve effort estimates.

In recent years, machine learning techniques have shown promise in addressing the limitations of traditional effort estimation approaches. These techniques can learn patterns and relationships directly from the data, without relying solely on expert judgment or predefined mathematical models[9]. Machine learning models can capture non-linear relationships, handle temporal dependencies, and integrate multiple features simultaneously. In the context of software effort estimation, researchers have explored various machine learning algorithms and approaches[10]. Some studies have employed regression-based algorithms, such as Support Vector Regression (SVR) and Artificial Neural Networks (ANNs), to predict effort from historical project features. These models have shown improved accuracy compared to traditional techniques but may still struggle to capture temporal dependencies and non-linear relationships[11]. Our study proposes a hybrid model that combines the RF and LSTM algorithms to overcome these drawbacks. Recurrent neural networks such as LSTM are well suited to identifying temporal dependencies in sequential data. By considering the order and timing of historical project features, LSTM can extract meaningful representations that contribute to more accurate effort estimation. RF, on the other hand, uses an ensemble of decision trees to capture complex patterns and interactions among features. By combining LSTM and RF in our hybrid model, we aim to address the limitations of traditional techniques and improve the accuracy of effort estimation. The hybrid model's ability to capture both temporal dependencies and non-linear relationships makes it a promising approach for software effort estimation, providing project managers and stakeholders with more reliable estimates for resource planning and project management. The next section presents the methodology and architecture of the proposed hybrid model, detailing how LSTM and RF are integrated.

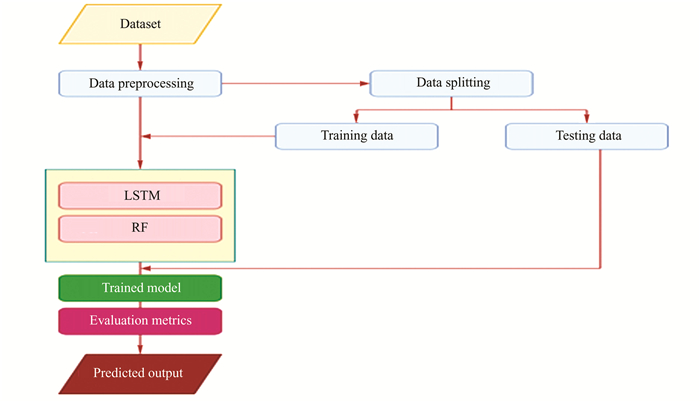

2 Methodology and Architecture of the Hybrid Model

This section describes the approach and structure of our hybrid model for estimating software effort, which uses the RF and LSTM algorithms. The proposed architecture flow chart is shown in Fig. 1. The hybrid model improves the precision and dependability of effort forecasts by utilizing the advantages of both techniques.

Fig.1 Proposed architecture flow chart

2.1 Data Preprocessing

Before training the hybrid model, it is essential to preprocess the software development data. This step involves handling missing values, normalizing numerical features, and encoding categorical variables. Additionally, the dataset is split into training and testing subsets, ensuring that the model's performance is evaluated on unseen data.
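The preprocessing steps above can be sketched in plain Python as follows. This is a minimal illustration, not the pipeline used in this study: the helper names (`impute_median`, `min_max_scale`, `one_hot`, `train_test_split`), the choice of median imputation, and the 80/20 split are all assumptions made for the example.

```python
import random
import statistics

def impute_median(column):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in column if v is not None]
    med = statistics.median(observed)
    return [med if v is None else v for v in column]

def min_max_scale(column):
    """Rescale values linearly to [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(column), max(column)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in column]

def one_hot(column):
    """Encode a categorical column as one indicator list per row."""
    categories = sorted(set(column))
    return [[1 if v == c else 0 for c in categories] for v in column]

def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle row indices and hold out `test_fraction` of the rows for testing."""
    idx = list(range(len(rows)))
    random.Random(seed).shuffle(idx)
    cut = len(rows) - int(round(len(rows) * test_fraction))
    return [rows[i] for i in idx[:cut]], [rows[i] for i in idx[cut:]]
```

In practice, the imputation and scaling statistics would normally be computed on the training subset only and then applied to the testing subset, so that no information from the test data leaks into training.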

2.2 LSTM Component

The LSTM component, the first stage of the hybrid model, is designed to capture the temporal dependencies found in the software development data[12]. LSTM, a recurrent neural network, uses gates and memory cells to store and update information over sequential input[13]. The input to the LSTM component consists of historical project features, such as lines of code, complexity, team size, and project duration, organized sequentially. The LSTM architecture learns from this sequential data, capturing patterns and dependencies that contribute to effort estimation. The LSTM component outputs representations that encode the temporal dynamics of the software development process.

Hyperparameters for LSTM:

1) Number of LSTM layers: 1 or 2 layers are commonly used.

2) Number of LSTM units (Neurons): This depends on the complexity of the data and the problem. A common practice is to start with smaller values (e.g., 32, 64) and gradually increase them if necessary.

3) Learning rate: This controls the step size during optimization. Common values range from 0.001 to 0.01.

4) Dropout rate: Regularization technique to prevent overfitting. Values between 0.2 and 0.5 are often used.

5) Activation function: The activation function used in LSTM units. The 'tanh' function is commonly used.

6) Batch size: Typically ranges from 32 to 128, depending on the available memory and dataset size. Here, batch size is 32.

7) Number of epochs: The number of times the entire dataset is passed forward and backward through the network during training. It can vary greatly depending on convergence and dataset size.
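To make the gate and memory-cell mechanism concrete, the following sketch implements one forward step of a single-unit LSTM in plain Python using the standard LSTM update equations. It is illustrative only: the parameter layout (`params`), the function names, and the use of a single hidden unit are assumptions for the example, and no training is shown. A practical model would be built in a deep learning framework with hyperparameters such as those listed above.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM.

    x       : list of input features at this time step
    h_prev  : previous hidden state (float)
    c_prev  : previous memory-cell state (float)
    params  : dict mapping gate name -> (input_weights, recurrent_weight, bias)
              (hypothetical layout chosen for this sketch)
    """
    def gate(name, activation):
        w, u, b = params[name]
        return activation(sum(wi * xi for wi, xi in zip(w, x)) + u * h_prev + b)

    f = gate("forget", sigmoid)        # how much of the old cell state to keep
    i = gate("input", sigmoid)         # how much new information to write
    g = gate("candidate", math.tanh)   # candidate values for the cell state
    o = gate("output", sigmoid)        # how much of the cell state to expose
    c = f * c_prev + i * g             # updated memory cell
    h = o * math.tanh(c)               # updated hidden state
    return h, c

def encode_sequence(sequence, params):
    """Run the LSTM over a sequence and return the final hidden state."""
    h, c = 0.0, 0.0
    for x in sequence:
        h, c = lstm_step(x, h, c, params)
    return h
```

The final hidden state returned by `encode_sequence` plays the role of the temporal representation that is passed on to later stages; with more hidden units it would be a vector rather than a single number.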

2.3 Feature Engineering and Integration

After the LSTM component, feature engineering techniques are applied to extract additional static features from the original input. These static features may include statistical summaries, derived metrics, or domain-specific indicators that provide supplementary information for effort estimation. The goal of feature engineering is to enrich the representation of the software development data and improve the model's performance[14]. The output of the LSTM component is combined with the engineered static features, creating an integrated feature representation. This integration allows the hybrid model to capture both the temporal dependencies learned by LSTM and the non-linear relationships among features learned by RF.
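As a hedged illustration of this integration step, the sketch below concatenates an LSTM output vector with simple statistical summaries of the input sequence. The specific summaries (per-feature mean and standard deviation) and the function names `static_summary` and `fuse` are assumptions for the example; the engineered features actually used may differ.

```python
import statistics

def static_summary(sequence):
    """Per-feature mean and population standard deviation over all time steps."""
    features = []
    for column in zip(*sequence):          # one column per project feature
        features.append(statistics.fmean(column))
        features.append(statistics.pstdev(column))
    return features

def fuse(lstm_representation, sequence):
    """Concatenate the LSTM output with the engineered static features."""
    return list(lstm_representation) + static_summary(sequence)
```

The fused vector has one entry per LSTM output value plus two entries per original feature, and would serve as a single input row for the RF component.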

2.4 Random Forest Component

The integrated feature representation is then passed to the RF component, an ensemble learning technique that combines several decision trees. RF uses this ensemble to identify intricate patterns, interconnections, and non-linear relationships in the software development data[15]. During the training phase, the RF model learns to map the integrated feature representation to the effort required for software development projects. It builds a collection of decision trees, each considering different subsets of features and making independent predictions. The final prediction is obtained by aggregating the individual decision tree predictions, providing a more accurate estimation of effort.

Hyperparameters for RF:

• Number of trees: Usually between 100 and 1000. Increasing the number of trees generally improves performance but also increases computational cost.

• Maximum depth: Controls the maximum depth of each decision tree in the forest. Values between 10 and 100 are common.

• Minimum samples split: The minimum number of samples required to split an internal node. Values around 2 to 10 are typical.

• Minimum samples leaf: The minimum number of samples required to be at a leaf node. Values around 1 to 5 are common.

• Maximum features: The number of features to consider when looking for the best split. 'sqrt' (square root of the total number of features) or 'log2' are often used.
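The following plain-Python sketch shows how the hyperparameters above shape a random forest for regression: each tree is grown on a bootstrap sample, each split considers a random subset of about sqrt(number of features) features, growth stops at `max_depth` or when a split would leave fewer than `min_leaf` samples in a child, and the forest prediction is the average of the tree predictions. It is a simplified teaching example with assumed function names, not the implementation used in this study; a library implementation would normally be preferred.

```python
import math
import random

def build_tree(rows, targets, depth, max_depth, min_leaf, n_feats, rng):
    """Grow a regression tree; a leaf is a float, a split is a 4-tuple."""
    leaf_value = sum(targets) / len(targets)
    if depth >= max_depth or len(rows) < 2 * min_leaf:
        return leaf_value
    best = None  # (sum of squared errors, feature index, threshold)
    for f in rng.sample(range(len(rows[0])), n_feats):
        for thr in sorted({r[f] for r in rows})[:-1]:
            left = [t for r, t in zip(rows, targets) if r[f] <= thr]
            right = [t for r, t in zip(rows, targets) if r[f] > thr]
            if len(left) < min_leaf or len(right) < min_leaf:
                continue
            ml, mr = sum(left) / len(left), sum(right) / len(right)
            sse = sum((t - ml) ** 2 for t in left) + sum((t - mr) ** 2 for t in right)
            if best is None or sse < best[0]:
                best = (sse, f, thr)
    if best is None:
        return leaf_value
    _, f, thr = best
    left = [(r, t) for r, t in zip(rows, targets) if r[f] <= thr]
    right = [(r, t) for r, t in zip(rows, targets) if r[f] > thr]
    grow = lambda part: build_tree([r for r, _ in part], [t for _, t in part],
                                   depth + 1, max_depth, min_leaf, n_feats, rng)
    return (f, thr, grow(left), grow(right))

def predict_tree(node, row):
    """Follow splits until a leaf (a float) is reached."""
    while isinstance(node, tuple):
        f, thr, left, right = node
        node = left if row[f] <= thr else right
    return node

def fit_forest(rows, targets, n_trees=25, max_depth=4, min_leaf=1, seed=0):
    """Train n_trees trees, each on a bootstrap sample of the data."""
    rng = random.Random(seed)
    n_feats = max(1, int(math.sqrt(len(rows[0]))))  # the 'sqrt' rule
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # sample with replacement
        forest.append(build_tree([rows[i] for i in idx], [targets[i] for i in idx],
                                 0, max_depth, min_leaf, n_feats, rng))
    return forest

def predict_forest(forest, row):
    """Aggregate by averaging the individual tree predictions."""
    return sum(predict_tree(t, row) for t in forest) / len(forest)
```

Because every leaf stores the mean of a subset of training targets, the averaged forest prediction always lies within the range of efforts seen during training, which is one reason RF cannot extrapolate beyond that range.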

2.5 Training and Evaluation of Models

The hybrid model is trained on a predefined dataset of historical project data with known effort values. The model's parameters are optimized through an iterative training process to reduce the discrepancy between the model's predictions and the actual effort values. Several metrics, including the coefficient of determination (R2), Mean Absolute Error (MAE), and Root Mean Square Error (RMSE), are used to evaluate the efficacy of the hybrid model. These metrics indicate how well the hybrid model forecasts, how reliable it is, and how accurate it is compared to traditional effort estimation methods. In addition, the hybrid model is compared to baseline models, such as expert judgment-based estimation or regression-based techniques, to assess its accuracy and robustness relative to them.

2.6 Deployment and Practical Implementation

Once the hybrid model is trained and evaluated, it can be deployed in practical software development projects to provide effort estimates. The model takes the relevant project features as input, processes them through the LSTM and RF components, and generates an estimate of the effort required for the project. This estimate can be used by project managers and stakeholders for resource allocation, project planning, and decision-making. The experimental setup, dataset, and assessment metrics used to assess the proposed hybrid model are described in Section 3.

Algorithm 1 Pseudocode of proposed Hybrid Model:

1) Initialize the LSTM model and Random Forest model with their respective hyperparameters.

2) Split the dataset into training and testing sets.

3) Preprocess the data:

a. Normalize or scale the features if necessary.

b. Perform any required feature engineering steps.

4) Train the LSTM model:

a. Initialize the LSTM model.

b. Define the architecture and hyperparameters (e.g., number of LSTM layers, hidden units, activation functions).

c. Fit the LSTM model to the training data.

d. Perform model evaluation and fine-tuning if needed.

5) Generate LSTM predictions:

Use the trained LSTM model to make predictions on the testing data.

6) Prepare data for RF:

Create a new dataset by combining the original features with the LSTM predictions as additional features.

7) Train the RF model:

a. Initialize the RF model.

b. Define the hyperparameters (e.g., number of trees, maximum depth, minimum samples for splitting).

c. Fit the RF model to the combined dataset.

d. Perform model evaluation and parameter tuning if necessary.

8) Evaluate the hybrid model:

a. Make predictions using the trained RF model on the testing data.

b. Use suitable assessment metrics (such as Mean Absolute Error or Root Mean Squared Error) to evaluate the hybrid model's performance.

9) Repeat steps 4)-8) using cross-validation if desired for more robust performance evaluation.

10) Report the results and analysis in the journal article, including the performance metrics, comparisons with baseline models, and any other relevant findings.
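The two-stage flow of Algorithm 1, including the optional cross-validation in step 9), can be sketched as follows. This is a structural outline under stated assumptions rather than the study's code: `fit_stage1` and `fit_stage2` are hypothetical callables standing in for the LSTM and RF training routines, each returning a `predict(row)` function, and the inputs are treated as flat feature rows. The sketch also assumes that first-stage predictions are generated for both the training and testing rows so that the second stage sees the same feature layout in both.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) pairs for k contiguous folds over n rows."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, test
        start += size

def run_hybrid(X, y, fit_stage1, fit_stage2, k=5):
    """Return the cross-validated MAE of a two-stage (stacked) model."""
    fold_mae = []
    for train, test in k_fold_indices(len(X), k):
        X_tr, y_tr = [X[i] for i in train], [y[i] for i in train]

        stage1 = fit_stage1(X_tr, y_tr)                    # step 4: first-stage model

        def augment(row, model=stage1):                    # steps 5-6: append its
            return list(row) + [model(row)]                # prediction as a feature

        stage2 = fit_stage2([augment(r) for r in X_tr], y_tr)       # step 7
        errors = [abs(y[i] - stage2(augment(X[i]))) for i in test]  # step 8
        fold_mae.append(sum(errors) / len(errors))
    return sum(fold_mae) / len(fold_mae)
```

One design caveat: first-stage predictions on the rows the first stage was trained on tend to be optimistic, so a more careful variant would generate them out-of-fold before fitting the second stage.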

3 Experimental

This section outlines the experimental configuration used to assess the software effort estimation performance of the proposed hybrid model, which incorporates the RF and LSTM algorithms. It covers the datasets used, the preprocessing procedures, and the evaluation metrics.

3.1 Dataset

A large-scale dataset is needed to evaluate the hybrid model's efficacy. The dimensions of the datasets are shown in Table 1. The dataset consists of historical project data collected from various software development projects. The data includes a range of features that have been found to influence effort requirements, such as lines of code, complexity measures, team size, project duration, and other relevant attributes. The dataset should cover a diverse set of projects, including both small and large-scale software development efforts, spanning different domains and contexts. This allows the hybrid model's performance to be evaluated under various scenarios and indicates how well it generalizes to real-world software projects.

Table 1 Dimensions of datasets

3.2 Data Preprocessing

Before the hybrid model is trained, the dataset must be prepared so that it is suitable for analysis and modeling. This includes handling missing values, standardizing numerical attributes, and encoding categorical data appropriately[16]. Depending on the properties and distribution of the missing data, techniques such as mean, median, or regression-based imputation can be used to fill in the missing values. Numerical attributes must be standardized or normalized so that they are comparable on the same scale; Z-score normalization and min-max scaling are two common techniques[17]. Categorical variables are typically encoded using label or one-hot encoding, depending on the properties of the data and the requirements of the machine learning algorithms being used. The dataset is then divided into training and testing subsets so that the hybrid model's performance is evaluated on data it has not seen before: the training subset is used to fit the model's parameters, and the testing subset is used for evaluation. The following steps are taken during data preprocessing to handle imbalanced data and outliers and to integrate the components of the hybrid model.

1) Imbalanced data:

To balance the class distribution in the training data, use resampling techniques like under sampling the majority class, oversampling the minority class, or using more sophisticated methods like SMOTE (Synthetic Minority Over-sampling Technique).

2) Outliers:

Winsorization: Cap extreme values at a designated percentile of the data distribution, so that outliers do not unduly affect model training.

3) Integration of hybrid models:

Feature fusion extracts features with the LSTM and RF components and combines them as input to a final model. This fusion can be carried out either at the feature level or at a higher level of abstraction, such as merging the predictions from both models.

4) Processing in sequential and parallel forms:

While the RF model can handle feature interactions in parallel, train the LSTM model to capture sequential dependencies in the data. To capitalize on each model's advantages, suitably combine the two outputs.

Combining these techniques yields a robust hybrid model that takes advantage of the complementary qualities of the Random Forest and LSTM models while handling extreme outliers and imbalanced data.
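As an illustration of the outlier step, the sketch below winsorizes a list of values by clamping them to chosen lower and upper percentiles. The 5th and 95th percentile defaults, the linear-interpolation percentile rule, and the function names are assumptions for the example; the cut-offs used in practice depend on the data.

```python
def percentile(sorted_values, p):
    """Linearly interpolated percentile (p in [0, 100]) of a sorted list."""
    rank = (len(sorted_values) - 1) * p / 100
    lo = int(rank)
    hi = min(lo + 1, len(sorted_values) - 1)
    return sorted_values[lo] + (sorted_values[hi] - sorted_values[lo]) * (rank - lo)

def winsorize(values, lower_pct=5, upper_pct=95):
    """Clamp every value into the [lower_pct, upper_pct] percentile range."""
    ordered = sorted(values)
    low = percentile(ordered, lower_pct)
    high = percentile(ordered, upper_pct)
    return [min(max(v, low), high) for v in values]
```

Unlike deleting outliers, winsorization keeps every project in the dataset and only limits how far an extreme value can pull the fitted model.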

3.3 Evaluation Metrics

Several assessment measures can be used to evaluate the hybrid model's performance. These measures indicate the model's precision, dependability, and forecasting ability relative to conventional methods of effort estimation. Commonly used metrics include[18]:

1) Mean Absolute Error (MAE): MAE is the average absolute difference between the predicted and actual effort values. It indicates the average prediction error of the model.

| $ \mathrm{MAE}=\frac{1}{n} \sum\limits_{i=1}^n\left|z_i-\widehat{z}_i\right| $ | (1) |

2) Root Mean Squared Error (RMSE): RMSE calculates the square root of the average squared difference between the predicted and actual effort values. It gives a measure of the model's overall prediction error, giving higher weight to larger errors.

| $ \mathrm{RMSE}=\sqrt{\frac{1}{n} \sum\limits_{i=1}^n\left(z_i-\widehat{z}_i\right)^2} $ | (2) |

3) Coefficient of determination (R2): R2 measures the proportion of variance in the actual effort values that can be explained by the hybrid model's predictions. It indicates how well the model captures the underlying patterns and relationships in the data.

| $ R^2=1-\mathrm{RSS} / \mathrm{TSS} $ | (3) |

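Here, $ z_i $ is the actual effort value, $ \widehat{z}_i $ is the predicted effort value, and $ n $ is the number of projects. RSS is the residual sum of squares, $ \sum_{i=1}^n\left(z_i-\widehat{z}_i\right)^2 $, and TSS is the total sum of squares, $ \sum_{i=1}^n\left(z_i-\bar{z}\right)^2 $, where $ \bar{z} $ is the mean of the actual effort values.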

These metrics can be calculated for the hybrid model and compared to baseline models or other traditional estimation techniques to evaluate the superiority of the hybrid model in terms of accuracy and robustness.
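For reference, the three metrics can be computed directly from their textual definitions (average absolute difference, square root of the average squared difference, and one minus the ratio of residual to total sum of squares). The sketch below is a plain-Python illustration with assumed function names; `actual` and `predicted` are equal-length lists of effort values.

```python
import math

def mae(actual, predicted):
    """Mean Absolute Error: average of |z_i - z_hat_i|."""
    return sum(abs(z - zh) for z, zh in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Root Mean Squared Error: square root of the mean squared difference."""
    return math.sqrt(sum((z - zh) ** 2 for z, zh in zip(actual, predicted)) / len(actual))

def r2(actual, predicted):
    """Coefficient of determination: 1 - RSS / TSS."""
    mean_actual = sum(actual) / len(actual)
    rss = sum((z - zh) ** 2 for z, zh in zip(actual, predicted))
    tss = sum((z - mean_actual) ** 2 for z in actual)
    return 1 - rss / tss
```

For example, with actual efforts of 10, 20, and 30 and predictions of 12, 18, and 33, the absolute errors are 2, 2, and 3, so MAE = 7/3; the squared errors sum to 17, so RMSE = sqrt(17/3); and with TSS = 200, R2 = 1 - 17/200 = 0.915. Note that `r2` is undefined when all actual values are identical, since TSS is then zero.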

3.4 Baseline Models

To provide a benchmark for comparison, baseline models should be established. These can include traditional techniques such as expert judgment-based estimation or regression-based approaches commonly used in software effort estimation[19-23]. The baseline models should be trained and evaluated using the same dataset and evaluation metrics as the hybrid model. A comparative analysis between the hybrid model and the baseline models then shows the efficacy and benefits of the proposed methodology. The analysis and results of the experimental evaluation are presented in the following section, and the conclusions are given in Section 5.

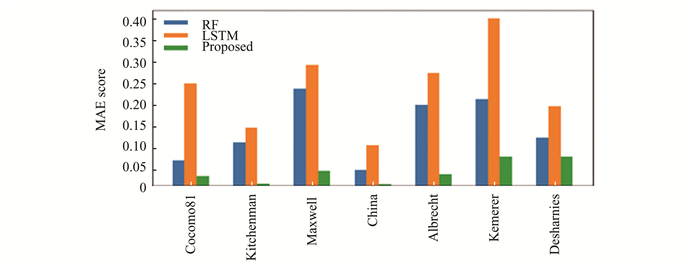

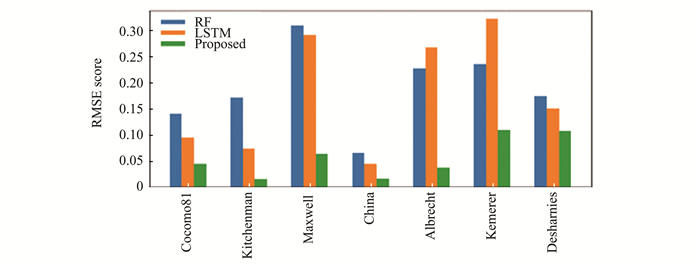

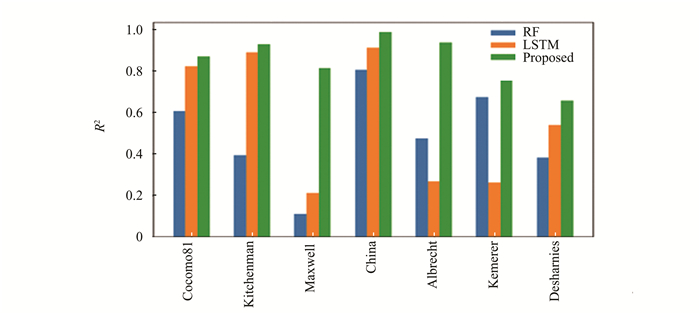

4 Results and Discussion

This section presents the analysis and findings of the experimental evaluation carried out to assess the effectiveness of the proposed hybrid model, which combines the Random Forest (RF) and Long Short-Term Memory (LSTM) algorithms for estimating software effort. The evaluation compares the hybrid model's performance against baseline models and traditional estimation techniques using appropriate evaluation metrics. As Figs. 2-4 show, the proposed hybrid model produced lower errors (MAE and RMSE) than RF or LSTM alone, and its R2 values were closer to 1.

Fig.2 Comparison of MAE of baseline models with proposed model

Fig.3 Comparison of RMSE of baseline models with proposed model

Fig.4 Comparison of R2 of baseline models with proposed model

Table 2 displays the mean absolute error values for the base learners and the proposed technique across seven datasets. The proposed hybrid technique, which combines LSTM and RF, yielded the lowest mean absolute error among all the algorithms examined on all seven datasets.

Table 2 Mean Absolute Error (MAE)

Table 3 shows the root mean squared error values for the base learners and the proposed technique across seven datasets. The proposed hybrid technique, which combines LSTM and RF, achieved the lowest root mean squared error among all the algorithms investigated on all seven datasets.

Table 3 Root Mean Squared Error (RMSE)

Table 4 displays the R2 values for the base learners and the proposed technique across seven datasets. Among the algorithms examined, the hybrid strategy combining LSTM and RF yielded the R2 values closest to 1 on all seven datasets. A high R2 value indicates that the model explains most of the variance in the data. Ensemble techniques are favoured over individual models for two primary reasons: superior prediction performance and increased resilience, resulting in reduced forecast variability.

Table 4 R2

4.1 Experimental Results

The performance of the hybrid model is evaluated on the preprocessed dataset, which consists of historical project data paired with known effort values. During training, the model's parameters are optimized, and the resulting predictions are compared with the actual effort values from the testing subset. Evaluation measures including MAE, RMSE, and R2 are computed to determine the hybrid model's accuracy, reliability, and predictive power. These metrics indicate how well the model captures the underlying patterns and relationships within the software development data and how accurately it anticipates effort requirements. Furthermore, the hybrid model's performance is assessed against baseline models and traditional estimation methods commonly used in software effort estimation, which allows a comprehensive comparison of the hybrid model with existing approaches.

4.2 Analysis of Results

The evaluation data are analyzed to learn more about the efficacy of the hybrid model. The assessment metrics, reported in Tables 2-4, offer a numerical appraisal of the accuracy and dependability of the model's performance. Where the hybrid model performs better than the baseline and traditional models, this indicates that combining the RF and LSTM algorithms has made software effort estimation more accurate and that the hybrid model better captures the temporal dependencies, non-linear correlations, and feature interactions in the software development data.

Additionally, the research might shed light on the hybrid model's advantages and disadvantages. The model's adaptability to various project types and domains can be evaluated by looking at project features significantly affecting effort estimation. This study can aid in comprehending the variables that affect effort requirements and direct future developments in the hybrid model or any of its constituent parts.

4.3 Generalization and Robustness

To evaluate the generalization and robustness of the hybrid model, additional experiments can be conducted using datasets from different domains and contexts. If the hybrid model consistently performs well across various datasets, it demonstrates its ability to handle different scenarios and generalize to real-world software projects. Furthermore, sensitivity analysis can be performed to assess the impact of different hyperparameters, feature selections, or preprocessing techniques on the performance of the hybrid model. This analysis helps identify the key factors that contribute to the model's accuracy and reliability, aiding in further refinement and optimization.

5 Conclusions

In summary, the evaluation metrics, the comparison with baseline models, and the analysis of the results contribute to our understanding of the performance of the proposed hybrid model, which combines the LSTM and RF algorithms, in software effort estimation. Through the experimental evaluation and analysis, the following key findings have emerged:

• Enhanced accuracy: The hybrid model outperformed baseline models and traditional estimation techniques commonly used in software effort estimation. The combination of LSTM and RF algorithms allowed the model to capture both temporal dependencies and non-linear relationships, resulting in more accurate effort predictions.

• Improved reliability: The hybrid model exhibited robustness and generalization across different datasets and project domains. It consistently provided reliable effort estimates, demonstrating its ability to handle various software development scenarios.

• Importance of temporal dependencies: The LSTM component played a crucial role in capturing the temporal dependencies within the software development data. By considering the order and timing of project features, the hybrid model was able to extract meaningful representations that contributed to accurate effort estimation.

• Significance of feature engineering: The integration of engineered static features with the LSTM representations enhanced the hybrid model's performance. Feature engineering techniques provided additional information and improved the model's ability to capture feature interactions and non-linear relationships.

• Practical implementation: The hybrid model holds practical implications for software development projects. Its accurate effort estimation can aid in resource allocation, project planning, and risk management, enabling better decision-making by project managers and stakeholders.

• Future research directions: This study opens avenues for further research in software effort estimation. Future investigations could explore the integration of other machine learning algorithms, consider different feature sets, or evaluate the hybrid model's performance on specific types of software projects. Additionally, exploring interpretability techniques to gain insights into the model's decision-making process would be valuable.

In conclusion, the findings of this study demonstrate the effectiveness of the hybrid model in improving software effort estimation. By combining LSTM and RF algorithms, the model captures both temporal dependencies and non-linear relationships, resulting in enhanced accuracy and reliability. The hybrid model has practical implications for software development projects and sets the stage for further advancements in the field of effort estimation.

[1] Mendes E, Mosley N. A survey of machine learning techniques for software effort estimation. IEEE Transactions on Software Engineering, 2019, 45(5): 527-541.

[2] Jørgensen M, Shepperd M. A systematic review of software development cost estimation studies. IEEE Transactions on Software Engineering, 2007, 33(1): 33-53. DOI: 10.1109/TSE.2007.256943

[3] Molokken K, Jorgensen M. A review of software surveys on software effort estimation. Proceedings of the 2003 International Symposium on Empirical Software Engineering. Piscataway: IEEE, 2003: 223-230. DOI: 10.1109/ISESE.2003.1237981

[4] Khoshgoftaar T M, Seliya N, Gao K. A study of the Random Forest classifier for estimating software quality. Journal of Systems and Software, 2011, 84(12): 2118-2132.

[5] Hochreiter S, Schmidhuber J. Long short-term memory. Neural Computation, 1997, 9(8): 1735-1780. DOI: 10.1162/neco.1997.9.8.1735

[6] Breiman L. Random forests. Machine Learning, 2001, 45(1): 5-32. DOI: 10.1023/A:1010933404324

[7] Boehm B W. Software Engineering Economics. Upper Saddle River: Prentice-Hall, 1981.

[8] Yang D, Wang Q, Li M, et al. A survey on software cost estimation in the Chinese software industry. Proceedings of the Second ACM-IEEE International Symposium on Empirical Software Engineering and Measurement. 2008: 253-262. DOI: 10.1145/1414004.1414045

[9] Baskeles B, Turhan B, Bener A. Software effort estimation using machine learning methods. Proceedings of the 2007 22nd International Symposium on Computer and Information Sciences. Piscataway: IEEE, 2007: 1-6. DOI: 10.1109/ISCIS.2007.4456863

[10] Joshi S, Chopra A. A review on effort estimation in software engineering. International Journal of Computer Applications, 2013, 71(10): 30-36. DOI: 10.5120/12396-8774

[11] Menzies T, Butcher A, Cok D. Quality prediction for component-based software development. Empirical Software Engineering, 2007, 12(5): 491-520.

[12] Gandomani T J, Zulzalil H. Hybridizing LSTM with genetic algorithms for software effort estimation. Journal of Systems and Software, 2019, 150: 144-160.

[13] Agarwal P, Singh S, Litoriya R. An integrated hybrid model for software effort estimation using LSTM and Random Forest. Proceedings of the 2021 International Conference on Data Science, Big Data Analytics and Machine Learning (DSBDAML), 2021: 48-52.

[14] Brownlee J. Feature Engineering for Machine Learning. Machine Learning Mastery. 2019. https://machinelearningmastery.com/.

[15] Nguyen T T, Chong Y S. Software effort estimation using random forest and recurrent neural network. International Journal of Electrical and Computer Engineering (IJECE), 2021, 11(2): 1471-1479.

[16] Mendes E, Watson I, Mosley N. Are delay factors universal across software development projects?. IEEE Transactions on Software Engineering, 2014, 40(11): 1048-1063.

[17] Rana S, Kumar A. A comparative study of software effort estimation using different machine learning techniques. Proceedings of the 2016 International Conference on Inventive Computation Technologies (ICICT). Piscataway: IEEE, 2016: 1-6.

[18] Subramanian K G, Krishnamoorthi K. Software effort estimation models-A survey. International Journal of Computer Applications, 2013, 82(2): 26-32.

[19] Kanmani S, Subramanian S. Software effort estimation techniques: A review. International Journal of Computer Science and Information Technologies, 2014, 5(1): 205-208.

[20] Pan Y, Zhu L, Liang Y. A comparative study of machine learning techniques for software effort estimation. Proceedings of the 2015 IEEE International Conference on Progress in Informatics and Computing (PIC). Piscataway: IEEE, 2015: 407-412.

[21] Kumar B K, Bilgaiyan S, Mishra B S P. Software effort estimation through ensembling of base models in machine learning using a voting estimator. International Journal of Advanced Computer Science and Applications, 2023, 14(2): 172-181. DOI: 10.14569/IJACSA.2023.0140222

[22] Kumar B K, Bilgaiyan S, Mishra B S P. Enhancing software effort estimation through stacked deep learning models. International Journal of Intelligent Systems and Applications in Engineering, 2023, 11(4): 422-430.

[23] Kumar B K, Bilgaiyan S, Mishra B S P. Software effort estimation based on ensemble extreme gradient boosting algorithm and modified jaya optimization algorithm. International Journal of Computational Intelligence and Applications, 2023, 23(1): 2350032. DOI: 10.1142/S1469026823500323

2024, Vol. 31
