2. Department of Chemical and Petroleum Engineering, School of Engineering, Holy Spirit University of Kaslik, Jounieh 1200, Lebanon

Materials science, which began with materials discovered in nature, is quickly evolving toward designed materials that are able to adapt to their environment. Starting from atoms and molecules, materials can now be designed from scratch[1].

The evolution of materials science is driven by the synergy of physics, mechanics, and engineering, and image processing makes an important contribution to this effort[2]. In particular, it is crucial to design and analyze materials by synthesizing new models from two- or three-dimensional image models, with applications in characterizing the physical assembly process of materials, virtual material design, studying the interaction of imaging techniques with materials, separating the appearance of a material from its intrinsic properties, and obtaining a realistic description of the material structure. However, the appearance of a material normally varies with the image scale, image settings, and imaging technique[3-5].

Because textured images are diverse[6], various synthesis approaches have been proposed. They can be divided into three major families: procedural, model-based, and exemplar-based synthesis methods.

The procedural texture synthesis algorithms generate textures using mathematical functions executed with a constant computational cost. Therefore, they are well suited to generating the textures of objects in virtual environments, such as video games[7]. These methods rely on transforming certain pre-defined signals into a desired texture. They are usually used to synthesize structured or unstructured textures, such as the Worley noise[8]. Other noise functions have also been proposed, such as the Gabor noise[9-10].

The model-based algorithms generate a probabilistic model that can be used to describe and synthesize the texture. The model parameters should be able to capture the essential visual characteristics of the texture. Different models have been proposed in Refs.[11-17].

The exemplar-based algorithms take one or more example textures as input. Most of them perform the synthesis by directly copying pixels or patches from the input images. Thus, these methods produce a new texture which is as similar as possible to the input texture. They are able to synthesize various types of textures, and they can be divided into three families: parametric texture synthesis by analysis[18-20], pixel by pixel texture synthesis[21-23], and patch-based texture synthesis[24-25].

The algorithm proposed in this paper is based on the pixel by pixel texture synthesis method of Wei and Levoy[21]. The latter relies on a Markov random field, modeling the texture as a stationary and local random process. More precisely, every pixel is predictable from the few pixels in its neighborhood, and the spatial statistics are invariant under translation.

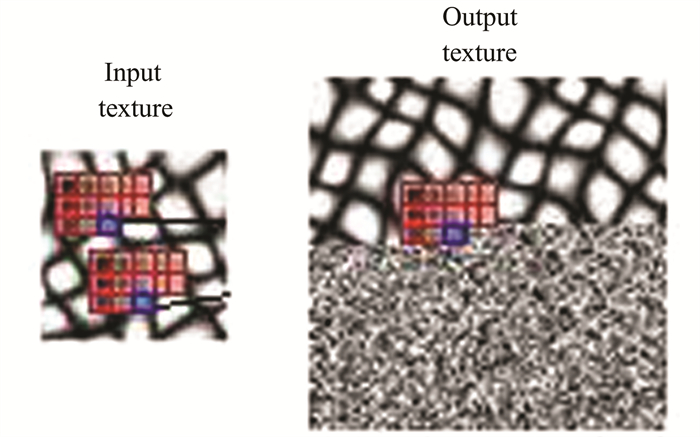

The method of Wei and Levoy aims to generate an output texture that is locally similar to a sample texture patch. Thus, each synthesized pixel is determined so that local resemblance is preserved between the input and output textures. The algorithm starts from a sample texture and an output image initialized with white random noise. The synthesis process consists of modifying the noisy output image to make it look like the input sample[26-27]. To do so, for each output pixel, the neighborhood is extracted and the most similar one is searched for in the exemplar. The corresponding pixel is then copied to the target position in the output texture[21] (as shown in Fig. 1). The Euclidean distance is adopted to measure the resemblance between two neighborhoods. The synthesis is usually performed using an L-shaped causal neighborhood with a lexicographical scan (i.e., scan-line order). Therefore, the synthesis of a new pixel depends only on the previously synthesized pixels. Note that the neighborhood may contain noise pixels for the first few rows and columns of the output texture. However, as the algorithm progresses, all the other neighborhoods consist entirely of synthesized pixels. That is, the noise is only used to synthesize the first rows and columns of the output texture, which makes the synthesis result random, and it is then ignored. A non-causal neighborhood with a completely random walk can also be used. It frees the synthesized pixel from its past, which may consequently result in various configurations.

Fig.1 Illustration of the W & L synthesis process[21] using an L-shaped neighborhood with the scan-line type
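The scan-line procedure described above can be sketched in a few lines. This is only a minimal brute-force illustration, not the tree-structured search of Ref. [21]. The function names, the toroidal wrap at the image borders, and the choice to draw the initial noise from the exemplar intensities are assumptions made for the example.

```python
import numpy as np

def causal_offsets(half):
    """Offsets (dy, dx) of an L-shaped causal neighborhood: the rows above
    the current pixel plus the pixels to its left on the current row."""
    offs = [(dy, dx) for dy in range(-half, 0) for dx in range(-half, half + 1)]
    offs += [(0, dx) for dx in range(-half, 0)]
    return offs

def synthesize(sample, out_shape, half=2, seed=0):
    """Brute-force pixel-by-pixel synthesis with a lexicographical scan."""
    rng = np.random.default_rng(seed)
    offs = causal_offsets(half)
    h, w = sample.shape
    # Exemplar positions whose causal neighborhood lies fully inside the sample.
    cand = [(y, x) for y in range(half, h) for x in range(half, w - half)]
    cand_nb = np.array([[sample[y + dy, x + dx] for dy, dx in offs]
                        for y, x in cand], dtype=float)
    cand_px = np.array([sample[y, x] for y, x in cand])
    # Assumption: the initial noise is drawn from the exemplar intensities.
    out = rng.choice(sample.ravel(), size=out_shape).astype(sample.dtype)
    H, W = out_shape
    for y in range(H):
        for x in range(W):
            # Assumption: toroidal wrap so that border pixels have a full neighborhood.
            nb = np.array([out[(y + dy) % H, (x + dx) % W] for dy, dx in offs],
                          dtype=float)
            # Squared Euclidean distance to every candidate neighborhood.
            d = ((cand_nb - nb) ** 2).sum(axis=1)
            out[y, x] = cand_px[np.argmin(d)]
    return out
```

Because every output pixel is copied from the exemplar, the output contains only intensities that occur in the sample. The exhaustive search makes the cost proportional to the product of the output size and the number of candidate neighborhoods.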

This study proposes an image synthesis algorithm capable of generating material textures of arbitrary shapes. The algorithm is divided into three stages: 1) A field of second-moment matrices, referred to as the constraint, is created from an arbitrarily chosen textured image. 2) A dictionary of intensities and second-moment matrices is constructed from the initial textured image. 3) The generated constraint is used to constrain the synthesis of the textured image by searching for the best resemblance within the dictionary.

The remainder of this paper is organized as follows. In Section 1, the second-moment matrix field is reviewed and the creation of the constraint field of second-moment matrices from an imposed arbitrary texture is presented. Section 2 details the construction of the searching dictionary and the synthesis process constrained by the field of second-moment matrices. The obtained results are discussed in Section 3. Finally, the conclusions are drawn in Section 4.

1 Construction of the Constraint Field

1.1 Second-Moment Matrix Field

The second-moment matrix is a mathematical tool commonly used to describe local patterns in several image processing applications such as image synthesis and inpainting[14]. The second-moment matrix field (SSMF) of an image IIM assigns a matrix for each pixel (x, y) of the image. It is defined as the field of local covariance matrices of the first partial derivatives of IIM expressed as Ref. [15]:

| $ \begin{aligned} \boldsymbol{S}_{\mathrm{SMF}} & =\boldsymbol{G}_{\sigma} * \nabla\left(\boldsymbol{I}_{\mathrm{IM}}\right) \nabla\left(\boldsymbol{I}_{\mathrm{IM}}\right)^{\mathrm{T}} \\ & =\boldsymbol{G}_{\sigma} *\left[\begin{array}{cc} I_{\mathrm{IM}x}^2 & I_{\mathrm{IM}x} I_{\mathrm{IM}y} \\ I_{\mathrm{IM}x} I_{\mathrm{IM}y} & I_{\mathrm{IM}y}^2 \end{array}\right] \end{aligned} $ | (1) |

where ▽IIM=[IIMx, IIMy] are the gradient fields of image IIM, Gσ is a Gaussian weighting kernel used to smooth the matrix, [ ]T is the matrix transpose operator, and '*' denotes the convolution operator.
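As an illustration of Eq. (1), the following sketch computes a second-moment matrix field with NumPy only. The finite-difference gradient, the truncation of the Gaussian kernel at three standard deviations, the edge padding, and all function names are assumptions of this example rather than choices stated in the paper.

```python
import numpy as np

def gaussian_kernel(sigma):
    """1-D Gaussian kernel truncated at about 3 sigma and normalized to sum to 1."""
    radius = max(1, int(3 * sigma))
    t = np.arange(-radius, radius + 1)
    k = np.exp(-t**2 / (2.0 * sigma**2))
    return k / k.sum()

def smooth(field, sigma):
    """Separable Gaussian smoothing with edge padding (output keeps the input size)."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(field, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)

def second_moment_field(image, sigma=2.0):
    """Return an array of shape (H, W, 2, 2) holding one 2x2 matrix per pixel."""
    # np.gradient returns the derivative along rows (y) first, then columns (x).
    iy, ix = np.gradient(image.astype(float))
    field = np.empty(image.shape + (2, 2))
    field[..., 0, 0] = smooth(ix * ix, sigma)
    field[..., 0, 1] = field[..., 1, 0] = smooth(ix * iy, sigma)
    field[..., 1, 1] = smooth(iy * iy, sigma)
    return field
```

For an image that varies only along x, the y-derivative vanishes, so only the upper-left entry of each matrix is non-zero.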

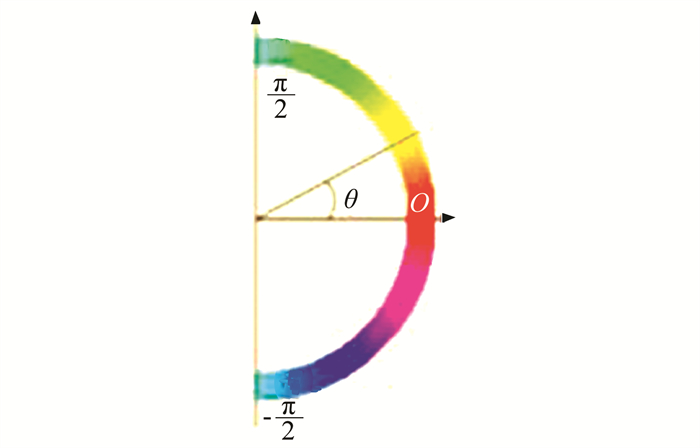

The orientation factor Or(x, y) of a second-moment matrix SSMF(x, y), varying between -π/2 and π/2, is calculated from its first eigenvector [ex, ey] as:

| $ O_{\mathrm{r}}(x, y)=\tan ^{-1}\left(\frac{\boldsymbol{e}_y}{\boldsymbol{e}_x}\right) $ | (2) |

An orientation field Or can then be extracted from a second-moment matrix field SSMF. Or consists of an orientation image presenting at each position (x, y), the orientation factor Or(x, y) of the corresponding matrix SSMF(x, y) at the same position. The palette used to represent the orientation fields is shown in Fig. 2. Note that this palette is used for all the illustrations and results presented in the sequel.

Fig.2 The palette adopted for representation of orientation fields
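The orientation factor of Eq. (2) can be sketched as follows for a single 2×2 matrix. The example assumes that the "first eigenvector" is the one associated with the largest eigenvalue, and it returns π/2 when the x-component is exactly zero. Both choices, like the function name, are assumptions of this illustration.

```python
import numpy as np

def orientation(matrix):
    """Orientation, within [-pi/2, pi/2], of the dominant eigenvector of a
    symmetric 2x2 matrix, following Eq. (2)."""
    # eigh returns eigenvalues in ascending order, eigenvectors as columns.
    _, eigvecs = np.linalg.eigh(matrix)
    ex, ey = eigvecs[:, -1]  # assumption: first eigenvector = largest eigenvalue
    if ex == 0.0:
        return np.pi / 2     # vertical eigenvector: avoid dividing by zero
    return float(np.arctan(ey / ex))
```

Since an eigenvector is defined up to its sign, the ratio of its components, and hence the returned angle, does not depend on which sign the solver picks.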

1.2 Calculation of the Constraint Second-Moment Matrix Field

The first step of the proposed synthesis algorithm constructs the constraint second-moment matrix field SSMFcon from an imposed arbitrary textured image IIMcon. This construction comprises the following three steps:

1) Calculation of the second-moment matrix field SSMFcon of IIMcon and its associated orientation field Orcon.

2) Calculation of the second-moment matrix field SSMFin of the input material texture sample IIMin.

3) Modulation of the orientations of SSMFin by Orcon, which is performed as follows:

At each position (x, y) of the field SSMFin, the matrix SSMFin(x, y) is rotated by the orientation angle Orcon(x, y), i.e., the angle at the corresponding position in the orientation field Orcon. The constraint field is then computed by updating SSMFcon at each position (x, y) as follows:

| $ \boldsymbol{S}_{\mathrm{SMFcon}}(x, y) \leftarrow \boldsymbol{R}(x, y) . \boldsymbol{S}_{\mathrm{SMFin}}(x, y) . \boldsymbol{R}(x, y)^{\mathrm{T}} $ | (3) |

where '.' is the matrix product and R(x, y) is the rotation matrix at position (x, y):

| $ \boldsymbol{R}(x, y)=\left[\begin{array}{ll} \cos \left\{O_{\mathrm{rcon}}(x, y)\right\} & \sin \left\{O_{\mathrm{rcon}}(x, y)\right\} \\ -\sin \left\{O_{\mathrm{rcon}}(x, y)\right\} & \cos \left\{O_{\mathrm{rcon}}(x, y)\right\} \end{array}\right] $ | (4) |

This process is adopted to ensure that the matrices of SSMFcon are rotated versions of SSMFin that faithfully represent the structure of IIMcon.
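Eqs. (3) and (4) amount to a per-pixel change of basis, which can be sketched with batched matrix products. The array layout, with one 2×2 matrix per pixel stored in an array of shape (H, W, 2, 2), and the function name are assumptions of this example.

```python
import numpy as np

def modulate(smf_in, or_con):
    """Apply Eq. (3) at every pixel: S_con = R . S_in . R^T, with R built
    from the orientation field as in Eq. (4)."""
    c, s = np.cos(or_con), np.sin(or_con)
    R = np.empty(or_con.shape + (2, 2))
    R[..., 0, 0] = c
    R[..., 0, 1] = s
    R[..., 1, 0] = -s
    R[..., 1, 1] = c
    # The @ operator broadcasts over the leading (H, W) axes.
    return R @ smf_in @ np.swapaxes(R, -1, -2)
```

Because R is orthogonal, the eigenvalues of each matrix are preserved and only its orientation changes. For example, with an angle of π/2, the matrix diag(2, 1) becomes diag(1, 2).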

2 The Synthesis Process

The second phase of the proposed algorithm involves the establishment of a dictionary of intensities and matrices using the material texture sample IIMin and its field of second-moment matrices SSMFin as input. The construction of this dictionary is summarized as follows.

The dictionary D(IIMin) is the set of neighborhoods Nk extracted from IIMin and SSMFin, where each neighborhood is defined as:

| $ N_k=N_k^{I_{\text {IMin }}} \cup N_k^{S_{\mathrm{SMFin}}} $ | (5) |

where $N_k^{I_{\mathrm{IMin}}}$ is the kth intensity neighborhood in $I_{\mathrm{IMin}}$, and $N_k^{S_{\mathrm{SMFin}}}$ is the corresponding second-moment matrix neighborhood in $S_{\mathrm{SMFin}}$.

It is important to mention that causal square neighborhoods or causal L-shaped neighborhoods can be used as intensity and second-moment matrix neighborhoods.
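A possible construction of the dictionary entries of Eq. (5) with L-shaped causal neighborhoods is sketched below. The array layout, the function names, and the decision to skip positions whose neighborhood would fall outside the image are assumptions of this example.

```python
import numpy as np

def l_shaped_offsets(half):
    """Offsets (dy, dx) of an L-shaped causal neighborhood of half-width `half`."""
    offs = [(dy, dx) for dy in range(-half, 0) for dx in range(-half, half + 1)]
    offs += [(0, dx) for dx in range(-half, 0)]
    return np.array(offs)

def build_dictionary(image, smf, half=2):
    """Return (intensities, matrices, centers) for every valid position k.

    intensities: (K, n)       intensity neighborhood of entry k
    matrices:    (K, n, 2, 2) second-moment matrix neighborhood of entry k
    centers:     (K,)         pixel copied to the output when entry k is chosen
    """
    offs = l_shaped_offsets(half)
    h, w = image.shape
    # Assumption: only positions with a complete neighborhood are kept.
    ys, xs = np.mgrid[half:h, half:w - half]
    ys, xs = ys.ravel(), xs.ravel()
    yy = ys[:, None] + offs[None, :, 0]
    xx = xs[:, None] + offs[None, :, 1]
    return image[yy, xx], smf[yy, xx], image[ys, xs]
```

The same routine can be applied to each rotated image and its matrix field, and the resulting arrays concatenated along the first axis.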

Because D(IIMin) does not necessarily contain the different structures of SSMFcon, a larger dictionary DM(IIMin), which includes D(IIMin), is constructed. DM(IIMin) consists of neighborhoods extracted from the images {Rotm(IIMin), m=0, ..., M-1} and their corresponding second-moment matrix fields, where Rotm(IIMin) is the rotation of IIMin by an angle φm=2πm/M.

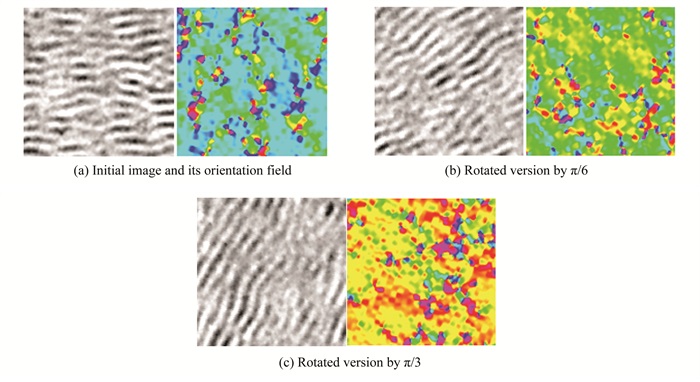

Fig. 3 illustrates an example of rotated versions created from an initial material sample IIMin: Fig. 3(a) presents an initial anisotropic composite material sample, Fig. 3(b) shows its rotation by an angle of π/6, and Fig. 3(c) shows its rotation by an angle of π/3. The orientation images extracted from the corresponding second-moment matrix fields are shown at the right of each image. These images represent samples of Rotm(IIMin) and their matrix fields that are used to construct DM(IIMin). Note that the angular step of φm, i.e., 2π/M, directly affects the quality of the obtained result, as will be shown in the sequel. More precisely, a smaller angular step increases the number of structures in the dictionary, which makes it richer in terms of material patterns. However, increasing the size of the dictionary leads to a higher computational load.

Fig.3 Examples of rotated versions Rotm(IIMin) created from an initial material sample IIMin
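The trade-off between dictionary richness and computational load can be made concrete by counting the rotations implied by a given angular step. The sketch below assumes that the step equals 2π/M, so that the rotations cover the full circle. The function name is hypothetical.

```python
import math

def rotation_angles(step_deg):
    """Angles phi_m = 2*pi*m/M (in radians) for the number of rotations M
    implied by an angular step given in degrees."""
    M = round(360 / step_deg)  # assumption: the step divides the full circle
    return [2 * math.pi * m / M for m in range(M)]
```

Under this assumption, steps of 45°, 20°, and 5° correspond to M = 8, 18, and 72 rotated copies, so moving from 45° to 5° multiplies the dictionary size by nine.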

After determining the constraint second-moment matrix field $S_{\mathrm{SMFcon}}$ and the dictionary $D_M(I_{\mathrm{IMin}})$, the output texture $I_{\mathrm{IMout}}$ is first initialized with values chosen randomly from the input material sample $I_{\mathrm{IMin}}$. It is then updated during the synthesis process as follows: for each position o=(x, y) in $I_{\mathrm{IMout}}$, the neighborhood $N_o=N_o^{I_{\mathrm{IMout}}} \cup N_o^{S_{\mathrm{SMFcon}}}$ is extracted. More precisely, $N_o^{I_{\mathrm{IMout}}}$ is the neighborhood of intensities at position o in $I_{\mathrm{IMout}}$, and $N_o^{S_{\mathrm{SMFcon}}}$ is the second-moment matrix neighborhood at the corresponding position in $S_{\mathrm{SMFcon}}$. The best resemblance is then identified in $D_M(I_{\mathrm{IMin}})$ and the corresponding pixel is copied to the target position o in $I_{\mathrm{IMout}}$.

To measure the similarity between two neighborhoods Nk and No, the sum of squared errors (SSSE) is used for the intensity neighborhoods, and the sum of second-moment matrix dissimilarities (SSSMD), defined in Eq. (7), is used for the second-moment matrix neighborhoods:

| $ \begin{aligned} D\left(N_k, N_o\right)= & W \cdot S_{\mathrm{SSE}}\left(N_k^{I_{\mathrm{IMin}}}, N_o^{I_{\mathrm{IMout}}}\right)+ \\ & (1-W) \cdot \boldsymbol{S}_{\mathrm{SSMD}}\left(N_k^{S_{\mathrm{SMFin}}}, N_o^{S_{\mathrm{SMFcon} }}\right) \end{aligned} $ | (6) |

where $N_k^{I_{\mathrm{IMin}}}$ and $N_k^{S_{\mathrm{SMFin}}}$ are respectively the kth intensity and second-moment matrix neighborhoods in dictionary $D_M(I_{\mathrm{IMin}})$, $N_o^{I_{\mathrm{IMout}}}$ and $N_o^{S_{\mathrm{SMFcon}}}$ are respectively the intensity and second-moment matrix neighborhoods at position o in $I_{\mathrm{IMout}}$ and the corresponding position in $S_{\mathrm{SMFcon}}$, W is a weighting factor, and the $S_{\mathrm{SSMD}}$ metric is expressed as:

| $ \boldsymbol{S}_{\mathrm{SSMD}}\left(N_1^{S M_1}, N_2^{S M_2}\right)=\sum\limits_{i=1}^{N_M} d_{\mathrm{dist}}\left(N_1^{S M_1}(i), N_2^{S M_2}(i)\right) $ | (7) |

where $N_M$ is the number of matrices in each neighborhood, $N^{SM}(i)$ is the ith second-moment matrix in neighborhood $N^{SM}$, and $d_{\mathrm{dist}}$ is one of the four metrics of second-moment matrix resemblance proposed in Ref. [26] (the Euclidean distance is used in this study).
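Eqs. (6) and (7) can be sketched as follows. The example assumes that the Euclidean distance between two 2×2 matrices is the Frobenius norm of their difference. The function names and the default weight W=0.5 are likewise assumptions of this illustration.

```python
import numpy as np

def sse(a, b):
    """Sum of squared errors between two intensity neighborhoods."""
    return float(np.sum((np.asarray(a, float) - np.asarray(b, float)) ** 2))

def ssmd(mats_a, mats_b):
    """Eq. (7): sum over the neighborhood of the matrix-to-matrix distance.
    Assumption: the Euclidean distance is the Frobenius norm of the difference."""
    diff = np.asarray(mats_a, float) - np.asarray(mats_b, float)
    return float(np.sum(np.sqrt(np.sum(diff ** 2, axis=(-2, -1)))))

def neighborhood_distance(int_k, mat_k, int_o, mat_o, W=0.5):
    """Eq. (6): weighted sum of the intensity and matrix dissimilarities."""
    return W * sse(int_k, int_o) + (1.0 - W) * ssmd(mat_k, mat_o)

def best_match(dict_ints, dict_mats, int_o, mat_o, W=0.5):
    """Index k of the dictionary entry with the smallest distance to N_o."""
    d = [neighborhood_distance(i, m, int_o, mat_o, W)
         for i, m in zip(dict_ints, dict_mats)]
    return int(np.argmin(d))
```

With W=1 the search reduces to a purely intensity-based match, whereas W=0 follows the constraint field only. The two terms have different units, so in practice the weight also has to compensate for their difference in scale.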

The pseudocode of the whole synthesis algorithm is shown as follows:

Input: IIMin and IIMcon

SSMFcon ←ConstraintCalculation(IIMin & IIMcon)

DM(IIMin)←DictionaryConstruction(IIMin & SSMFin)

IIMout←NoiseInitialization(IIMin)

For each position o=(x, y) in IIMout and SSMFcon

No←neighborhood of o extracted from IIMout and SSMFcon

k←argmin{D(Nk, No)} over all Nk in DM(IIMin)

IIMout(x, y) ← PPIXk (the pixel associated with Nk)

End For

Output: IIMout

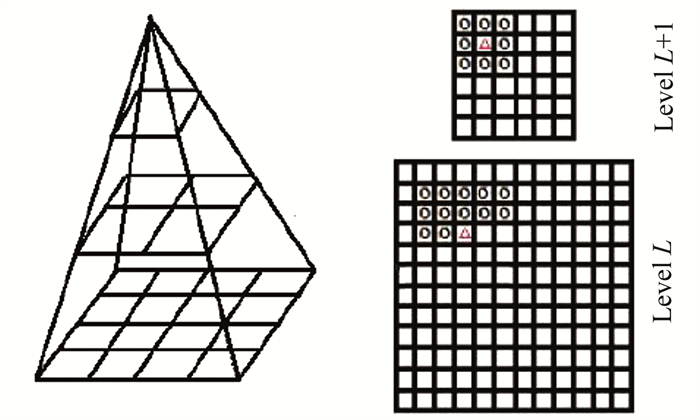

In the case of large-scale material samples, the use of large neighborhoods requires a high computational load. Gaussian pyramids of images and second-moment matrix fields[27-30] are then used to capture the structures at different scales. Therefore, the DM(IIMin) dictionary is constructed using multi-scale neighborhoods extracted from intensity and matrix pyramids. The pyramids are built from the M rotated textures and their corresponding second-moment matrix fields. Using the same approach, texture and matrix pyramids are built from IIMout and SSMFcon, respectively. The synthesis process begins at the top of the pyramid and descends to the lowest level. More precisely, to ensure that the added high-frequency details are compatible with the already synthesized low-frequency structures, the multi-resolution neighborhood at position o=(x, y) of level L contains its neighborhood at the same level as well as the neighborhood of the corresponding position o′=(x/2, y/2) at the previously synthesized level L+1[24]. Fig. 4 shows an example of a three-level Gaussian pyramid (left) and a multi-resolution causal 5×5 neighborhood (right).

Fig.4 Illustration of multi-resolution Gaussian pyramid and neighborhood
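A Gaussian pyramid and the mapping between a position and its parent at the coarser level can be sketched as follows. The 5-tap binomial filter used as a Gaussian approximation, the edge padding, and the function names are assumptions of this example.

```python
import numpy as np

def blur(image):
    """Separable 5-tap binomial filter [1, 4, 6, 4, 1] / 16 with edge padding."""
    k = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0
    p = np.pad(image.astype(float), 2, mode="edge")
    p = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, p)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, p)

def gaussian_pyramid(image, levels):
    """Return [level 0 (finest), ..., level levels-1 (coarsest)]."""
    pyramid = [image.astype(float)]
    for _ in range(levels - 1):
        # Blur, then keep every second pixel in each direction.
        pyramid.append(blur(pyramid[-1])[::2, ::2])
    return pyramid

def parent_position(x, y):
    """Position o' = (x/2, y/2) at level L+1 for a pixel (x, y) at level L,
    using integer division."""
    return x // 2, y // 2
```

A pyramid of a second-moment matrix field can be obtained in the same way by filtering and subsampling each of the matrix components separately.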

3 Experimental Results

This section presents examples of results obtained using the proposed algorithm. In addition, results obtained with different angular step values for building the searching dictionary are shown to highlight the influence of this parameter on the quality of the synthesized images. Moreover, the results obtained by the proposed algorithm are compared with those obtained by directly synthesizing the output texture using the field of second-moment matrices of the arbitrarily imposed texture as a constraint for the synthesis process.

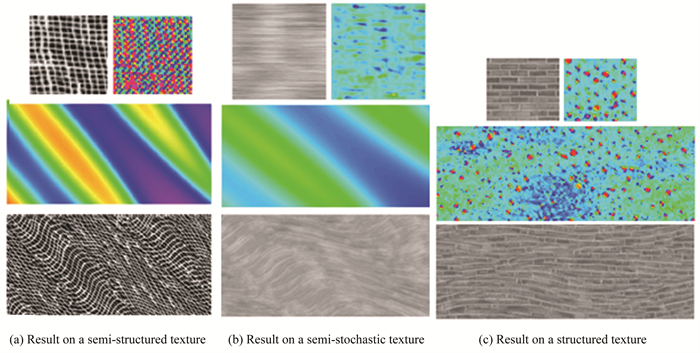

Fig. 5 shows examples of results obtained using the proposed approach. For each of Figs. 5(a)-(c), the 1st to 3rd rows show, respectively, the input material sample (IIMin) and its second-moment matrix field (SSMFin) represented by its orientation field, the second-moment matrix field of the arbitrarily imposed texture represented by its orientation image Orcon, and the output texture (IIMout).

Fig.5 Results yielded using the proposed method

It can be seen that the synthesized texture, covered with the patterns of the input material, respects the structures of the imposed texture, except that the synthetic texture in Fig. 5(a) presents blocky artifacts due to the abrupt orientation variation (green/blue) of Orcon.

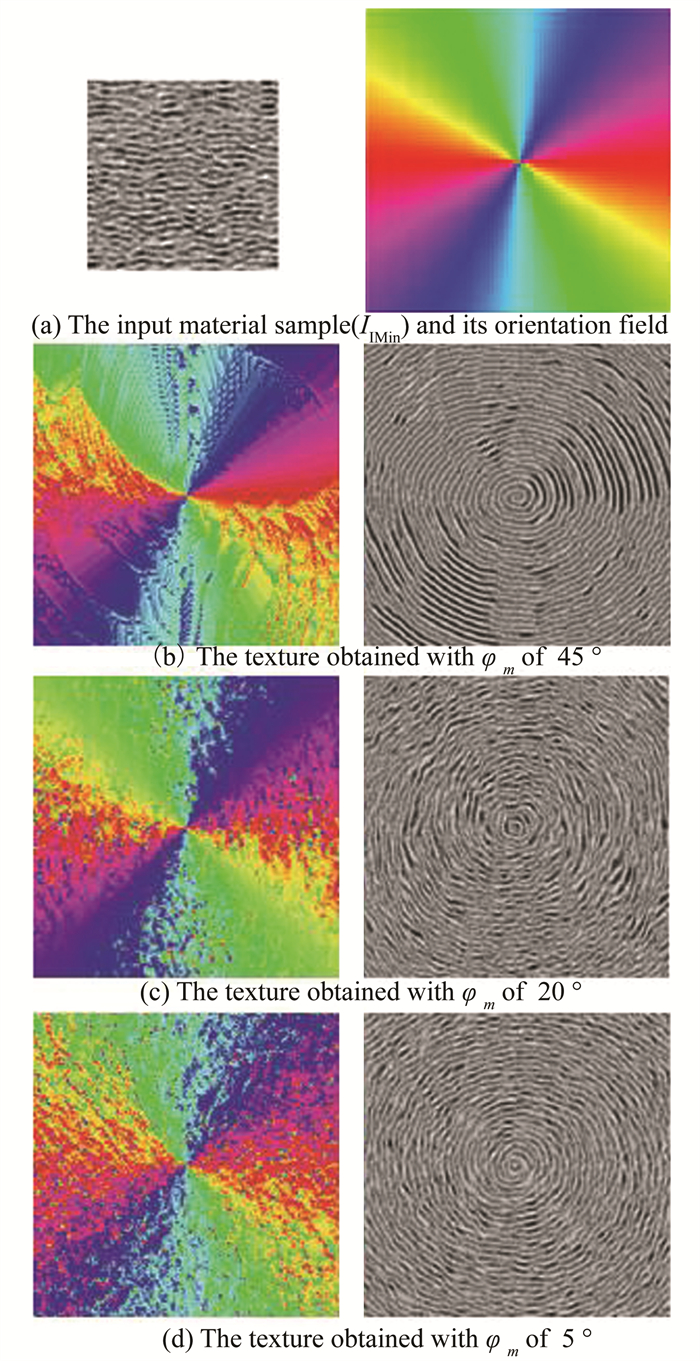

Fig. 6 shows results obtained using three different values for the rotation angle φm used to construct the dictionary. The first row shows the input sample (IIMin) and its second-moment matrix field (SSMFin) represented by its orientation field. The 2nd to 4th rows show the textures obtained with dictionaries built with φm of 45°, 20°, and 5°, respectively. The monoscale approach is adopted with an 11×11 causal neighborhood, a lexicographical scan, and two iterations.

Fig.6 Synthesis results obtained using three different rotational angle values φm

It can be seen that the synthetic texture obtained with φm of 45° presents an octagonal structure showing warped patterns. This is attributed to the fact that the built dictionary does not contain enough orientations of the input sample and its second-moment matrices to reproduce the circular shape of the output texture. When φm is equal to 20°, these defects disappear. Although the resulting texture has higher quality than the one obtained using a φm value of 45°, unwanted patterns that do not exist in the input sample can be clearly observed. In contrast, synthesis with φm of 5° leads to a texture filled with the intensities of the input sample while being faithful to its characteristics. In summary, it is essential to accurately adapt the angular step to the input sample of the synthesis algorithm.

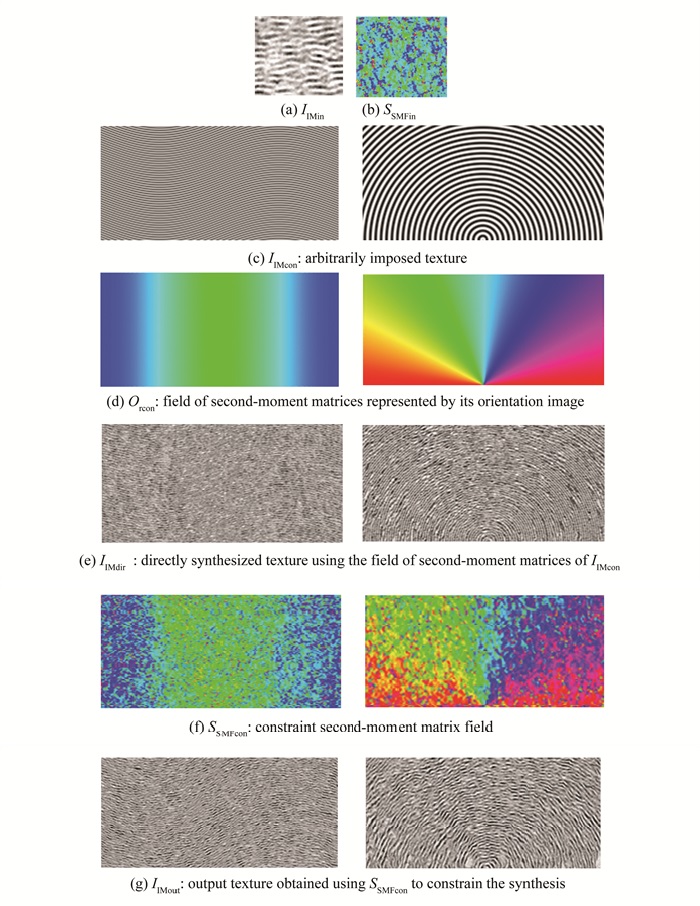

Fig. 7 shows two different results obtained using imposed textures (IIMcon) with wave (left) and semicircle (right) shapes. The 1st row of Fig. 7 shows the input material sample (IIMin) and its second-moment matrix field (SSMFin) represented by its orientation field. The 2nd to 6th rows respectively show the arbitrarily imposed texture IIMcon, its field of second-moment matrices represented by its orientation image Orcon, the output texture (IIMdir) directly synthesized using the field of second-moment matrices of IIMcon as a constraint for the synthesis process, the constraint second-moment matrix field (SSMFcon) constructed using the proposed approach, and the output texture (IIMout) obtained using SSMFcon to constrain the synthesis. For both results, a searching dictionary with φm of 5° is used. Gaussian pyramids of two and three scales are used for the first and second results, respectively. All the presented results are obtained after three iterations with a causal neighborhood and a lexicographical scan.

It can be observed that the proposed method succeeds in constructing a second-moment matrix field that represents the structures (orientations) of the arbitrarily imposed texture. Consequently, the synthesis process constrained by the obtained field of second-moment matrices leads to restructured textures of arbitrary shapes covered by the patterns of the exemplar, while respecting its dynamics and visual aspects. In contrast, the synthesis process constrained directly by the second-moment matrix field of the arbitrary textures leads to images with regular patterns that do not exist in the input material sample. This justifies the importance of the algorithm that constructs the constraint second-moment matrix field.

Fig.7 Two synthesis results obtained using the proposed algorithm

It is important to mention that the proposed algorithm assumes that an input material sample of the same size as the arbitrary texture is available. Otherwise, one solution is to synthesize, from the input sample, which usually has a small size, a textured image of the same size as the arbitrarily imposed texture. The resulting texture is then used to construct the constraint second-moment matrix field.

To quantitatively evaluate the obtained results, the peak signal-to-noise ratio (PSNR) and the structural similarity (SSIM) index are computed between the input sample and two synthesized textures: the texture obtained by directly synthesizing the output using the field of second-moment matrices of the arbitrarily imposed texture as a constraint for the synthesis process (referred to as direct synthesis in the sequel), and the texture obtained using the proposed method. The obtained results are presented in Table 1.

Table 1 PSNR and SSIM of textures obtained with direct synthesis and the proposed method
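For reference, the PSNR between two images of identical size can be computed as sketched below. The peak value of 255, which corresponds to 8-bit images, and the function name are assumptions of this example. The SSIM index involves local means, variances, and covariances and is not reproduced here.

```python
import numpy as np

def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of the same shape."""
    mse = np.mean((reference.astype(float) - test.astype(float)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))
```

A higher PSNR indicates a smaller mean squared difference between the two images.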

4 Conclusions

This paper proposes a texture synthesis algorithm that synthesizes material samples of arbitrary shapes. A constraint second-moment matrix field is first built by modulating the orientations of the input material sample by those of an arbitrarily imposed texture. A searching dictionary is then constructed from the input material sample and its second-moment matrix field. In the synthesis process, the constraint second-moment matrix field is used to constrain the synthesis of the output texture by searching for the best match in the dictionary. The obtained results show that the proposed method succeeds in synthesizing textured images of specified shapes while preserving the essential local characteristics of the material exemplar. In future work, we aim to extend the proposed approach to the synthesis of arbitrary-shaped 3D textures from 2D material samples, which would be beneficial in the analysis and design of virtual materials.

[1] Bréchet Y. Materials Science: From Materials Discovered by Chance to Made-to-Measure Materials. Paris: Collège de France, 2016. DOI:10.4000/lettre-cdf.1998

[2] Liu Y, Zhang X. Metamaterials: A new frontier of science and technology. Chemical Society Reviews, 2011, 40: 2494. DOI:10.1039/c0cs00184h

[3] Leyssale J M, Da Costa J P, Germain C, et al. An image guided atomistic reconstruction of pyrolytic carbons. Applied Physics Letters, 2009, 95: 231912. DOI:10.1063/1.3265987

[4] Leyssale J M, Da Costa J P, Germain C, et al. Structural features of pyrocarbon atomistic models constructed from transmission electron microscopy images. Carbon, 2012, 50: 4388-4400. DOI:10.1016/j.carbon.2012.05.014

[5] Conners R W, Harlow C A. A theoretical comparison of texture algorithms. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1980, PAMI-2(3): 204-222. DOI:10.1109/TPAMI.1980.4766966

[6] Forsyth D A, Ponce J. Texture, Computer Vision, A Modern Approach. London: Pearson Education, 2003.

[7] Ebert D S, Musgrave F K, Peachey D, et al. Texturing and Modeling: A Procedural Approach. San Francisco: Morgan Kaufmann Publishers Inc., 2002.

[8] Worley S. A cellular texture basis function. SIGGRAPH 23rd Annual Conference on Computer Graphics and Interactive Techniques. New York: ACM Press, 1996: 291-294. DOI:10.1145/237170.237269

[9] Lagae A, Lefebvre S, Drettakis G, et al. Procedural noise using sparse Gabor convolution. ACM Transactions on Graphics, 2009, 28(3): 1-10. DOI:10.1145/1531326.1531360

[10] Galerne B, Gousseau Y, Morel J M. Random phase textures: Theory and synthesis. IEEE Transactions on Image Processing, 2011, 20: 257-267. DOI:10.1109/TIP.2010.2057446

[11] Cross G, Jain A. Markov random field texture models. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1983, PAMI-5(1): 25-39. DOI:10.1109/TPAMI.1983.4767341

[12] Chellappa R, Kashyap R L. Texture synthesis using 2-D noncausal autoregressive models. IEEE Transactions on Acoustics, Speech, and Signal Processing, 1985, 33(1): 194-203. DOI:10.1109/TASSP.1985.1164550

[13] Turner M R. Texture discrimination by Gabor functions. Biological Cybernetics, 1986, 55(2-3): 71-82. DOI:10.1007/BF00336961

[14] Francos J M, Meiri A Z, Porat B. A unified texture model based on a 2-D Wold-like decomposition. IEEE Transactions on Signal Processing, 1993, 41(8): 2665-2678. DOI:10.1109/78.258082

[15] Chellappa R, Kashyap R, Manjunath B. Model based texture segmentation and classification. Handbook of Pattern Recognition and Computer Vision. World Scientific Publishing, 1993: 277-310. DOI: 10.1142/9789814343138_001110.

[16] Lee J, Goo W, Park W B, et al. Virtual microstructure design for steels using generative adversarial networks. Engineering Reports, 2021, 3(1): e12274. DOI:10.1002/eng2.12274

[17] Amiri H, Vasconcelos I, Jiao Y, et al. Quantifying complex microstructures of earth materials: Reconstructing higher-order spatial correlations using deep generative adversarial networks. ESS Open Archive, 2022. DOI:10.1002/essoar./05/0988.1

[18] DeBonet J S. Multiresolution sampling procedure for analysis and synthesis of texture images. SIGGRAPH Annual Conference on Computer Graphics and Interactive Techniques. New York: ACM Press/Addison-Wesley Publishing Co., 1997: 361-368. DOI:10.1145/258734.258882

[19] Portilla J, Simoncelli E P. A parametric texture model based on joint statistics of complex wavelet coefficients. International Journal of Computer Vision, 2000, 40(1): 49-70. DOI:10.1023/A:1026553619983

[20] Rabin J, Peyre G, Delon J, et al. Wasserstein barycenter and its application to texture mixing. International Conference on Scale Space and Variational Methods in Computer Vision. Berlin: Springer, 2010. DOI: 10.1007/978-3-642-24785-9_37.

[21] Wei L Y, Levoy M. Fast texture synthesis using tree-structured vector quantization. Proceedings of the 27th International Conference on Computer Graphics and Interactive Techniques. New York: ACM Press, 2000: 479-488. DOI:10.1145/344779.345009

[22] Efros A, Leung T. Texture synthesis by non-parametric sampling. International Conference on Computer Vision. Piscataway: IEEE, 1999, 2: 1033-1038. DOI:10.1109/ICCV.1999.790383

[23] Tong X, Zhang J, Liu L, et al. Synthesis of bidirectional texture functions on arbitrary surfaces. SIGGRAPH Annual Conference on Computer Graphics and Interactive Techniques. New York: ACM Press, 2002: 665-672. DOI:10.1145/566654.566634

[24] Efros A, Freeman W T. Image quilting for texture synthesis and transfer. SIGGRAPH 28th Annual Conference on Computer Graphics and Interactive Techniques. New York: ACM Press, 2001: 341-346. DOI:10.1145/383259.383296

[25] Xu Y Q, Guo B, Shum H. Chaos mosaic: Fast and memory efficient texture synthesis. Technical Report MSRTR-2000-32, Microsoft Research, 2000.

[26] Wei L Y, Levoy T. Texture synthesis from multiple sources. ACM SIGGRAPH 2003 Sketches and Applications. New York: ACM Press, 2003: 1. DOI:10.1145/965400.965507

[27] Akl A, Yaacoub C, Donias M, et al. Texture synthesis using the structure tensor. IEEE Transactions on Image Processing, 2015, 24(11): 4082-4095. DOI:10.1109/TIP.2015.2458701

[28] Akl A, Yaacoub C, Donias M, et al. Structure tensor based synthesis of directional textures for virtual material design. IEEE International Conference on Image Processing (ICIP). Piscataway: IEEE, 2014: 4867-4871. DOI:10.1109/ICIP.2014.7025986

[29] Seibert P, Raßloff A, Kalina K, et al. Microstructure characterization and reconstruction in Python: MCRpy. Integrating Materials and Manufacturing Innovation, 2022, 11: 450-466. DOI:10.1007/s40192-022-00273-4

[30] Lauff C, Schneider M, Montesano J, et al. An orientation corrected shaking method for the microstructure generation of short fiber-reinforced composites with almost planar fiber orientation. Composite Structures, 2023, 322: 117352. DOI:10.1016/j.compstruct.2023.1173

2025, Vol. 32