2. School of Electronic Engineering, Xi'an University of Posts and Telecommunications, Shaanxi 710121, China;

3. School of Computer Science and Technology, Xi'an University of Posts and Telecommunications, Shaanxi 710121, China

With the advancement of the information industry, video processing in multimedia applications has become one of the most popular areas in decades. Video processing is now oriented towards ultra-high definition applications, and the amount of data for video processing has increased dramatically. HEVC is the most widely used video compression standard[1], which defines three units: Coding Unit (CU), Prediction Unit (PU), and Transform Unit (TU). The size of the CU is 64×64 pixels to 8×8 pixels, and the PU has two and eight selectable modes according to intra/inter-prediction respectively, and the size of the TU is 32×32 pixels to 4×4 pixels, which shows that HEVC supports more coding modes and unit sizes than H.264/AVC. In order to determine the coding scheme for these different combinations, HEVC encoder is highly dependent on an exact cost function. RDO is a nearly optimal cost function in terms of coding efficiency, and thus has been widely used in video compression technology. However, in order to traverse all combinations of modes and unit sizes, RDO must be performed iteratively, which leads to a lengthy encoding time. Moreover, each of the iteration of RDO involves a complete reconstruction process, including transformation, quantization, inverse transformation, inverse quantization, Context-Adaptive Binary Arithmetic Coding (CABAC), and distortion calculation. All of these processes are of high complexity and computationally intensive.

Recently, several works aiming at reducing RDO operation time for HEVC have been proposed, and these approaches can be divided into three categories. The first is the intra-frame rate distortion. Refs. [2-3] focused on this: Ref. [2] proposed a simplified algorithm which is easier to implement in hardware; Ref. [3] proposed an RDO algorithm which is based on Structural Similarity (SSIM). The second category is the inter-frame rate distortion, many previous works[4-5] studied on this. Ref. [4] improved the RDO algorithm based on the hierarchical temporal dependence. Ref. [5] used Lagrangian multiplier adaption method to optimize algorithm. The third category is fast algorithm for the RDO in the encoding process. These fast algorithms skip or terminate some modes or sizes early, which reduces the number of RDO iterations. Ref. [6] used an early skip mode and coding unit decision method to reduce RDO execution time. Ref. [7] discussed the relationship between motion divergence and R-D cost, and then skipped some units. These works are all improvements in software algorithms and have the highest flexibility. But they do not possess the fast computing capability compared with hardware implementations.

In order to achieve high throughput, Ref. [8] analyzed motion estimation and RD mode at the same macroblock stage on the premise of simplifying the mode selection algorithm. In the VLSI architecture design, hardware resource overhead is reduced through loop structure reconstruction and RDO mode decision. Ref. [9] proposed a four-pixel-strip based Enhanced Sum of Absolute Difference (ESAD) to estimate the distortion, and quantized TU coefficients based linear model to estimate the rate part of the RD cost function. Such hardware-friendly method for RDO of HEVC intra coding effectively reduces area consumption and increased throughput. Ref. [10] proposed an adaptive hardware architecture of RDO algorithms of different sizes to cope with different candidate modes for intra prediction. This scalable architecture provides an effective trade-off between throughput and compression efficiency. Ref. [11] proposed a low computational complexity Discrete Cosine Transform (DCT) based on Walsh-Hadamard transform, which further reduces the computational complexity of the RDO algorithm by reducing the computational complexity of DCT. Ref. [12] proposed an RDO algorithm with a quantization-free framework, which maintains a high coding efficiency by taking the syntax element organization and possible HEVC models.

Although the traditional hardware architecture based on the optimized RDO algorithm can effectively improve the computation speed of the algorithm, the fixed hardware architecture cannot adapt well to RDO algorithms of different scales. The reconfigurable architecture can be well adapted to its characteristics, and meet the needs of multi-scale RDO algorithms. Refs. [13-14] proposed a row-based configuration network, a column-based data delivery network. This row or column-based control network can achieve fast data transfer, but when the array size becomes larger, the bit width will continue to increase. Ref. [15] proposed a fishing net-like configuration management network, which organizes the distributed control structure of a uniformly distributed manner to complete the unified management of the entire array. The design of the distributed programmable controller can effectively perform parallel processing of batch data, but the number of controllers is large, and the controller range is easy to overlap, which makes the control process more complicated. Ref. [16] proposed a Distributed Control Network (DCN), which is essentially a centralized controller and peer-to-peer control network connected to each Configurable Cell (RC). The register chain in the DCN controls the order in which the RC is executed. This control network can flexibly schedule each RC, and through the combination of register chains in the DCN, multi-thread execution in the RC array can be realized. But as the number of RC cores increases, place and routing for the back-end design becomes more difficult.

2 MotivationAlthough reconfigurable structures are useful and efficient in solving the parallelism and flexibility of algorithms, there are two problems with the reconfigurable implementation of RDO algorithm: fast mapping between RDO algorithms of different scales and the scalability of computing resources for RDO algorithms.

Many scholars have made relevant research. Refs. [17-18] used a point-to-point configuration network to achieve configuration information arriving at any Processing Element (PE) in one clock cycle, but the wiring cost of this architecture is high. Ref. [19] proposed a configuration network based on the row and column, and the Context Memory (CM) module has one read port for each row and column of the PE array. Although its wiring cost is less and implementation is simpler, the required clock for the configuration will increase along with the increasing number of PEs in the row and column. There is only one read port for the entire PE array[20]. There is a large delay, and more configuration registers are needed.

In all aforementioned methods, the researchers focus on the rapid load of configuration information and the wiring cost, but they have poor scalability. This paper proposes a new dynamic reconfigurable structure of RDO processing on the customized CGRA-like architectures to balance the rapid delivery and scalability of configuration information. In order to realize the fast switching between RDO algorithms, this paper designs an HCN based on Huffman-coding-like addressing method. It automatically issues the corresponding call instruction to complete the fast switching between the algorithms, and realizes the requirement of different RDO array size by controlling the bit length of the addressing code. Originally, the H-tree is widely used to achieve simultaneous delivery of clock signal in large-scale integrated circuit design with smaller skew. Compared with its original use of transferring clock signal, the amount of reconfiguration contexts that needs to be delivered into the reconfigurable domain is much larger. Hence, achieving simultaneous update is difficult. The HCN based on Huffman-coding-like addressing method can transfer reconfigurable contexts in unicast, multicast, or broadcast mode and trigger them simultaneously, thus reducing the time of algorithm reconstruction. Meanwhile, the proposed reconfigurable architecture can arrive at each PE in the scale of 32×32 PE array architecture with 10-bit address bus in one clock cycle. This architecture can expend to larger scale PE array. For example, with 10-bit address bus of H-tree, it can access 1024-PE array with little performance degradation.

3 Rate Distortion Optimization Algorithm and Parallelism AnalysisThe development of computer architectures of multi-core or even many-core processors provides a direction for the parallelization of algorithms. RDO in HEVC is one of the most time-consuming algorithms in video encoders, so the parallelization of RDO is of great significance for reducing computational complexity and execution time of the algorithm. Therefore, under the premise that the data correlation does not affect the coding quality, how to maximize the parallelism of RDO is a challenge in parallel implementation.

The key research content of rate-distortion optimization theory is the relationship between coding bit rate and coding distortion. If the source is X, the sink is Y, xi and yj are the respective values of X and

| $ R\left( D \right) = \mathop {{\rm{min}}}\limits_{p\left( {{y_j}|{x_i}} \right) \in {B_D}} I\left( {X;Y} \right) $ | (1) |

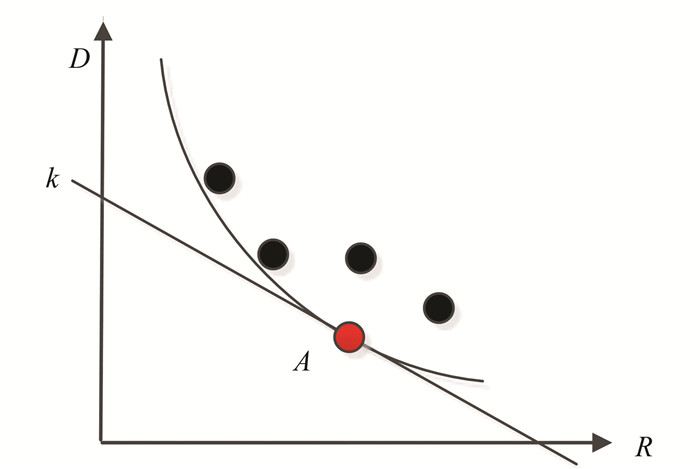

This is the rate distortion expression. Using less bitrate during the encoding process increases coding distortion. Conversely, if you want to reduce coding distortion, it will consume more bitrate, and the relationship between the two can be described by a smooth downward convex monatomic curve[21], as shown in Fig. 1, where D represents the resulting distortion and R represents the bitrate.

|

Fig.1 Diagram of relationship between R and D |

RDO in video coding systems is based on rate-distortion theory to select optimal coding parameters. HEVC adds a variety of new coding methods under the premise of AVC, which leads to a sharp increase in the number of prediction modes of the encoder, such as the increase of the intra prediction mode to 35. If all the candidate modes are traversed according to the exhaustive method, the required amount of computation is very large, and it is difficult to perform coding quickly, thus hard to implement in actual video coding applications. Therefore, the encoding process can be divided into multiple sub-processes, and the optimal encoding parameters were selected respectively for each sub-process. The whole encoding process is divided into N sub-processes, which are independent of each other, and the operable points corresponding to the i-th process are the code rate Ri, j, the distortion Di, j, and the minimum of all sub-processes under the defined condition RC. The distortion is

| $ {\rm{min}}\sum\limits_{i = 1}^N {{D_{i, j}}} \;\;\;\;\;{\rm{ s}}{\rm{.t}}{\rm{.}}\sum\limits_{i = 1}^N {{R_{i, j}} < {R_c}} {\rm{ }} $ | (2) |

For this limited optimization problem, it can be solved by dynamic programming method and Lagrangian optimization method. The dynamic programming method obtains the optimal candidate pattern by constructing the grid, and its complexity increases with the number of nodes, so it is difficult to be applied to actual coding. At present, the general method adopted by the video encoder is the Lagrangian optimization method, which is easy to operate and has low complexity. This method introduces the Lagrangian factor and transforms the constraint problem into a non-constrained problem. Eq. (2) can be transformed into

| $ {\rm{min}}J, J = \sum {D_{i, j}} + \lambda \sum {R_{i, j}} $ | (3) |

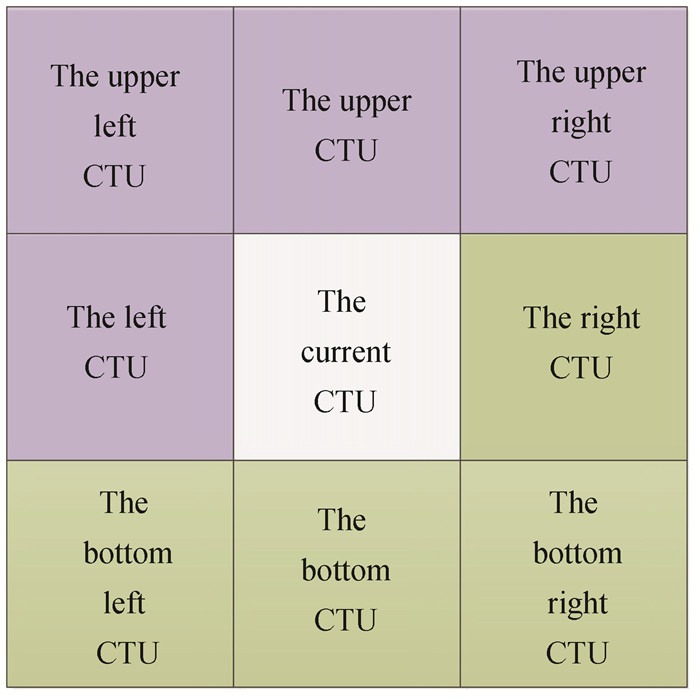

In HEVC, most of the coding parameters come from the CTU, CU, and PU layers. The CTU is the largest coding unit, and there is some data correlation between the current CTU, left CTU, upper CTU, upper left CTU, and upper right CTU. There is no data correlation between the current CTU, right CTU, bottom CTU, bottom left CTU, and bottom right CTU, so these CTUs can be executed in parallel, as shown in Fig. 2. Similarly, there is such a data correlation between CUs.

|

Fig.2 Diagram of relationship between CTUs |

PU can be divided into two modes: intra prediction and inter prediction. Among them, intra prediction has 35 prediction modes, and the best one is selected from the 35 modes. The rate distortion optimization method is

| $ {\rm{min}}J, J = D\left( {{\rm{Mode}}} \right) + {\lambda _{{\rm{Mode}}}}R({\rm{Mode}}) $ | (4) |

Inter-frame prediction needs to combine Merge, AMVP, and other technologies to select the optimal prediction mode. DFD (Motion) is the motion compensation prediction residual, and RMV (Motion) is the motion vector coding bit number. The rate distortion optimization method is

| $ {\rm{min}}J, J = {\rm{DFD}}({\rm{Motion}}) + {\lambda _{{\rm{Motion}}}}{R_{{\rm{MV}}}}\left( {{\rm{Motion}}} \right) $ | (5) |

These different prediction modes can be executed in parallel, so the parallelism of the rate-distortion optimization algorithm is equal to the product of the CTU-level parallelism, the CU-level parallelism, and the PU-level parallelism, as shown in Eq. (6):

| $ D{P_{{\rm{depth\_map}}}} = D{P_{{\rm{CTU}}}} \times D{P_{{\rm{CU}}}} \times D{P_{{\rm{PU}}}} $ | (6) |

This section discusses the dynamic reconfigurable structure with HCN. Fig. 3 shows a reconfigurable array structure of 4×4 array size. This structure consists of an HCN based on Huffman-coding-like addressing method, PE array, configuration memory, data memory, and host interface to interact with the host machine.

|

Fig.3 Reconfigurable array structure |

In order to realize fast mapping between RDO algorithms, as well as adjusting the size of the algorithm mapping array according to the size of the candidate range for different encoding algorithms in HEVC, an HCN with Huffman-coding-like addressing method is firstly proposed, as shown in Fig. 4. The controller monitored the operating status of each functional unit and determined the operating mode and selected a suitable algorithm in real time for PE[22]. In order to show it clearly, Fig. 4 only presents 8×8 PEs.

|

Fig.4 H-tree topology of 8×8 PEs |

The instructions were stored in the ROM part of instruction memory in the PE and the real-time instruction PE passed from the HCN were stored in the RAM section. The controller determined the operating mode and selected the appropriate algorithm for PE(s).

The HCN was used to transmit the instructions to a specific PE or associated PEs, and collect status information of each PE. Taking an 8×8 PE array as an example, as shown in Fig. 4, the bus width is 38 bits, wherein bus [37:32] is 6-bit address information, and bus [31:0] is 32-bit instruction information. The HCN will access the destination (each PE) in the form of a six-depth binary tree by 6-bit address information. When working in unicast mode, the 6-bit address information can be judged bit by bit, as shown in Fig. 4, starting from the root node. Judging from the highest bit of the address information, when the bit [6] of the address information is logic "0", the HCN will turn left, to node2_1. If the bit [6] of the address information is logic "1", the HCN will turn right, to node2_2. The address bus width will be the 5-bit width after the first turn, the 4-bit width after the second turn, and so on. Finally, it will be sent to the PE. The addressing information includes both the mask and address, where the mask is used to pick the directions at a specific node in HCN while working in multicast or broadcast mode. Fig. 5 shows the judgment flow of the mask detection.

|

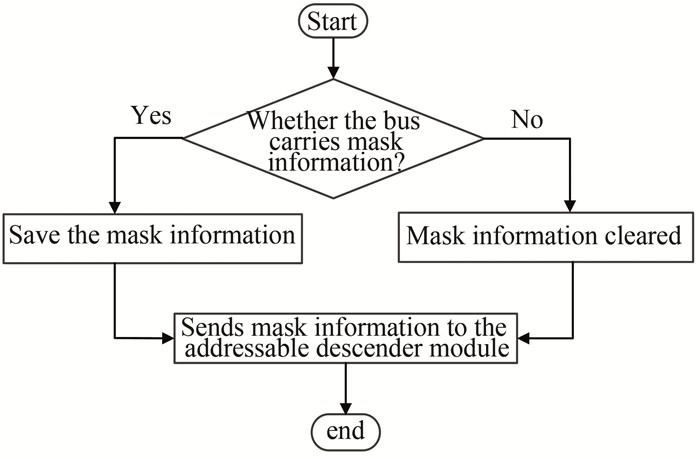

Fig.5 Mask judgment process |

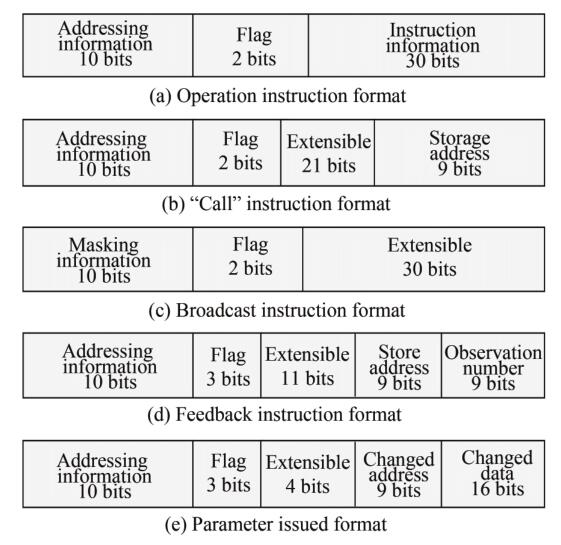

If bus [31:28] =4′b1010 is detected, it means that the bus carries the mask information, and saves the six bits of the bus [37:32] to the mask register. Otherwise, the mask information is not carried, and the signal is set to zero. If the mask of a corresponding bit of the address bus is 1, the corresponding bit of the address bus is masked, so that the instruction or configuration is simultaneously transmitted in the left and right directions. As shown in Fig. 4, the 4×4 PE broadcast in the upper left corner was taken as an example. Firstly, the controller sent a pair of bus information. The 6-bit address information was 6′b001111, which was saved by the mask detection module as mask information. Then, the second bus information was sent, and the 6-bit addresses information 6′b00xxxx was combined with the 6-bit mask for multicasting. This kind of addressing is easy to expand to facilitate a larger scale PE array with low cost. With the Huffman-coding-like addressing method, the controller can transmit/receive five kinds of instruction/context information with 32-bit width. Fig. 6 shows the bus information format.

|

Fig.6 Bus format |

1) The operation instruction issuing the following format: 2-bit flag of "01" + 30-bit RISC-like instruction.

2) The "call" instruction issuing the following format: 2-bit flag of "11" + 9-bit invoking address + 21-bit extensible space.

3) The broadcast SIMD instruction issuing the following format: 2-bit flag of "10" + 30-bit extensible space. In this format, the broadcast mask should be transmitted first with the 2-bit flag of "10", and at each turn, the switch node will mark the transmitting direction according to the bit value. If that bit is logic "1", the instruction to be sent next will be transmitted in both directions. As for logic "0", the instruction to be sent next will be transmitted according to the address bit value.

4) The status feedback instruction issuing the following format: 3-bit flag of "000" + 11-bit extensible space+ 9-bit address+ 9-bit number. This is used for collecting the status of each computing resource for performance evaluation, and it is running parallel with the computing resource without disturbing the normal operation.

5) The status parameters issuing the following format: 3-bit flag of "001" + 4-bit extensible space+ 9-bit address+16-bit data. This format is used to change the parameters according to the algorithm or operating status.

The controller monitored the operating status of each functional unit, determined the operating mode, and selected a particular algorithm based on the operating status in real time. When the host accessed the array processor, the controller received the bus information from the host interface and judged whether the instruction was unicasted, multi-casted, broadcasted, feedback, or parameter change according to the flag.

Data transfer was through the distributed shared memory under the unified addressing, where each 4×4 PE formed a processing element cluster, numbering each PE according to the row and column of the PE, as shown in Fig. 7. Unified addressing mode could provide a logically shared on-chip large-capacity storage for the software staff, so it was easy to program. In order to meet the high bandwidth and low latency application requirements, the on-chip storage resources were segmented in the physical realization. In the local data access, the high-speed switching structure was realized, and could meet 16-channel data parallel read/write access in the 4×4 PE array.

|

Fig.7 Distributed shared storage structure |

5 Parallel Mapping of RDO Algorithm on Reconfigurable Array Structures

As shown in Fig. 8, the RDO firstly predicted the current encoding block, then transformed and quantified the residual value, and finally obtained the actual output bit rate through entropy coding. Through inverse quantitative, inverse transform and reconstruction could get reconstructing pixel values, which were then compared with the original pixel values to get the encoding distortion.

|

Fig.8 RDO technology block |

RDO calculation was divided into two parts: Lagrange factor calculation and R-D cost calculation. The former constitutes the major amount of calculation. The Lagrange factor of intra prediction was calculated as

| $ {\lambda _{{\rm{Mode}}}} = \alpha \cdot {W_k} \cdot {2^{(\left( {QP - 12} \right)/3)}} $ | (7) |

where QP is the quantization parameter, Wk is the weighting factor, and α is a variable. When α and Wk take fixed value, QP takes a maximum value of 51, (QP-12)/3 takes a maximum value of 13. Calculating λ requires 13 multiplications, 1 subtraction, and 1 division. For each intra prediction mode, the rate distortion cost was calculated according to Eq. (4), and one multiplication and one addition were required. Considering the resource consumption brought by the hardware circuit, the multiplication and division operations could be implemented by simple operations such as addition, subtraction, and shift instructions. Through the analysis of RDO, it was found that it was a computationally intensive algorithm. Although the data correlation between each mode was extremely large, there was a high degree of parallelism between the modes, when each mode was independently operated.

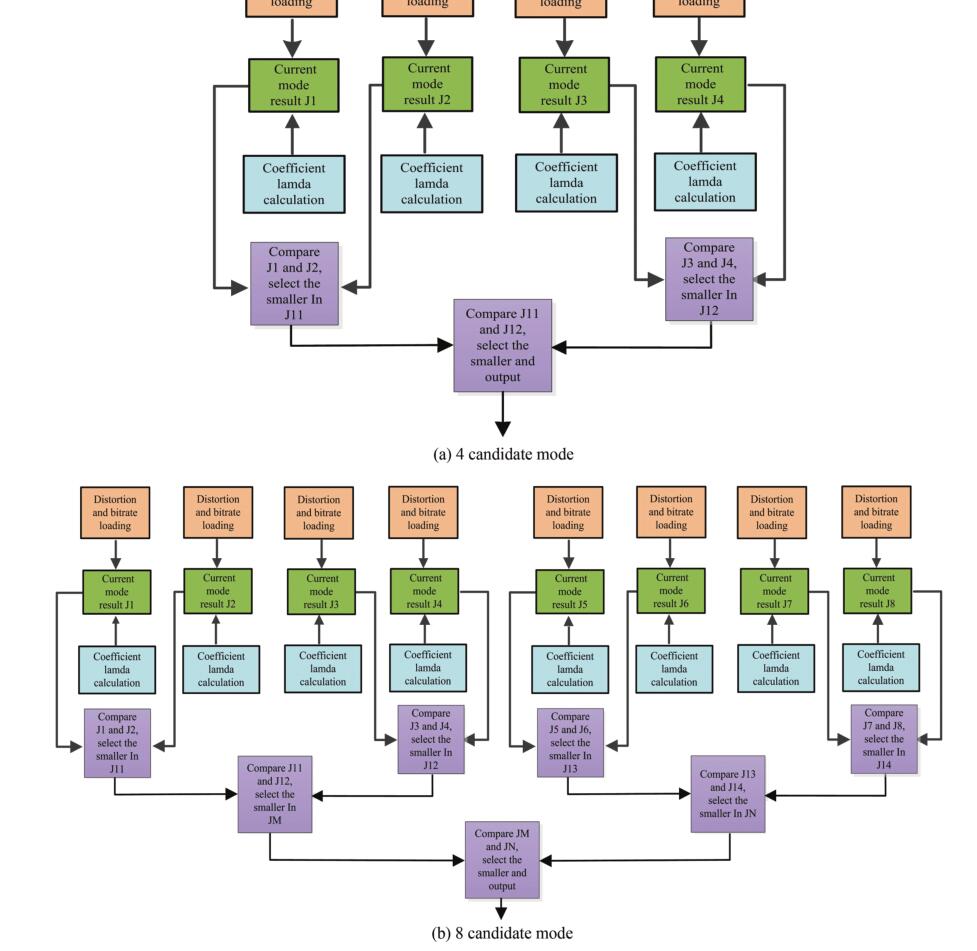

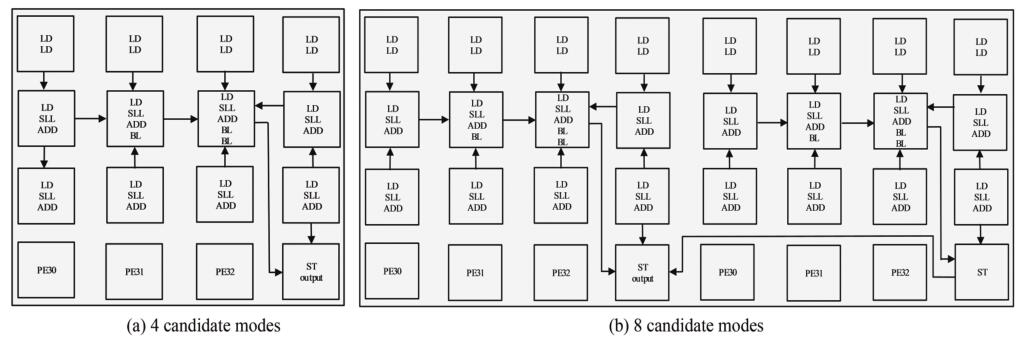

Fig. 9 depicts the Data Flow Graph (DFG) of the RDO algorithm in 4 candidate modes and 8 candidate modes. Firstly, the distortion and bit rate were loaded into the processing element through external storage. The coefficient of λ was calculated in the process of data loading. After the data load and λ were completed, the results of the current mode were obtained and saved through the shift and addition operations. In the same way, the results of other modes were calculated and compared, and the minimum result was output. According to the DFG of Fig. 9, the corresponding mapping structure of 4 candidate modes and 8 candidate modes is shown in Fig. 10.

|

Fig.9 Dataflow of the RDO algorithm |

|

Fig.10 Mapping structure of RDO algorithm |

4 candidate modes were taken as an example, and PE33 was used to load/store data through adjacent interconnects. PE10-PE23 were used to execute shift and add operation. Firstly, all of the 8 PEs executed shift and add operations. Then PE10 sent its result to PE11 to make a comparison, in the same way as PE13 sent its result to PE12. When those operations were finished, PE11 sent its result to PE12. Finally, PE12 sent its result to PE33 to output. Detailed steps are as follows:

1) Firstly, load distortion and bit rate values. PEG00 has a 16-bit deep external memory connected to PE00 for storing distortion and bit rate.

2) Load the data. After storing the corresponding data, it began to distribute data onto each PE. Among them, the four PEs PE00-PE03 performed data loading operations respectively, and the distortion and bit rate for the four modes were loaded into respective data storage units of the four PEs.

3) The main task of PE00 was to retrieve data from external storage and send it to each PE. PE00 took data through the shared register R10, and the original data accessed from the outside would be written into the data storage of PE00, starting from address 0. Every time a data of one mode was fetched, these data were written into the data storage units of PE01, PE02, and PE03 respectively using shared storage, and the storage addresses started from 0, i.e., the data onto the address of the PE00 data storage unit was sent to the address of the PE01-PE03 data storage.

4) When PE00 made a judgment to take a mode and sent it to different PEs, PE00 would send an indication signal to the 4 PEs through shared storage. After PE00-PE03 received the PE00 indication signal, each PE could start working, otherwise the operator should wait.

5) After the handshake succeeded, each PE began to perform shift and subtraction calculations. PE20-PE23 first took the current QP values from their respective data stores and performed subtraction operations, then a one-step division calculation was performed by shifting and subtracting operations, and then multiplication calculations were performed by shifting and adding. Finally, the calculation results were sent to PE10-PE13 respectively.

6) After PE10-PE13 received the calculation result of PE20-PE23, they performed a multiplication operation by shift and addition to obtain the R-D cost of the current block.

7) After each PE completed operation with all R-D costs of its own mode, PE10 sent the result to PE11 for comparison. PE13 sent the result to PE12 for comparison and selected the smallest R-D cost value.

8) After these two comparisons were completed, PE11 sent its result to PE12 for comparison again and selected the minimum R-D cost value. Then PE12 wrote the minimum R-D cost value obtained in PE33's data storage unit through the shared storage mechanism.

The previous section explored the parallelism of 4 prediction modes and 8 prediction modes on a 4×4 array structure and an 8×4 array structure. However, there were many modes that needed to be processed for the HEVC standard. This section used the 8×4 array structure to perform mode reconstructions of different sizes, which were the reconstruction of 4 prediction modes and 8 prediction modes, respectively. The reason for selecting the rate distortion optimization algorithms of 4 prediction modes and 8 prediction modes was that these two modes included a special prediction mode of intra prediction, which could meet the requirements of the HEVC standard for processing different video sequences and good scalability. The specific reconstruction process is as follows:

1) Store the 4 kinds of prediction mode instructions in the addresses 0 to 255 of the corresponding local instruction memory in the 4×4 array structure. The 8 types of prediction mode instructions were stored in addresses 256 to 511 of the corresponding local instruction memory in the 8×4 array structure.

2) In order to improve the efficiency of the algorithm, the first 4 prediction modes were calculated. The HCN first sent a mask instruction 0000001111_10_xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx, and then sent a call instruction 0000000000_11_xxxxxxxxxxxxxxxxxxxxx_000000000, according to the mask information and addressing judgment to PEG00. All PEs started working and performed configuration 1 based on the address of the call instruction.

3) After configuration 1 or 4 prediction modes were calculated, it was necessary to determine whether to perform 8 kinds of prediction mode calculations based on the results. In this case, the HCN may send a data feedback instruction to read the current calculation result from the data memory. For example, 0000001111_000_xxxxxxxxxxx_000000000_000000101.

4) If the calculation result did not meet expectations, turn on configuration 2, i.e., 8 kinds of prediction mode calculations should be turned on. At this time, the HCN first sent a mask instruction 0000011111_10_xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx, then sent a call instruction 0000000000_11_xxxxxxxxxxxxxxxxxxxxx_100000000. The code information and the addressing judgement worked on all PEs of PEG00 and PEG01, and performed configuration 2 according to the address of the call instruction. Otherwise, the calculation ended.

6 Results and Analysis 6.1 Configurable Network FPGA Test Results and Performance ComparisonThe Verilog HDL hardware description language was used to describe the circuit. After simulating with QuestaSim 10.1d, Xilinx's ISE14.7 development tool was used for synthesis, and BEEcube's BEE4 series development board was used to complete the FPGA test. After the global controller and the Processing Element Array (PEA) were interconnected and connected to the port of the BRAM, the data was written into the address corresponding to the BRAM. Then the written data were read from the address of the BRAM by the command of "bramdump < bram_name> < size>". With a bus width of 42 bits, the designed HCN could control 1024 PEs, support both SISD and SIMD modes of operation, and switch between multiple modes through configuration commands.As shown in Table 1, compared with Ref.[23] and Ref. [24], a new data feedback function was added which could monitor and control the state information of a specific processing module, so as to facilitate the rational allocation of computing resources and algorithms. The implementation of the dynamic reconfigurable processor was effectively improved. Moreover, compared with the controller of the PPA structure proposed in Ref. [24], the number of controllable processing elements could be increased by 32 times and the execution period could be reduced by 50% when the control bit width was increased by only 31.25%.

| Table 1 Issued network performance comparison |

6.2 Performance Analysis and Comparison of Dynamic Reconfigurable Mechanisms

For the dynamic reconfiguration structure designed in this paper, 4 PEGs were interconnected and the circuit was synthesized using Design Compiler under the process of 90 nm CMOS technology. Ref.[16] proposed a coarse-grained reconfigurable processor architecture supporting CCF and RSF. All processors in the CCF architecture were fully interconnected and any configuration storage could be enabled. All processors in the RSF architecture could perform single-cycle operations. Interconnect and configuration storage was shared between two adjacent processing elements. Table 2 summarizes the comparison of the dynamic reconfiguration structure designed in this paper and the parameters in Ref.[16] under the condition of a PEG array.

| Table 2 Parameters of a PEG scale in this paper and Ref. [16] |

Table 3 shows that the structure designed in this paper has certain advantages over the four PEG scales, compared with that proposed in Ref. [16]. Compared with the BASE structure, the design of this paper reduced 28.5% and 17.6% in area and critical path delays, respectively. Compared with the CCF structure, it was reduced by 32.6% and 27.8%, respectively. Compared with the RSF structure, it was reduced by 31.8% and 25.1%, respectively.

| Table 3 Performance comparison in 4 clusters between this paper and Ref.[16] |

6.3 RDO Performance Comparison

Compared with that in Ref. [12], the parallel mapping structure of RDO designed in this paper had an area increase of 35% and an operating frequency increase of 5.7%. Compared with Ref. [25], the area reduction was 56.4% and the operating frequency was increased by 12.5%. Compared with Ref. [10], the operating frequency was increased by 42.8% when the area increased by 12.4%. The circuit comparison aspects of the proposed architecture and other architectures are shown in Table 4.

| Table 4 Design compiler results comparison |

The measured RDO computation time of the original RDO was given and RDO was proposed as shown in Table 5. The results corresponded to the configuration of QP 27, Race Horses 300 frames, Blowing Bubbles 500 frames, and Basketball Pass 500 frames. From Table 5, it can be seen that it took a lot of time for RDO processing, which was hard for the software to meet the real time RDO processing. Therefore, the hardware accelerator is highly desired.

| Table 5 Measured RDO computation time of original RDO and proposed RDO |

Finally, the performance analysis of the two reconfiguration methods proposed in this paper was carried out. As shown in Table 6, the reconfiguration method based on context switching took the 4 candidate mode and the 8 candidate mode RDO algorithm into the instruction storage in advance, and implemented flexible switching of the two algorithms through the "Call" instruction. It only took 8 clock cycles from the request of the array to the completion of the algorithm switch. The reconfiguration time required by the reconfiguration method of the HCN was related to the algorithm's number of instructions. 4 candidate modes required 193 clock cycles, and 8 candidate modes required 349 clock cycles.

| Table 6 Number of cycles required for two reconstruction methods |

7 Conclusions

Prior reconfigurable architectures that process various video processing algorithms suffer from performance bottleneck due to the inadequate flexibility and low context switching speed. The HCN designed by this study could control 1024 PEs, support both SISD and SIMD modes of operation, and switch between multiple modes through configuration commands. Based on the HCN, a fast dynamical reconfigurable structure in a massive homogeneous PE array was proposed to achieve the simultaneous movement of reconfiguration contexts among particular/related PEs in unicast, multicast, or broadcast modes. The dynamic reconfigurable structure was designed in Verilog, simulated with Questa-sim, synthesized using Design Compiler, and implemented on an FPGA board. The experiment results show that compared with prior RDO algorithm implement, this proposed structure has improved the work frequency by an average of 12.5% and an area reduction of 56.4%.

| [1] |

Sullivan G J, Ohm J R, Han W J, et al. Overview of the high efficiency video coding (HEVC) standard. IEEE Transactions on Circuits and Systems for Video Technology, 2012, 22(12): 1649-1668. DOI:10.1109/TCSVT.2012.2221191 (  0) 0) |

| [2] |

Hu L D, Sun H M, Zhou D J, et al. Hardware-oriented rate-distortion optimization algorithm for HEVC intra-frame encoder. Proceedings of the 2015 IEEE International Conference on Multimedia & Expo Workshops (ICMEW). Piscataway: IEEE, 2015. 1-6. DOI: 10.1109/ICMEW.2015.7169808.

(  0) 0) |

| [3] |

Cen F, Lu Q L, Xu W S. SSIM based rate-distortion optimization for intra-only coding in HEVC. Proceedings of the 2014 IEEE International Conference on Consumer Electronics (ICCE). Piscataway: IEEE, 2014. 17-18. DOI: 10.1109/ICCE.2014.6775889.

(  0) 0) |

| [4] |

Gao Y B, Zhu C, Li S. Hierarchical temporal dependent rate-distortion optimization for low-delay coding. Proceedings of the 2016 IEEE International Symposium on Circuits and Systems (ISCAS). Piscataway: IEEE, 2016. 570-573. DOI: 10.1109/ISCAS.2016.7527304.

(  0) 0) |

| [5] |

Li S, Zhu C, Gao Y, et al. Inter-frame dependent rate-distortion optimization using Lagrangian multiplier adaption. Proceedings of the 2015 IEEE International Conference on Multimedia and Expo (ICME). Piscataway: IEEE, 2015.1-6. DOI: 10.1109/ICME.2015.7177467.

(  0) 0) |

| [6] |

Hu Q, Zhang X Y, Shi Z R, et al. Neyman-Pearson-based early mode decision for HEVC encoding. IEEE Transactions on Multimedia, 2015, 18(3): 379-391. DOI:10.1109/TMM.2015.2512799 (  0) 0) |

| [7] |

Xiong J, Li H, Wu Q, et al. A fast HEVC inter CU selection method based on pyramid motion divergence. IEEE Transactions on Multimedia, 2014, 16(2): 559-564. DOI:10.1109/TMM.2013.2291958 (  0) 0) |

| [8] |

Pastuszak G. Architecture design of the H. 264/AVC encoder based on rate-distortion optimization. IEEE Transactions on Circuits and Systems for Video Technology, 2015, 25(11): 1844-1856. DOI:10.1109/TCSVT.2015.2402911 (  0) 0) |

| [9] |

Shen W W, Fan Y B, Huang L L, et al. A hardware-friendly method for rate-distortion optimization of HEVC intra coding. Technical Papers of 2014 International Symposium on VLSI Design, Automation and Test. Piscataway: IEEE, 2014. 1-4. DOI: 10.1109/VLSI-DAT.2014.6834898.

(  0) 0) |

| [10] |

Pastuszak G, Abramowski A. Algorithm and architecture design of the H. 265/HEVC intra encoder. IEEE Transactions on Circuits and Systems for Video Technology, 2015, 26(1): 210-222. DOI:10.1109/TCSVT.2015.2428571 (  0) 0) |

| [11] |

Lee B, Kim M. A CU-level rate and distortion estimation scheme for RDO of hardware-friendly HEVC encoders using low-complexity integer DCTs. IEEE Transactions on Image Processing, 2016, 25(8): 3787-3800. DOI:10.1109/TIP.2016.2579559 (  0) 0) |

| [12] |

Sun H M, Zhou D J, Hu L D, et al. Fast algorithm and VLSI architecture of rate distortion optimization in H. 265/HEVC. IEEE Transactions on Multimedia, 2017, 19(11): 2375-2390. DOI:10.1109/TMM.2017.2700629 (  0) 0) |

| [13] |

Zhao Z Y, Sheng W G, He W F, et al. A static-placement, dynamic-issue framework for CGRA loop accelerator. Proceedings of the Conference on Design, Automation & Test in Europe. Piscataway: IEEE, 2017. 1348-1353. DOI: 10.23919/date.2017.7927202.

(  0) 0) |

| [14] |

Pager J, Jeyapaul R, Shrivastava A. A software scheme for multithreading on CGRAs. ACM Transactions on Embedded Computing Systems (TECS), 2015, 14(1): 19:1-19:26. DOI:10.1145/2638558 (  0) 0) |

| [15] |

Prabhakar R, Zhang Y Q, Koeplinger D, et al. Plasticine: A reconfigurable accelerator for parallel patterns. IEEE Micro, 2018, 38(3): 20-31. DOI:10.1109/MM.2018.032271058 (  0) 0) |

| [16] |

Liu L B, Wang B, Deng C C, et al. Anole: A highly efficient dynamically reconfigurable crypto-processor for symmetric-key algorithms. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 2018, 37(12): 3081-3094. DOI:10.1109/TCAD.2018.2801229 (  0) 0) |

| [17] |

Shafique M, Bauer L, Henkel J. Adaptive energy management for dynamically reconfigurable processors. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 2014, 33(1): 50-63. DOI:10.1109/TCAD.2013.2282265 (  0) 0) |

| [18] |

Cong J, Huang H, Ma C Y, et al. A fully pipelined and dynamically composable architecture of CGRA. Proceedings of the 2014 IEEE 22nd Annual International Symposium on Field-Programmable Custom Computing Machines. Piscataway: IEEE, 2014.9-16. DOI: 10.1109/FCCM.2014.12.

(  0) 0) |

| [19] |

Jafri S M A H, Daneshtalab M, Abbas N, et al. Transmap: Transformation based remapping and parallelism for high utilization and energy efficiency in CGRAs. IEEE Transactions on Computers, 2016, 65(11): 3456-3469. DOI:10.1109/TC.2016.2525981 (  0) 0) |

| [20] |

Ashammagari A R, Mahmoodi H, Homayoun H. Exploiting STT-NV technology for reconfigurable, high performance, low power, and low temperature functional unit design. Proceedings of the Conference on Design, Automation & Test in Europe. Piscataway: IEEE, 2014. 1-6. DOI: 10.7873/DATE.2014.348.

(  0) 0) |

| [21] |

Wang S S. Research on High Efficiency Video Coding Technology Based on Rate Distortion Optimization. Harbin: Harbin Institute of Technology, 2014: 33-36.

(  0) 0) |

| [22] |

Waeijen L, She D, Corporaal H, et al. A low-energy wide SIMD architecture with explicit datapath. Journal of Signal Processing Systems, 2015, 80(1): 65-86. DOI:10.1007/s11265-014-0950-8 (  0) 0) |

| [23] |

Ferreira R, Denver W, Pereira M, et al. A dynamic modulo scheduling with binary translation: Loop optimization with software compatibility. Journal of Signal Processing Systems, 2016, 85(1): 45-66. DOI:10.1007/s11265-015-0974-8 (  0) 0) |

| [24] |

Park H, Park Y, Mahlke S. Polymorphic pipeline array: a flexible multicore accelerator with virtualized execution for mobile multimedia applications. Proceedings of the 42nd Annual IEEE/ACM International Symposium on Microarchitecture. Piscataway: IEEE, 2009. 370-380. DOI: 10.1145/1669112.1669160.

(  0) 0) |

| [25] |

Zhu J, Liu Z Y, Wang D S, et al. Fast prediction mode decision with Hadamard transform based rate-distortion cost estimation for HEVC intra coding. 2013 IEEE International Conference on Image Processing. Piscataway: IEEE, 2013. 1977-1981. DOI: 10.1109/ICIP.2013.6738407.

(  0) 0) |

2020, Vol. 27

2020, Vol. 27